Technology Guide V2.0 — Updated May 2019

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Designing with Dante and AES67 / SMPTE ST 2110

Designing with Dante and AES67 / SMPTE ST 2110. AES-NY, AVoIP Pavilion, October 16-19, 2019. Patrick Killianey, Audinate, Senior Technical Training Manager, [email protected]

Objectives: Design Principles (and Why We Recommend Them). Clarify Common Misunderstandings About Dante (and PTPv2). Highlights of the Dante update with SMPTE ST 2110 support.

Prerequisites: Dante Domain Manager is a key part of Audinate’s ST 2110 solution. If you are not familiar with this product, watch this video to learn its role and core functions: In Depth Tour of Dante Domain Manager, https://youtu.be/xCY3JNpCu_k

History: Audio Networks, Solutions and Open Standards.

History: 10Mbit, 1996. (1) CD-ROM, (44) 3½” Floppy Disks. https://en.wikipedia.org/wiki/Microsoft_Office_97

History: Network Innovation. [Timeline figure: 10Mbit, 100Mbit; 1996, 1998, 2001; Star, 32x32; Daisy Chain, 64x64; 5⅓msec, ⅙msec.] QoS, Quality of Service (Prioritization of Time Sensitive Data). PTP, Precision Time Protocol (Network Synchronization). ZeroConfig, Automatic Peer-to-Peer Configuration (No need for Static or DHCP config). IGMP Snooping, Internet Group Management Protocol (Reduces Impact of Multicast Distribution).

History: Network Innovation. [Timeline figure: 10Mbit, 100Mbit, ≥1Gbit; 1996, 1998, 2001, 2006, 2013, 2015; Star, 32x32; Daisy Chain, 64x64; Star, 512x512 @ ¼msec; 5⅓msec, ⅙msec.]

Best Practices for Audio Networks. AES AESTD1003V1, June 6, 2009. http://www.aes.org/technical/documents/AESTD1003V1.pdf “Audio networking systems are characterized by the transport of uncompressed audio in PCM format, which in principle could be reformatted as requested. In practice, there are several issues for compatibility between formats that should be addressed and solved with specific -

Completed Projects / Projets Terminés

Completed Projects / Projets terminés. New Standards, New Editions, Special Publications.

Please note that the following standards were developed by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), and have been adopted by the Canadian Standards Association. These standards are available in PDF format only.

- CAN/CSA-ISO/IEC 2593:02, 4th edition. Information Technology–Telecommunications and Information Exchange Between Systems–34-Pole DTE/DCE Interface Connector Mateability Dimensions and Contact Number Assignments (Adopted ISO/IEC 2593:2000). $85
- CAN/CSA-ISO/IEC 7811-2:02, 3rd edition. Identification Cards–Recording Technique–Part 2: Magnetic Stripe–Low Coercivity (Adopted ISO/IEC 7811-2:2001). $95
- CAN/CSA-ISO/IEC 8208:02, 4th edition. Information Technology–Data Communications–X.25 Packet Layer Protocol for Data Terminal Equipment (Adopted ISO/IEC 8208:2000). $220
- CAN/CSA-ISO/IEC 8802-3:02, 2nd edition. Information Technology–Telecommunications and Information Exchange Between Systems–Local and Metropolitan Area Networks–Specific Requirements–Part 3: Carrier Sense Multiple Access with Collision Detection (CSMA/CD) Access Method and Physical Layer (Adopted ISO/IEC 8802-3:2000/IEEE Std 802.3, 2000). $460
- CAN/CSA-ISO/IEC 9798-1:02, 2nd edition. Information Technology–Security Techniques–Entity Authentication–Part -

Transition to Digital Digital Handbook

TRANSITION TO DIGITAL: DIGITAL HANDBOOK

Audio's video issues. There can be advantages to locking the audio and video clocks, such as for editing, especially when the audio and video programs are related. Although digital audio equipment may provide an analog video input, it is usually better to synchronize both the audio and the video to a single higher-frequency source, such as a 10MHz master reference. This is because the former solution requires a synchronization circuit that will introduce some jitter into the signal, especially because the video itself may already have some jitter. To ac…

…tion within one audio sample, the preambles present a unique sequence (which violate the Biphase Mark Code) but nonetheless are DC-free and provide clock recovery.

Like AES3, but not. A consumer version of AES3, called S/PDIF, for Sony/Philips Digital Interface Format (more formally known as IEC 958 type II, part of IEC-60958), is also widely used. Essentially identical to AES3 at the protocol level, the interface uses consumer-friendly RCA jacks and coaxial cable.

…channels of audio over a fiber-optic interface. This has since been superseded by AES 10 (or MADI, Multichannel Audio Digital Interface), which supports serial digital transmission of 28, 56, or 64 channels over coaxial cable or fiber-optic lines, with sampling rates of up to 96kHz and resolution of up to 24 bits per channel. The link to the IT world has also been established with AES47, which specifies a method for packing AES3 streams over Asynchronous Transfer Mode (ATM) networks. It's also worth mentioning Musical Instrument Digital Interface (MIDI) for broadcast operations. -

(L3) - Audio/Picture Coding

Committee: (L3) - Audio/Picture Coding. Created: 11/16/2014.

| National Designation | Title | ISO/IEC Document |
| --- | --- | --- |
| INCITS/ISO/IEC 9281-1:1990:[R2013] | Information technology - Picture Coding Methods - Part 1: Identification | IS 9281-1:1990 |
| INCITS/ISO/IEC 9281-2:1990:[R2013] | Information technology - Picture Coding Methods - Part 2: Procedure for Registration | IS 9281-2:1990 |
| INCITS/ISO/IEC 9282-1:1988:[R2013] | Information technology - Coded Representation of Computer Graphics Images - Part 1: Encoding principles for picture representation in a 7-bit or 8-bit environment | IS 9282-1:1988 |
| :[] | Information technology - Coding of Multimedia and Hypermedia Information - Part 7: Interoperability and conformance testing for ISO/IEC 13522-5 (MHEG-7) | IS 13522-7:2001 |
| :[] | Information technology - Coding of Multimedia and Hypermedia Information - Part 5: Support for Base-Level Interactive Applications (MHEG-5) | IS 13522-5:1997 |
| :[] | Information technology - Coding of Multimedia and Hypermedia Information - Part 3: MHEG script interchange representation (MHEG-3) | IS 13522-3:1997 |
| :[] | Information technology - Coding of Multimedia and Hypermedia Information - Part 6: Support for enhanced interactive applications (MHEG-6) | IS 13522-6:1998 |
| :[] | Information technology - Coding of Multimedia and Hypermedia Information - Part 8: XML notation for ISO/IEC 13522-5 (MHEG-8) | IS 13522-8:2001 |
| :[] | Information technology - Coding - | |

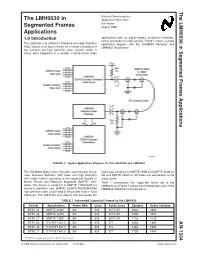

Application Note 1334: The LMH0030 in Segmented Frames Applications

The LMH0030 in Segmented Frames Applications (AN-1334). National Semiconductor, Application Note 1334, Kai Peters, August 2006.

1.0 Introduction

The LMH0030 and LMH0031 Standard and High Definition Video chipset is an ideal solution for a variety of products in the standard and high definition video systems realm. It allows easy integration in a number of professional video applications such as digital routers, production switchers, format converters or video servers. Figure 1 shows a typical application diagram with the LMH0030 Serializer and LMH0031 Deserializer.

FIGURE 1. Typical Application Diagram for the LMH0030 and LMH0031

The LMH0030 Digital Video Serializer automatically recognizes Standard Definition (SD) video and High Definition (HD) video formats according to the respective Society of Motion Picture and Television Engineers (SMPTE) standards. The device is compliant to SMPTE 125M/267M for standard definition and SMPTE 260M/274M/295M/296M high definition video as provided to the parallel 10bit or 20bit interfaces. The LMH0030 auto-detects and processes the video data compliant to SMPTE 259M and SMPTE 344M for SD and SMPTE 292M for HD Video and serialization to the output ports. Table 1 summarizes the supported frame set of the LMH0030 and Figure 2 shows the simplified data path of the LMH0030 SD/HD Encoder/Serializer.

TABLE 1. Automated Supported Frames by the LMH0030

| Format | Specification | Frame Rate | Lines | Active Lines | Samples | Active Samples |
| --- | --- | --- | --- | --- | --- | --- |
| SDTV, 54 | SMPTE 344M | 60I | 525 | 507/1487 | 3432 | 2880 |
| SDTV, 36 | SMPTE 267M | 60I | 525 | 507/1487 | 2288 | 1920 |
| SDTV, 27 | SMPTE 125M | 60I | 525 | 507/1487 | 1716 | 1440 |
| SDTV, 54 | ITU-R BT 601.5 | 50I | 625 | 577 | 3456 | 2880 |
| SDTV, 36 | ITU-R BT 601.5 | 50I | 625 | 577 | 2304 | 1920 |
| SDTV, 27 | ITU-R BT 601.5 | 50I | 625 | 577 | 1728 | 1440 |

PHYTER® is a registered trademark of National Semiconductor. -
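The rows of Table 1 can be cross-checked with a little arithmetic. The sketch below rests on assumptions that the excerpt does not state: that "Samples" is the total word count per line, that "60I" means 30000/1001 frames per second and "50I" means 25 frames per second, and that the number in the Format column (27, 36, 54) is the parallel word rate in MHz. Under those assumptions, samples per line × lines per frame × frames per second should reproduce that number. The names `TABLE_ROWS` and `word_rate_hz` are illustrative only.

```python
from fractions import Fraction

# Assumed frame rates (not stated in the excerpt):
# "60I" -> 30000/1001 frames per second, "50I" -> 25 frames per second.
FRAME_RATES = {"60I": Fraction(30000, 1001), "50I": Fraction(25)}

# (format label, frame-rate code, lines per frame, total samples per line),
# copied from Table 1 of the excerpt.
TABLE_ROWS = [
    ("SDTV, 54", "60I", 525, 3432),
    ("SDTV, 36", "60I", 525, 2288),
    ("SDTV, 27", "60I", 525, 1716),
    ("SDTV, 54", "50I", 625, 3456),
    ("SDTV, 36", "50I", 625, 2304),
    ("SDTV, 27", "50I", 625, 1728),
]


def word_rate_hz(samples_per_line: int, lines: int, rate_code: str) -> Fraction:
    """Words per second = samples per line * lines per frame * frames per second."""
    return Fraction(samples_per_line) * lines * FRAME_RATES[rate_code]


for label, rate_code, lines, samples in TABLE_ROWS:
    mhz = word_rate_hz(samples, lines, rate_code) / 1_000_000
    print(f"{label} ({rate_code}): {float(mhz):g} MHz")
```

If the assumptions hold, each row lands exactly on the rate named in its Format label, which is a quick way to catch a transcription error in the table.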

ASI6614, ASI6618 Multistream PCI-Express Sound Card

09 DECEMBER 07. ASI6614, ASI6618 MULTISTREAM PCI-EXPRESS SOUND CARD

DESCRIPTION

The ASI6614 and ASI6618 are professional PCI-Express sound cards designed for use in radio broadcast automation. Providing up to 16 play streams that are mixed to 4 (ASI6614) or 8 (ASI6618) stereo outputs and up to 2 record streams fed from one stereo input, the ASI6614 and ASI6618 feature AudioScience’s unique “anything to anywhere” mixing and routing. The ASI6614 and ASI6618 provide both balanced analog and AES/EBU inputs and outputs. The maximum analog input and output level is +24dBu. A choice of uncompressed PCM, MPEG layer 2 and MP3 is available for both recording and playback. All compression is handled by an on-board floating point DSP, allowing the host computer to focus on other tasks. ASI6614 and ASI6618 functionality includes MRX™ multi-rate mixing technology that allows streams of different sample-rates and formats to be mixed digitally. TSX™ time scaling allows compression/expansion of any or all playback streams in real time with no change in pitch.

FEATURES

• 4 or 12 mono/stereo streams of playback into 4 stereo outputs (ASI6614)
• 8 or 16 mono/stereo streams of playback into 8 stereo outputs (ASI6618)
• 1 or 2 mono/stereo streams of record from 1 stereo input
• Formats include PCM, MPEG layer 2 and MP3 with sample rates to 96kHz
• MRX™ technology supports digital mixing of multiple stream formats and sample rates
• TSX™ time scaling allows compression/expansion of play streams by up to +/-20% with no pitch shift
• SSX™ mode for multichannel playback and mixing
• Balanced stereo analog inputs and outputs with levels to +24dBu -

Application Note

AN5073 Application note: Receiving S/PDIF audio stream with the STM32F4/F7/H7 Series. AN5073 - Rev 2.0 - June 2018. www.st.com

Introduction

The Sony/Philips Digital Interface Format (S/PDIF) is a point-to-point protocol for serial and uni-directional transmission of digital audio through a single transmission line for consumer and professional applications. The transmission of data can be done in several ways, by electrical or optical means. The S/PDIFRX peripheral embedded in STM32 devices is designed to receive an S/PDIF flow compliant with IEC-60958 and IEC-61937, which define the physical implementation requirements as well as the coding and the protocol. These standards support simple stereo streams up to high sample rates, and compressed multi-channel surround sound, such as those defined by Dolby or DTS. This application note describes electrical interfaces, to properly connect the S/PDIF stream generated by an external device to an STM32 device embedding the S/PDIFRX interface peripheral, since the voltage level of the S/PDIF line is not the same as that used in STM32 devices.

1 S/PDIF Interface

This document applies to Arm®-based devices. Note: Arm is a registered trademark of Arm Limited (or its subsidiaries) in the US and/or elsewhere.

1.1 S/PDIF background

S/PDIF is an audio interface for transmission of digital audio data over reasonably short distances between modules of systems such as home theaters or hi-fi. S/PDIF is a single-wire serial uni-directional, self-clocking interface. -

Telos VX® Prime+ Big Performance for Small Facilities

Telos VX® Prime+: Big Performance for Small Facilities. TelosAlliance.com/Telos/VX-Prime-Plus

OVERVIEW

Telos VX® talk-show systems are the world’s first true VoIP-based broadcast phone systems and have been proven to deliver the power of VoIP to the broadcast studio like no other. The Telos VX Prime+, with built-in support for AES67, is the next evolution of Telos VX VoIP phone systems in a powerful new 1RU hardware unit. Additionally, support for the G.722 voice codec ensures the highest quality calls from supported mobile devices. With capacity of 8 fixed hybrids/faders, VX Prime+ is ideal for facilities with 2 to 4 studios. (For larger facilities, check out VX Enterprise with up to 120-hybrid capacity.)

AES67 support brings a new level of compatibility and flexibility to VX phone systems. Support for AES67 gives broadcasters the flexibility of integrating VX Prime+ into any AES67 environment, in addition to our own Axia® Livewire® network. With plug-and-play connectivity, you can network multiple channels of audio with any manufacturer’s AES67-compliant hardware. Beyond AES67, Livewire users have the added convenience and power of networking control (GPIO), advertising/discovery, and program associated data throughout the network.

Using VoIP, VX Prime+ gives you remarkable-sounding on-air phone calls with no ‘gotchas’. It uses standard SIP protocol that works with many VoIP PBX systems and SIP Telco to take advantage of low-cost and high-reliability service offerings. VX Prime+ can also connect to traditional telco lines via Asterisk PBX systems, which can be customized for specific facility requirements. -

When Will We Surf. EXTRA: FREE TO DOWNLOAD, the Chip iPad Edition Is CHIP MAGAZINE IN PDF FORMAT

DVD 8 GB. April 04/2012. WWW.CHIP.CZ. April 2012 | CZK 179.99 / €9.99.

Reading test: e-books vs. tablets (→ 64). Direct comparison: advantages and disadvantages of e-ink and LCD technologies.

When will we surf at 200 MB/s? (→ 30) Everything about the fastest internet of all time: technology, prices, availability, future build-out.

Rejuvenating your notebook (→ 124). Don't buy a new one, upgrade the old one!

Windows 8 step by step (→ 114). We test the latest version without risk.

Catalogue of mobile apps for Android, iOS and Windows (→ 104). From millions of apps we pick the best.

Full versions on the Chip DVD (two with 6-month licences): a clean and tuned PC with turbo performance (TuneUp Utilities 2011); security for PCs and mobiles (Bitdefender Sphere); easy backup for everyone (Ocster 1-Click Backup); always up-to-date drivers (DriverScanner 2012).

EDITORIAL: TRY OUT THE FUTURE. The world of computers is no longer what it used to be. The command line has disappeared completely, and we are losing control over what is in our RAM, how many applications are running in the background, and where our data actually lives. For a large share of users it no longer even matters what processor or how much memory their machine has, because the vast majority of configurations sold today handle all common office and communication tasks with ease.

EDITORIAL OBSERVATIONS: Europe is starting to fall behind in 4G. Not so long ago, Europe boasted a clear technological lead over the United States in mobile internet. Now the roles have reversed. While American operators such as Sprint and AT&T have already deployed 4G technologies in large cities (Chicago, Dallas), a wave of auctions is only now sweeping across Europe, which in individual sta… -

Designed to Perform: The 8 Channel Server

Designed to Perform. NEW: The 8 Channel Server. Production & Media Server.

More Power. More Channels. More Capabilities. The new generation of XT servers, with its flexible 8 channel SD/HD & 6 channel 3D/1080p configuration, combines EVS’ world-class speed & reliability with the ultimate capabilities and performance. XT3 integrates today’s top broadcast and IT technologies to offer broadcasters and producers unparalleled motion control and adaptability. Based on its unique Loop Recording technology and its powerful networking capabilities, XT3 offers operators complete media control from ingest to playout, including live editing, slow-motion replays, multi-channel playback, and transfer to third-party systems such as craft editors, automation, archiving, or storage. The XT3 is the first server to natively support such a wide range of codecs without requiring hardware changes, allowing production teams to easily choose amongst the different compression schemes they want to use throughout the entire edit process. Designed to boost broadcasters’ live and near-live production capabilities, the XT3 provides operators with the highest level of bandwidth, flexibility and control available in the industry today.

Main Applications
■ Live OB/Remote production
■ Studio ingest
■ Live studio production
■ Content control & delay
■ Fast turnaround production
■ Scenarized production
■ VTR replacement

Loop Recording. EVS ingest solutions and loop recording guarantee uninterrupted multi-channel recording and access to recorded material at any time. Recording starts as soon as the server is booted, and remains on until the server is shut down.

Dual Networking. The XT3 can be linked to either one of two separate networks: the EVS high-bandwidth media sharing network called XNet[2], which allows all video and audio sources recorded -

Understanding HD and 3G-SDI Video Poster

Understanding HD & 3G-SDI Video

DIGITAL SIGNAL TIMING: EYE DIAGRAM. The eye diagram is constructed by overlaying portions of the sampled data stream until enough data transitions produce the familiar display. A unit interval (UI) is defined as the time between two adjacent signal transitions, which is the reciprocal of clock frequency. UI is 3.7 ns for digital component 525 / 625 (SMPTE 259M), 673.4 ps for digital high-definition (SMPTE 292) and 336.7 ps for 3G-SDI serial digital (SMPTE 424M) as shown in Table 1. A serial receiver determines if the signal is “high” or “low” in the center of each eye, and detects the serial data.

…amplitude is important because of its relation to noise, and because the receiver estimates the required high-frequency compensation (equalization) based on the remaining half-clock-frequency energy as the signal arrives. Incorrect amplitude at the sending end could result in an incorrect equalization applied at the receiving end, thus causing signal distortions. Overshoot of the rising and falling edge should not exceed 10% of the waveform…

Y', R'-Y', B'-Y', COMMONLY USED FOR ANALOG COMPONENT ANALOG VIDEO

| Format | 1125/60/2:1, 750/60/1:1 | 525/59.94/2:1, 625/50/2:1, 1250/50/2:1 |
| --- | --- | --- |
| Y' | 0.2126 R' + 0.7152 G' + 0.0722 B' | 0.299 R' + 0.587 G' + 0.114 B' |
| R'-Y' | 0.7874 R' - 0.7152 G' - 0.0722 B' | 0.701 R' - 0.587 G' - 0.114 B' |
| B'-Y' | -0.2126 R' - 0.7152 G' + 0.9278 B' | -0.299 R' - 0.587 G' + 0.886 B' |

HORIZONTAL LINE TIMING. Digital video synchronization is provided by End of Active Video (EAV) and Start of Active Video (SAV) sequences which start with a unique three word pattern: 3FFh (all bits in the word set to 1), 000h (all 0’s), 000h (all 0’s), followed by a fourth “XYZ” word whose… -
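The unit-interval figures quoted in the excerpt follow directly from "UI = 1 / clock frequency". The sketch below reproduces them, assuming the nominal serial bit rates of 270 Mb/s (SMPTE 259M component), 1.485 Gb/s (SMPTE 292) and 2.97 Gb/s (SMPTE 424M). Those rates are not given in the excerpt and are supplied here as assumptions; the function and dictionary names are illustrative only.

```python
# Nominal serial bit rates in Hz. These values are assumptions added for
# illustration; the excerpt itself quotes only the resulting unit intervals.
NOMINAL_BIT_RATES_HZ = {
    "SD-SDI component (SMPTE 259M)": 270e6,
    "HD-SDI (SMPTE 292)": 1.485e9,
    "3G-SDI (SMPTE 424M)": 2.97e9,
}


def unit_interval_seconds(bit_rate_hz: float) -> float:
    """Return the unit interval, i.e. the reciprocal of the serial clock frequency."""
    if bit_rate_hz <= 0:
        raise ValueError("bit rate must be positive")
    return 1.0 / bit_rate_hz


def format_interval(seconds: float) -> str:
    """Render an interval in ns when it is at least 1 ns, otherwise in ps."""
    if seconds >= 1e-9:
        return f"{seconds * 1e9:.1f} ns"
    return f"{seconds * 1e12:.1f} ps"


for name, rate in NOMINAL_BIT_RATES_HZ.items():
    print(f"{name}: UI = {format_interval(unit_interval_seconds(rate))}")
```

With these assumed rates the results round to the 3.7 ns, 673.4 ps and 336.7 ps values given in the text.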

Multi-Rate Serial Digital Interface (SDI) Physical Layer IP Core Is an IPexpress User-Configurable Core and Can Be Used to Generate Any Allowable Configuration

ispLeverCORE™ Multi-Rate Serial Digital Interface Physical Layer IP Core User’s Guide. January 2012, ipug70_01.2. Lattice Semiconductor.

Introduction

Serial Digital Interface (SDI) is the most popular raw video link standard used in television broadcast studios and video production facilities. Field Programmable Gate Arrays (FPGAs) with SDI interface capability can be used for acquisition, mixing, storage, editing, processing and format conversion applications. Simpler applications use FPGAs to acquire SDI data from one or more standard definition (SD) or high definition (HD) sources, perform simple processing and retransmit the video data in SDI format. Such applications require an SDI physical layer (PHY) interface and some basic processing blocks such as a color space converter and frame buffer. In more complex applications, the acquired video receives additional processing, such as video format conversion, filtering, scaling, graphics mixing and picture-in-picture display. FPGA devices can also be used as a bridge between SDI video sources and backplane protocols such as PCI Express or Ethernet, with or without any additional video processing.

In an FPGA-based SDI solution, the physical interface portion is often the most challenging part of the solution. This is because the PHY layer includes several device-dependent components such as high speed I/Os (inputs/outputs), serializer/deserializer (SERDES), clock/data recovery, word alignment and timing signal detection logic. Video processing, on the other hand, is algorithmic and is usually achieved using proprietary algorithms developed by in-house teams. The Lattice Multi-Rate SDI PHY Intellectual Property (IP) Core is a complete SDI PHY interface that connects to the high-speed SDI serial data on one side and the formatted parallel data on the other side.