Cross-Platform Data Collection for Online Hate Groups; Fall 2019 CS6604 Digital Libraries Report

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Diaspora Engagement Possibilities for Latvian Business Development

DIASPORA ENGAGEMENT POSSIBILITIES FOR LATVIAN BUSINESS DEVELOPMENT BY DALIA PETKEVIČIENĖ
CONTENTS
CHAPTER I. Diaspora Engagement Possibilities for Business Development
1. Foreword
2. Introduction to Diaspora
2.1. What is Diaspora?
2.2. Types of Diaspora
3. Growing Trend of Governments Engaging Diaspora
4. Research and Analysis of the Diaspora Potential
4.1. Trade Promotion
4.2. Investment Promotion
4.3. Entrepreneurship and Innovation
4.4. Knowledge and Skills Transfer
4.5. Country Marketing & Tourism
5. Case Study Analysis of Key Development Areas
CHAPTER II. -

Do You Know Your Instagram from Your Tiktok, Twitter Or Tumblr?

Do you know your Instagram from your TikTok, Twitter or Tumblr? If not, we have created some basic Instagram tutorials on YouTube (see links below) to get your club onto Instagram and posting in no time!
Why Instagram?
• Great engagement with the younger age group, i.e. 13-17 y/o, compared to Facebook
• Easy to post – take photos and videos on rally days, competitions and camps, or re-post your members’ photos.
Some tips for using Instagram
• Engagement is more important than followers - remember, it’s not a competition! Instagram is a really great tool to keep connected with your younger members, and also reach potential new members
• Having a business account will allow you to see your engagements, profile visits, audience demographics, and also when your audience is online the most (to help you to determine the best time to post)
• Remember to adhere to the Pony Club NSW Social Media Policy, which can be found here. A good rule is if you are questioning whether it is appropriate to post, it probably isn’t!
• All Instagram users must be at least 13 years old to have their own account.
• Don’t share photos posted on members’ pages without asking them for permission first, and make sure you credit them for use of their image.
• Use a club email address to make your Instagram account, that way if the person looking after the account moves on from the club, the login details can be passed onto the new person responsible (succession planning).
Instagram vs Facebook
We have found at a state level, our Facebook followers are generally older than our Instagram followers, so we customise our approach depending on the platform. -

Real-Time News Certification System on Sina Weibo

Real-Time News Certification System on Sina Weibo
Xing Zhou¹,², Juan Cao¹, Zhiwei Jin¹,², Fei Xie³, Yu Su³, Junqiang Zhang¹,², Dafeng Chu³, Xuehui Cao³
¹Key Laboratory of Intelligent Information Processing of Chinese Academy of Sciences (CAS), Institute of Computing Technology, Beijing, China; ²University of Chinese Academy of Sciences, Beijing, China; ³Xinhua News Agency, Beijing, China
{zhouxing,caojuan,jinzhiwei,zhangjunqiang}@ict.ac.cn {xiefei,suyu,chudafeng,caoxuehui}@xinhua.org
ABSTRACT In this paper, we propose a novel framework for real-time news certification. Traditional methods detect rumors on message-level and analyze the credibility of one tweet. However, in most occasions, we only remember the keywords of an event and it's hard for us to completely describe an event in a tweet. Based on the keywords of an event, we gather related microblogs through a distributed data acquisition system which solves the real-time processing needs. Then, we build an ensemble model that combine user-based, propagation-based and content-based model. The experiments show that our system can give a response at -
There are many works has been done on rumor detection. Storyful[2] is the world's first social media news agency, which aims to discover breaking news and verify them at the first time. Sina Weibo has an official service platform¹ that encourage users to report fake news and these news will be judged by official committee members. Undoubtedly, without proper domain knowledge, people can hardly distinguish between fake news and other messages. The huge amount of microblogs also make it impossible to identify rumors by humans.
Recently, many researches has been devoted to automatic rumor detection on social media. -

Exploring the Relationship Between Online Discourse and Commitment in Twitter Professional Learning Communities

Bowling Green State University ScholarWorks@BGSU Visual Communication and Technology Education Faculty Publications Visual Communication Technology 8-2018 Exploring the Relationship Between Online Discourse and Commitment in Twitter Professional Learning Communities Wanli Xing Fei Gao Bowling Green State University, [email protected] Follow this and additional works at: https://scholarworks.bgsu.edu/vcte_pub Part of the Communication Technology and New Media Commons, Educational Technology Commons, and the Engineering Commons Repository Citation Xing, Wanli and Gao, Fei, "Exploring the Relationship Between Online Discourse and Commitment in Twitter Professional Learning Communities" (2018). Visual Communication and Technology Education Faculty Publications. 47. https://scholarworks.bgsu.edu/vcte_pub/47 This Article is brought to you for free and open access by the Visual Communication Technology at ScholarWorks@BGSU. It has been accepted for inclusion in Visual Communication and Technology Education Faculty Publications by an authorized administrator of ScholarWorks@BGSU. Exploring the relationship between online discourse and commitment in Twitter professional learning communities Abstract Educators show great interest in participating in social-media communities, such as Twitter, to support their professional development and learning. The majority of the research into Twitter-based professional learning communities has investigated why educators choose to use Twitter for professional development and learning and what they actually do in these communities. However, few studies have examined why certain community members remain committed and others gradually drop out.
To fill this gap in the research, this study investigated how some key features of online discourse influenced the continued participation of the members of a Twitter-based professional learning community. -

Know How to Talk with Your Teen About Instagram: A Parent’s Guide

KNOW HOW TO TALK WITH YOUR TEEN ABOUT INSTAGRAM: A PARENT’S GUIDE
A LETTER FROM THE PARENTS OF INSTAGRAM
If you’re reading this, you’re probably a parent. We know from research that many parents have concerns about their teen’s safety online, and as parents who also work at Instagram, we want to address those concerns. It’s with our teens in mind that we go into work every day. Parenting is both an awesome responsibility and an amazing opportunity. For all of the incredible opportunities parenting brings, there are some challenging ones too; we’re raising the first generation of digital natives, for whom the online world is just as important as the offline one.
We know that as a parent it may be hard to understand what your teen is doing online and why they’re spending so much time on their phones. That’s why we’ve created this guide. We know it might feel daunting to have these conversations with your teens. We want to make sure you feel equipped with the tools you need to start the dialogue and hope this guide will make things a little easier.
We feel a great responsibility to make sure Instagram is a supportive community for teens to connect and share, and we want to make sure you as a parent feel informed and empowered to help guide them. We believe that the first step is understanding why they use Instagram, and what tools are available to make sure their experience is positive, intentional and safe. -

Developments in China's Military Force Projection and Expeditionary Capabilities

DEVELOPMENTS IN CHINA'S MILITARY FORCE PROJECTION AND EXPEDITIONARY CAPABILITIES HEARING BEFORE THE U.S.-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION ONE HUNDRED FOURTEENTH CONGRESS SECOND SESSION THURSDAY, JANUARY 21, 2016 Printed for use of the United States-China Economic and Security Review Commission Available via the World Wide Web: www.uscc.gov UNITED STATES-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION WASHINGTON: 2016 U.S.-CHINA ECONOMIC AND SECURITY REVIEW COMMISSION HON. DENNIS C. SHEA, Chairman CAROLYN BARTHOLOMEW, Vice Chairman Commissioners: PETER BROOKES; HON. JAMES TALENT; ROBIN CLEVELAND; DR. KATHERINE C. TOBIN; HON. BYRON L. DORGAN; MICHAEL R. WESSEL; JEFFREY L. FIEDLER; DR. LARRY M. WORTZEL; HON. CARTE P. GOODWIN. MICHAEL R. DANIS, Executive Director The Commission was created on October 30, 2000 by the Floyd D. Spence National Defense Authorization Act for 2001 § 1238, Public Law No. 106-398, 114 STAT. 1654A-334 (2000) (codified at 22 U.S.C. § 7002 (2001), as amended by the Treasury and General Government Appropriations Act for 2002 § 645 (regarding employment status of staff) & § 648 (regarding changing annual report due date from March to June), Public Law No. 107-67, 115 STAT. 514 (Nov. 12, 2001); as amended by Division P of the “Consolidated Appropriations Resolution, 2003,” Pub L. No. 108-7 (Feb. 20, 2003) (regarding Commission name change, terms of Commissioners, and responsibilities of the Commission); as amended by Public Law No. 109-108 (H.R. 2862) (Nov. 22, 2005) (regarding responsibilities of Commission and applicability of FACA); as amended by Division J of the “Consolidated Appropriations Act, 2008,” Public Law No. -

Why Don't Some White Supremacist Groups Pay Taxes?

Emory Law Scholarly Commons Emory Law Journal Online Journals 2018 Why Don't Some White Supremacist Groups Pay Taxes? Eric Franklin Amarante Follow this and additional works at: https://scholarlycommons.law.emory.edu/elj-online Recommended Citation Eric F. Amarante, Why Don't Some White Supremacist Groups Pay Taxes?, 67 Emory L. J. Online 2045 (2018). Available at: https://scholarlycommons.law.emory.edu/elj-online/12 This Essay is brought to you for free and open access by the Journals at Emory Law Scholarly Commons. It has been accepted for inclusion in Emory Law Journal Online by an authorized administrator of Emory Law Scholarly Commons. For more information, please contact [email protected]. WHY DON’T SOME WHITE SUPREMACIST GROUPS PAY TAXES? Eric Franklin Amarante* ABSTRACT A number of white supremacist groups enjoy tax-exempt status. These hate groups do not have to pay federal taxes and people who give money to these groups may take deductions on their personal taxes. This recognition not only results in potential lost revenue for government programs, but it also serves as a public subsidy of racist propaganda and operates as the federal government’s imprimatur of white supremacist activities. This is all due to an unnecessarily broad definition of “educational” that somehow encompasses the activities of universities, symphonies, and white supremacists. This Essay suggests a change in the Treasury Regulations to restrict the definition of educational organizations to refer only to traditional, degree-granting institutions, distance-learning organizations, or certain other enumerated entities. -

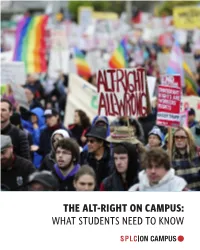

The Alt-Right on Campus: What Students Need to Know

THE ALT-RIGHT ON CAMPUS: WHAT STUDENTS NEED TO KNOW
About the Southern Poverty Law Center
The Southern Poverty Law Center is dedicated to fighting hate and bigotry and to seeking justice for the most vulnerable members of our society. Using litigation, education, and other forms of advocacy, the SPLC works toward the day when the ideals of equal justice and equal opportunity will become a reality.
For more information about the Southern Poverty Law Center or to obtain additional copies of this guidebook, contact [email protected] or visit www.splconcampus.org @splcenter facebook/SPLCenter facebook/SPLConcampus
© 2017 Southern Poverty Law Center
RICHARD SPENCER IS A LEADING ALT-RIGHT SPEAKER.
The Alt-Right and Extremism on Campus
An old and familiar poison is being spread on college campuses these days: the idea that America should be a country for white people.
Under the banner of the Alternative Right – or “alt-right” – extremist speakers are touring colleges and universities across the country to recruit students to their brand of bigotry, often igniting protests and making national headlines. Their appearances have inspired a fierce debate over free speech and the direction of the country.
ocratic ideals. They claim that “white identity” is under attack by multicultural forces using “political correctness” and “social justice” to undermine white people and “their” civilization. Characterized by heavy use of social media and memes, they eschew establishment conservatism and promote the goal of a white ethnostate, or homeland.
As student activists, you can counter this movement.
In this brochure, the Southern Poverty Law Center examines the alt-right, profiles its key figures -

Systematic Scoping Review on Social Media Monitoring Methods and Interventions Relating to Vaccine Hesitancy

TECHNICAL REPORT Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy www.ecdc.europa.eu ECDC TECHNICAL REPORT Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy This report was commissioned by the European Centre for Disease Prevention and Control (ECDC) and coordinated by Kate Olsson with the support of Judit Takács. The scoping review was performed by researchers from the Vaccine Confidence Project, at the London School of Hygiene & Tropical Medicine (contract number ECD8894). Authors: Emilie Karafillakis, Clarissa Simas, Sam Martin, Sara Dada, Heidi Larson. Acknowledgements ECDC would like to acknowledge contributions to the project from the expert reviewers: Dan Arthus, University College London; Maged N Kamel Boulos, University of the Highlands and Islands, Sandra Alexiu, GP Association Bucharest and Franklin Apfel and Sabrina Cecconi, World Health Communication Associates. ECDC would also like to acknowledge ECDC colleagues who reviewed and contributed to the document: John Kinsman, Andrea Würz and Marybelle Stryk. Suggested citation: European Centre for Disease Prevention and Control. Systematic scoping review on social media monitoring methods and interventions relating to vaccine hesitancy. Stockholm: ECDC; 2020. Stockholm, February 2020 ISBN 978-92-9498-452-4 doi: 10.2900/260624 Catalogue number TQ-04-20-076-EN-N © European Centre for Disease Prevention and Control, 2020 Reproduction is authorised, provided the -

TOP TIPS and BEST PRACTICES Using Instagram and Tiktok for HIV Prevention and Sexual Health Education DATED April 16, 2020

TOP TIPS and BEST PRACTICES Using Instagram and TikTok for HIV Prevention and Sexual Health Education DATED April 16, 2020
INSTAGRAM OVERVIEW: According to Piper Jaffray’s 2019 Taking Stock With Teens consumer insights survey, 85% of teens say Instagram is their preferred social network (followed shortly by Snapchat). This is a massive jump from 2017 when a mere 24% said they preferred the site. And, 67% of 18 to 29 year olds use Instagram. Instagram content is high-res, polished; focused on storytelling; uses photo and video; both Gen Z and Millennials; tagline: This is Who I Am.
Best Practices
*Include a LINK in your bio; shout it out, “Link in bio” in your feed posts.
*Be generous with your likes and mentions to gain followers.
*Upgrade your account for free to use the platform analytics to measure impact.
*Curate your feed with long-term content that shows who your organization is and what you stand for. Cross-promote your agency/organization’s programs in your feed. Keep the look-and-feel consistent: Logo, colors, font.
*Curate stories with shorter-term content; use the Highlights feature for stories you want to showcase. Make sure your stories are engaging and have a Call to Action. Always use your campaign hashtag (#) in your posts.
TIKTOK OVERVIEW: As of January 2020, users in their teens accounted for 37.2 percent of TikTok's active user accounts in the United States. According to App Ape, users aged 20 to 29 years were the second-largest user group, accounting for 26.3 percent of the video sharing app's user base on the Android platform. -

And INSTAGRAM LLC. Defendants

Case 1:17-cv-01120-UNA Document 1 Filed 08/09/17 Page 1 of 26 PageID #: 1 IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE SEARCH AND SOCIAL MEDIA PARTNERS, LLC, Plaintiffs v. FACEBOOK, INC.; INSTAGRAM, INC.; and INSTAGRAM LLC. Defendants. C.A. No. __________ JURY TRIAL DEMANDED COMPLAINT Plaintiff SEARCH AND SOCIAL MEDIA PARTNERS, LLC, (“SSMP”) brings this action against defendant FACEBOOK, INC. (“FACEBOOK”), INSTAGRAM, INC. and INSTAGRAM, LLC (INSTAGRAM, INC. and INSTAGRAM, LLC collectively, “INSTAGRAM”), and hereby alleges as follows: THE PARTIES 1. SSMP is a limited liability company organized and existing under the laws of Delaware, having a place of business in Murfreesboro, TN. SSMP is the owner of a family of patents relating to social media networks including U.S. Patent Nos. 8,620,828 (the “’828 Patent”) and 8,719,176 (the “’176 Patent,” collectively the “Asserted Patents.”) 2. Upon information and belief, FACEBOOK is a Delaware corporation, having its corporate headquarters in Menlo Park, California. FACEBOOK provides social networking services through its website, www.facebook.com and m.facebook.com (the “Facebook Website”) and its mobile applications or apps available for several mobile platforms including iOS, Windows Phone, and Android (the “Facebook Apps”). FACEBOOK may be served with process via its registered agent, the Corporation Services Company, 251 Little Falls Drive, Wilmington, Delaware 19808. 3. Upon information and belief, INSTAGRAM, INC. is a Delaware corporation with its principal place of business in Menlo Park, California. -

April 2020 Coordinated Inauthentic Behavior Report

APRIL 2020 DETAILED REPORT April 2020 Coordinated Inauthentic Behavior Report We’re constantly working to find and stop coordinated campaigns that seek to manipulate public debate across our apps. In 2019 alone, we took down over 50 networks worldwide for engaging in coordinated inauthentic behavior (CIB), including ahead of major democratic elections. These efforts are led by a cross-disciplinary team focused on finding and disrupting both the most sophisticated influence operations aimed to manipulate public debate as well as high volume inauthentic behaviors like spam and fake engagement. Over the past several years, our team has grown to over 200 people with expertise ranging from open source research, to threat investigations, cyber security, law enforcement and national security, investigative journalism, engineering, product development, data science and academic studies in disinformation. You can find more information about our previous enforcement actions here. PURPOSE OF THIS REPORT Over the past three years, we’ve shared our findings about coordinated inauthentic behavior we detect and remove from our platforms. As part of regular CIB reports, we’re sharing information about all networks we take down over the course of a month to make it easier for people to see progress we’re making in one place. WHAT IS CIB? While we investigate and enforce against any type of inauthentic behavior — including fake engagement, spam and artificial amplification — we approach enforcement against these mostly financially-motivated activities differently from how we counter foreign interference or domestic influence operations. We routinely take down less sophisticated, high-volume inauthentic behaviors like spam and we do not announce these enforcement actions when we take them.