A Fast Lightweight Based Deep Learning for Detecting Macula Fovea Using

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Urgent Action

First UA: 141/20 Index: ASA 17/3075/2020 China Date: 17 September 2020 URGENT ACTION 12 HONGKONGERS HELD INCOMMUNICADO IN CHINA Twelve Hongkongers, including pro-democracy activists, were arrested on 23 August 2020 by the Chinese coast guard for allegedly crossing the border between Hong Kong and China in secret in a speedboat. Held incommunicado, without access to their family and lawyers of their choice, they are at imminent risk of torture and other ill-treatment. Four family-hired lawyers from mainland China dropped the cases after they were threatened by authorities. TAKE ACTION: 1. Write a letter in your own words or using the sample below as a guide to one or both government officials listed. You can also email, fax, call or Tweet them. 2. Click here to let us know the actions you took on Urgent Action 141.20. It’s important to report because we share the total number with the officials we are trying to persuade and the people we are trying to help. Director Fang Hongsheng Ambassador Cui Tiankai Yantian Branch of Shenzhen Municipal Public Security Bureau Embassy of the People's Republic of China 2078 Shenyan Lu, Yantian Qu, 3505 International Place NW, Washington DC 20008 Shenzhen Shi, 518081, People’s Republic of China Phone: 202 495 2266 I Fax: 202 495 2138 Email: [email protected] Email: [email protected] [email protected] Salutation: Dear Ambassador Dear Director Fang, I am writing to express my grave concern for 12 Hongkongers who were arrested for “secretly crossing the border” (偷越 国(边)境) on 23 August 2020. -

CHINA VANKE CO., LTD.* 萬科企業股份有限公司 (A Joint Stock Company Incorporated in the People’S Republic of China with Limited Liability) (Stock Code: 2202)

Hong Kong Exchanges and Clearing Limited and The Stock Exchange of Hong Kong Limited take no responsibility for the contents of this announcement, make no representation as to its accuracy or completeness and expressly disclaim any liability whatsoever for any loss howsoever arising from or in reliance upon the whole or any part of the contents of this announcement. CHINA VANKE CO., LTD.* 萬科企業股份有限公司 (A joint stock company incorporated in the People’s Republic of China with limited liability) (Stock Code: 2202) 2019 ANNUAL RESULTS ANNOUNCEMENT The board of directors (the “Board”) of China Vanke Co., Ltd.* (the “Company”) is pleased to announce the audited results of the Company and its subsidiaries for the year ended 31 December 2019. This announcement, containing the full text of the 2019 Annual Report of the Company, complies with the relevant requirements of the Rules Governing the Listing of Securities on The Stock Exchange of Hong Kong Limited in relation to information to accompany preliminary announcement of annual results. Printed version of the Company’s 2019 Annual Report will be delivered to the H-Share Holders of the Company and available for viewing on the websites of The Stock Exchange of Hong Kong Limited (www.hkexnews.hk) and of the Company (www.vanke.com) in April 2020. Both the Chinese and English versions of this results announcement are available on the websites of the Company (www.vanke.com) and The Stock Exchange of Hong Kong Limited (www.hkexnews.hk). In the event of any discrepancies in interpretations between the English version and Chinese version, the Chinese version shall prevail, except for the financial report prepared in accordance with International Financial Reporting Standards, of which the English version shall prevail. -

A Stakeholder Perspective of Port City Sustainable Development

sustainability Article A Stakeholder Perspective of Port City Sustainable Development Jasmine Siu Lee Lam 1 and Wei Yim Yap 2,* 1 Maritime Energy and Sustainable Development Centre of Excellence, School of Civil and Environmental Engineering, Nanyang Technological University, Singapore 639798, Singapore; [email protected] 2 School of Business, Singapore University of Social Sciences, Singapore 599494, Singapore * Correspondence: [email protected]; Tel.: +65-6248-4473 Received: 12 October 2018; Accepted: 7 January 2019; Published: 16 January 2019 Abstract: As world seaborne trade continues to grow, especially in view of the Belt and Road Initiative (BRI), there is a mounting demand for a sustainable balance among the economic, social, and environmental performance of the port cities involved. This study aims to first conduct a review of existing sustainability frameworks; second, conduct a stakeholder analysis for port city sustainable development. The case studies of two major port cities in China—Guangzhou and Shenzhen, are conducted. According to the findings, a structured framework with main categories of economic, social and environmental indicators would be recommended for port cities. Dealing with diversified stakeholders and their interests is a major challenge for policy makers to overcome but opportunities do co-exist. Policy makers of Guangzhou and Shenzhen have made progress in balancing economic, social, and environmental interests in recent years. This study has taken a step forward in the research area, with a view to provide reference to stakeholders and governments in progressing towards sustainable development in port cities. Keywords: port city; sustainable development; sustainability framework; stakeholder; Belt and Road Initiative 1. Introduction In response to rising pressures from growing world population, increasing resource consumption, environmental degradation and climate change, there is a clear call for sustainable development in the world today in order to meet the United Nations’ (UN) Millennium Development Goals. -

The Story of Shenzhen

The Story of Shenzhen: Its Economic, Social and Environmental Transformation. UNITED NATIONS HUMAN SETTLEMENTS PROGRAMME THE STORY OF SHENZHEN P.O. Box 30030, Nairobi 00100, Kenya Its Economic, Social and Environmental Transformation [email protected] www.unhabitat.org THE STORY OF SHENZHEN Its Economic, Social and Environmental Transformation THE STORY OF SHENZHEN First published in Nairobi in 2019 by UN-Habitat Copyright © United Nations Human Settlements Programme, 2019 All rights reserved United Nations Human Settlements Programme (UN-Habitat) P. O. Box 30030, 00100 Nairobi GPO KENYA Tel: 254-020-7623120 (Central Office) www.unhabitat.org HS Number: HS/030/19E ISBN Number: (Volume) 978-92-1-132840-0 The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers of boundaries. Views expressed in this publication do not necessarily reflect those of the United Nations Human Settlements Programme, the United Nations, or its Member States. Excerpts may be reproduced without authorization, on condition that the source is indicated. Cover Photo: Shenzhen City @SZAICE External Contributors: Pengfei Ni, Aloysius C. Mosha, Jie Tang, Raffaele Scuderi, Werner Lang, Shi Yin, Wang Dong, Lawrence Scott Davis, Catherine Kong, William Donald Coleman UN-Habitat Contributors: Marco Kamiya and Ananda Weliwita Project Coordinator: Yi Zhang Project Assistant: Hazel Kuria Editors: Cathryn Johnson and Lawrence Scott Davis Design and Layout: Paul Odhiambo Partner: Shenzhen Association for International Culture Exchanges (SZAICE) Table of Contents Foreword .............................................................................................................................................................................. -

Spatio-Temporal Analysis of Female Breast Cancer Incidence In

Zhou et al. Chinese Journal of Cancer (2015) 34:13 DOI 10.1186/s40880-015-0013-y ORIGINAL ARTICLE Open Access Spatio-temporal analysis of female breast cancer incidence in Shenzhen, 2007–2012 Hai-Bin Zhou1†, Sheng-Yuan Liu2†, Lin Lei1, Zhong-Wei Chen2, Ji Peng1*, Ying-Zhou Yang1 and Xiao-Li Liu1 Abstract Introduction: Breast cancer is a leading tumor with a high mortality in women. This study examined the spatio-temporal distribution of the incidence of female breast cancer in Shenzhen between 2007 and 2012. Methods: The data on breast cancer incidence were obtained from the Shenzhen Cancer Registry System. To describe the temporal trend, the average annual percentage change (AAPC) was analyzed using a joinpoint regression model. Spatial autocorrelation and a retrospective spatio-temporal scan approach were used to detect the spatio-temporal cluster distribution of breast cancer cases. Results: Breast cancer ranked first among different types of cancer in women in Shenzhen between 2007 and 2012 with a crude incidence of 20.0/100,000 population. The age-standardized rate according to the world standard population was 21.1/100,000 in 2012, with an AAPC of 11.3%. The spatial autocorrelation analysis showed a spatial correlation characterized by the presence of a hotspot in south-central Shenzhen, which included the eastern part of Luohu District (Donghu and Liantang Streets) and Yantian District (Shatoujiao, Haishan, and Yantian Streets). Five spatio-temporal cluster areas were detected between 2010 and 2012, one of which was a Class 1 cluster located in southwestern Shenzhen in 2010, which included Yuehai, Nantou, Shahe, Shekou, and Nanshan Streets in Nanshan District with an incidence of 54.1/100,000 and a relative risk of 2.41; the other four were Class 2 clusters located in Yantian, Luohu, Futian, and Longhua Districts with a relative risk ranging from 1.70 to 3.25. -

Genome Characterization of COVID-19 Lineage B.1.1.7 Detected in the First Six Patients of a Cluster Outbreak — Shenzhen City, Guangdong Province, China, May 2021

China CDC Weekly Notes from the Field Genome Characterization of COVID-19 Lineage B.1.1.7 Detected in the First Six Patients of a Cluster Outbreak — Shenzhen City, Guangdong Province, China, May 2021 Yaqing He1,2,&; Peihua Niu3,&; Bo Peng1,2; Ying Sun1,2; Ziquan Lyu4; Renli Zhang1,2; Xiang Zhao3; Yang Song3; Yenan Feng3; Xiujuan Tang5; Dongfeng Kong5; Xinyi Wei1,2; Can Zhu1,2; Qingju Lu1,2; Shimin Li1,2; Xiaoliang Xiao1,2; Weiwen Liu4; Junjia He4; Junjie Xia6; Long Chen1,2,#; Xuan Zou6,#; Tiejian Feng1,6,# Screening for coronavirus disease 2019 19 virus/SARS-CoV-2 reference sequence Wuhan-Hu- (COVID-19) virus, also known as SARS-CoV-2, 1 (GenBank no. NC_045512.2). Nucleotide (nt) and infection every seven days was performed for high-risk amino acid (AA) differences between the six virus populations who worked at the Yantian Port in genome sequences from this study and the reference Yantian District, Shenzhen City, Guangdong Province. sequence Wuhan-Hu-1 were analyzed using the On May 20, 2021, an oropharyngeal swab from a 44- programs BioEdit 7.19 and MEGA version7 (2). year-old male (Case A) tested preliminarily positive for The 6 strains from Case A, Case B, Case C, Case D, COVID-19 by a quantitative real-time reverse Case E, and Case F were designated as hCoV-19/ transcription polymerase chain reaction (RT-qPCR) Guangdong/IVDC-05-01-2/2021, hCoV-19/Guang method in a third-party laboratory. On May 21, 2021, dong/IVDC-05-02-2/2021, hCoV-19/Guangdong/ 3 types of specimens (nasopharyngeal swab, IVDC-05-03/2021, hCoV-19/Guangdong/IVDC-05- oropharyngeal swab, and anal swab) from this case 04/2021, hCoV19/Guangdong/IVDC-05-05/2021, were collected by Yantian CDC and were confirmed and hCoV-19/Guangdong/IVDC-05-06/2021, respec- positive for COVID-19 virus by a RT-qPCR method tively, in this study. -

Press Conference

2019 BI-CITY BIENNALE OF URBANISM \ ARCHITECTURE (SHENZHEN) FIRST PRESS CONFERENCE PRESS KIT ■ APRIL 1, 2019 (MONDAY) 14:30-15:30 ■ SHENZHEN MUNICIPAL GOVERNMENT PRESS OFFICE UABB WECHAT PLATFORM: UABB-SZ WATCH IMAGE/TEXT LIVE STREAM OF THE CONFERENCE (IN CHINESE) CONTENTS 04 BASIC INFO OF 2019 UABB 05 PRESS RELEASE 11 THEME OF 2019 UABB 12 SECTIONS 23 MAIN VENUE 24 CURATORIAL TEAM 31 ABOUT US + CONTACT US 2019 UABB FIRST PRESS CONFERENCE ■ BASIC INFO OF 2019 UABB Theme: Urban Interactions Main Venue: Futian Railway Station and surrounding area Time: December, 2019 - March, 2020 (TBD) Sub-Venues: Sha Tau Kok Bonded Zone of Yantian District, Longwangmiao Industrial Zone of Bao’an District, Bao’an International Art Design Center, Software Town of Shenzhen Universiade of Longgang District, the historical Guanlan Market of Longhua District, Shangwei Art Village of Longhua District, Guangming Cloud Valley, Dapeng Fortress and Qianhai free trade zone 4 ■ PRESS RELEASE First Press Conference of 2019 Bi-City Biennale of Urbanism \ Architecture (Shenzhen) Held in Shenzhen April 1, 2019, Shenzhen - On the afternoon of April perspectives. The exhibition is scheduled to open 1, 2019, the first press conference of 2019 Bi-City at the Futian Railway Station and the surrounding Biennale of Urbanism\Architecture (Shenzhen) areas, the Main Venue, in Shenzhen in December [UABB (Shenzhen)] was held at the press conference 2019. At the same time, several sub-venues will be room of Shenzhen Municipal Government. Xu set up in other districts of the city to interconnect Songming, Deputy Director of the Organizing with the Main Venue, completing an organically Committee and Deputy Secretary General of interactive network throughout the city. -

Shenzhen Attractions 2 About and Plan Your Future Visits to China? for More Information Visit the Splendid China and Chinese Folk Culture Village Website

Shenzhen Visitors Guides www.discoverchina.info Guide 2: Attractions Shenzhen is a modern and beautiful metropolis located in southern China just across the border from Hong Kong, and it’s a great place to start your exploration of China. This Guide profiles Shenzhen's many attractions, which include: Theme parks offering family fun and fascinating cultural experiences. Beautiful parks, natural areas and beaches and interesting historic sites. Golf, tennis and yachting with world-class venues and facilities. Excellent shopping, accommodation and dining. The 26th Universiade games 2011. For an introduction to Shenzhen, see Shenzhen Visitors Guides, Guide 1: About Shenzhen, and to plan a visit see Shenzhen Visitors Guides, Guide 3: Plan Your Visit. Theme Parks Overseas Chinese Town Shenzhen’s Nanshan District features the Window of the World, Splendid China, Chinese Folk Culture Village and Happy Kingdom theme parks clustered together in an area known as Overseas Chinese Town (OCT). OCT also features hotels and other tourist facilities. Window of the World Experience the world's landmarks in the one day. Over 130 detailed miniature replicas of notable landmarks throughout the world are featured in eight international areas, including the pyramids of Egypt, a 1/3 scale Eiffel Tower, Buckingham Palace, Niagara Falls and the Sydney Harbour Bridge and Opera House. There are also cultural performances from around the world, and several fun rides. Splendid China Over 80 notable landmarks from around China can be experienced in miniature in the one location, including the Forbidden City, the Terracotta Army and the Great Wall. There are also cultural performances. What better place to think © January 2011 (Edition 4). -

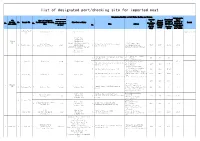

List of Designated Port/Checking Site for Imported Meat

list of designated port/checking site for imported meat Integration Facility of Cold Chain Checking and Storage Comprehensive Port Category Capacity Comprehensi CIQ Name of Designated Area of Import (harbour,river of ve Import directly No. Branch CIQ Port/Designated Checking First Port of Entry Checking Capacity of Remark No. No. port,airport,ro Specialize Capacity of under AQSIQ Site Name Address Platform Designated ad etc.) No. d Cold Integration (square Port/Designat Storage Facility meter) ed Checking (Ton) (10000 Site (10000 Capital airport 1 1 Capital Airport airport Capital Airport 1 Temporary in Use CIQ Tianjin Port Dayaowan Port Beijing 1 Bayuquan Port CIQ Erennhot Designated Port for Cold Storage,Jingjin Beijing Pinggu Beijing Jing-jin Port International 2 Pinggu office 2 road Imported meat 2 Port,Mafang Logistics 9600 1600 16.64 16.64 International Land Port Logistic Co.,Ltd. Qianwan Port, Huangdao Distirct Base,Pinggu District,Beijing Yantai Port Capital Airport Lianyungang Port No.3,Nankang Road,Gangwan China Merchants International Cold Chain 3 Avenue,Nanshan 3000 500 18.3 (Shenzhen) Co., Ltd. District,Shenzhen City 3 Shekou CIQ 3 Shekou Port harbour Shekou Port Bonded Port Area,Qianhai 21.65 China Merchants International Cold Chain Bay,No.53,Linhai 4 7000 208.8 3.35 (Shenzhen) Co., Ltd. Avenue,Nanshan District,Shenzhen City No.3,Yantian Road,Yantian 5 Shenzhen Baohui Logistics Co.,Ltd 3500 2000 58.64 District,Shenzhen City East Gate of No.439,Donghai 6 Shenzhen Ruiyuan Cold Chain Co.,Ltd Road,Yantian District,Shenzhen 11000 -

A Fast Lightweight Based Deep Fusion Learning for Detecting Macula Fovea Using Ultra-Widefield Fundus Images

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 24 August 2021 doi:10.20944/preprints202108.0469.v1 Article A fast lightweight based deep fusion learning for detecting macula fovea using ultra-widefield Fundus images Han Wang 1,2,3, Jie Yang 4,5*, Yaoyang Wu5*, Wencai Du6*, Simon Fong5, Yutong Duan3, Xiaoping Yao7, Xiaoshu Zhou2, Qingqian Li8, Chen Lin9*, Jiang Liu10 and Lina Huang 11* 1 Institute of Data Science, City University of Macau, Macau 999078; [email protected] 2 School of Computer, Beijing Institute of Technology Zhuhai, China; [email protected], [email protected] 3 Zhuhai Institute of Advanced Technology Chinese Academy of Sciences, China 519000; {wanghan, duanyutong}@ziat.ac.cn 4 College of Artificial Intelligence, Chongqing Industry&Trade Polytechnic, China 408000; [email protected] 5 Faculty of Science and Technology, University of Macau, Macau 999078; {jie.yang, yc07481}@connect.um.edu.mo, [email protected] 6 University of Saint Joseph, Macau 999078; [email protected] 7 Union hospital of Fujian Medical University, Fujian, China 353000; [email protected] 8 Department of Ophthalmology, People's Hospital of Yantian District, Shenzhen, China; [email protected] 9 Department of Ophthalmology, Shenzhen People's Hospital, Shenzhen, China, [email protected] 10 Department of Computer Science and Engineering, Southern University of Science and Technology, Shenzhen, China; [email protected] 11 Jinan University Affiliated Shenzhen Aier Eye Hospital, China 518000; [email protected] * Correspondence: J. Y., [email protected]; Y. W., [email protected]; W.D., [email protected]; C.H., [email protected]; L. -

Sub-Subsidiary)’S Interventions in an Administrative Lawsuit

April 6, 2021 News Release Company: Olympus Corporation Yasuo Takeuchi, Director, Representative Executive Officer, President and CEO (Code: 7733, First Section, Tokyo Stock Exchange) Contact: Takaaki Sakurai, Vice President, Investor Relations TEL: +81-3-3340-2111 Notice Concerning Termination of the Consolidated Subsidiary (Sub-subsidiary)’s Interventions in an Administrative Lawsuit As announced in the “Notice Concerning the Consolidated Subsidiary (Sub-subsidiary)’s Intervention in an Administrative Lawsuit” dated May 28, 2020, Olympus (Shenzhen) Industrial Ltd. (“OSZ”), a consolidated subsidiary (sub-subsidiary) of Olympus Corporation (“Olympus”), intervened in the administrative lawsuit (the “Lawsuit (v. Regulation)”) initiated by Shenzhen YL Technology Co., Ltd. (“YL”) against Shenzhen Administration for Market Regulation (“the Regulation”). As announced in the “Notice Concerning the Consolidated Subsidiary (Sub-subsidiary)’s Intervention in an Administrative Lawsuit” dated March 12, 2021, OSZ also intervened in the administrative lawsuit (the “Lawsuit (v. Commission)”) initiated by YL against Shenzhen Science and Technology Innovation Commission (“the Commission”). Since both of the Lawsuit (v. Regulation) and the Lawsuit (v. Commission) were concluded due to withdrawal of complaint by YL, OSZ’s interventions in the both lawsuits have also been terminated. Therefore, Olympus hereby announces as follows: 1. The Lawsuit (v. Regulation) As announced in the “(Change of Disclosure Matters) Notice Concerning Cancellation of Transfer of Equity Interests of Consolidated Subsidiary (Sub-subsidiary)” dated January 20, 2020, although the parties have executed a transfer agreement (“Transfer Agreement”), as of December 25, 2018, to transfer all the equity interests of OSZ held by Olympus (China) Co. Ltd. (“OCH”), a consolidated subsidiary of Olympus, to YL (the “Transfer”), the Transfer Agreement has been terminated on January 20, 2020. -

Chinese Mainland

Address List of Special Warehousing Service Note: The address marked in red are newly added address. (Effective date:October 1, 2021) Province / Directly- controlled City District/county Town, Sub-district and House Number Municipality / Autonomous Region/SAR B4-25, Gate 1, ProLogis Logistics Park, No.1 Tiedi Road, Anhui Province Hefei Shushan District High-tech Zone No.18 Tianzhushan Road, Longshan Sub-district, Wuhu Anhui Province Wuhu Jiujiang District Economic and Technological Development Zone Anhui Province Chuzhou Langya District Longji Leye Photovoltaic Co., Ltd., No.19 Huai'an Road 3/F, No.8 Building, South Area, Lixiang Innovation Park, Anhui Province Chuzhou Nanqiao District Chuzhou, 018 Township Road Anhui Province Chuzhou Nanqiao District No.19 Huai'an Road Yuanrong New Material Holding Co., Ltd., 50 Meters Anhui Province Hefei Shushan District Westward of Bridge of Intersection of Changning Avenue and Ningxi Road Anhui Province Hefei Yaohai District No.88 Dayu Road Anhui Province Hefei Yaohai District No.2177 Dongfang Avenue Beijing BOE Vision-Electronic Technology Co., Ltd., No. Anhui Province Hefei Yaohai District 2177 Dongfang Avenue Anhui Province Hefei Yaohai District No.668 Longzihu Road Anhui Province Hefei Yaohai District No. 668 Longzihu Road Anhui Province Hefei Yaohai District No.2177 Tongling North Road Anhui Province Hefei Yaohai District No.3166 Tongling North Road Anhui Province Hefei Yaohai District No.8 Xiangwang Road Anhui Province Wuhu Jiujiang District No. 8 Anshan Road Anhui Province Wuhu Jiujiang District