Pdf) Detailing D*Lite Pathfinding Algorithm

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Management of Large Sets of Image Data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak

Management of large sets of image data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak Download free books at Karol Kozak Management of large sets of image data Capture, Databases, Image Processing, Storage, Visualization Download free eBooks at bookboon.com 2 Management of large sets of image data: Capture, Databases, Image Processing, Storage, Visualization 1st edition © 2014 Karol Kozak & bookboon.com ISBN 978-87-403-0726-9 Download free eBooks at bookboon.com 3 Management of large sets of image data Contents Contents 1 Digital image 6 2 History of digital imaging 10 3 Amount of produced images – is it danger? 18 4 Digital image and privacy 20 5 Digital cameras 27 5.1 Methods of image capture 31 6 Image formats 33 7 Image Metadata – data about data 39 8 Interactive visualization (IV) 44 9 Basic of image processing 49 Download free eBooks at bookboon.com 4 Click on the ad to read more Management of large sets of image data Contents 10 Image Processing software 62 11 Image management and image databases 79 12 Operating system (os) and images 97 13 Graphics processing unit (GPU) 100 14 Storage and archive 101 15 Images in different disciplines 109 15.1 Microscopy 109 360° 15.2 Medical imaging 114 15.3 Astronomical images 117 15.4 Industrial imaging 360° 118 thinking. 16 Selection of best digital images 120 References: thinking. 124 360° thinking . 360° thinking. Discover the truth at www.deloitte.ca/careers Discover the truth at www.deloitte.ca/careers © Deloitte & Touche LLP and affiliated entities. Discover the truth at www.deloitte.ca/careers © Deloitte & Touche LLP and affiliated entities. -

Simpleware Scanip Medical Software Solutions for Clinical Applications

Simpleware ScanIP Medical Software Solutions for Clinical Applications FDA 510(k) cleared CE marked ISO 13485:2016 certified Software Solutions for Clinical Applications Patient-Specific Surgical Planning Patient-Specific Design Improve Informed Decision- Accelerate New Product Making Development • Generate models from 3D image data to visualize • Import, position and integrate CAD devices into patient treatment options image data • Landmark and perform measurements on anatomical • Reverse engineer and analyse patient-specific data to assess surgical strategies device designs • Export models for simulating clinical procedures • Generate simulation-ready models for evaluating device performance In Silico Clinical Trials R&D for Medical Devices Increase Confidence in Your Harness the Freedom to Solution Experiment • Explore post-op outcomes or implant positioning or • Generate accurate models for 3D printing, simulation or device performance CAD design • Fast and accurate image processing environment for • Speed up iterative device designs with rapid image-to- creating computational models model workflows • Streamline repeatable workflows with scripting, • Use image-based tools to reduce dependence on automation, or customization physical testing 3D Image Data Processing for Pre-Clinical Applications with Simpleware ScanIP Medical Intuitive and Powerful Software Tackle Clinical Imaging Challenges with Confidence When working in a clinical setting, Simpleware ScanIP Medical is the ideal choice for those working with 3D imaging to create medical -

Simpleware LTD. Ə Dr. Gareth James Marketing and PR Officer Bradninch

DEPARTMENT OF HEALTH & HUMAN SERVICES Public Health Service __________________________________________________________________________________________________________________________ Food and Drug Administration 10903 New Hampshire Avenue Document Control Center – WO66-G609 Silver Spring, MD 20993-0002 Simpleware LTD. April 17, 2015 Ə Dr. Gareth James Marketing and PR Officer Bradninch Hall Castle Street EXETER, GB EX43PL DEVON Re: K142779 Trade/Device Name: ScanIP; ScanIP: Medical Edition; ScanIP: Med Regulation Number: 21 CFR 892.2050 Regulation Name: Picture Archiving and Communications System Regulatory Class: II Product Code: LLZ Dated: March 19, 2015 Received: March 25, 2015 Dear Dr. James: We have reviewed your Section 510(k) premarket notification of intent to market the device referenced above and have determined the device is substantially equivalent (for the indications for use stated in the enclosure) to legally marketed predicate devices marketed in interstate commerce prior to May 28, 1976, the enactment date of the Medical Device Amendments, or to devices that have been reclassified in accordance with the provisions of the Federal Food, Drug, and Cosmetic Act (Act) that do not require approval of a premarket approval application (PMA). You may, therefore, market the device, subject to the general controls provisions of the Act. The general controls provisions of the Act include requirements for annual registration, listing of devices, good manufacturing practice, labeling, and prohibitions against misbranding and adulteration. Please note: CDRH does not evaluate information related to contract liability warranties. We remind you, however, that device labeling must be truthful and not misleading. If your device is classified (see above) into either class II (Special Controls) or class III (PMA), it may be subject to additional controls. -

Hands-On Engineering Courses in the COVID-19 Pandemic: Adapting Medical Device Design for Remote Learning

Physical and Engineering Sciences in Medicine https://doi.org/10.1007/s13246-020-00967-z SCIENTIFIC PAPER Hands-on engineering courses in the COVID-19 pandemic: adapting medical device design for remote learning Yanning Liu1 · Aishwarya Vijay2 · Steven M. Tommasini1,3 · Daniel Wiznia3,4,5 Received: 1 September 2020 / Accepted: 18 December 2020 © Australasian College of Physical Scientists and Engineers in Medicine 2021 Abstract The COVID-19 pandemic has challenged the status quo of engineering education, especially in highly interactive, hands-on design classes. Here, we present an example of how we efectively adjusted an intensive hands-on, group project-based engi- neering course, Medical Device Design & Innovation, to a remote learning curriculum. We frst describe the modifcations we made. Drawing from student pre and post feedback surveys and our observations, we conclude that our adaptations were overall successful. Our experience may guide educators who are transitioning their engineering design courses to remote learning. Keywords COVID-19 · Engineering education · Remote learning · STEM · Product design · Medical device design Introduction how we efectively adjusted an intensive hands-on, group project-based engineering course, Medical Device Design The COVID-19 pandemic has challenged the status quo and Innovation.[4]. of engineering education, especially in highly interactive, Medical Device Design and Innovation is a project hands-on design classes.[1, 2] Traditionally, these classes focused course ofered to all Yale University -

Sergey Makarov

Sergey Makarov · Marc Horner Gregory Noetscher Editors Brain and Human Body Modeling Computational Human Modeling at EMBC 2018 Brain and Human Body Modeling Sergey Makarov • Marc Horner Gregory Noetscher Editors Brain and Human Body Modeling Computational Human Modeling at EMBC 2018 Editors Sergey Makarov Marc Horner Massachusetts General Hospital ANSYS, Inc. Boston, MA, USA Evanston, IL, USA Worcester Polytechnic Institute Worcester, MA, USA Gregory Noetscher Worcester Polytechnic Institute Worcester, MA, USA This book is an open access publication. ISBN 978-3-030-21292-6 ISBN 978-3-030-21293-3 (eBook) https://doi.org/10.1007/978-3-030-21293-3 © The Editor(s) (if applicable) and The Author(s) 2019 Open Access This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this book are included in the book’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the book’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. -

Simpleware Software Solutions for Life Sciences from 3D Images to Models Applications in Life Sciences

Simpleware Software Solutions for Life Sciences From 3D Images to Models Applications in Life Sciences Orthopedics Product Design & Analysis Cardio & Respiratory Systems Accurate Anatomical Consumer Products Physiological Flow Models and Wearables Analysis • Automated and semi-automated • Customize product designs to • Reslice images along vessels tools to segment anatomies individual anatomies and airways • Combine CAD implants and • Virtually check the fit and • Combine anatomical data with anatomical data function for devices including stent models • Accurately quantify bone electronic wearables • Mesh boundary layers and add geometries • Export models to simulate real- custom inlets and outlets for • Export multi-part FE/CFD world performance fluid flow analysis meshes to solvers • Gain better insight for future • Quantify vessels with centerline products by analyzing network tools real anatomies EM & Neuromodulation Medical Device R&D Anatomical 3D Printing Human Body Models Device and Image High-Quality STL for Simulation Integration Production • Use automated and/or semi- • Rapidly integrate CAD devices into • Print medical devices and automated segmentation tools CT/MRI scans anatomical parts from scans • Integrate CAD designs like MRI • Research human/device • Generate robust STL files ready coils or electrodes with image data interactions for 3D printing • Easy-to-use registration tools for • Automate repeatable operations • Conforming interfaces for multi- positioning devices with scripting material printing • Generate and export -

An Open-Source, Fully-Automated, Realistic Volumetric-Approach-Based Simulator for TES*

ROAST: an open-source, fully-automated, Realistic vOlumetric-Approach-based Simulator for TES* Yu Huang1;2, Abhishek Datta2, Marom Bikson1 and Lucas C. Parra1 Abstract— Research in the area of transcranial electrical Providence, RI), COMSOL Multiphysics (COMSOL Inc., stimulation (TES) often relies on computational models of cur- Burlington, MA)). rent flow in the brain. Models are built on magnetic resonance Given this great variety and complexity, there is a need images (MRI) of the human head to capture detailed individual anatomy. To simulate current flow, MRIs have to be segmented, to automate these tools into a complete processing pipeline. virtual electrodes have to be placed on the scalp, the volume is Existing pipelines are either not fully automated (e.g. NFT tessellated into a mesh, and the finite element model is solved [11]) or difficult to use (e.g. SciRun [12]). Notable in numerically to estimate the current flow. Various software tools terms of ease of use (if not of installation) is SimNIBS are available for each step, as well as processing pipelines [13], which integrates FreeSurfer, FSL, Gmsh and getDP to that connect these tools for automated or semi-automated processing. The goal of the present tool – ROAST – is to provide provide a complete end-to-end solution, based on the surface an end-to-end pipeline that can automatically process individual segmentation of the head. In the TES modeling literature, heads with realistic volumetric anatomy leveraging open-source however, FEM based on volumetric segmentation is preferred software (SPM8, iso2mesh and getDP) and custom scripts over boundary element method (BEM) that is more common to improve segmentation and execute electrode placement. -

From CT Scan to Plantar Pressure Map Distribution of a 3D Anatomic Human Foot

Excerpt from the Proceedings of the COMSOL Conference 2010 Paris From CT Scan to Plantar Pressure Map Distribution of a 3D Anatomic Human Foot P. Franciosa, S. Gerbino* University of Molise, School of Engineering *Corresponding author: Via Duca degli Abruzzi - 86039 Termoli (CB) – Italy [email protected] Abstract: Understanding the stress-strain behav- methods, based on FE modeling, have also been ior of human foot tissues and pressure map dis- adopted. FE models of human foot have been tributions at the plantar interface is of interest developed under certain simplifications and as- into biomechanical investigations. In particular, sumptions (see [7] and [8]) such as: (i) simplified monitoring plantar pressure maps is crucial to or partial foot shape, (ii) assumptions of non- establishing the perceived human comfort of linear hyper-elastic material law, (iii) ligaments shoe insoles. and plantar fascia modeled as equivalent forces In this contest, a 3D anatomical detailed FE hu- or elastic beams/bars, (iiii) no friction or thermal man foot model was created, starting from CT effect, at plantar foot interface, accounted. (Computer Tomography) scans of a 29 years old Based on these assumptions, valuable 3D FE male. Anatomical domains related to bones and models were presented in the literature [9], [10]. soft tissues were generated, using segmentation In this contest, the geometrical complexity of techniques, and then processed into a CAD envi- foot structure was captured through reverse en- ronment to model 3D domains by using gineering methodologies, based on CT (Com- loft/sweep and boolean operations. Finally, FE puter Tomography) or MR (Magnetic Reso- model was generated into Comsol Multiphys- nance) scans. -

PLM Industry Summary Jillian Hayes, Editor Vol

PLM Industry Summary Jillian Hayes, Editor Vol. 16 No. 49 Friday 5 December 2014 Contents CIMdata News _____________________________________________________________________ 2 CIMdata Publishes PLM Industry Report ____________________________________________________2 CIMdata Releases Series of Country-Specific PLM Market Analysis Reports ________________________5 A New Business Calculus from IBM Software Group ___________________________________________6 SmarTeam: Offering Users Options for Growth: a CIMdata Commentary __________________________10 Subscribing to Product Design Software: a CIMdata Commentary ________________________________12 Company News ____________________________________________________________________ 14 3D Systems Makes Forbes’ List of America’s Best 100 Small Companies __________________________14 Adris, MicroCAD and Graitec UK become one Company Graitec Limited _________________________14 ASCENT- Center for Technical Knowledge Announces Plans to Collaborate with 4D Technologies on Training and Learning Resources for Autodesk Software Users __________________________________15 Autodesk Makes Design Software Free to Schools Worldwide ___________________________________16 Brian Beattie Named EVP, Business Operations and Chief Administrative Officer ___________________17 Cimatron Donates $1.7 Million Software to Iowa Community College for Advanced CAD/CAM Training 18 Dassault Systèmes and Expo Milano 2015: Partners to Promote Eco-Sustainability Exhibition __________18 ESI and EDF Energies Nouvelles sign an Exclusive -

Synopsys (Northern Europe) Ltd

Synopsys (Northern Europe) Ltd. April 1, 2021 ℅ Ms. Jessica James Business Process Analyst Bradninch Hall, Castle Street Exeter, Devon EX4 3PL UNITED KINGDOM Re: K203195 Trade/Device Name: Simpleware ScanIP Medical Regulation Number: 21 CFR 892.2050 Regulation Name: Picture archiving and communications system Regulatory Class: Class II Product Code: LLZ Dated: October 26, 2020 Received: October 28, 2020 Dear Ms. James: We have reviewed your Section 510(k) premarket notification of intent to market the device referenced above and have determined the device is substantially equivalent (for the indications for use stated in the enclosure) to legally marketed predicate devices marketed in interstate commerce prior to May 28, 1976, the enactment date of the Medical Device Amendments, or to devices that have been reclassified in accordance with the provisions of the Federal Food, Drug, and Cosmetic Act (Act) that do not require approval of a premarket approval application (PMA). You may, therefore, market the device, subject to the general controls provisions of the Act. Although this letter refers to your product as a device, please be aware that some cleared products may instead be combination products. The 510(k) Premarket Notification Database located at https://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfpmn/pmn.cfm identifies combination product submissions. The general controls provisions of the Act include requirements for annual registration, listing of devices, good manufacturing practice, labeling, and prohibitions against misbranding and adulteration. Please note: CDRH does not evaluate information related to contract liability warranties. We remind you, however, that device labeling must be truthful and not misleading. -

Multiphysics Software Applications in Reverse Engineering

Multiphysics Software Applications in Reverse Engineering *W. Wang1, K. Genc2 1University of Massachusetts Lowell, Lowell, MA, USA 2Simpleware, Exeter, United Kingdom *Corresponding author: University of Massachusetts Lowell, One University Avenue, Lowell, MA 01854, USA. [email protected] Abstract: This paper will explore the segmented geometry that can be directly applications of advanced software such as imported into the COMSOL environment. An ScanIP and the +FE module (Simpleware Ltd., overview of the workflow steps are shown in Exeter UK) and COMSOL multiphysics Figure 1. The COMSOL multiphysics software software in reinventing an existing part in the environment is capable of facilitating all steps in absence of original design data. It showcases the the modeling and simulation processes from part reverse engineering workflow of going from a definining, feature based meshing to 3D computed tomography (CT) scan of an visualization and solution analysis. However, automotive engine manifold to a COMSOL this paper aims at the demonstration of simulation ready, multi-part computational fluid integration of Simpleware and COMSOL in dynamics (CFD) or finite element (FE) mesh of reverse engineering applications, and we will the segmented geometry. It discusses the focus on the first three steps (blue), which effectiveness of applying multiphysics software describe the workflow of generating a in reverse engineering practice with a focus on simulation-ready mesh from 3D images using the the applicability of Simpleware and COMSOL in ScanIP+FE softrware environment. three-dimensional (3D) image-based modeling, structure analysis and functionality evaluation. Keywords: Reverse Engineering, 3D Image- Based Simulation, Computational Fluid Dynamics, Finite Element 1. Introduction Figure1. Overview of the 3D image-based reverse During the past decade reverse engineering engineering workflow. -

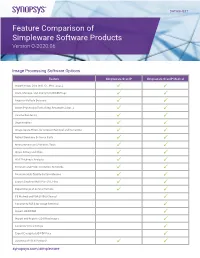

Feature Comparison of Simpleware Software Products Version Q-2020.06

DATASHEET Feature Comparison of Simpleware Software Products Version Q-2020.06 Image Processing Software Options Feature Simpleware ScanIP Simpleware ScanIP Medical Import Image Data (incl. CT, MRI, Jpeg…) Store, Manage, and Anonymize DICOM tags Register Multiple Datasets Image Processing Tools (Crop, Resample, Align…) Volume Rendering Segmentation Image Space Filters for Artefact Removal and Correction Robust Booleans Between Parts Measurement and Statistics Tools Shape Fitting and Stats Wall Thickness Analysis Generate and Probe Centerline Networks Generate High Quality Surface Meshes Export Single or Multi-Part STL Files Export Range of Suface Formats CE Marked and FDA (510k) Cleared Connect to PACS for Image Retrieval Import 4D DICOM Import and Register 2D X-Ray Images Generate Virtual X-Rays Export Encrypted 3D PDF Files Scripting API (C#, Python)* synopsys.com/simpleware Add-On Modules Automated Segmentation CAD Integration Model Generation Physics Simulation AS AS Custom Design Feature CAD FE NURBS SOLID FLOW LAPLACE Cardio Ortho Modeler Link Fully Automated Segmentation of Hearts Automatic Placement of Anatomical Landmarks for Hearts Fully Automated Segmentation of Hips and Knees Automatic Placement of Anatomical Landmarks for Hips and Knees Automatic Region of Interest Detection for Auto Segmentation Fully Customized Automated Image Processing Solution Import Surface Objects (STL, STEP, IGES…) Check and Fix Surface Objects Create Primitive Shapes Register Datasets (Automatic, Landmark…) Position Surface Objects Surface Filters (Smooth, Decimate…) Surface Editing (Extrude, Clip, Hollow, Sweep…) Convert Surfaces to Masks, Masks to Surfaces Robust Booleans Between Surface Parts Surface Deviation Feature Edge Preservation Internal Structures Wizard Mesh Decimation and Refinement Options Export STL Files Import SOLIDWORKS® Parts or Assemblies Dynamic Link with SOLIDWORKS® (Pull, Position, Orientation...) 2 Add-On Modules Cont.