RGB Inter-Channel Measures for Morphological Color Texture Characterization

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hit-Or-Miss Transform

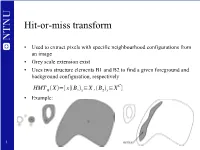

Hit-or-miss transform • Used to extract pixels with specific neighbourhood configurations from an image • Grey scale extension exist • Uses two structure elements B1 and B2 to find a given foreground and background configuration, respectively C HMT B X={x∣B1x⊆X ,B2x⊆X } • Example: 1 Morphological Image Processing Lecture 22 (page 1) 9.4 The hit-or-miss transformation Illustration... Morphological Image Processing Lecture 22 (page 2) Objective is to find a disjoint region (set) in an image • If B denotes the set composed of X and its background, the• match/hit (or set of matches/hits) of B in A,is A B =(A X) [Ac (W X)] ¯∗ ª ∩ ª − Generalized notation: B =(B1,B2) • Set formed from elements of B associated with B1: • an object Set formed from elements of B associated with B2: • the corresponding background [Preceeding discussion: B1 = X and B2 =(W X)] − More general definition: • c A B =(A B1) [A B2] ¯∗ ª ∩ ª A B contains all the origin points at which, simulta- • ¯∗ c neously, B1 found a hit in A and B2 found a hit in A Hit-or-miss transform C HMT B X={x∣B1x⊆X ,B2x⊆X } • Can be written in terms of an intersection of two erosions: HMT X= X∩ X c B B1 B2 2 Hit-or-miss transform • Simple example usages - locate: – Isolated foreground pixels • no neighbouring foreground pixels – Foreground endpoints • one or zero neighbouring foreground pixels – Multiple foreground points • pixels having more than two neighbouring foreground pixels – Foreground contour points • pixels having at least one neighbouring background pixel 3 Hit-or-miss transform example • Locating 4-connected endpoints SEs for 4-connected endpoints Resulting Hit-or-miss transform 4 Hit-or-miss opening • Objective: keep all points that fit the SE. -

Introduction to Mathematical Morphology

COMPUTER VISION, GRAPHICS, AND IMAGE PROCESSING 35, 283-305 (1986) Introduction to Mathematical Morphology JEAN SERRA E. N. S. M. de Paris, Paris, France Received October 6,1983; revised March 20,1986 1. BACKGROUND As we saw in the foreword, there are several ways of approaching the description of phenomena which spread in space, and which exhibit a certain spatial structure. One such approach is to consider them as objects, i.e., as subsets of their space of definition. The method which derives from this point of view is. called mathematical morphology [l, 21. In order to define mathematical morphology, we first require some background definitions. Consider an arbitrary space (or set) E. The “objects” of this :space are the subsets X c E; therefore, the family that we have to handle theoretically is the set 0 (E) of all the subsets X of E. The set p(E) is incomparably less arbitrary than E itself; indeed it is constructured to be a Boolean algebra [3], that is: (i) p(E) is a complete lattice, i.e., is provided with a partial-ordering relation, called inclusion, and denoted by “ c .” Moreover every (finite or not) family of members Xi E p(E) has a least upper bound (their union C/Xi) and a greatest lower bound (their intersection f7 X,) which both belong to p(E); (ii) The lattice p(E) is distributiue, i.e., xu(Ynz)=(XuY)n(xuZ) VX,Y,ZE b(E) and is complemented, i.e., there exist a greatest set (E itself) and a smallest set 0 (the empty set) such that every X E p(E) possesses a complement Xc defined by the relationships: XUXC=E and xn xc= 0. -

Mathematical Morphology

Mathematical morphology Václav Hlaváč Czech Technical University in Prague Faculty of Electrical Engineering, Department of Cybernetics Center for Machine Perception http://cmp.felk.cvut.cz/˜hlavac, [email protected] Outline of the talk: Point sets. Morphological transformation. Skeleton. Erosion, dilation, properties. Thinning, sequential thinning. Opening, closing, hit or miss. Distance transformation. Morphology is a general concept 2/55 In biology: the analysis of size, shape, inner structure (and relationship among them) of animals, plants and microorganisms. In linguistics: analysis of the inner structure of word forms. In materials science: the study of shape, size, texture and thermodynamically distinct phases of physical objects. In signal/image processing: mathematical morphology – a theoretical model based on lattice theory used for signal/image preprocessing, segmentation, etc. Mathematical morphology, introduction 3/55 Mathematical morphology (MM): is a theory for analysis of planar and spatial structures; is suitable for analyzing the shape of objects; is based on a set theory, integral algebra and lattice algebra; is successful due to a a simple mathematical formalism, which opens a path to powerful image analysis tools. The morphological way . The key idea of morphological analysis is extracting knowledge from the relation of an image and a simple, small probe (called the structuring element), which is a predefined shape. It is checked in each pixel, how does this shape matches or misses local shapes in the image. MM founding fathers 4/55 Georges Matheron (∗ 1930, † 2000) Jean Serra (∗ 1940) Matheron, G. Elements pour une Theorie del Milieux Poreux Masson, Paris, 1967. Serra, J. Image Analysis and Mathematical Morphology, Academic Press, London 1982. -

Revised Mathematical Morphological Concepts

Advances in Pure Mathematics, 2015, 5, 155-161 Published Online March 2015 in SciRes. http://www.scirp.org/journal/apm http://dx.doi.org/10.4236/apm.2015.54019 Revised Mathematical Morphological Concepts Joseph Ackora-Prah, Yao Elikem Ayekple, Robert Kofi Acquah, Perpetual Saah Andam, Eric Adu Sakyi, Daniel Gyamfi Department of Mathematics, Kwame Nkrumah University of Science and Technology, Kumasi, Ghana Email: [email protected], [email protected], [email protected], [email protected], [email protected], [email protected] Received 17 February 2015; accepted 12 March 2015; published 23 March 2015 Copyright © 2015 by authors and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY). http://creativecommons.org/licenses/by/4.0/ Abstract We revise some mathematical morphological operators such as Dilation, Erosion, Opening and Closing. We show proofs of our theorems for the above operators when the structural elements are partitioned. Our results show that structural elements can be partitioned before carrying out morphological operations. Keywords Mathematical Morphology, Dilation, Erosion, Opening, Closing 1. Introduction Mathematical morphology is the theory and technique for the analysis and processing of geometrical structures, based on set theory, lattice theory, topology, and random functions. We consider classical mathematical mor- phology as a field of nonlinear geometric image analysis, developed initially by Matheron [1], Serra [2] and their collaborators, which is applied successfully to geological and biomedical problems of image analysis. The basic morphological operators were developed first for binary images based on set theory [1] [2] inspired by the work of Minkowski [3] and Hadwiger [4]. -

Mathematical Morphology a Non Exhaustive Overview

Mathematical Morphology a non exhaustive overview Adrien Bousseau Mathematical Morphology • Shape oriented operations, that “ simplify image data, preserving their essential shape characteristics and eliminating irrelevancies” [Haralick87] Mathematical Morphology 2 Overview • Basic morphological operators • More complex operations • Conclusion and References Mathematical Morphology 3 Overview • Basic morphological operators – Binary – Grayscale – Color – Structuring element • More complex operations • Conclusion and References Mathematical Morphology 4 Basic operators: binary • Dilation , erosion by a structuring element Mathematical Morphology 5 Basic operators: binary • Opening ° : remove capes, isthmus and islands smaller than the structuring element Mathematical Morphology 6 Basic operators: binary • Closing ° : fill gulfs, channels and lakes smaller than the structuring element Mathematical Morphology 7 Basic operators: binary • Sequencial filter: open-close or close-open Mathematical Morphology 8 Overview • Basic morphological operators – Binary – Grayscale – Color – Structuring element • More complex operations • Conclusion and References Mathematical Morphology 9 Basic operator: grayscale • Dilation : max over the structuring element Mathematical Morphology 10 Basic operator: grayscale • Erosion : min over the structuring element Mathematical Morphology 11 Basic operator: grayscale • Opening ° : remove light features smaller than the structuring element Mathematical Morphology 12 Basic operator: grayscale • Closing ° : remove -

Dilation and Erosion

CS 4495 Computer Vision – A. Bobick Morphology CS 4495 Computer Vision Binary images and Morphology Aaron Bobick School of Interactive Computing CS 4495 Computer Vision – A. Bobick Morphology Administrivia • PS6 – should be working on it! Due Sunday Nov 24th. • Some issues with reading frames. Resolved? • Exam: Tues November 26th . • Short answer and multiple choice (mostly short answer) • Study guide is posted in calendar. • Bring a pen. • PS7 – we still hope to have out by 11/26. Will be straight forward implementation of Motion History Images CS 4495 Computer Vision – A. Bobick Morphology Binary Image Analysis Binary image analysis • consists of a set of image analysis operations that are used to produce or process binary images, usually images of 0’s and 1’s. 0 represents the background 1 represents the foreground 00010010001000 00011110001000 00010010001000 Slide by Linda Shapiro CS 4495 Computer Vision – A. Bobick Morphology Binary Image Analysis • Is used in a number of practical applications • Part inspection • Manufacturing • Document processing Slide by Linda Shapiro CS 4495 Computer Vision – A. Bobick Morphology What kinds of operations? • Separate objects from background and from one another • Aggregate pixels for each object • Compute features for each object Slide by Linda Shapiro CS 4495 Computer Vision – A. Bobick Morphology Example: red blood cell image • Many blood cells are separate objects • Many touch – bad! • Salt and pepper noise from thresholding • How useable is this data? Slide by Linda Shapiro CS 4495 Computer Vision – A. Bobick Morphology Results of analysis • 63 separate objects detected • Single cells have area about 50 • Noise spots • Gobs of cells Slide by Linda Shapiro CS 4495 Computer Vision – A. -

Color Hit-Or-Miss Transform (CMOMP)

20th European Signal Processing Conference (EUSIPCO 2012) Bucharest, Romania, August 27 - 31, 2012 COLOR HIT-OR-MISS TRANSFORM (CMOMP ) Audrey Ledoux, Noel¨ Richard and Anne-Sophie Capelle-Laize´ University of Poitiers, XLIM-SIC JUR CNRS 7252, Poitiers, France Telephone: +33 (0)5 49 49 74 92 Fax: +33 (0)5 49 49 65 70 ABSTRACT 2. HIT-OR-MISS TRANSFORM Often, the mathematical morphology is reduced to the orde- The Hit-or-Miss Transform (HMT ) allows to find specific ring construction and the structuring elements are limited to shapes in images. It was initially developed for binary images flat shapes. In this paper, we propose a new method based on by Matheron and Serra [7]. The searched shapes are defined concept of convergence. Within this proposition, the defini- with a pair of disjoint Structuring Elements (SE) that frame tion of non-flat structuring element is now possible. By ex- it, one for the foreground shape and one for the background tending the mathematical morphology hit-or-miss transform shape. The mathematical expression of the HMT for an ima- to the color, we show that these formalisms are well adapted ge f and its structuring elements g = fg0; g00g is: for complex color images, as skin images for dermatological 0 c 00 purposes. We give and comment results on synthetic and real HMTg(f)(x) = (f b g )(x) \ (f b g )(x) (1) images. where f c is the complement of f, f c = fxjx2 = fg. Index Terms— color mathematical morphology, non-flat Several variations exists around the definition in grayscale structuring element, hit-or-miss [8, 9, 10, 11]. -

Sed For: GW 9 Ida-Maria • Pre-Processing Suggested Problem: 9.7 a • Noise Filtering, Shape Simplification,

Morphology-form and structure Lecture 6, Morphological image processing I mathematical framework used for: GW 9 Ida-Maria • pre-processing Suggested problem: 9.7 a • noise filtering, shape simplification, ... Sketch the result of A first eroded by • enhancing object structure B4 and then dilated by B2 • skeletonization, convex hull... • segmentation L • quantitative description L/4 . L L • area, perimeter, ... B2 B4 A Some set theory Some more set theory A is a set in Z2. ∈ If a=(a 1,a 2) is an element in A: a A. AC ∉ C If a=(a 1,a 2) is not an element in A: a A complement of A: A ={w|w ∉A} empty set : ∅ difference of A and B: set specified using { }, e.g., C={w|w=-d, for d∈D} A-B={w|w ∈A,w ∉B}=A ∩BC A-B every element in A is also in B (subset): A ⊆B A∪B reflection of A: union of A and B: Â={w|w=-a, for a∈A} C=A ∪B={c|c ∈A or c∈B} intersection of A and B: translation of A by a point z=(z 1,z 2): C=A ∩B={c|c ∈A and c∈B} (A) ={c|c=a+c, for a∈A} ∩ z ∩ ∅ A B disjoint/mutually exclusive : A B= (A) Z Logical operations • pixelwise combination of images (AND, OR, NOT, XOR) structuring element (SE) A B • small set to probe the image under study • for each SE, define origo – SE in point p: origo coincides with p NOT A A AND B • shape and size must be adapted to geometric properties for the objects A OR B A XOR B basic idea how to describe SE many different ways! • in parallel for each pixel in binary image: information needed: • position of origo for SE – check if SE is ”satisfied” • positions of elements belonging to SE – output pixel is set -

Morphological Image Processing

Morphological Image Processing C. Andrés Méndez March 2015 Introduction • In many areas of knowledge Morphology deals with form and structure (biology, linguistics, social studies, etc) • Mathematical Morphology deals with set theory • Sets in Mathematical Morphology represents objects in an Image 2 Mathematic Morphology • Used to extract image components that are useful in the representation and description of region shape, such as – boundaries extraction – skeletons – convex hull (italian: inviluppo convesso) – morphological filtering – thinning – pruning 3 Mathematic Morphology mathematical framework used for: • pre-processing – noise filtering, shape simplification, ... • enhancing object structure – skeletonization, convex hull... • segmentation – watershed,… • quantitative description – area, perimeter, ... 4 Z2 and Z3 • set in mathematic morphology represent objects in an image – binary image (0 = white, 1 = black) : the element of the set is the coordinates (x,y) of pixel belong to the object a Z2 • gray-scaled image : the element of the set is the coordinates (x,y) of pixel belong to the object and the gray levels a Z3 Y axis Y axis X axis Z axis X axis 5 Basic Set Operators Set operators Denotations A Subset B A ⊆ B Union of A and B C= A ∪ B Intersection of A and B C = A ∩ B Disjoint A ∩ B = ∅ c Complement of A A ={ w | w ∉ A} Difference of A and B A-B = {w | w ∈A, w ∉ B } Reflection of A Â = { w | w = -a for a ∈ A} Translation of set A by point z(z1,z2) (A)z = { c | c = a + z, for a ∈ A} 6 Basic Set Theory 7 Reflection and Translation -

Mathematical Morphology

Contents List of algorithms iii 13 Mathematical morphology 1 13.1 Basic morphological concepts 2 13.2 Four morphological principles 7 13.3 Binary dilation and erosion 10 13.3.1 Dilation 10 13.3.2 Erosion 17 13.3.3 Hit-or-miss transformation 24 13.3.4 Opening and closing 26 13.4 Gray-scale dilation and erosion 29 Contents ii 13.4.1 Top surface, umbra, and gray-scale dilation and erosion 32 13.4.2 Opening and Closing 42 13.4.3 Top hat transformation 43 13.5 Skeletons and object marking 47 13.5.1 Homotopic transformations 47 13.5.2 Skeleton, maximal ball 49 13.5.3 Thinning, thickening, and homotopic skeleton 54 13.5.4 Quench function, ultimate erosion 63 13.5.5 Ultimate erosion and distance functions 69 13.5.6 Geodesic transformations 73 13.5.7 Morphological reconstruction 76 13.6 Granulometry 80 13.7 Morphological segmentation and watersheds 86 13.7.1 Particles segmentation, marking, and watersheds 86 13.7.2 Binary morphological segmentation 87 13.7.3 Gray-scale segmentation, watersheds 93 13.8 Summary 96 13.9 References 98 List of algorithms Chapter 13 Mathematical morphology 13.1 Basic morphological concepts 2 13.1 Basic morphological concepts • started to develop in the late 1960s • based on the algebra of non-linear operators • operates on object shape • in some respects supersedes the linear algebraic system of convolution • can be used for: – pre-processing – segmentation using object shape – object quantification – in some ways works better and faster than standard approaches • slightly different algebra may be confusing 13.1 Basic morphological concepts 3 Morphological operations use: • Image pre-processing (noise filtering, shape simplification). -

Extending the Morphological Hit-Or-Miss Transform to Deep

IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS 1 c 2020 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. arXiv:1912.02259v2 [cs.CV] 28 Sep 2020 IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS 2 Extending the Morphological Hit-or-Miss Transform to Deep Neural Networks Muhammad Aminul Islam, Member, IEEE, Bryce Murray, Student Member, IEEE, Andrew Buck, Member, IEEE, Derek T. Anderson, Senior Member, IEEE, Grant Scott, Senior Member, IEEE, Mihail Popescu, Senior Member, IEEE, James Keller, Life Fellow, IEEE, Abstract—While most deep learning architectures are built on pass filters), frequency-orientation filtering via the Gabor, etc. convolution, alternative foundations like morphology are being In a continuous space, it is defined as the integral of two explored for purposes like interpretability and its connection functions—an image and a filter in the context of image to the analysis and processing of geometric structures. The morphological hit-or-miss operation has the advantage that it processing—after one is reversed and shifted, whereas in takes into account both foreground and background information discrete space, the integral realized via summation. CNNs when evaluating target shape in an image. Herein, we identify progressively learn more complex features in deeper layers limitations in existing hit-or-miss neural definitions and we with low level features such as edges in the earlier layers formulate an optimization problem to learn the transform relative and more complex shapes in the later layers, which are to deeper architectures. -

Elliptical Adaptive Structuring Elements for Mathematical Morphology

ISSN: 1402-1544 ISBN 978-91-7583-XXX-X Se i listan och fyll i siffror där kryssen är DOCTORAL T H E SIS Department of Computer Science, Electrical and Space Engineering Division of Signals and Systems StructuringAdaptive Elements for Mathematical Morphology Anders Landström Elliptical ISSN 1402-1544 Elliptical Adaptive Structuring Elements ISBN 978-91-7583-047-6 (print) ISBN 978-91-7583-048-3 (pdf) for Mathematical Morphology Luleå University of Technology 2014 Anders Landström Elliptical Adaptive Structuring Elements for Mathematical Morphology Anders Landstr¨om Dept. of Computer Science, Electrical and Space Engineering Lule˚aUniversity of Technology Lule˚a,Sweden Supervisors: Matthew J. Thurley and H˚akan Jonsson Printed by Luleå University of Technology, Graphic Production 2014 ISSN 1402-1544 ISBN 978-91-7583-047-6 (print) ISBN 978-91-7583-048-3 (pdf) Luleå 2014 www.ltu.se To my mother and father, for all their support, and Elizabeth, for all her patience. Thank you. iii iv Abstract As technological advances drives the evolution of sensors as well as the systems using them, processing and analysis of multi-dimensional signals such as images becomes more and more common in a wide range of applications ranging from consumer products to automated systems in process industry. Image processing is often needed to enhance or suppress features in the acquired data, enabling better analysis of the signals and thereby better use of the system in question. Since imaging applications can be very different, image processing covers a wide range of methods and sub-fields. Mathematical morphology constitutes a well defined framework for non-linear im- age processing based on set relations.