Development of a Musical Rhythm Game for iOS

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Programmed Moves: Race and Embodiment in Fighting and Dancing Videogames

UC Berkeley Electronic Theses and Dissertations

Title: Programmed Moves: Race and Embodiment in Fighting and Dancing Videogames
Permalink: https://escholarship.org/uc/item/5pg3z8fg
Author: Chien, Irene Y.
Publication Date: 2015
Peer reviewed | Thesis/dissertation

A dissertation by Irene Yi-Jiun Chien, submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Film and Media and the Designated Emphasis in New Media in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Linda Williams, Chair; Professor Kristen Whissel; Professor Greg Niemeyer; Professor Abigail De Kosnik. Spring 2015.

Abstract

Programmed Moves examines the intertwined history and transnational circulation of two major videogame genres, martial arts fighting games and rhythm dancing games. Fighting and dancing games both emerge from Asia, and they both foreground the body. They strip down bodily movement into elemental actions like stepping, kicking, leaping, and tapping, and make these the form and content of the game. I argue that fighting and dancing games point to a key dynamic in videogame play: the programming of the body into the algorithmic logic of the game, a logic that increasingly organizes the informatic structure of everyday work and leisure in a globally interconnected information economy.

Digital Entertainment Meets Social Media

June 2009. Digital Entertainment Meets Social Media. Paul Verna, Senior Analyst.

Executive Summary: Three pillars of the entertainment industry (music, movies and video games) are facing disruptive changes that are prompting them to explore opportunities in digital distribution, digital marketing and social media.

The recorded music industry is the most beleaguered of all. Knocked off balance by the emergence of the MP3 in the late 1990s, it has not recovered since. CD sales are plummeting and digital revenues are not picking up the slack. The industry faces an uncertain future as it grasps at alternative business models.

The film business is facing problems of its own, but it is fundamentally healthier. A period of sustained growth in Hollywood's two core businesses (box-office receipts and DVD sales/rentals) has stopped and new physical and digital formats have not taken a solid place in the revenue mix.

The video game industry has escaped much of this trouble.

Key eMarketer Numbers: Digital Entertainment
$4.56 billion: US digital music spending in 2013, up from $3.00 billion in 2009
82.6%: US digital music spending as a percent of total US recorded music spending in 2013, up from 41.0% in 2009
$5.52 billion: Total US recorded music spending in 2013, down from $7.31 billion in 2009
$2.65 billion: US online advertising spending by MPAA-member film studios and their subsidiaries in 2013, up from $1.24 billion in 2009
14.2%: Percent of film studio advertising budgets spent online in 2013, up from 7.7% in 2009
$681 million: US in-game advertising spending in 2013, up from $443 million in 2009

Tap Tap Revenge 4 PC Download

Tap Tap Revenge 2 on Scratch by djscope. 4. Butterfly 5. Thriller 6. All Song Tour. You can also play a 2-player Download this project file. Play Tap Tap Revenge Online on - Tap Tap Revenge Online is a free Ovumdivinorum. 6. Kick It. 5. Trash Pilots. 4. Bikini Line. How To Download Tap Tap Revenge 4 FREE iOS 9. Download Tap Tap Revenge 4 for Android. Tap Tap Revenge 4 is a free game app for Android that features music-based rhythm gameplay. Download Tap Tap Revenge 4 Free and compact version of Guitar Hero for Android. Tap Tap Revenge 4 is a compact version of the classic Guitar Hero in. Tap tap revenge 4 online hack, Tap tap revenge 4 pc hack, Tap tap. Download tap tap revenge 4 for iphone. Tap tap revenge 4 Hack Download Cheat Tool MOD APK. Download free app Tap tap revenge 4 for mobile phone via PC, WAP or QR code. To get best games for Android, top 10 just sort games by Popularity. Tap Tap Revenge 4 for iPhone, free and safe download. Tap Tap Revenge 4 latest version: Play along to your favorite songs! The developer of Tap Tap. You can then head back to Tap Tap Revenge 3 to enjoy all the songs you previously downloaded. Enjoy! If you have never owned Tap Tap Revenge 3 before.

Music Games Rock: Rhythm Gaming's Greatest Hits of All Time

“Cementing gaming’s role in music’s evolution, Steinberg has done pop culture a laudable service.” – Nick Catucci, Rolling Stone

MUSIC GAMES ROCK: RHYTHM GAMING’S GREATEST HITS OF ALL TIME
By SCOTT STEINBERG, Author of Get Rich Playing Games
Feat. Martin Mathers and Nadia Oxford
Foreword by ALEX RIGOPULOS, Co-Creator, Guitar Hero and Rock Band

Praise for Music Games Rock

“Hits all the right notes—and some you don’t expect. A great account of the music game story so far!” – Mike Snider, Entertainment Reporter, USA Today

“An exhaustive compendia. Chocked full of fascinating detail...” – Alex Pham, Technology Reporter, Los Angeles Times

“It’ll make you want to celebrate by trashing a gaming unit the way Pete Townshend destroys a guitar.” – Jason Pettigrew, Editor-in-Chief, ALTERNATIVE PRESS

“I’ve never seen such a well-collected reference... it serves an important role in letting readers consider all sides of the music and rhythm game debate.” – Masaya Matsuura, Creator, PaRappa the Rapper

“A must read for the game-obsessed...” – Jermaine Hall, Editor-in-Chief, VIBE

All Rights Reserved © 2011 by Scott Steinberg
“Behind the Music: The Making of Sex ‘N Drugs ‘N Rock ‘N Roll” © 2009 Jon Hare

No part of this book may be reproduced or transmitted in any form or by any means – graphic, electronic or mechanical – including photocopying, recording, taping or by any information storage retrieval system, without the written permission of the publisher.

Tap Tap Revenge 3

With more than 15 million downloads, Tap Tap Revenge is by far the most popular game on the iPhone and iPod touch. Tap Tap Revenge 3 is the first major game to tap into the App Store’s new in-app commerce capabilities to offer a catalog of great music from big name artists. Tap Tap Revenge 3 is also the most fun social game on the App Store, offering awesome new online gaming with bombs and weapons to disrupt competitors, integrated chat rooms and profile browsing, avatars, achievements and levels.

Features:
• Awesome new online mode including bombs and weapons, integrated chat, profile browsing and private messaging
• A new system of avatars, achievements and levels to let gamers stand out among the crowd
• Dozens of new features and game enhancements, including 90-second quick games and instant game replay among others
• A fresh look and feel, terrific performance
• Pricing: $0.99

The Music:
• Over 100 free downloadable tracks
• A catalog of premium tracks featuring Lady Gaga, Coldplay, Blink 182, Smashing Pumpkins, Johnny Cash, Asher Roth, Tiësto and dozens of other great artists spanning a broad range of musical genres. 2-track bundles for $0.99, 6-track bundles for $2.99
• All premium tracks come with their own theme
• New free and premium tracks every week

About Tapulous
Tap Tap Revenge 3 is produced by Tapulous, the leader in music and social games on the iPhone. Tapulous is angel funded and based in Palo Alto, CA.

Tap Tap Revenge 3: Most fun social game on App Store
• Bombs and weapons: Flash bombs, dead silence, explosions, green goo...

Filthy Lucre? Innovative Communities, Identity, and Commercialization

University of Pennsylvania ScholarlyCommons. Management Papers, Wharton Faculty Research, 2016.

Filthy Lucre? Innovative Communities, Identity, and Commercialization
Ethan Mollick, University of Pennsylvania

Part of the Management Sciences and Quantitative Methods Commons.

Recommended Citation: Mollick, E. (2016). Filthy Lucre? Innovative Communities, Identity, and Commercialization. Organization Science, 27 (6), 1472-1487. http://dx.doi.org/10.1287/orsc.2016.1100

This paper is posted at ScholarlyCommons: https://repository.upenn.edu/mgmt_papers/188

Abstract

Online communities play an increasingly important role in developing innovation. However, relatively little is known about the ways in which community affiliation influences how innovations and products generated in these communities are commercialized. By examining open source software (OSS) as an example of an innovation community and using both a quasi experiment and a longitudinal survey, I seek to shed light on this issue. In the quasi experiment, using the launch of the Apple App Store, I find a decreased propensity toward commercialization among individuals associated with online community innovation. I then examine the mechanisms for this decreased commercialization with a novel longitudinal survey of OSS community members. Despite the history of OSS as an anticommercial community, I do not find that anticommercial attitudes play a role in commercialization decisions. Instead, differences in entrepreneurial self-identity have large significant effects on the propensity to commercialize. I conclude with a discussion of the implications of these findings for the literatures on both entrepreneurial identity and community innovation.

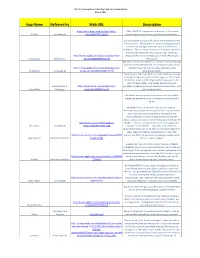

Subscriber App List in PDF Format

The Aha! Connection Subscriber App recommendations, March 2011

App Name: 11 Alive
Referred by: Judi Edwards
Web URL: http://itunes.apple.com/us/app/11alive-wx/id384677791?mt=8
Description: 11Alive WXIA TV is pleased to announce a full featured weather app for the iPhone and iPod touch platforms.

App Name: 1Password
Referred by: Mike Martin
Web URL: http://itunes.apple.com/us/app/1password-for-iphone/id285897618?mt=8
Description: Use one password to store all of your other passwords and important info. 1Password is an award-winning password and identity manager with more than 1 million users worldwide. This is a special edition of 1Password *just* for iPhone and iPod touch. If you own an iPad, check out 1Password Pro, our universal app for iPad, iPhone, and iPod touch!

App Name: All Recipes
Referred by: Judi Edwards
Web URL: http://itunes.apple.com/us/app/allrecipes-com-dinner-spinner/id299515267?mt=8
Description: Allrecipes.com Dinner Spinner is a fun and useful recipe app from the world’s #1 food site, delivering thousands of our members’ favorite quick and easy recipes to your iPhone/iPod Touch.

App Name: Angry Birds
Referred by: Judi Edwards, S. Strasburg
Web URL: http://itunes.apple.com/us/app/angry-birds/id343200656?mt=8
Description: The survival of the Angry Birds is at stake. Dish out revenge on the green pigs who stole the Birds’ eggs. Use the unique destructive powers of the Angry Birds to lay waste to the pigs’ fortified castles. Angry Birds features hours of gameplay, challenging physics-based castle demolition, and lots of replay value.

AP Mobile news helps you keep up-to-date with what's happening anywhere, in your hometown and across the globe.

Control iPad Notifications Have You Ever Played Tap Tap Revenge on the iPhone? Good, Then You Know How Annoying Notifications Ca

Control iPad notifications
Have you ever played Tap Tap Revenge on the iPhone? Good, then you know how annoying notifications can be. These messages pop up on the iPad as well, but you can control them. Go to Settings, Notifications, and configure which alerts (and accompanying sounds) will appear.

Home Sharing
First, turn on Home Sharing in iTunes (Advanced menu) and on your iPad (Settings > iPod and enter your Apple ID). Next, launch the iPod app on your iPad. In the left column, find the little house with "Library" next to it. Tap on that and then on one of the Shared Libraries. It might take a few minutes to update, but then you can watch and listen to all of that Library's media on your iPad.

Quick volume mute
When the iPad first launched, there was no dedicated mute button anywhere on the device. However, with iOS 4.3 Apple has given you the choice of using the lock switch to mute the sound or lock the iPad's orientation. Either way, a good tip for quickly muting your iPad is to press and hold the Volume-down button.

Use an Apple keyboard
Missing a real keyboard? Any Apple Wireless Keyboard will work with the iPad. In fact, your iPad will work with any Bluetooth keyboard. Some iPad cases have a keyboard built in, which can be handy.

AirPlay: Stream movies, photos and music
Whenever you see the AirPlay icon on your iPad it means you can stream whatever media you're viewing to your Apple TV, which is usually connected to your living room TV.

Disney Acquires iPhone Game Maker Tapulous, 2 July 2010, by Ryan Nakashima, AP Business Writer

Disney acquires iPhone game maker Tapulous
2 July 2010, by Ryan Nakashima, AP Business Writer

"It definitely is a strategic move and it highlights the importance we put on mobile," Steve Wadsworth, president of Disney Interactive Media Group, told The Associated Press. The initial focus will be on making games for Apple Inc.'s iPhone, iPad and other emerging mobile platforms, he said.

Tapulous already has 30 million users on Apple platforms with games such as Tap Tap Revenge, for which it has put out versions with artists such as Metallica, Coldplay, Lady Gaga and Justin Bieber. A new game called Riddim Ribbon features The Black Eyed Peas.

Tapulous Inc.'s small staff will remain at the company's headquarters in Palo Alto, which Wadsworth says is a benefit because it is in the high-tech developer community around San Francisco. Disney also owns Pixar Animation Studios in nearby Emeryville. He did not disclose the terms of the acquisition.

Tapulous founder and CEO Bart Decrem and Chief Operating Officer Andrew Lacy will take on mobile business development roles at Disney as part of the deal, Wadsworth said.

As a possible benefit, Disney could usher artists such as Plain White T's from its label, Hollywood Records, onto Tap Tap platforms, but Wadsworth declined to speculate on future products.

Photo caption: This undated screen shot released by Tapulous shows a new finger-tapping music application for the iPhone called "Tap Tap Revenge: Metallica." The Walt Disney Co. said Thursday July 1, 2010 it acquired Tapulous, to bolster its ability to create mobile games and gain another toehold in Silicon Valley. (AP Photo/Tapulous,

iLounge 2008 Buyers' Guide

100 Best Apps + Games

There are over 5,500 third-party applications in the iPod touch and iPhone App Store, the most expensive of which was a $999.99 program called “I Am Rich.” Apple pulled that title, declaring it useless, but left hundreds of other clunkers up for download. Some are free. Most sell for $20 or less. Few users have time to sort through them. Guess who’s been scouring the Store every week since it opened? That’s right: lucky, lucky us. We’ve actually been watching iTunes for new software since 2006, downloading every single iPod Game that Apple released. Now we’ve assembled a list of the 100 best apps and games we’ve tested - for iPhones, iPod touches, and Click Wheel iPods - focusing on titles with mainstream appeal rather than ones made for narrow audiences. Inside, you’ll see only the good stuff, including games - the Store’s most popular genre - and apps that let you create art, share photos, record audio, stream music, buy movie tickets, and much more.

2009 Buyers’ Guide: 100 best apps + games

Trism: The iPhone’s best original puzzle game has you match colored triangles by tilting and touching the screen. Multiple modes, great fun.

Aqua Forest: Mixing Japanese cartoon graphics and an amazing fluid simulator, this unique puzzle game needs turns and touches

Tetris: Better than the many versions that have come before, this one adds touch- and shake-controlled attacks to help

Bubble Bash: Available for iPods and iPhones, this bubble-busting game has tropical themes and lots of levels; it’s aim- and

The Economics of Giving It Away: In a Battered Economy, Free Goods and Services Online Are More Attractive Than Ever

ECONOMY | JANUARY 31, 2009

The Economics of Giving It Away
In a battered economy, free goods and services online are more attractive than ever. So how can the suppliers make a business model out of nothing?
By CHRIS ANDERSON

Over the past decade, we have built a country-sized economy online where the default price is zero -- nothing, nada, zip. Digital goods -- from music and video to Wikipedia -- can be produced and distributed at virtually no marginal cost, and so, by the laws of economics, price has gone the same way, to $0.00. For the Google Generation, the Internet is the land of the free.

Which is not to say companies can't make money from nothing. Gratis can be a good business. How? Pretty simple: The minority of customers who pay subsidize the majority who do not. Sometimes that's two different sets of customers, as in the traditional media model: A few advertisers pay for content so lots of consumers can get it cheap or free. The concept isn't new, but now that same model is powering everything from photo sharing to online bingo. The last decade has seen the extension of this "two-sided market" model far beyond media, and today it is the revenue engine for all of the biggest Web companies, from Facebook and MySpace to Google itself.

In other cases, the same digital economics have spurred entirely new business models, such as "Freemium," a free version supported by a paid premium version. This model uses free as a form of marketing to put the product in the hands of the maximum number of people, converting just a small fraction to paying customers.

What Do I Do After App Is Downloaded Question: Q: Photos App Won't Show iPhone Photos

Question: Q: Photos app won't show iPhone photos.

Trying to import photos from iPhone 6 to the Photos app on MacBook Pro. The iPhone shows up, "open Photos for this device" is ticked, import to library (which is contained on external HDD). All good. Why don't the photos show up? The 'import selected' and 'import all' are obviously greyed out as it is not seeing any photos. All software is updated (iTunes, iOS, Photos and iPhone). There are dozens of pics on the iPhone but they don't show up. Incidentally, I have also done Applications, Image Capture: same thing, images don't show. Thanks.

Posted on Mar 11, 2018 4:46 AM.

All replies

"All software is updated (iTunes, iOS, photos and iPhone)"
Could you please post the version numbers? "Updated" could mean the last version of the currently installed system, not necessarily the most recent system. Are you running macOS 10.13.3 and iOS 11.2.5 and 12.7.3? Are your photos and videos in the new space-saving HEIC/HEVC format (Using HEIF or HEVC media on Apple devices - Apple Support)?

"Why don't the photos show up? The 'import selected' and 'import all' are obviously greyed out as it is not seeing any photos."

Question: Q: Apps are "installed" but not showing up on iPhone.

I'd search my phone (built-in Spotlight) and check every page and no go. The issue is even more apparent now with Apple's built-in app-management tool.