Using Context to Enhance File Search

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

PDF-XChange Viewer

PDF-XChange Viewer © 2001-2011 Tracker Software Products Ltd North/South America, Australia, Asia: Tracker Software Products (Canada) Ltd., PO Box 79 Chemainus, BC V0R 1K0, Canada Sales & Admin Tel: Canada (+00) 1-250-324-1621 Fax: Canada (+00) 1-250-324-1623 European Office: 7 Beech Gardens Crawley Down., RH10 4JB Sussex, United Kingdom Sales Tel: +44 (0) 20 8555 1122 Fax: +001 250-324-1623 http://www.tracker-software.com [email protected] Support: [email protected] Support Forums: http://www.tracker-software.com/forum/ PDF-XChange Viewer v2.5x Table of Contents: Introduction; Important! Free vs. Pro version; What Version Am I Running?; Safety Feature; Notice!; Files List; Latest (available) Release Notes

Lookeen Desktop Search

Lookeen Desktop Search Find your files faster! User Benefits Save time by simultaneously searching for documents on your hard drive, in file servers and the network. Lookeen can also search Outlook archives, the Exchange Search with fast and reliable Server and Public Folders. Advanced filters and wildcard options make search more Lookeen technology powerful. With Lookeen you’ll turn ‘search’ into ‘find’. You’ll be able to manage and organize large amounts of data efficiently. Employees will save valuable time usually Find your information in record spent searching to work on more important tasks. time thanks to real-time indexing Lookeen desktop search can also Search your desktop, Outlook be integrated into Outlook The search tool for Windows files and Exchange folders 10, 8, 7 and Vista simultaneously Ctrl+Ctrl is back: instantly launch Edit and save changes to Lookeen from documents in Lookeen preview anywhere on your desktop Save and re-use favorite queries and access them with short keys View all correspondence with individuals or groups at the push of a button Create one-click summaries of email correspondences Start saving time and money immediately For Companies Features Business Edition Desktop search software compatible with Powerful search in virtual environments like Compatible with standard and virtual Windows 10, 8, 7 and Vista Citrix and VMware desktops like Citrix, VMware and Terminal Servers. Simplified roll out through exten- Optional add-in to Microsoft Outlook 2016, Simple, user friendly interface gives users a sive group directives and ADM files. 2013, 2010, 2007 or 2003 and Office 365 unified view over multiple data sources Automatic indexing of all files on the hard Clear presentation of search results drive, network, file servers, Outlook PST/OST- Enterprise Edition Full fidelity preview option archives, Public Folders and the Exchange Scans additional external indexes. -

Towards the Ontology Web Search Engine

TOWARDS THE ONTOLOGY WEB SEARCH ENGINE Olegs Verhodubs [email protected] Abstract. The project of the Ontology Web Search Engine is presented in this paper. The main purpose of this paper is to develop such a project that can be easily implemented. Ontology Web Search Engine is software to look for and index ontologies in the Web. OWL (Web Ontology Languages) ontologies are meant, and they are necessary for the functioning of the SWES (Semantic Web Expert System). SWES is an expert system that will use found ontologies from the Web, generating rules from them, and will supplement its knowledge base with these generated rules. It is expected that the SWES will serve as a universal expert system for the average user. Keywords: Ontology Web Search Engine, Search Engine, Crawler, Indexer, Semantic Web I. INTRODUCTION The technological development of the Web during the last few decades has provided us with more information than we can comprehend or manage effectively [1]. Typical uses of the Web involve seeking and making use of information, searching for and getting in touch with other people, reviewing catalogs of online stores and ordering products by filling out forms, and viewing adult material. Keyword-based search engines such as YAHOO, GOOGLE and others are the main tools for using the Web, and they provide with links to relevant pages in the Web. Despite improvements in search engine technology, the difficulties remain essentially the same [2]. Firstly, relevant pages, retrieved by search engines, are useless, if they are distributed among a large number of mildly relevant or irrelevant pages. -

An Activity Based Data Model for Desktop Querying (Extended Abstract)

An activity based data model for desktop querying (Extended Abstract) Sibel Adalı¹ and Maria Luisa Sapino² ¹ Rensselaer Polytechnic Institute, 110 8th Street, Troy, NY 12180, USA, [email protected] ² Università di Torino, Corso Svizzera, 185, I-10149 Torino, Italy, [email protected] 1 Introduction With the introduction of a variety of desktop search systems by popular search engines as well as the Mac OS operating system, it is now possible to conduct keyword search across many types of documents. However, this type of search only helps the users locate a very specific piece of information that they are looking for. Furthermore, it is possible to locate this information only if the document contains some keywords and the user remembers the appropriate keywords. There are many cases where this may not be true especially for searches involving multimedia documents. However, a personal computer contains a rich set of associations that link files together. We argue that these associations can be used easily to answer more complex queries. For example, most files will have temporal and spatial information. Hence, files created at the same time or place may have relationships to each other. Similarly, files in the same directory or people addressed in the same email may be related to each other in some way. Furthermore, we can define a structure called “activities” that makes use of these associations to help user accomplish more complicated information needs. Intuitively, we argue that a person uses a personal computer to store information relevant to various activities she or he is involved in.

dtSearch Desktop/dtSearch Network Manual

dtSearch Desktop dtSearch Network Version 7 Copyright 1991-2021 dtSearch Corp. www.dtsearch.com SALES 1-800-483-4637 (301) 263-0731 Fax (301) 263-0781 [email protected] TECHNICAL (301) 263-0731 [email protected] Table of Contents 1. Getting Started: Quick Start; Installing dtSearch on a Network; Automatic deployment of dtSearch on a Network; Command-Line Options; Keyboard Shortcuts. 2. Indexes: What is a Document Index?; Creating an Index; Caching Documents and Text in an Index; Indexing Documents; Noise Words; Scheduling Index Updates. 3. Indexing Web Sites: Using the Spider to Index Web Sites; Spider Options; Spider Passwords; Login Capture. 4. Sharing Indexes on a Network: Creating a Shared Index; Sharing Option Settings; Index Library Manager; Searching Using dtSearch Web. 5. Working with Indexes: Index Manager; Recognizing an Existing Index; Deleting an Index; Renaming an Index; Compressing an Index; Verifying an Index; List Index Contents; Merging Indexes. 6. Searching for Documents: Using the Search Dialog Box; Browse Words; More Search Options; Search History; Searching for a List of Words. 7.

Comparison of Indexers

Comparison of indexers Beagle, JIndex, metaTracker, Strigi Michal Pryc, Xusheng Hou Sun Microsystems Ltd., Ireland November, 2006 Updated: December, 2006 Table of Contents: 1. Introduction; 2. Indexers; 3. Test environment; 3.1 Machine; 3.2 CPU; 3.3 RAM; 3.4 Disk; 3.5 Kernel; 3.6 GCC

List of Search Engines

A blog network is a group of blogs that are connected to each other in a network. A blog network can either be a group of loosely connected blogs, or a group of blogs that are owned by the same company. The purpose of such a network is usually to promote the other blogs in the same network and therefore increase the advertising revenue generated from online advertising on the blogs.[1] List of search engines From Wikipedia, the free encyclopedia For popular web search engines, see Most popular Internet search engines. This is a list of search engines, including web search engines, selection-based search engines, metasearch engines, desktop search tools, and web portals and vertical market websites that have a search facility for online databases. Contents 1 By content/topic: 1.1 General; 1.2 P2P search engines; 1.3 Metasearch engines; 1.4 Geographically limited scope; 1.5 Semantic; 1.6 Accountancy; 1.7 Business; 1.8 Computers; 1.9 Enterprise; 1.10 Fashion; 1.11 Food/Recipes; 1.12 Genealogy; 1.13 Mobile/Handheld; 1.14 Job; 1.15 Legal; 1.16 Medical; 1.17 News; 1.18 People; 1.19 Real estate / property; 1.20 Television; 1.21 Video Games. 2 By information type: 2.1 Forum; 2.2 Blog; 2.3 Multimedia; 2.4 Source code; 2.5 BitTorrent; 2.6 Email; 2.7 Maps; 2.8 Price; 2.9 Question and answer

iLeak: A Lightweight System for Detecting Inadvertent Information Leaks

iLeak: A Lightweight System for Detecting Inadvertent Information Leaks Vasileios P. Kemerlis, Vasilis Pappas, Georgios Portokalidis, and Angelos D. Keromytis Network Security Lab Computer Science Department Columbia University, New York, NY, USA {vpk, vpappas, porto, angelos}@cs.columbia.edu Abstract—Data loss incidents, where data of sensitive nature are exposed to the public, have become too frequent and have caused damages of millions of dollars to companies and other organizations. Repeatedly, information leaks occur over the Internet, and half of the time they are accidental, caused by user negligence, misconfiguration of software, or inadequate understanding of an application’s functionality. This paper presents iLeak, a lightweight, modular system for detecting inadvertent information leaks. Unlike previous solutions, iLeak builds on components already present in modern computers. In particular, we employ system tracing facilities and data indexing services, and combine them in a novel way to detect data leaks. Our design consists of three components: uaudits are responsible for capturing the information that exits the system, while Inspectors use the indexing service to identify if the [...] Most research on detecting and preventing information leaks has focused on applying strict information flow control (IFC). Sensitive data are labeled and tracked throughout an application’s execution to detect their illegal propagation (e.g., their transmission over the network). Approaches such as Jif [6] and JFlow [7] achieve IFC by introducing extensions to the Java programming language, while others enforce it dynamically [8], or propose new operating system (OS) designs [9]. Fine-grained IFC mechanisms require that sensitive data are labeled by the user beforehand, but as the amount of data stored in desktops continuously grows, locating documents and files containing personal data becomes burdensome.

Case Studies

® 3rd party developer case studies click on the icons below to view a category Technical and Medical Documentation Information Management Financial, Trade and News Legal Recruiting and Staffing Non-Profit and Education General Developer Tools and Resources International Language Developer Tools International and Other Government Forensics, Intelligence and Security The Smart Choice for Text Retrieval ® since 1991 dtSearchUntitled Document Technical and Medical Documentation Case Studies Spar Aerospace enlists dtSearch for aircraft contract. Spar Aerospace, a subsidiary of L-3 Communications, is a leading aviation services company. Spar had a contract to update the maintenance publications for an aircraft fleet, both on the Web and on laptop replications in the field. “Search requirements for this project were extensive and stringent. We investigated most of the ‘high end’ search engines out there. We decided to use dtSearch ... I have been very impressed by the dtSearch support organization ... Answers are always appropriate, straight forward, clear and unambiguous.” From Computerworld Canada: “Aviation firm earns wings with new text search ... Spar chose dtSearch Corp.’s text-search product, dtSearch Web, to serve the information up in an easily indexed manner online.” For “‘a requirement that we have to run this thing on stand-alone PCs,’ ... Spar signed on for dtSearch Publish.” Contegra Systems succeeds in “boosting support and service with better search and navigation” through dtSearch web-based implementation for Otis Elevator. -

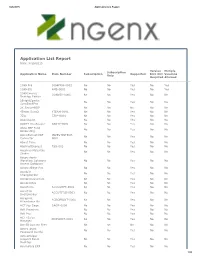

Application List Report

8/26/2015 Application List Report Application List Report Date: 8/26/2015 Version Mutiple Subscription Application Name Item Number Subscription Supported Info Not Versions Only Required Allowed 1099 Pro 1099PRO0001 No No Yes No Yes 1099Etc AMS0001 No No Yes No Yes 1099Convey CONVEY0001 No No Yes No No Desktop Edition 2BrightSparks No No Yes No No SyncBackPro 2X SecureRDP No No No No No 4Team Sync2 4TEAM0001 No No Yes No No 7Zip 7ZIP0001 No No Yes No No AbacusLaw No No Yes No No ABBYY FineReader ABBYY0001 No No Yes No No Abila MIP Fund No No Yes No No Accounting Able2Extract PDF INVESTINTECH No No Yes No No Converter 0001 About Time No No Yes No No AbstractExpress TSS003 No No Yes No No Accelrys Materials No No Yes No No Studio Accountants Workflow Solutions No No Yes No No Access Database AccountEdge Pro No No Yes No No Accubid No No Yes No No ChangeOrder Accubid Livecount No No Yes No No Accubid Pro No No Yes No No AccuTerm ACCUSOFT0001 No No Yes No No AccuTitle ACCUTITLE0001 No No Yes No No DocUploader Acroprint ACROPRINT0001 No No Yes No No Attendance Rx ACT! by Sage SAGE0006 No No Yes No No Act! Premium No No Yes No No Act! Pro No No Yes No No ACT! Sales PINPOINT0001 No No Yes No No Manager Act3D Lumion Free No No No No No Active State No No Yes No No Password Gorilla ActiveHelper Support Panel No No Yes No No Desktop Acumatica ERP No No Yes No No 1/33 8/26/2015 Application List Report AdaptCRM ADAPT0001 No No Yes No No Enterprise Address Accelerator/ Postal BLACKBAUD0004 No No Yes No No Saver Plugin for Raiser's Edge Adobe Acrobat ADOBE0024 No No Yes No No AutoPortfolio Adobe Acrobat Professional (v. -

Extracting Evidence Using Google Desktop Search

Chapter 4 EXTRACTING EVIDENCE USING GOOGLE DESKTOP SEARCH Timothy Pavlic, Jill Slay and Benjamin Turnbull Abstract Desktop search applications have improved dramatically over the last three years, evolving from time-consuming search applications to instantaneous search tools that rely extensively on pre-cached data. This paper investigates the extraction of pre-cached data for forensic purposes, drawing on earlier work to automate the process. The result is a proof-of-concept application called Google Desktop Search Evidence Collector (GDSEC), which interfaces with Google Desktop Search to convert data from Google’s proprietary format to one that is amenable to offline analysis. Keywords: Google Desktop Search, evidence extraction 1. Introduction Current desktop search utilities such as Windows Desktop Search, Google Desktop Search and Yahoo! Desktop Search differ from earlier tools in that user data is replicated and stored independently [1, 10]. Unlike the older systems that searched mounted volumes on-the-fly, the newer systems search pre-built databases, accelerating the search for user data with only a nominal increase in hard disk storage [5]. The replication of data in a search application has potential forensic applications – data stored independently within a desktop search application database often remains after the original file is deleted. In previous work [9], we examined the forensic possibilities of data stored within Google Desktop Search; in particular, we discussed the extraction of text from deleted word processing documents, thumbnails from deleted image files and the cache for HTTPS sessions. However, the format of the extracted data files does not allow for simple interpretation and analysis; therefore, the only sure method of extracting data was via the search application interface.

Foxit PDF IFilter for Desktop User Manual

Maintain control of all your content More Foxit PDF IFilter for Desktop © Foxit Software Incorporated. All Rights Reserved. No part of this document can be reproduced, transferred, distributed or stored in any format without the prior written permission of Foxit. Anti-Grain Geometry - Version 2.3, Copyright (C) 2002-2005 Maxim Shemanarev (http://www.antigrain.com). FreeType2 (freetype2.2.1), Copyright (C) 1996-2001, 2002, 2003, 2004 David Turner, Robert Wilhelm, and Werner Lemberg. LibJPEG (jpeg V6b 27-Mar-1998), Copyright (C) 1991-1998 Independent JPEG Group. ZLib (zlib1.2.2), Copyright (C) 1995-2003 Jean-loup Gailly and Mark Adler. Little CMS, Copyright (C) 1998-2004 Marti Maria. Kakadu, Copyright (C) 2001, David Taubman, The University of New South Wales (UNSW). PNG, Copyright (C) 1998-2009 Glenn Randers-Pehrson. LibTIFF, Copyright (C) 1988-1997 Sam Leffler and Copyright (C) 1991-1997 Silicon Graphics, Inc. Permission to copy, use, modify, sell and distribute this software is granted provided this copyright notice appears in all copies. This software is provided "as is" without express or implied warranty, and with no claim as to its suitability for any purpose. Contents: Chapter 1 - Overview; Operation Systems