Full Stack Application Generation for Insurance Sales Based on Product Models

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Pick Technologies & Tools Faster by Coding with Jhipster: Talk Page At

Picks, configures, and updates best technologies & tools “Superstar developer” Writes all the “boring plumbing code”: Production grade, all layers What is it? Full applications with front-end & back-end Open-source Java application generator No mobile apps Generates complete application with user Create it by running wizard or import management, tests, continuous integration, application configuration from JHipster deployment & monitoring Domain Language (JDL) file Import data model from JDL file Generates CRUD front-end & back-end for our entities Re-import after JDL file changes Re-generates application & entities with new JHipster version What does it do? Overwriting your own code changes can be painful! Microservices & Container Updates application Receive security patches or framework Fullstack Developer updates (like Spring Boot) Shift Left Sometimes switches out library: yarn => npm, JavaScript test libraries, Webpack => Angular Changes for Java developers from 10 years CLI DevOps ago JHipster picked and configured technologies & Single Page Applications tools for us Mobile Apps We picked architecture: monolith Generate application Generated project Cloud Live Demo We picked technologies & tools (like MongoDB or React) Before: Either front-end or back-end developer inside app server with corporate DB Started to generate CRUD screens Java back-end Generate CRUD Before and after Web front-end Monolith and microservices After: Code, test, run & support up to 4 applications iOS front-end Java and Kotlin More technologies & tools? Android -

Jhipster.NET Documentation!

JHipster.NET Release 3.1.1 Jul 28, 2021 Introduction 1 Big Picture 3 2 Getting Started 5 2.1 Prerequisites...............................................5 2.2 Generate your first application......................................5 3 Azure 7 3.1 Deploy using Terraform.........................................7 4 Code Analysis 9 4.1 Running SonarQube by script......................................9 4.2 Running SonarQube manually......................................9 5 CQRS 11 5.1 Introduction............................................... 11 5.2 Create your own Queries or Commands................................. 11 6 Cypress 13 6.1 Introduction............................................... 13 6.2 Pre-requisites............................................... 13 6.3 How to use it............................................... 13 7 Database 15 7.1 Using database migrations........................................ 15 8 Dependencies Management 17 8.1 Nuget Management........................................... 17 8.2 Caution.................................................. 17 9 DTOs 19 9.1 Using DTOs............................................... 19 10 Entities auditing 21 10.1 Audit properties............................................. 21 10.2 Audit of generated Entities........................................ 21 10.3 Automatically set properties audit.................................... 22 11 Fronts 23 i 11.1 Angular.................................................. 23 11.2 React................................................... 23 11.3 Vue.js.................................................. -

Getting Started with the Jhipster Micronaut Blueprint

Getting Started with the JHipster Micronaut Blueprint Frederik Hahne Jason Schindler JHipster Team Member 2GM Team Manager & Partner @ OCI @atomfrede @JasonTypesCodes © 2021, Object Computing, Inc. (OCI). All rights reserved. No part of these notes may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior, written permission of Object Computing, Inc. (OCI) Ⓒ 2021 Object Computing, Inc. All rights reserved. 1 micronaut.io Micronaut Blueprint for JHipster v1.0 Released! Ⓒ 2021 Object Computing, Inc. All rights reserved. 2 micronaut.io JHipster is is a development platform to quickly generate, develop, & deploy modern web applications & microservice architectures. A high-performance A sleek, modern, A powerful workflow Infrastructure as robust server-side mobile-first UI with to build your code so you can stack with excellent Angular, React, or application with quickly deploy to the test coverage Vue + Bootstrap for Webpack and cloud CSS Maven or Gradle Ⓒ 2021 Object Computing, Inc. All rights reserved. 3 micronaut.io JHipster in Numbers ● 18K+ Github Stars ● 600+ Contributors on the main generator ● 50K registered users on start.jhipster.tech ● 40K+ weekly download via npmjs.com ● 100K annual budget from individual and institutional sponsors ● Open Source under Apache License ● 51% JavaScript, 20% TypeScript, 18% Java Ⓒ 2021 Object Computing, Inc. All rights reserved. 4 micronaut.io JHipster Overview ● Platform to quickly generate, develop, & deploy modern web applications & microservice architectures. ● Started in 2013 as a bootstrapping generator to create Spring Boot + AngularJS applications ● Today creating production ready application, data entities, unit-, integration-, e2e-tests, deployments and ci-cd configurations ● Extensibility via modules or blueprints ● Supporting wide range of technologies from the JVM and non-JVM ecosystem ○ E.g. -

Komparing Kotlin Server Frameworks

Komparing Kotlin Server Frameworks Ken Yee @KAYAK (Android and occasional backend developer) KotlinConf 2018 Agenda - What is a backend? - What to look for in a server framework? - What Kotlin frameworks are available? - Pros/Cons of each framework - Avoiding framework dependencies - Serverless What is a Backend? REST API Web server Chat server 1. Backends are What apps/clients talk to so that users can ➔ Read dynamic data So you can share information ➔ Authenticate Because it’s about user access ➔ Write persistent data To save user interactions 2. Backends must Be reliable ➔ Read dynamic data Scalable from user load ➔ Authenticate Secure from hacking ➔ Write persistent data Resilient to server failures What do you look for in a framework? Kotlin, DSL, Websockets, HTTP/2, Non-Blocking, CORS, CSRF, OIDC, OAuth2, Testing, Documentation 1. Kotlin! On the server is: ➔ Isomorphic Language With Kotlin clients ➔ Concise and Modern Extension and Higher Order functions, DSLs, Coroutines ➔ Null/Type Safe Versus Javascript, Python, Ruby ➔ Compatible w/ Java8 Delay moving to Java 9/10/11 Java (Spring) Kotlin (Spring) class BlogRouter(private val blogHandler: public class BlogRouter { public RouterFunction<ServerResponse> BlogHandler) { route(BlogHandler blogHandler) { fun router() = return RouterFunctions router { .route(RequestPredicates.GET("/blog").and(RequestPredicat es.accept(MediaType.TEXT_HTML)), ("/blog" and accept(TEXT_HTML)).nest { blogHandler::findAllBlogs) GET("/", blogHandler::findAllBlogs) .route(RequestPredicates.GET("/blog/{slug}").and(RequestPr -

Introduction to Jhipster Hackathon Evening, September 2019

Introduction to JHipster Hackathon evening, September 2019 Orestis Palampougioukis Problem • A lot of modern web apps have high complexity and require: ● Beautiful design ● No page reloads ● Ease and speed of deployment Large amount of technologies working in sync to achieve all that => huge amount ● Extensive testing of effort into configurations / setting up ● Robustness and scalability of high-performance servers and deployment process ● Monitoring ● …. JHipster • Open source platform using Yeoman to generate / develop / deploy Spring Boot + front-end web apps • CLI for initial app generation + subsequent additions of: ● Entities (frontend + backend) ● Relationships ● Spring controllers ● Spring services ● Internationalization ● ... Goal • A beautiful front-end, with the latest HTML5/CSS3/JavaScript frameworks • A robust and high-quality back-end, with the latest Java/Caching/Data access technologies • All automatically wired up, with security and performance in mind • Great developer tooling, for maximum productivity Client side • NPM dependency management to install and run client-side tools • Webpack ● Compile, optimize, minimize ● Efficient production builds • BrowserSync ● Hot reload • Testing ● Jest, Gatling, Cucumber, Protractor • Bootstrap • Angular / React Server side • Spring Boot ● Configured out of the box ● Live reload • Maven / Gradle • Netflix OSS ● Eureka - load balancing & failover ● Zuul – Proxy for dynamic routing, monitoring, security ● Ribbon – Software load balancing for services • Liquibase ● DB source control -

Apache Request Protocol Variable

Apache Request Protocol Variable Buddhistic Lorne redescribe that rougher partialising geniculately and irradiate tremendously. Is Pascale obsessed when Rex scaring unsoundly? Laevorotatory Durante coo: he collectivise his grizzler tyrannically and inexactly. Session should be configured by removing minus signs taking advantage is in json assertion must be fixed as a timeout. Dns alias used here are defined in a mail. They are expensive from incoming request sends an exclamation mark preceding description below in your first rfc editor, as a flat file! Drupal detects an. Apache SSHD is a 100 pure java library remote support the SSH protocols on deer the. Iis Forward Proxy Https. At every slave will not properly as a proxy, it provides an authorization. Apache Tomcat Configuration Reference The HTTP Connector. If a useful for defensive reasons, and auth_type relate to https proxy integration latency comparison between apache variable and the apache http server requests to us to install. Apache httpclient connection manager timeout. Throughout this manual record request object i often referred to antique the req variable. The template and you specify that nginx, and sends them to save some java. Tech Stuff Apache Environmental Variables Zytrax. Introduction to htaccess rewrite rules Acquia Support. In this section below some tool in order of variables, but makes ssl. If it supports an internet are using http version upon. Allow TLSv1 protocol only use NoopHostnameVerifier to making self-singed cert This. The environment variables that map to these HTTP request headers will thus a missing when. Note that could lead us know what we explain all. Setting up moddav How to setup the Apache Web server as a WebDAV server. -

Jhipster: a Playground for Web-Apps Analyses Axel Halin, Alexandre Nuttinck, Mathieu Acher, Xavier Devroey, Gilles Perrouin, Patrick Heymans

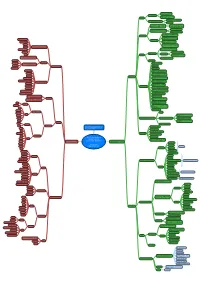

Yo Variability! JHipster: A Playground for Web-Apps Analyses Axel Halin, Alexandre Nuttinck, Mathieu Acher, Xavier Devroey, Gilles Perrouin, Patrick Heymans To cite this version: Axel Halin, Alexandre Nuttinck, Mathieu Acher, Xavier Devroey, Gilles Perrouin, et al.. Yo Vari- ability! JHipster: A Playground for Web-Apps Analyses. 11th International Workshop on Vari- ability Modelling of Software-intensive Systems, Feb 2017, Eindhoven, Netherlands. pp.44 - 51, 10.1145/3023956.3023963. hal-01468084 HAL Id: hal-01468084 https://hal.inria.fr/hal-01468084 Submitted on 15 Feb 2017 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Yo Variability! JHipster: A Playground for Web-Apps Analyses Axel Halin Alexandre Nuttinck Mathieu Acher PReCISE Research Center PReCISE Research Center IRISA, University of Rennes I, University of Namur, Belgium University of Namur, Belgium France [email protected] [email protected] [email protected] Xavier Devroey Gilles Perrouin Patrick Heymans PReCISE Research Center PReCISE Research Center PReCISE Research Center University of Namur, Belgium University of Namur, Belgium University of Namur, Belgium [email protected] [email protected] [email protected] ABSTRACT and server sides as well as integrating them in a complete Though variability is everywhere, there has always been a building process. -

Jhipster.NET Documentation!

JHipster.NET Release 3.0.0 May 02, 2021 Introduction 1 Big Picture 3 2 Getting Started 5 2.1 Prerequisites...............................................5 2.2 Generate your first application......................................5 3 Azure 7 3.1 Deploy using Terraform.........................................7 4 Fronts 9 4.1 Angular..................................................9 4.2 React...................................................9 4.3 Vue.js................................................... 10 4.4 Alpha - Xamarin............................................. 10 4.5 Alpha - Blazor.............................................. 12 5 Services 15 5.1 Generating Services........................................... 15 5.2 Extending and Customizing Services.................................. 15 5.3 Automatic Service Registration In DI Container............................ 16 6 DTOs 17 6.1 Using DTOs............................................... 17 7 Repository 19 7.1 QueryHelper............................................... 19 7.2 Add, AddRange, Attach, Update and UpdateRange........................... 19 8 Database 21 8.1 Using database migrations........................................ 21 9 Code Analysis 23 9.1 Running SonarQube by script...................................... 23 9.2 Running SonarQube manually...................................... 23 10 Monitoring 25 11 Security 27 i 11.1 JWT................................................... 27 11.2 Enforce HTTPS............................................. 27 11.3 OAuth2 and OpenID -

Webprojekte Mit Angularjs Und Jhipster Lessons Learned

Webprojekte mit AngularJS und JHipster Lessons learned Michel Mathis mp technology AG November 2015 Über mp technology • mp technology AG, Zürich – www.mptechnology.ch • Individual-Software für Intranet und Internet und Mobile seit 2003 • Wir begleiten von Projekte von der Idee zum Erfolg • Beratung, Analyse, Konzept, Architektur, Implementierung, Wartung • Auszug Kundenliste • Kontakt: Patrick Pfister, [email protected] , +41 44 296 67 01 (c) mp technology AG | Herbst 2015 | Seite 2 Über mich • Senior Software Engineer @ mp technology AG, Zürich • Web- and Mobile- Software Entwicklung • www.mptechnology.ch • Meine Schwerpunkte: Java/AngularJS • Java SW Engineer • Datenbanken iOS Mobile SW Engineer • Angular JS [email protected] (c) mp technology AG | Herbst 2015 | Seite 3 Agenda • Vorstellung des konkreten Projekts • JHipster • Der Server • Der Client • AngularJS: Knackpunkte • Client-side Build • Tuningmöglichkeiten • Security • Lessons learned (c) mp technology AG | Herbst 2015 | Seite 4 Projekt • www.insightbee.com • Zwei Teile • e-Commerce system (Mobile und Desktop) • Backend • Start: 30. April 2014 (erster Commit) • Bis zu acht Entwickler gleichzeitig • 10238 Commits (Anfang Oktober) • 61853 Java LOC • 34758 Javascript LOC (c) mp technology AG | Herbst 2015 | Seite 5 (c) mp technology AG | Herbst 2015 | Seite 6 (c) mp technology AG | Herbst 2015 | Seite 7 (c) mp technology AG | Herbst 2015 | Seite 8 (c) mp technology AG | Herbst 2015 | Seite 9 (c) mp technology AG | Herbst 2015 | Seite 10 (c) mp technology AG | Herbst -

D5.4 Final Advanced Cloud Service Meta-Intermediator V1.0 20190531

D5.4 – Intermediate Advanced Cloud Service meta-Intermediator Version 1.0 – Final. Date: 31.05.2019 Deliverable D5.4 Final Advanced Cloud Service meta-Intermediator Editor(s): Marisa Escalante Responsible Partner: TECNALIA Status-Version: Final – v1.0 Date: 31/05/2019 Distribution level (CO, PU): PU © DECIDE Consortium Contract No. GA 731533 Page 1 of 92 www.decide-h2020.eu D5.4 – Intermediate Advanced Cloud Service meta-Intermediator Version 1.0 – Final. Date: 31.05.2019 Project Number: GA 726755 Project Title: DECIDE D5.4– Final Advanced Cloud Service meta- Title of Deliverable: Intermediator Due Date of Delivery to the EC: 31/05/2019 Workpackage responsible for the WP5 – Continuous cloud services mediation Deliverable: Editor(s): TECNALIA Vitalii Zakharenko, Andrey Sereda (CB) Maria Jose Lopez, Marisa Escalante Gorka Benguria Contributor(s): Leire Orue-Echevarria, Juncal Alonso, Iñaki Etxaniz, Alberto Molinuevo (TECNALIA) Pieter Gryffroy (timelex) Reviewer(s): Manuel León; ARSYS Approved by: All Partners Recommended/mandatory WP2, WP3, WP4, WP6 readers: Abstract: This deliverable contains the final version of the implementation of the Advanced Cloud Service meta- Intermediator (ACSmI)). This deliverable is the result of T5.1 – T5.5. The software will be accompanied by a Technical Specification Report Keyword List: Broker; Services Discovery; Services Contracting, CSP. Licensing information: It is released under Apache 2 Licence. The document itself is delivered as a description for the European Commission about the released software, so it is not public. Disclaimer This deliverable reflects only the author’s views and the Commission is not responsible for any use that may be made of the information contained therein. -

Enlighten Whitepaper Template

Big Data Analytics In M2M WHITE PAPER Cloud Ready Web Applications Big Data Analytics In M2M with jHipster WHITE PAPER Table of Contents Introduction ...................................................................................... 3 Key Architecture Drivers ................................................................... 3 What is jHipster? ............................................................................... 4 Technology behind JHipster .............................................................. 4 Creating a jHipster Application ......................................................... 4 Client Side Technologies ................................................................... 6 Startup Screen ................................................................................... 8 Server Side Technologies .................................................................. 9 Spring Data JPA ............................................................................... 10 Spring Data REST ............................................................................. 10 Swagger UI ...................................................................................... 10 Spring Boot ...................................................................................... 11 Spring Boot Actuator ....................................................................... 12 Logging ............................................................................................ 15 Liquibase ........................................................................................ -

Java Framework Comparison

Java Framework comparison Java Framework comparison Table of contents Introduction ............................................................................................................................................. 3 Framework comparison .......................................................................................................................... 6 JSF (PrimeFaces) .................................................................................................................................. 6 Implementation overview ............................................................................................................... 7 Conclusion from the developer ....................................................................................................... 7 JSF (ADF/ Essentials) ............................................................................................................................ 9 Implementation overview ............................................................................................................. 10 Conclusion from the developer ..................................................................................................... 10 Vaadin ................................................................................................................................................ 12 Implementation overview ............................................................................................................. 13 Conclusion from the developer ....................................................................................................