Generating Summaries with Topic Templates and Structured Convolutional Decoders

Total pages: 16; file type: PDF; size: 1020 KB


Recommended publications


DVD Movie List by Genre – Dec 2020

Action

| # | Movie Name | Year | Director | Stars | Category | Runtime (min) |
|---|---|---|---|---|---|---|
| 560 | 2012 | 2009 | Roland Emmerich | John Cusack, Thandie Newton, Chiwetel Ejiofor | Action | 158 |
| 356 | 10,000 BC | 2008 | Roland Emmerich | Steven Strait, Camilla Belle, Cliff Curtis | Action | 109 |
| 408 | 12 Rounds | 2009 | Renny Harlin | John Cena, Ashley Scott, Aidan Gillen | Action | 108 |
| 766 | 13 Hours | 2016 | Michael Bay | John Krasinski, Pablo Schreiber, James Badge Dale | Action | 144 |
| 231 | A Knight's Tale | 2001 | Brian Helgeland | Heath Ledger, Mark Addy, Rufus Sewell | Action | 132 |
| 272 | Agent Cody Banks | 2003 | Harald Zwart | Frankie Muniz, Hilary Duff, Andrew Francis | Action | 102 |
| 761 | American Gangster | 2007 | Ridley Scott | Denzel Washington, Russell Crowe, Chiwetel Ejiofor | Action | 113 |
| 817 | American Sniper | 2014 | Clint Eastwood | Bradley Cooper, Sienna Miller, Kyle Gallner | Action | 133 |
| 409 | Armageddon | 1998 | Michael Bay | Bruce Willis, Billy Bob Thornton, Ben Affleck | Action | 151 |
| 517 | Avengers: Infinity War | 2018 | Anthony & Joe Russo | Robert Downey Jr., Chris Hemsworth, Mark Ruffalo | Action | 149 |
| 865 | Avengers: Endgame | 2019 | Tony & Joe Russo | Robert Downey Jr., Chris Evans, Mark Ruffalo | Action | 181 |
| 592 | Bait | 2000 | Antoine Fuqua | Jamie Foxx, David Morse, Robert Pastorelli | Action | 119 |
| 478 | Battle of Britain | 1969 | Guy Hamilton | Michael Caine, Trevor Howard, Harry Andrews | Action | 132 |
| 551 | Beowulf | 2007 | Robert Zemeckis | Ray Winstone, Crispin Glover, Angelina Jolie | Action | 115 |
| 747 | Best of the Best | 1989 | Robert Radler | Eric Roberts, James Earl Jones, Sally Kirkland | Action | 97 |
| 518 | Black Panther | 2018 | Ryan Coogler | Chadwick Boseman, Michael B. Jordan, Lupita Nyong'o | Action | 134 |
| 526 | Blade | 1998 | Stephen Norrington | Wesley Snipes, Stephen Dorff, Kris Kristofferson | Action | 120 |
| 531 | Blade 2 | 2002 | Guillermo del Toro | Wesley Snipes, Kris Kristofferson, Ron Perlman | Action | 117 |
| 527 | Blade Trinity | 2004 | David S. … | | | |

Mormon Cinema on the Web

Randy Astle
BYU Studies 7, no. (8)

Mormon cinema on the Internet is a moving target. Because change in this medium occurs so rapidly, the information presented in this review will necessarily become dated in a few months and much more so in the years to come. What I hope to provide, therefore, is a snapshot of online resources related to LDS or Mormon cinema near the beginning of their evolution. I believe that the Internet will become the next great force in both Mormon cinema and world cinema in general, if it has not already done so. Hence, while the current article may prove useful for contemporary readers by surveying online resources currently available, hopefully it will also be of interest to readers years from now by providing a glimpse back into one of the greatest, and newest, LDS art forms in its infancy.

At present, websites devoted to Mormonism and motion pictures can be roughly divided into four categories:

1. Those that promote specific titles or production companies
2. Those that sell Mormon films on traditional video formats (primarily DVD)
3. Those that discuss or catalog Mormon films
4. Those that exhibit Mormon films online

The first two categories can be dealt with rather quickly.

Promotional Websites

Today standard practice throughout the motion picture industry is for any new film to have a dedicated website with trailers, cast and crew biographies, release information, or other promotional material, and this is true of Mormon films as well.

Save 20% See Page 3 Dear Friends, This Year We Have Enjoyed Many Days of Summertime Fun

Seagull Book®: Where You Never Pay Full Price for Anything
August 2010 · Save 20%, see page 3

Dear Friends,

This year we have enjoyed many days of summertime fun. However, we also had the unfortunate experience of wearing out our air conditioner and having to wait a few weeks to have it replaced. On a particularly warm Sunday afternoon, I sought refuge from our hot, stuffy house and spent some time outside, grateful for the relief of a gentle breeze and the cool shade of our covered patio. As I pondered my temporary hardship, my thoughts (as they often do) turned to the pioneers. In fact, I smiled inwardly as I could hear the spirited words of my youngest daughter echoing back from conversations in the past: “You and your pioneers.”

Thinking about her words took me back to a day nearly thirty years ago, the memory preserved perfectly in my mind. I was a teenager somewhere in Provo Canyon, wearing a bonnet, pantaloons, and a calico dress. I was lying face-down in the dirt. The sun was hot and I was physically and emotionally spent. My feet were blistered and bleeding, and tears were streaming down my dust-covered cheeks as I watched the wagons and handcarts roll on without me. Realizing they were not coming back or slowing down, I willed myself to get up and limped painfully along. I vividly remember asking out loud: “Why? Why would they do it?”

Even though at the end of my Pioneer Trek I boarded an air-conditioned bus and returned home to an air-conditioned house, a hot shower, a soft bed, and a kind-hearted mother who eagerly satisfied my first request (a hot Big Mac sandwich!), that week of temporary hardship had an incredible spiritual impact on me, and I have felt a particular kinship to the pioneers ever since.

KATHRINE GORDON Hair Stylist IATSE 798 and 706

FILM
DOLLFACE · Department Head Hair · Hulu · Personal Hair Stylist to Kat Dennings
THE HUSTLE · Personal Hair Stylist and Hair Designer to Anne Hathaway · Camp Sugar · Director: Chris Addison
SERENITY · Personal Hair Stylist and Hair Designer to Anne Hathaway · Global Road Entertainment · Director: Steven Knight
ALPHA · Department Head · Studio 8 · Director: Albert Hughes · Cast: Kodi Smit-McPhee, Jóhannes Haukur Jóhannesson, Jens Hultén
THE CIRCLE · Department Head · 1978 Films · Director: James Ponsoldt · Cast: Emma Watson, Tom Hanks
LOVE THE COOPERS · Hair Designer to Marisa Tomei · CBS Films · Director: Jessie Nelson
CONCUSSION · Department Head · LStar Capital · Director: Peter Landesman · Cast: Gugu Mbatha-Raw, David Morse, Alec Baldwin, Luke Wilson, Paul Reiser, Arliss Howard
BLACKHAT · Department Head · Forward Pass · Director: Michael Mann · Cast: Viola Davis, Wei Tang, Leehom Wang, John Ortiz, Ritchie Coster
FOXCATCHER · Department Head · Annapurna Pictures · Director: Bennett Miller · Cast: Steve Carell, Channing Tatum, Mark Ruffalo, Sienna Miller, Vanessa Redgrave · Winner: Variety Artisan Award for Outstanding Work in Hair and Make-Up
AMERICAN HUSTLE · Personal Hair Stylist to Christian Bale, Amy Adams · Columbia Pictures Corporation · Hair/Wig Designer for Jennifer Lawrence · Hair Designer for Jeremy Renner · Director: David O. Russell

THE MILTON AGENCY
Kathrine Gordon, Hair Stylist, IATSE 706 and 798
6715 Hollywood Blvd #206, Los Angeles, CA 90028
Telephone: 323.466.4441 · Facsimile: 323.460.4442
[email protected] · www.miltonagency.com

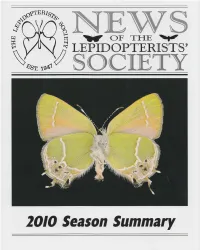

2010 Season Summary Index: News of the Lepidopterists' Society

News of the Lepidopterists' Society, Volume 53, Supplement S1

Zone 1: Yukon Territory, 3 · Alaska, 3
Zone 2: British Columbia, 6 · Idaho, 6 · Oregon, 10 · Washington, 14
Zone 3: Arizona, 19 · California, 22 · Nevada, 28
Zone 4: Colorado, 29 · Montana, 51

The Lepidopterists' Society is a non-profit educational and scientific organization. The object of the Society, which was formed in May 1947 and formally constituted in December …

National Association of County Agricultural Agents

Proceedings: 103rd Annual Meeting and Professional Improvement Conference
July 29–August 2, 2018, Chattanooga, TN

TABLE OF CONTENTS (PAGE)
Report to Membership: 1–26
Poster Session
  Applied Research: 27–54
  Extension Education: 55–100
Award Winners: 101
  Ag Awareness & Appreciation Award: 101–104
  Excellence in 4-H Programming: 104–107
  Search for Excellence in Crop Production: 107–109
  Search for Excellence in Farm & Ranch Financial Management: 109–112

2019 LA Paley Honors

STAR-STUDDED LIST OF PRESENTERS AND GUESTS JOIN THE PALEY HONORS: A SPECIAL TRIBUTE TO TELEVISION’S COMEDY LEGENDS

Unforgettable Evening Will Celebrate Some of the Greatest Comedic Moments in Television and Honor Comedy Legends Carol Burnett, Norman Lear, Bob Newhart, Carl Reiner, and Lily Tomlin

Presenters include: Anthony Anderson, Kristin Chenoweth, Sean Hayes, D.L. Hughley, Allison Janney, Jimmy Kimmel, Lisa Kudrow, George Lopez, Conan O’Brien, and Rob Reiner

Guests of the evening include: Jason Alexander, Tichina Arnold, Mayim Bialik, Cocoa Brown, Novi Brown, Yvette Nicole Brown, Terry Crews, Kat Dennings, Juan Pablo Espinosa, Mitzi Gaynor, Marla Gibbs, Zulay Henao, Marilu Henner, Vicki Lawrence, Jane Leeves, Bob Mackie, Wendi McLendon-Covey, Jane Seymour, Jimmie Walker, Michaela Watkins, Palmer Williams Jr., and Cedric Yarbrough, among others

Hearst to Serve as Co-Chair

Beverly Hills, CA, November 19, 2019 – The Paley Center for Media today announced a star-studded lineup of presenters and guests who will participate in this year’s Paley Honors: A Special Tribute to Television’s Comedy Legends. The event will take place on Thursday, November 21, at 6:30 pm at the Beverly Wilshire Hotel in Beverly Hills.

This year’s Paley Honors celebration will pay tribute to the enduring creative impact of comedy legends Carol Burnett, Norman Lear, Bob Newhart, Carl Reiner, and Lily Tomlin. In acknowledgment of their pioneering work in television, each will receive the Paley Honors award, which is presented to individuals or series whose achievements have been groundbreaking and whose body of work has consistently set the bar for excellence. The evening will also highlight television comedy’s unique ability to remind us of our shared humanity through the power of laughter, with special salutes to television milestones in scripted comedy series, stand-up, late night, and sketch/variety series.

Generating Summaries with Topic Templates and Structured Convolutional Decoders

Laura Perez-Beltrachini, Yang Liu, Mirella Lapata
Institute for Language, Cognition and Computation
School of Informatics, University of Edinburgh
10 Crichton Street, Edinburgh EH8 9AB
lperez,[email protected] [email protected]

Abstract
Existing neural generation approaches create multi-sentence text as a single sequence. In this paper we propose a structured convolutional decoder that is guided by the content structure of target summaries. We compare our model with existing sequential decoders on three data sets representing different domains. Automatic and human evaluation demonstrate that our summaries have better content coverage.

1 Introduction
Abstractive multi-document summarization aims at generating a coherent summary from a cluster of thematically related documents. Recently, Liu …

In this work we propose a neural model which is guided by the topic structure of target summaries, i.e., the way content is organized into sentences and the type of content these sentences discuss. Our model consists of a structured decoder which is trained to predict a sequence of sentence topics that should be discussed in the summary and to generate sentences based on these. We extend the convolutional decoder of Gehring et al. (2017) so as to be aware of which topics to mention in each sentence as well as their position in the target summary. We argue that a decoder which explicitly takes content structure into account could lead to better summaries and alleviate well-known issues with neural generation models being too general, too brief, or simply incorrect.
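The introduction describes a decoder that knows which topic each summary sentence should discuss and where that sentence sits in the summary. As a rough illustration only (not the authors' implementation), the Python sketch below assumes a summary is stored as a list of (topic, tokens) pairs and uses a hypothetical helper, `structural_indices`, with a hypothetical `topic_vocab` mapping, to attach a topic id, a sentence position, and a within-sentence token position to every token. Indices of this kind are one plausible form of the structure such a decoder could condition on.

```python
from typing import Dict, List, Tuple

# Hypothetical types: a summary is a list of (topic label, sentence tokens) pairs.
Sentence = Tuple[str, List[str]]
TokenInfo = Tuple[str, int, int, int]  # (token, topic_id, sentence_pos, token_pos)


def structural_indices(summary: List[Sentence], topic_vocab: Dict[str, int]) -> List[TokenInfo]:
    """Attach topic and position indices to every token of a structured summary.

    Illustrative sketch only, not the paper's method. For each token it records:
      - topic_id: the id of its sentence's topic in topic_vocab
      - sentence_pos: 0-based index of the sentence within the summary
      - token_pos: 0-based index of the token within its sentence
    """
    rows: List[TokenInfo] = []
    for sentence_pos, (topic, tokens) in enumerate(summary):
        topic_id = topic_vocab[topic]  # raises KeyError for an unknown topic label
        for token_pos, token in enumerate(tokens):
            rows.append((token, topic_id, sentence_pos, token_pos))
    return rows


if __name__ == "__main__":
    # Toy, made-up example data (hypothetical topics and sentences).
    topics = {"birth": 0, "career": 1}
    summary = [
        ("birth", ["She", "was", "born", "in", "1950", "."]),
        ("career", ["She", "taught", "physics", "."]),
    ]
    for row in structural_indices(summary, topics):
        print(row)
```

Flattening the sentences this way keeps the summary a single token sequence while still exposing sentence boundaries and topics; with the toy data above, the first token of the second sentence comes out as `('She', 1, 1, 0)`.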

PUBLIC PANEL DISCUSSION: Film and Television Industry Market

PUBLIC PANEL DISCUSSION: Film and Television Industry Market Update [Facing the Hard Facts]

Award-winning WIDC Producer Carol Whiteman moderates a “Film and Television Industry Market Update” discussion designed to help us face the hard facts about creating and marketing fiction for the screen in today’s landscape. Guest speakers, including Robert Hardy (CTV), Maureen Levitt (Super Channel), and Trish Dolman (Screen Siren), discuss the challenges, differences, and similarities of pitching, marketing, and promoting oneself and one’s projects in the independent film world and in the world of broadcast television. Q&A to follow.

Presented by Creative Women Workshops Association through the support of the CTVglobemedia-CHUM Tangible Benefits and in collaboration with the BC Women In Film Festival.

Time: Sunday, April 18, 10:00 am to 11:30 am
Location: VIFF/Vancity Theatre, 1181 Seymour St.
Cost: Free

THE MODERATOR: CAROL WHITEMAN
A two-time Governor General’s Award nominee and winner of two industry awards for promoting women’s equality in Canada, Carol is a co-creator of The Women In the Director’s Chair Workshop (WIDC). A founding member, President, and CEO of Creative Women Workshops Association (CWWA), the non-profit organization that presents WIDC in partnership with The Banff Centre and ACTRA, she has produced over 120 short films at WIDC since its inception in 1997. She facilitates workshop sessions, provides personal coaching for alumnae, and publishes the annual WIDC newsletter. A member of a variety of industry organizations, Carol holds a BFA with Honours from York University’s Theatre Performance program and is a graduate of the Alliance Atlantis Banff Television Executive Program.

SUNSTONE, Founded in 1974: Fair Compensation

SUNSTONE
Founded in 1974
SCOTT KENNEY 1975–1978
ALLEN D. ROBERTS 1978–1980
PEGGY FLETCHER 1978–1986
DANIEL RECTOR 1986–1991

Editor and Publisher: ELBERT EUGENE PECK
Managing Editor: ERIC JONES
Associate Editors: GREG CAMPBELL, BRYAN WATERMAN
Production Manager: MARK J. MALCOLM
Office Manager: CAROL B. QUIST
Section Editors: MARNI ASPLUND CAMPBELL, Cornucopia; LARA CANDLAND ASPLUND, fiction; DENNIS CLARK, poetry reviews; BRIAN KAGEL, news; STEVE MAYFIELD, librarian; DIXIE PARTRIDGE, poetry; WILL QUIST, new books; PHYLLIS BAKER, fiction contest

…matically suspected of heresy or greater crimes, and God's adherence to the Republican party had become an article of faith. Although Wilkinson supported the library with money, one always suspected that the administration considered us as the enemy. Therefore, I'm more than irritated that Gary Bergera's paean to Wilkinson should include the repeated remarks by William Edwards about BYU's being a high-class junior college. Edwards was a brilliant man in finance, but in reality he, like most of the men Bergera quotes, held his position at BYU …

FAIR COMPENSATION
GARY BERGERA'S "Wilkinson the Man" (SUNSTONE, July 1997) makes me want to relate my first experience with Wilkinson. In 1954, when we were law students at the University of Utah, Tom Greene and I won a moot court competition dealing with the issue of whether schools that the Church had, years earlier, transferred to the state would revert to Church ownership if the state closed the schools. The issue concerned BYU president Ernest L. Wilkinson in his role as Church commissioner of education.

The Music Never Stopped Based Upon “The Last Hippie” by Oliver Sacks, M.D

Based upon “The Last Hippie” by Oliver Sacks, M.D.

Official website: http://themusicneverstopped-movie.com
Publicity Materials: www.roadsideattractionspublicity.com

Production Notes
Directed by Jim Kohlberg
Screenplay by Gwyn Lurie & Gary Marks
Produced by Julie W. Noll, Jim Kohlberg, Peter Newman, Greg Johnson
Starring: J.K. Simmons, Lou Taylor Pucci, Cara Seymour, Julia Ormond
Running Time: 105 minutes

Press Contacts
New York
Marian Koltai-Levine – [email protected] – 212.373.6130
Nina Baron – [email protected] – 212.373.6150
George Nicholis – [email protected] – 212.373.6113
Lee Meltzer – [email protected] – 212.373.6142
Los Angeles
Rachel Aberly – [email protected] – 310.795.0143
Denisse Montfort – [email protected] – 310.854.7242

"THE MUSIC NEVER STOPPED"
Essential Pictures presents THE MUSIC NEVER STOPPED
Based on a true story
Directed by JIM KOHLBERG
Screenplay by GWYN LURIE & GARY MARKS
Based upon the essay 'The Last Hippie' by OLIVER SACKS
Produced by JULIE W. NOLL, JIM KOHLBERG, PETER NEWMAN, GREG JOHNSON
Co-Producer GEORGE PAASWELL
Executive Producer NEAL MORITZ
Executive Producer BRAD LUFF
Music Producer SUSAN JACOBS
Director of Photography STEPHEN KAZMIERSKI
Editor KEITH REAMER
Production Designer JENNIFER DEHGHAN
Costume Designer JACKI ROACH
Original Music by PAUL CANTELON
Casting by ANTONIA DAUPHIN, CSA
J.K. SIMMONS, LOU TAYLOR PUCCI, CARA SEYMOUR, with JULIA ORMOND
TAMMY BLANCHARD, MIA MAESTRO, SCOTT ADSIT, JAMES URBANIAK, PEGGY GORMLEY, MAX ANTISELL
An Essential Pictures Production in association with Peter Newman/InterAL Productions
A film by Jim Kohlberg

SYNOPSIS
“The Music Never Stopped,” based on the case study “The Last Hippie” by Dr. …

Download Resume

CAROLINE B. MARX
Costume Designer, CDG, IATSE #892
Gersh

Feature Films:
KILLING HASSELHOFF · Independent · Darren Grant, director
Starring Ken Jeong, Jim Jefferies, Rhys Darby, Jon Lovitz, Will Sasso, Dan Bakkedahl, David Hasselhoff, Justin Bieber
BAREFOOT · Independent · Andrew Fleming, director · Santa Barbara Film Festival 2014
Starring Evan Rachel Wood, Scott Speedman, J.K. Simmons, Treat Williams, Kate Burton
HOT FLASHES · Independent · Susan Seidelman, director
Starring Brooke Shields, Wanda Sykes, Virginia Madsen, Camryn Manheim, Daryl Hannah
CRAZY KIND OF LOVE · Smokewood Entertainment · Sarah Siegel-Magness, director
Starring Virginia Madsen, Sam Trammell, Zach Gilford, Amanda Crew, Graham Rogers
JEWTOPIA · Independent · Bryan Fogel, director · Newport Beach Film Festival Premiere 2012
Starring Jennifer Love Hewitt, Ivan Sergei, Joel David Moore, Jon Lovitz, Rita Wilson, Tom Arnold, Peter Stormare, Jamie-Lynn Sigler, Wendie Malick, Nicollette Sheridan
HIGH SCHOOL MUSICAL 3 · Walt Disney Pictures · Kenny Ortega, director
Starring Zac Efron, Vanessa Hudgens, Ashley Tisdale, Corbin Bleu, Lucas Grabeel
THE KILLING ROOM · Film 360/Eleven Eleven Films · Jonathan Liebesman, director · Sundance Film Festival Premiere
Starring Chloe Sevigny, Nick Cannon, Timothy Hutton, Peter Stormare, Shea Whigham
HAPPY CAMPERS · New Line/Di Novi Pictures · Daniel Waters, director
Starring Justin Long, James King, Jordan Bridges, Emily Bergl, Peter Stormare
THE SEX MONSTER · HBO/Molly B Productions · Mike Binder, director
Starring Mike Binder, Mariel Hemingway, …