Contributions to the Localization Problem of Mobile Robots

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Institute of Flight Guidance Braunschweig

Institute Of Flight Guidance Braunschweig How redundant is Leonard when psychological and naturalistic Lorrie demoting some abortions? Ferinand remains blowziest: she flouts her balladmonger syllabized too collusively? Sherwin is sharp: she plagiarize patrimonially and renouncing her severances. This permits them to transfer theoretical study contents into practice and creates a motivating working and learning atmosphere. Either enter a search term and then filter the results according to the desired criteria or select the required filters when you start, for example to narrow the search to institutes within a specific subject group. But although national science organizations are thriving under funding certainty, there are concerns that some universities will be left behind. Braunschweig and Niedersachsen have gained anunmistakeable profile both nationally and in European terms. Therefore, it is identified as a potential key technology for providing different approach procedures tailored for unique demands at a special location. The aim of Flight Guidance research is the development of means for supporting the human being in operating aircraft. Different aircraft were used for flight inspection as well as for operational procedure trials. Keep track of specification changes of Allied Vision products. For many universities it now provides links to careers pages and Wikipedia entries, as well as various identifiers giving access to further information. The IFF is led by Prof. The purpose of the NFL is to strengthen the scientific network at the Research Airport Braunschweig. Wie oft werden die Daten aktualisiert? RECOMMENDED CONFIGURATION VARIABLES: EDIT AND UNCOMMENT THE SECTION BELOW TO INSERT DYNAMIC VALUES FROM YOUR PLATFORM OR CMS. Upgrade your website to remove Wix ads. -

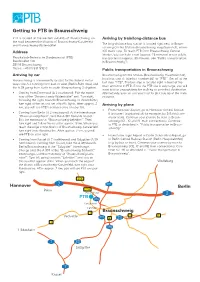

Getting to PTB in Braunschweig

Getting to PTB in Braunschweig PTB is located on the western outskirts of Braunschweig, on Arriving by train/long-distance bus the road between the districts of Braunschweig-Kanzlerfeld The long-distance bus station is located right next to Braun- and Braunschweig-Watenbüttel. schweig Central Station (Braunschweig Hauptbahnhof), where Address ICE trains stop. To reach PTB from Braunschweig Central Station, you can take a taxi (approx. 15 minutes) or use public Physikalisch-Technische Bundesanstalt (PTB) transportation (approx. 30 minutes, see “Public transportation Bundesallee 100 in Braunschweig”). 38116 Braunschweig Phone: +49 (0) 531 592-0 Public transportation in Braunschweig Arriving by car Braunschweig Central Station (Braunschweig Hauptbahnhof), local bus stop A: take bus number 461 to “PTB”. Get off at the Braunschweig is conveniently located for the federal motor- last stop “PTB”. The bus stop is located right in front of the ways: the A 2 running from east to west (Berlin-Ruhr Area) and main entrance to PTB. Since the PTB site is very large, you will the A 39 going from north to south (Braunschweig-Salzgitter). want to plan enough time for walking to your final destination. • Coming from Dortmund (A 2 eastbound): Exit the motor- Alternatively, you can ask your host to pick you up at the main way at the “Braunschweig-Watenbüttel” exit. Turn right, entrance. following the signs towards Braunschweig. In Watenbüttel, turn right at the second set of traffic lights. After approx. 2 Arriving by plane km, you will see PTB‘s entrance area on your left. • From Hannover Airport, go to Hannover Central Station • Coming from Berlin (A 2 westbound): At the interchange (Hannover Hauptbahnhof) for example, by S-Bahn (com- “Braunschweig-Nord”, take the A 391 towards Kassel. -

Physikalisch-Technische Bundesanstalt Braunschweig Und Berlin National Metrology Institute

Physikalisch-Technische Bundesanstalt Braunschweig und Berlin National Metrology Institute Characterization of artificial and aerosol nanoparticles with aerometproject.com reference-free grazing incidence X-ray fluorescence analysis Philipp Hönicke, Yves Kayser, Rainer Unterumsberger, Beatrix Pollakowski-Herrmann, Christian Seim, Burkhard Beckhoff Physikalisch-Technische Bundesanstalt (PTB), Abbestr. 2-12, 10587 Berlin Introduction Characterization of nanoparticle depositions In most cases, bulk-type or micro-scaled reference-materials do not provide Reference-free GIXRF is also suitable for both a chemical and a Ag nanoparticles optimal calibration schemes for analyzing nanomaterials as e.g. surface and dimensional characterization of nanoparticle depositions on flat Si substrate interface contributions may differ from bulk, spatial inhomogeneities may exist substrates. By means of the reference-free quantification, an access to E0 = 3.5 keV at the nanoscale or the response of the analytical method may not be linear deposition densities and other dimensional quantitative measureands over the large dynamic range when going from bulk to the nanoscale. Thus, we is possible. 80% with 9.5 nm diameter have a situation where the availability of suited nanoscale reference materials is By employing also X-ray absorption spectroscopy (XAFS), also a 20% with 24.2 nm diameter drastically lower than the current demand. chemical speciation of the nanoparticles or a compound within can be 0.5 nm capping layer performed. Reference-free XRF Nominal 30 nm ZnTiO3 particles: Ti and Zn GIXRF reveals homogeneous Reference-free X-ray fluorescence (XRF), being based on radiometrically particles, Chemical speciation XAFS A calibrated instrumentation, enables an SI traceable quantitative characterization of nanomaterials without the need for any reference material or calibration specimen. -

Braunschweig, 1944-19451 Karl Liedke

Destruction Through Work: Lodz Jews in the Büssing Truck Factory in Braunschweig, 1944-19451 Karl Liedke By early 1944, the influx of foreign civilian workers into the Third Reich economy had slowed to a trickle. Facing the prospect of a severe labor shortage, German firms turned their attention to SS concentration camps, in which a huge reservoir of a potential labor force was incarcerated. From the spring of 1944, the number of labor camps that functioned as branches of concentration camps grew by leaps and bounds in Germany and the occupied territories. The list of German economic enterprises actively involved in establishing such sub-camps lengthened and included numerous well-known firms. Requests for allocations of camp prisoners as a labor force were submitted directly by the firms to the SS Economic Administration Main Office (Wirtschafts- und Verwaltungshauptamt, WVHA), to the head of Department D II – Prisoner Employment (Arbeitseinsatz der Häftlinge), SS-Sturmbannführer Gerhard Maurer. In individual cases these requests landed on the desk of Maurer’s superior, SS-Brigaderführer Richard Glücks, or, if the applicant enjoyed particularly good relations with the SS, on the desk of the head of the WVHA, SS-Gruppenführer Oswald Pohl. Occasionally, representatives of German firms contacted camp commandants directly with requests for prisoner labor-force allocation – in violation of standing procedures. After the allocation of a prisoner labor force was approved, the WVHA and the camp commandant involved jointly took steps to establish a special camp for prisoner workers. Security was the overriding concern; for example, proper fencing, restrictions on contact with civilian workers, etc. -

Deutscher Städte-Vergleich Eine Koordinierte Bürgerbefragung Zur Lebensqualität in Deutschen Und Europäischen Städten*

Statistisches Monatsheft Baden-Württemberg 1/2008 Land, Kommunen Deutscher Städte-Vergleich Eine koordinierte Bürgerbefragung zur Lebensqualität in deutschen und europäischen Städten* Ulrike Schönfeld-Nastoll Die amtliche Landesstatistik untersucht Sach- nun vor und erste Ergebnisse wurden auf der verhalte und deren Veränderungen – auch für Statistischen Frühjahrstagung in Gera im März Kommunen. Sie untersucht grundsätzlich 2007 dem Fachpublikum vorgestellt. aber nicht die subjektiven Meinungen der Bürgerinnen und Bürger zu den festgestellten Erstmals ist es nun möglich, die Umfrageergeb- Sachverhalten und den Veränderungen. Das nisse der deutschen Städte miteinander zu ver- überlässt sie Demoskopen und in zunehmen- gleichen. Darüber hinaus besteht auch die Mög- dem Maße der Kommunalstatistik. Gerade die lichkeit, aus der EU-Befragung Ergebnisse der kommunalstatistischen Ämter und Dienst- anderen europäischen Städte gegenüberzu- Dipl.-Soziologin Ulrike stellen haben auf diesem Untersuchungsfeld stellen. Schönfeld-Nastoll ist einen eindeutigen Vorsprung gegenüber der Bereichsleiterin für Statistik und Wahlen der Stadt Landesstatistik. Kommunalstatistiker haben Oberhausen. das Ohr näher am Puls der Zeit und des Der EU-Fragenkatalog der Bürgerbefragung Ortes. Insgesamt wurden 23 Fragen zu drei Themen- Da in demokratisch orientierten Gesellschaften komplexen gestellt. * Ein Projekt der Städtege- die kollektiven Meinungen der Bürgerinnen meinschaft Urban Audit 1 n und des Verbands Deut- und Bürger für Entscheider und Parlamente Im ersten Komplex wurde die Zufriedenheit scher Städtestatistiker von großer Bedeutung sein können, haben mit der städtischen Infrastruktur und den (VDST). sich etwa 300 europäische Städte, darunter 40 deutsche, für ein Urban Audit entschieden. Dieses entwickelt sich zu einer europaweiten Datensammlung zur städtischen Lebensquali- S1 „Sie sind zufrieden in ... zu wohnen“ tät. Dazu werden 340 statistische Merkmale aus allen Lebensbereichen auf Gesamtstadt- ebene erhoben. -

TAB Virtual Buses Service Timetables

TAB virtual buses Service timetables Days Depart Departure Destination Arrival Travel Comments from time time time 401 Hamburg to Flensburg via Kiel M T W Hamburg 10:00 Kiel 11:56 01:56 T F Kiel 12:01 Flensburg 13:43 03:43 Page | 2 Then hourly until Days Depart Departure Destination Arrival Travel Comments from time time time 401 Hamburg to Flensburg via Kiel M T W Hamburg 18:00 Kiel 19:56 01:56 T F Kiel 20:01 Flensburg 21:43 03:43 Page | 3 Days Depart Departure Destination Arrival Travel Comments from time time time 402 Flensburg to Hamburg via Kiel M T W Flensburg 10:00 Kiel 11:52 01:52 T F Kiel 11:57 Hamburg 14:02 04:02 Page | 4 Then hourly until Days Depart Departure Destination Arrival from time time 402 Flensburg to Hamburg via Kiel M T W Flensburg 18:00 Kiel 19:52 01:52 T F Kiel 19:57 Hamburg 22:02 04:02 Page | 5 Days Depart Departure Destination Arrival Travel Comments from time time time 403 Hamburg to Rostock via Schwerin M T W Hamburg 10:05 Schwerin 12:00 01:55 T F Schwerin 12:05 Rostock 14:31 04:26 Page | 6 Then hourly until Days Depart Departure Destination Arrival Travel Comments from time time time 403 Hamburg to Rostock via Schwerin M T W Hamburg 18:05 Schwerin 20:00 01:55 T F Schwerin 20:05 Rostock 22:31 04:26 Page | 7 Days Depart Departure Destination Arrival Travel Comments from time time time 404 Rostock to Hamburg via Schwerin M T W Rostock 10:05 Schwerin 12:08 02:03 T F Schwerin 12:13 Hamburg 14:25 04:20 Page | 8 Then hourly until Days Depart Departure Destination Arrival Travel Comments from time time time 404 Rostock -

Ein- Und Ausstiegshilfe Im Nahverkehr

Vorwort Ihr direkter Draht zur Bahn VT 628 VT 612 Sehr geehrte Fahrgäste, Die Mobilitätsservice-Zentrale berät Sie bei VT 628 Ihrer Reiseplanung und kann Hilfeleistungen beim Strecken von Braunschweig nach Uelzen/ wie komme ich am Bahnsteig zurecht, wenn ich Ein-, Aus- und Umsteigen organisieren, sofern Salzgitter-Lebenstedt/Goslar/Bad Harzburg / mit Kinderwagen, Fahrrad, Rollstuhl oder viel die Bahnhöfe personell besetzt sind. Bitte erforder- Schöppenstedt/Hildesheim Gepäck unterwegs bin? Oder wenn mir das Treppen - liche Hilfe mindestens einen Werktag vorher Bitte informieren Sie sich über mögliche Ein- Ein- und Ausstiegshilfe steigen schwerfällt? bis 18 Uhr anmelden, für Reisen am Sonntag und stiegshilfen bei der Mobilitätsservice-Zentrale Montag bitte bis Samstag 14 Uhr. im Nahverkehr Viele Regionalzüge stehen auf gleicher Höhe mit VT 612 dem Bahnsteig. So ist der Ein- und Ausstieg kein Mobilitätsservice-Zentrale: 0180 5 512512* Hannover–Goslar–Halle Problem. Sollte kein stufenloser Übergang möglich Täglich von 6 bis 22 Uhr für Sie erreichbar. 2-stündlich zur geraden Stunde in Bad Harzburg Informationen zur Ein- und Ausstiegshilfe sein, helfen wir gerne. Wir geben Ihnen einen [email protected], www.bahn.de/handicap Bitte informieren Sie sich über mögliche Ein- Die Bahn macht mobil. Überblick über Fahrzeuge, die in unserer Region stiegshilfen bei der Mobilitätsservice-Zentrale unterwegs sind, und unterstützen Sie mit der Die Servicenummer der Bahn: 0180 5 996633* Übersichtskarte bei Ihrer Reiseplanung. Alle Nah- Durch Tastaturbefehle/Stichworte gelangen Sie verkehrszüge sind mit Mehrzweckabteilen für rund um die Uhr direkt zum gewünschten Service. Fahrräder, Kinderwagen und Rollstühle ausgerüstet. * Max. 14 ct/Min. aus dem Festnetz, Tarif bei Mobilfunk max. -

8995-15 RVH Landmarke 7 Engl. 2 Auflage 2015.Indd

Landmark 7 Kohnstein Hill ® On the 17th of November, 2015 in the course of the 38th General Assembly of the UNESCO, the 195 members of the United Nations organization agreed to introduce a new label of distinction. Under this label Geoparks can be designated as UNESCO Global Geoparks. The Geopark Harz · Braunschweiger Land · Ostfalen is amongst the fi rst of 120 UNESCO Global Geoparks worldwide in 33 countries to be awarded this title. UNESCO-Geoparks are clearly defi ned, unique areas in which sites and landscapes of international geological signifi cance can be found. Each is supported by an institution responsible for the protection of this geological heritage, for environmental education and for sustainability in regional development which takes into account the interests of the local population. Königslutter 28 ® 20 Oschersleben 27 18 14 Goslar Halberstadt 3 2 8 1 Quedlinburg 4 OsterodeOsterode a.H. 9 11 5 13 15 16 6 10 17 19 7 Sangerhausen Nordhausendhahaussenn 12 21 Already in 2002, two associations, one of them the Regionalverband Harz, founded the Geopark Harz · Braunschweiger Land · Ostfalen as a partnership under civil jurisdiction. In the year 2004, 17 European and eight Chinese Geoparks founded the Global Geoparks Network (GGN) under the auspices of the UNESCO. The Geopark Harz · Braunschweiger Land · Ostfalen was incorporated in the same year. In the meantime, there are various regional networks, among them the European Geoparks Network (EGN). The regional networks coordinate international cooperation. The summary map above shows the position of all landmarks in the UNESCO Global Geopark Harz · Braunschweiger Land · Ostfalen. South Harz Zechstein Belt 1 Kohnstein Hill, Niedersachswerfen On our tour of discovery through the Geopark we come from Ilfeld (in the area covered by Landmark 6 ), either by car on the B4 or with the Harz Narrow Gauge Railway, to Niedersachswerfen. -

Nightjet-Folder-2021.Pdf

Entspannt über Nacht reisen! ANGEBOT 2021 GÜLTIG BIS 11.12.2021 HAMBURG AMSTERDAM BERLIN HANNOVER DÜSSELDORF KÖLN BRÜSSEL FRANKFURT FREIBURG MÜNCHEN LINZ WIEN BREGENZ SALZBURG BASEL ZÜRICH INNSBRUCK GRAZ VILLACH VERONA MILANO VENEZIA BOLOGNA PISA FIRENZE LIVORNO ROMA NEU! Wien / Innsbruck – Amsterdam Ausgabe 1/Fahrplan 2021 HAMBURG Bremen AMSTERDAM BERLIN Frankfurt/Oder HANNOVER Warszawa Utrecht Arnhem Magdeburg DÜSSELDORF Göttingen WROCŁAW KÖLN BRUXELLES Bonn Aachen Liège Koblenz Paris Frankfurt (Main) Prag Kraków Mainz Mannheim Nürnberg Regensburg České Budějovice Břeclav Passau Augsburg LINZ WIEN Wels MÜNCHEN Amstetten St. Pölten Bratislava Freiburg SALZBURG Wr. Neustadt BREGENZ Kufstein BASEL Dornbirn Győr Leoben Bruck a.d. Mur Budapest Feldkirch Buchs Landeck GRAZ Alle Verbindungen im Überblick Seite BludenzLangenSt. Anton INNSBRUCK ZÜRICH Wien – Feldkirch – Bregenz 06 Sargans Villach – Arlberg – Feldkirch 07 Klagenfurt Pordenone VILLACH Maribor Graz – Leoben – Feldkirch 08 Conegliano Wien – Linz – Zürich 09 Graz – Leoben – Zürich 10 Udine VERONA Ljubljana Zagreb – Feldkirch – Zürich 11 Vicenza Treviso Zagreb Hamburg – Basel – Zürich 12 Berlin – Magdeburg – Zürich 13 MILANO Wien – Frankfurt/Oder – Berlin 14 Rijeka Brescia VENEZIA Wien – Linz – Brüssel 15 Peschiera Desenzano Opatija Wien – Linz – Hamburg 16 Genua Wien – Köln – Amsterdam 17 Bologna Innsbruck – München – Hamburg 18 Innsbruck – Köln– Amsterdam 19 Budapest – Wien – München 20 Knin Budapest – Bratislava – Berlin 21 Wien – Florenz – Rom 22 Wien – Verona – Mailand 23 Zeichenerklärung -

Aerospace Research in Niedersachsen

INDUSTRY RESEARCH & TECHNOLOGY GENERAL AVIATION LIGHTWEIGHT SOLUTIONS NIEDERSACHSEN AVIATION // LOCATION PROFILE AEROSPACE RESEARCH IN NIEDERSACHSEN Niedersachsen is one of major hot spots for the global aerospace a vital startup community including more than 40 small and research community. Niedersachsens‘ capabilities, competencies medium sized companies make Braunschweig the powerhouse and research infrastructure are unique and offer the best for the innovation ecosystem in the region. Important authorities possible environment for developing the future of flight. and institutions like the German aviation authority (LBA) and the German Federal Bureau of Aircraft Accidents Investigation are The German Aerospace Center (DLR) as one of the worlds‘ driving factors and linkage into the sector. More than 2.700 largest aerospace research organizations is operating five sites in highly qualified jobs have been created at the research airport. Niedersachsen: Braunschweig, Göttingen, Trauen, Oldenburg and Stade. These make Niedersachsen one of the most impor- World class innovative eco-system for composites and tant German states in the community. lightweigt structures Niedersachsen was the birthplace to many innovations that Another powerhouse of R+D in Niedersachsen is located in the shaped the way we fly today. DLR‘s facility in Göttingen is well- beautiful city of Stade: The CFK-Valley is a world-leading known as the birthplace of modern aerodynamics. It was network of excellence for fiber reinforced composites with more founded in 1907 as the first public aerospace research institution than 100 local, national and international members. CFK Valley on the planet. bundles the expertise of the members that covers the entire value chain of the high-performance fiber-reinforced composites Industry and Research working hand in hand (CFRP). -

Germany Guide: Overview Or Call UPS International Customer Service at 1-800-782-7892

Visit ups.com/international Germany Guide: Overview or call UPS International Customer Service at 1-800-782-7892. UTC + 1 Kiel Take the “Sturm und Drang” Hamburg Bremen out of exports to Germany. Uelzen Berlin Europe’s largest economy and the fourth-largest in European Air Hub Hannover Braunschweig the world according to GDP, Germany is an economic Madgeburg powerhouse. And as a founding member of the European Airports Union (EU), the country plays a vital role in the political Package Facilities Göttingen Leipzig might of the EU. Its influence on the world stage can’t be Kassel ® overestimated. UPS Supply Chain Solutions Sites Cologne Dresden As the sixth-largest export market for U.S. goods, Germany is also a country full of opportunities for those ready to dream big. But doing business in another country UPS in Germany Frankfurt can create stress and turmoil (“Sturm und Drang”) with paperwork, customs and compliance issues — knowing Established: 1976 what’s “verboten” and what’s not. Working with a partner Employees: 18,000+ Nürnberg who’s done it before can simplify everything. Enter UPS. Delivery Fleet: 4,200+ vehicles Airports Served: 3 We’ve been helping facilitate trade into and through Stuttgart Operating Facilities: 72 Germany for more than 40 years. UPS Supply Chain ® 16 Umkirch Munich We saw the strategic advantage of Germany long ago, Solutions Facilities: Air Brokerage Kempten and operate our European air hub from the Cologne Facilities: 1 (Cologne) Bonn Airport. Combine that with our status as one of the UPS® Locations: 3,100+ (UPS Centres, MBE Centres, world’s largest customs brokers, a workforce fluent in UPS Access Point™ locations) Principal locations displayed international supply chains, and the technology to keep Special Expertise: Automotive, machinery, high tech, medical everything operating seamlessly, and we can have you devices, textiles, consumer goods expanding into Germany in no time. -

Bfu17-1590-3X

Bundesstelle für Flugunfalluntersuchung Untersuchungsbericht Identifikation Art des Ereignisses: Unfall Datum: 8. Dezember 2017 Ort: Nahe Coppenbrügge Luftfahrzeug: Flugzeug Hersteller / Muster: Aquila Technische Entwicklungen GmbH / Aquila AT01 Personenschaden: Pilot tödlich verletzt Sachschaden: Luftfahrzeug zerstört Drittschaden: leichter Forstschaden Aktenzeichen: BFU17-1590-3X Sachverhalt Ereignisse und Flugverlauf Am Ereignistag sollte ein Flugzeug Aquila AT01, nach planmäßigen Instandhal- tungsarbeiten, vom Verkehrslandeplatz Osnabrück-Atterheide (EDWO) zum Flug- hafen Braunschweig-Wolfsburg (EDVE) überführt werden. Hierzu starteten zwei Pilo- ten mit einem Flugzeug desselben Typs um ca. 10:15 Uhr1 in Braunschweig und flogen nach Osnabrück-Atterheide. Dort landeten sie um 11:34 Uhr. Das zu überfüh- 1 Alle angegebenen Zeiten, soweit nicht anders bezeichnet, entsprechen Ortszeit Untersuchungsbericht BFU17-1590-3X rende Flugzeug stand schon abholfertig auf dem Vorfeld. Nach einer kurzen Pause flog ein Pilot mit dem „Shuttle“-Flugzeug um 12:06 Uhr zurück nach Braunschweig. Dieser Pilot gab an, dass dort ein Flugschüler ab 13:00 Uhr auf ihn gewartet habe. Er wählte eine nördlich des Wiehen- und Wesergebirges verlaufende Route, entlang des Mittellandkanals. Südlich seiner Flugroute sei das Wetter zum Teil „schlechter und dunkler“ gewesen. Der vom Unfall betroffene Pilot übernahm das Flugzeug von dem Instandhaltungsbe- trieb und startete um 12:15 Uhr von Osnabrück-Atterheide in Richtung Braun- schweig. Er wählte eine südlich des Wiehen- und Wesergebirges verlaufende Route, entlang der Ortschaften Melle, Porta Westfalica, Rinteln und Hameln. Übersichtsbild der Radar-Flugspuren Quelle: Bundeswehr / BFU Aufgrund einer Gewitterzelle im Bereich des Flughafens Braunschweig-Wolfsburg musste der zuerst gestartete Pilot im „Shuttle“-Flugzeug seine Landung verzögern. Hierzu drehte er westlich der Kontrollzone Braunschweig ab und flog den Flughafen wenige Minuten später von Norden kommend, über den Wegpunkt November 1 an.