Experimental Philosophy of Science and Philosophical Differences Across the Sciences

Recommended publications

The Social Sciences—How Scientific Are They?

The Social Sciences—How Scientific Are They? Manas Sarma The social sciences are a very important field of study. A division of science, social sciences embrace a wide variety of topics from anthropology to sociology. The social sciences cover a wide range of topics that are crucial for understanding human experience/behavior in groups or as individuals. By definition, social science is the branch of science that deals with the human facets of the natural world (the other two branches of science are natural science and formal science). Some social sciences are law, economics, and psychology, to name a few. The social sciences have existed since the time of the ancient Greeks, and have evolved ever since. Over time, social sciences have grown and gained a big following. Some colleges, like Yale University, have chosen to focus more on the social sciences than other subjects. The social sciences are more based on qualitative data and not as black-and-white as the other sciences, so even though they

or Madame Curie. That is, and amazing in their own way. A better example of a social science than law may be economics. Economics is, in a word, finances. Economics is the study of how money changes, the rate at which it changes, and how it potentially could change and the rate at which it would. Even though economics does not deal with science directly, it is definitely equally scientific. About 50-60% of colleges require calculus to study business or economics. Calculus is also required in some science fields, like physics or chemistry. Since economics and science both require calculus, economics is still a science. Perhaps the most scientific of the social sciences is psychology. -

Causal Reasoning: Philosophy and Experiment (forthcoming in Oxford Studies in Experimental Philosophy)

Forthcoming in Oxford Studies in Experimental Philosophy Causal Reasoning: Philosophy and Experiment James Woodward History and Philosophy of Science University of Pittsburgh 1. Introduction The past few decades have seen much interesting work, both within and outside philosophy, on causation and allied issues. Some of the philosophy is understood by its practitioners as metaphysics: it asks such questions as what causation “is”, whether there can be causation by absences, and so on. Other work reflects the interests of philosophers in the role that causation and causal reasoning play in science. Still other work, more computational in nature, focuses on problems of causal inference and learning, typically with substantial use of such formal apparatuses as Bayes nets (e.g., Pearl, 2000; Spirtes et al., 2001) or hierarchical Bayesian models (e.g., Griffiths and Tenenbaum, 2009). All this work – the philosophical and the computational – might be described as “theoretical” and it often has a strong “normative” component in the sense that it attempts to describe how we ought to learn, reason, and judge about causal relationships. Alongside this normative/theoretical work, there has also been a great deal of empirical work (hereafter Empirical Research on Causation or ERC) which focuses on how various subjects learn causal relationships, reason and judge causally. Much of this work has been carried out by psychologists, but it also includes contributions by primatologists, researchers in animal learning, and neurobiologists, and more rarely, philosophers. In my view, the interactions between these two groups – the normative/theoretical and the empirical – have been extremely fruitful. In what follows, I describe some ideas about causal reasoning that have emerged from this research, focusing on the “interventionist” ideas that have figured in my own work. -

Science of Team Science and Collaborative Research

Stephen M. Fiore, Ph.D. University of Central Florida Cognitive Sciences, Department of Philosophy and Institute for Simulation & Training Fiore, S. M. (2015). The Science of Team Science and Collaborative Research. Invited Colloquium, University of Cincinnati, Office of Research Advanced Seminar Series. October 19th, Cincinnati, OH. This work by Stephen M. Fiore, PhD is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 Unported License 2012. Not for commercial use. Approved for redistribution. Attribution required.
• Part 1. Laying Foundation for a Science of Team Science
• Part 2. Developing the Science of Team Science
• Part 3. Applying Team Theory to Scientific Collaboration
  ◦ 3.1. Of Teams and Tasks
  ◦ 3.2. Leading Science Teams
  ◦ 3.3. Educating and Training Science Teams
  ◦ 3.4. Interpersonal Skills in Science Teams
• Part 4. Resources on the Science of Team Science
ISSUE - Dealing with Scholarly Structure
• Disciplines are distinguished partly for historical reasons and reasons of administrative convenience (such as the organization of teaching and of appointments)... But all this classification and distinction is a comparatively unimportant and superficial affair. We are not students of some subject matter but students of problems. And problems may cut across the borders of any subject matter or discipline (Popper, 1963).
ISSUE - Dealing with University Structure
• What is critical to realize is that “the way in which our universities have divided up the sciences does not reflect the way in which nature has divided up its problems” (Salzinger, 2003, p. 3). To achieve success in scientific collaboration we must surmount these challenges.
Popper, K. (1963). Conjectures and Refutations: The Growth of Scientific Knowledge. -

A Comprehensive Framework to Reinforce Evidence Synthesis Features in Cloud-Based Systematic Review Tools

applied sciences Article A Comprehensive Framework to Reinforce Evidence Synthesis Features in Cloud-Based Systematic Review Tools Tatiana Person 1,*, Iván Ruiz-Rube 1, José Miguel Mota 1, Manuel Jesús Cobo 1, Alexey Tselykh 2 and Juan Manuel Dodero 1 1 Department of Informatics Engineering, University of Cadiz, 11519 Puerto Real, Spain; [email protected] (I.R.-R.); [email protected] (J.M.M.); [email protected] (M.J.C.); [email protected] (J.M.D.) 2 Department of Information and Analytical Security Systems, Institute of Computer Technologies and Information Security, Southern Federal University, 347922 Taganrog, Russia; [email protected] * Correspondence: [email protected] Abstract: Systematic reviews are powerful methods used to determine the state-of-the-art in a given field from existing studies and literature. They are critical but time-consuming in research and decision making for various disciplines. When conducting a review, a large volume of data is usually generated from relevant studies. Computer-based tools are often used to manage such data and to support the systematic review process. This paper describes a comprehensive analysis to gather the required features of a systematic review tool, in order to support the complete evidence synthesis process. We propose a framework, elaborated by consulting experts in different knowledge areas, to evaluate significant features and thus reinforce existing tool capabilities. The framework will be used to enhance the currently available functionality of CloudSERA, a cloud-based systematic review tool focused on Computer Science, to implement evidence-based systematic review processes in Citation: Person, T.; Ruiz-Rube, I.; Mota, J.M.; Cobo, M.J.; Tselykh, A.; Dodero, J.M. -

Outline of Science

Outline of science The following outline is provided as a topical overview of science: Science – systematic effort of acquiring knowledge, through observation and experimentation coupled with logic and reasoning to find out what can be proved or not proved, and the knowledge thus acquired. The word “science” comes from the Latin word “scientia” meaning knowledge. A practitioner of science is called a "scientist". Modern science respects objective logical reasoning, and follows a set of core procedures or rules in order to determine the nature and underlying natural laws of the universe and everything in it. Some scientists do not know of the rules themselves, but follow them through research policies. These procedures are known as the scientific method.
1 Essence of science
• Empirical method –
• Experimental method – The steps involved in order to produce a reliable and logical conclusion include:
1. Asking a question about a natural phenomenon
2. Making observations of the phenomenon
3. Forming a hypothesis – proposed explanation for a phenomenon. For a hypothesis to be a scientific hypothesis, the scientific method requires that one can test it. Scientists generally base scientific hypotheses on previous observations that cannot satisfactorily be explained with the available scientific theories.
4. Predicting a logical consequence of the hypothesis
5. Testing the hypothesis through an experiment – methodical procedure carried out with the goal of verifying, falsifying, or establishing the validity of a hypothesis. The 3 types of -

Experimental Philosophy, Robert Kane, and the Concept of Free Will

Journal of Cognition and Neuroethics Experimental Philosophy, Robert Kane, and the Concept of Free Will J. Neil Otte University at Buffalo, The State University of New York Biography J. Neil Otte is presently in the Ph.D. program at the University at Buffalo (SUNY) and works in moral psychology, cognitive science, and the history of philosophy. For six years, he was an adjunct lecturer in philosophy at John Jay College of Criminal Justice in New York City. In the last three years, he has been an organizer of the Buffalo Annual Experimental Philosophy Conference, an organizer for a conference on sentiment and reason in early modern philosophy, and has worked as an ontologist for the research foundation, CUBRC. Acknowledgements The author wishes to thank Gunnar Björnsson, Gregg Caruso, and Oisín Deery for their supportive comments and conversation during the Free Will Conference at the Insight Institute of Neurosurgery and Neuroscience in Fall of 2014, as well as conference organizer Jami Anderson. Publication Details Journal of Cognition and Neuroethics (ISSN: 2166-5087). March, 2015. Volume 3, Issue 1. Citation Otte, J. Neil. 2015. “Experimental Philosophy, Robert Kane, and the Concept of Free Will.” Journal of Cognition and Neuroethics 3 (1): 281–296. Experimental Philosophy, Robert Kane, and the Concept of Free Will J. Neil Otte Abstract Trends in experimental philosophy have provided new and compelling results that are cause for re-evaluations in contemporary discussions of free will. In this paper, I argue for one such re-evaluation by criticizing Robert Kane’s well-known views on free will. I argue that Kane’s claims about pre-theoretical intuitions are not supported by empirical findings on two accounts. -

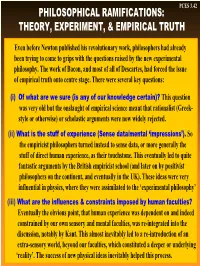

Philosophical Ramifications: Theory, Experiment, & Empirical Truth

PCES 3.42 PHILOSOPHICAL RAMIFICATIONS: THEORY, EXPERIMENT, & EMPIRICAL TRUTH Even before Newton published his revolutionary work, philosophers had already been trying to come to grips with the questions raised by the new experimental philosophy. The work of Bacon, and most of all of Descartes, had forced the issue of empirical truth onto centre stage. There were several key questions: (i) Of what are we sure (is any of our knowledge certain)? This question was very old but the onslaught of empirical science meant that rationalist (Greek-style or otherwise) or scholastic arguments were now widely rejected. (ii) What is the stuff of experience (sense data/mental ‘impressions’)? So the empiricist philosophers turned instead to sense data, or more generally the stuff of direct human experience, as their touchstone. This eventually led to quite fantastic arguments by the British empiricist school (and later on by positivist philosophers on the continent, and eventually in the UK). These ideas were very influential in physics, where they were assimilated to the ‘experimental philosophy’. (iii) What are the influences & constraints imposed by human faculties? Eventually the obvious point, that human experience was dependent on and indeed constrained by our own sensory and mental faculties, was re-integrated into the discussion, notably by Kant. This almost inevitably led to a re-introduction of an extra-sensory world, beyond our faculties, which constituted a deeper or underlying ‘reality’. The success of new physical ideas inevitably helped this process. PCES 3.43 BRITISH EMPIRICISM I: Locke & “Sensations” Locke was the first British philosopher of note after Bacon; his work is a reaction to the European rationalists, and continues to elaborate ‘experimental philosophy’. -

Peer Review of Team Science Research

Peer Review of Team Science Research J. Britt Holbrook School of Public Policy Georgia Institute of Technology 1. Overview This paper explores how peer review mechanisms and processes currently affect team science and how they could be designed to offer better support for team science. This immediately raises the question of how to define teams.[1] While recognizing that this question remains open, this paper addresses the issue of the peer review of team science research in terms of the peer review of interdisciplinary and transdisciplinary research. Although the paper touches on other uses of peer review, for instance, in promotion and tenure decisions and in program evaluation, the main

[1] Some researchers who assert the increasing dominance of teams in knowledge production may have a minimalist definition of what constitutes a team. For instance, Wuchty, Jones, and Uzzi (2007) define a team as more than a single author on a journal article; Jones (2011) suggests that a team is constituted by more than one inventor listed on a patent. Such minimalist definitions of teams need not entail any connection with notions of interdisciplinarity, since the multiple authors or inventors that come from the same discipline would still constitute a team. Some scholars working on team collaboration suggest that factors other than differences in disciplinary background – factors such as the size of the team or physical distance between collaborators – are much more important determinants of collaboration success (Walsh and Maloney 2007). Others working on the science of teams argue that all interdisciplinary research is team research, regardless of whether all teams are interdisciplinary; research on the science of teams can therefore inform research on interdisciplinarity, as well as research on the science of team science (Fiore 2008). -

Survey Experiments

IU Workshop in Methods – 2019 Survey Experiments Testing Causality in Diverse Samples Trenton D. Mize Department of Sociology & Advanced Methodologies (AMAP) Purdue University
Contents
INTRODUCTION
• Overview
• What is a survey experiment?
• What is an experiment?
• Independent and dependent variables
• Experimental Conditions
WHY CONDUCT A SURVEY EXPERIMENT?
• Internal, external, and construct validity -

A Very Useful Guide to Understanding STEM

A Very Useful Guide to Understanding STEM (Science, Technology, Engineering and Maths) By Delphine Ryan Version 2.0 Enquiries: [email protected] © 2018 Delphine Ryan – A very useful guide to understanding STEM INTRODUCTION Imagine a tree with many branches representing human knowledge. We could say that this knowledge may be split into two large branches, with one branch being science and the other being the humanities. From these two large branches, lots of smaller branches have grown, and each represents a specific subject but is still connected to the larger branches in some way. What do we mean by the humanities? The humanities refers to the learning concerned with human culture, such as literature, languages, history, art, music, geography and so on, as opposed to science. A science is an organised collection of facts about something which have been found by individuals and groups of individuals looking into such things as how birds fly, or why different substances react with each other, or what causes the seasons of the year (different temperatures, amount of daylight, etc.). More on this will be discussed in this booklet. In the following section, I aim to give you a balanced explanation of what we call the STEM subjects (science, technology, engineering and mathematics) in order to help you decide which career path would be best for you: a career in the sciences or in the humanities. I will also talk about art and its relationship to STEM subjects. And finally, I aim to give you a balanced view of the relationship between the sciences and the humanities, and to demonstrate to you, in the final section, how these two large branches of human knowledge work together, always. -

Lab Experiments Are a Major Source of Knowledge in the Social Sciences

IZA DP No. 4540 Lab Experiments Are a Major Source of Knowledge in the Social Sciences Armin Falk James J. Heckman October 2009 DISCUSSION PAPER SERIES Forschungsinstitut zur Zukunft der Arbeit Institute for the Study of Labor Lab Experiments Are a Major Source of Knowledge in the Social Sciences Armin Falk University of Bonn, CEPR, CESifo and IZA James J. Heckman University of Chicago, University College Dublin, Yale University, American Bar Foundation and IZA Discussion Paper No. 4540 October 2009 IZA P.O. Box 7240 53072 Bonn Germany Phone: +49-228-3894-0 Fax: +49-228-3894-180 E-mail: [email protected] Any opinions expressed here are those of the author(s) and not those of IZA. Research published in this series may include views on policy, but the institute itself takes no institutional policy positions. The Institute for the Study of Labor (IZA) in Bonn is a local and virtual international research center and a place of communication between science, politics and business. IZA is an independent nonprofit organization supported by Deutsche Post Foundation. The center is associated with the University of Bonn and offers a stimulating research environment through its international network, workshops and conferences, data service, project support, research visits and doctoral program. IZA engages in (i) original and internationally competitive research in all fields of labor economics, (ii) development of policy concepts, and (iii) dissemination of research results and concepts to the interested public. IZA Discussion Papers often represent preliminary work and are circulated to encourage discussion. Citation of such a paper should account for its provisional character. -

Beyond the Scientific Method

ISSUES AND TRENDS Jeffrey W. Bloom and Deborah Trumbull, Section Coeditors Beyond the Scientific Method: Model-Based Inquiry as a New Paradigm of Preference for School Science Investigations MARK WINDSCHITL, JESSICA THOMPSON, MELISSA BRAATEN Curriculum and Instruction, University of Washington, 115 Miller Hall, Box 353600, Seattle, WA 98195, USA Received 19 July 2007; revised 21 November 2007; accepted 29 November 2007 DOI 10.1002/sce.20259 Published online 4 January 2008 in Wiley InterScience (www.interscience.wiley.com). ABSTRACT: One hundred years after its conception, the scientific method continues to reinforce a kind of cultural lore about what it means to participate in inquiry. As commonly implemented in venues ranging from middle school classrooms to undergraduate laboratories, it emphasizes the testing of predictions rather than ideas, focuses learners on material activity at the expense of deep subject matter understanding, and lacks epistemic framing relevant to the discipline. While critiques of the scientific method are not new, its cumulative effects on learners’ conceptions of science have not been clearly articulated. We discuss these effects using findings from a series of five studies with degree-holding graduates of our educational system who were preparing to enter the teaching profession and apprentice their own young learners into unproblematic images of how science is done. We then offer an alternative vision for investigative science—model-based inquiry (MBI)— as a system of activity and discourse that engages learners more deeply with content and embodies five epistemic characteristics of scientific knowledge: that ideas represented in the form of models are testable, revisable, explanatory, conjectural, and generative.