LOREN: Logic Enhanced Neural Reasoning for Fact Verification

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Zathura: a Space Adventure

An intergalactic world of wonder is waiting just outside your front door in Columbia Pictures' heart-racing sci-fi family film Zathura: A Space Adventure. Zathura: A Space Adventure is the story of two squabbling brothers who are propelled into deepest, darkest space while playing a mysterious game they discovered in the basement of their old house. Now, they must overcome their differences and work together to complete the game or they will be trapped in outer space forever. SYNOPSIS After their father (Tim Robbins) leaves for work, leaving them in the care of their older sister (Kristen Stewart), six-year-old Danny (Jonah Bobo) and ten-year-old Walter (Josh Hutcherson) either get on each other's nerves or are totally bored. When their bickering escalates and Walter starts chasing him, Danny hides in a dumbwaiter. But Walter surprises him, and in retaliation, lowers Danny into their dark, scary basement, where he discovers an old tattered metal board game, "Zathura." After trying unsuccessfully to get his brother to play the game with him, Danny starts to play on his own. From his first move, Danny realizes this is no ordinary board game. His spaceship marker moves by itself and when it lands on a space, a card is ejected, which reads: "Meteor shower, take evasive action." The house is immediately pummeled from above by hot, molten meteors. When Danny and Walter look up through the gaping hole in their roof, they discover, to their horror, that they have been propelled into deepest, darkest outer space. And they are not alone. -

But, Can He Act? See If Ashton Kutcher Gets Debunk’D in Our Review of the Guardian by Peter Sobczynski

MOVIE TIMES | INTERVIEWS | REVIEWS | CROSSWORD & GAMES We talk to Christian Volckman, Ugly whose new film just like you: Renaissance America Ferrera isn’t nearly stars in the as animated new TV show as you might Ugly Betty have heard… produced by none other than Ms. Salma Hayek But, can he act? See if Ashton Kutcher gets Debunk’d in our review of The Guardian by Peter Sobczynski We Are Scientists experiment with new sounds, new tours, new albums, and… Gila monsters?! See what frontman Keith Murray has to say about all this nonsense || SEPTEMBER 29-OCTOBER 5, 2006 ENTERTAINMENT TODAY a “Sensational!” WMK Productions, Inc. Presents —Chicago Tribune “A joyous celebration Three Mo’ Tenors that blows the roof off the house!” Fri.–Sat., Oct. 6–7, 8 pm —Boston Herald Three Mo’ Tenors, the highly acclaimed music sensation, returns to the Cerritos Center for the Performing Arts. This talented trio of African-American opera singers belts out Broadway’s best, and performs heaven-sent Gospel music in a concert event for the entire family. Tickets: $63/$50/$42 Friday $67/$55/$45 Saturday Conceived and Directed by Marion J. Caffey Call (562) 467-8804 today to reserve your seats or go on-line to www.cerritoscenter.com. The Center is located directly off the 91 freeway, 20 minutes east of downtown Long Beach, 15 minutes west of Anaheim, and 30 minutes southeast of downtown Los Angeles. Parking is free. Your Favorite Entertainers, Your Favorite Theater . || ENTERTAINMENT TODAY SEPTEMBER 29-OCTOBER 5, 2006 ENTERTAINMENTVOL. 38|NO. 51|SEPTEMBER 29-OCTOBER 5, 2006 TODAYINCE S 1967 PUBLISHER KRIS CHIN MANAGING EDITOR CECILIA TSAI EDITOR MATHEW KLICKSTEIN PRODUCTION DAVID TAGARDA GRAPHICS CONSULTANT AMAZING GRAPHICS TECHNICAL SUPERVISOR KATSUYUKI UENO WRITERS JESSE ALBA JOHN BARILONE FRANK BARRON KATE E. -

SPECIAL THANK YOU 2017 MOVIE GUIDE

SPECIAL THANK YOU 2017 MOVIE GUIDE JANUARY - Amityville: The Awakening (1/6) Directed by Franck Khalfoun - Starring Bella Thorne, Jennifer Jason Leigh, Jennifer Morrison - Mena (1/6) Directed by Doug Liman - Starring Tom Cruise, Jesse Plemons - Underworld: Blood Wars (1/6) Directed by Anna Foerster - Starring Theo James, Kate Beckinsale, Charles Dance, Tobias Menzies - Hidden Figures (1/13) Directed by Theodore Melfi - Starring Taraji P. Henson, Octavia Spencer, Kirsten Dunst, Jim Parsons, Kevin Costner - Live By Night (1/13) Directed by Ben Affleck - Starring Zoe Saldana, Scott Eastwood, Ben Affleck, Elle Fanning, Sienna Miller - Monster Trucks (1/13) Directed by Chris Wedge - Starring Jane Levy, Amy Ryan, Lucas Till, Danny Glover, Rob Lowe - The Founder (1/20) Directed by John Lee Hancock - Starring Michael Keaton - The Resurrection of Gavin Stone (1/20) Directed by Dallas Jenkins - Starring Nicole Astra, Brett Dalton - Split (1/20) Directed by M. Night Shyamalan - Starring James McAvoy, Anya Taylor Joy - Table 19 (1/20) Directed by Jeffrey Blitz - Starring Anna Kendrick, Craig Robinson, June Squibb, Lisa Kudrow - xXx: The Return of Xander Cage (1/20) Directed by D.J. Caruso - Starring Toni Collette, Vin Diesel, Tony Jaa, Samuel L. Jackson - Bastards (1/27) Directed by Larry Sher - Starring Glenn Close, Ed Helms, Ving Rhames, J.K. Simmons, Katt Williams, Owen Wilson - A Dog’s Purpose (1/27) Directed by Lasse Hallstrom - Starring Peggy Lipton, Dennis Quaid, Britt Robertson - The Lake (1/27) Directed by Steven Quale - Starring Sullivan Stapleton - Resident Evil: The Final Chapter (1/27) Directed by Paul W.S. -

Recently, Celebrity Actor Dax Shepard, Who Previously Appeared

By Kerry Ferguson Recently, celebrity actor Dax Shepard, who previously A Free Training on Preparing Youth appeared on NBC’s for Adult Living, Learning & Working Parenthood and is also Planning for the future can be married to actress Kristen Bell, overwhelming for all youth but for revealed in an interview that those exiting the foster care system he had been molested as a child. Dax disclosed on The Jason it can be even more challenging Ellis Show that he was just 7 years old when he was abused by his but is also very necessary. Centering 18 year old neighbor. the plan around the transitioning Initially, Dax viewed the incident as minimal with him not telling youth’s own strengths, interests and anyone about the abuse for 12 years. He said that he blamed goals is essential in paving the way himself and even considered he may have been gay as a to a successful adult life. This reason why the abuse occurred. Dax publicly struggled with training will explain: drug and alcohol abuse in which he now feels his addiction was What “permanency” is & what it fueled by the molestation. Dax’s mother is a court-appointed means for youth & their lives What the transition process is & why advocate for children in foster care and recently shared a it is important statistic with Dax. At a seminar, Dax’s mother learned that if child Transition planning strategies & has been molested, there is only a 20% chance of them not supports becoming an addict. Unique grants/scholarship opportunities for youth Every 107 seconds a sexual assault occurs with approximately How to support youth’s 293,000 victims of sexual assault each year, this is a shocking participation in transition planning statistic. -

SPRING 2021 Victory! Congress Heeds Call to Fund Post-Viral Research

Visit our website for the latest updates on COVID-19 and ME/CFS: https://solveme.org/covid SPRING 2021 Victory! Congress Heeds Call to Fund Post-Viral Research In December 2020, Solve M.E. helped secure one of the biggest congressional investments in post- infectious disease research ever — a whopping $1.15 billion for Long COVID research, diagnostics, and clinical trials at the National Institutes of Health (NIH). One of our main focuses every year is increasing stakeholders, and met with dozens of congressional offices the federal spending dollars dedicated to research to discuss these federal funding needs. for myalgic encephalomyelitis, otherwise known as chronic fatigue syndrome, or ME/CFS. Early scientific In the letter, we warned of the “second wave” of post-vi- evidence made clear that ME/CFS and Post-Acute ral symptoms following COVID-19 and identified the gaps Sequelae of SARS-COV-2 infection (PASC) or “Post in medical and research infrastructures to address this or Long COVID-19 syndrome” have a lot in common, growing public health crisis. We urged Congress to priori- including symptoms, patient experience, and poor tize Long COVID and post-viral disease funding in the 2020 medical education. People with “Long COVID” (the Congressional COVID-19 relief packages. patient-preferred terminology) need help and have turned to the ME/CFS community to find answers. And that’s exactly what Congress did! » to page 3 Our federal affairs team quickly recognized that the ME/CFS community and the Long COVID community INSIDE can work together to call for more federal funding for 2 Solve M.E. -

Governor Blagojevich Announces Continued Growth of Illinois Film and Television Industry

FOR IMMEDIATE RELEASE June 08, 2005 Governor Blagojevich Announces Continued Growth of Illinois Film and Television Industry Illinois Film Office Releases Blockbuster Lineup of Movies and Television Projects Shooting in Illinois Bringing 5,000 Jobs and $68 Million to State’s Economy CHICAGO – Expanding on the success of the Illinois film industry in 2004, Gov. Rod R. Blagojevich today announced the continued revitalization of movie and television production within the state and introduced an impressive lineup of movies that are scheduled to be filmed in the state in the coming months. The major motion pictures come from a cross- section of Hollywood’s studios and feature popular talent, including Sandra Bullock, Keanu Reeves, Dustin Hoffman, Will Ferrell and Jennifer Aniston. Illinois’ own acting and directing stars Vince Vaughn and John Malkovich play key roles in several of the productions. These exciting projects are estimated to create 5,000 jobs and inject $68 million into the state’s economy. In 2004, projects filmed throughout the state created nearly 15,000 jobs and generated $77 million, 200 percent higher than in 2003. “Our film industry has gone from bust to boom in the past two years – bringing thousands of jobs and millions of dollars in revenue for our economy. Again and again, we have proven that our state has the talent pool, the settings and the resources needed for blockbuster films, and this continued growth reinforces what we already know: Illinois is a great place for Hollywood to do business,” Gov. Blagojevich said. Illinois’ film industry was in deep decline up until two years ago when the Governor signed Senate Bill 785, which made the state more competitive with other filming locations across the nation and around the world. -

Article: Stop the "Nazzi": Why the United States Needs a Full Ban on Paparazzi Photographs of Children of Celebrities

ARTICLE: STOP THE "NAZZI": WHY THE UNITED STATES NEEDS A FULL BAN ON PAPARAZZI PHOTOGRAPHS OF CHILDREN OF CELEBRITIES 2017 Reporter 37 Loy. L.A. Ent. L. Rev. 175 * Length: 15303 words Author: Dayna Berkowitz* * The author would like to give special thanks to Professor Mary Dant for her advice and guidance in the drafting of this article and to her family for their constant support. Text [*175] "We must protect the children because they are our future" or some variation of that phrase is often heard, whether it be in relation to education, security measures, or in some cliched apocalypse-type movie. This phrase carries substantial meaning mostly because of its truth, but also because it is a significant policy consideration in much of the legislation in the United States. Yet the legislature has overlooked a sub-sect of "the children of our future" numerous times when it comes to affording protections. That subsect is composed of the children of celebrities and they lack effective protections from paparazzi harassment. This Note proposes the strong legislation that is needed to protect the children of celebrities from the paparazzi, or as the children of celebrities refer to them: nazzis. 1 My daughter doesn't want to go to school because she knows "the men' are watching for her. They jump out of the bushes and from behind cars and who knows where else, besieging these children just to get a photo. -Halle Berry, speaking before the California Assembly Committee on Public Safety in June 2013 2 [*176] I. Introduction Halle Berry paints a grim picture of what the children of celebrities regularly experience. -

Let S Go to Prison Torrent 720P Movies Dubbed

Let S Go To Prison Torrent 720p Movies Dubbed Let S Go To Prison Torrent 720p Movies Dubbed 1 / 4 2 / 4 Let go to prison tended to be a very good comedy but the result is a comedy that limits it well and what I think is finally guilty. 1. prison escape movies tamil dubbed 2. prison movies tamil dubbed Price for him as a sanatorium where one falls for quite ridiculous crimes which can be compared to that stupid school boy but for each of them will still be punished.. Director: Bob Odenkirk Year: 2006 Starring: Dax Shepard Will Arnett Chi McBride David Koechner Dylan Baker Michael Shannon Miguel Nino Description The cause of all evils of characters hilarious black comedy became a place.. John Lyshitski is a poorer vehicle with weed problems and has been in Illinois statehood so often he knows all his people both workers and shortcomings.. I liked the movie anything but humor is hit and miss This could have been a great comedy but it was only possible to give some funny quips here and there but never mind giving you a ridiculous moment. prison escape movies tamil dubbed prison escape movies tamil dubbed, prison escape movies hindi dubbed, prison movies tamil dubbed Problems Viewing Pdf In Safari Scanf String Dev C++ Free download how to convert pdf into azw format for mac os x prison movies tamil dubbed Fotoskulpt textures Software v2 Store photosculpt textures software v2 store Grid 2 Serial Keygen Generator 3 / 4 systerac tools 6 premium serial 0041d406d9 Mcculloch Garden Vacuum Kit 0041d406d9 Dr Cleaner For Mac 4 / 4 Let S Go To Prison Torrent 720p Movies Dubbed. -

Television Academy Awards

2021 Primetime Emmy® Awards Ballot Outstanding Comedy Series A.P. Bio American Housewife B Positive black-ish Bob Hearts Abishola Breeders Bridge And Tunnel Call Me Kat Call Your Mother Chad Cobra Kai Connecting... The Conners Country Comfort The Crew Dad Stop Embarrassing Me! Dickinson Emily In Paris Everything's Gonna Be Okay The Flight Attendant For The Love Of Jason Frank Of Ireland Genera+ion Girls5eva The Goldbergs grown-ish Hacks Home Economics Kenan The Kominsky Method Last Man Standing Loudermilk Love, Victor Made For Love Master Of None The Mighty Ducks: Game Changers Millennials mixed-ish Mom Moonbase 8 Mr. Iglesias Mr. Mayor My American Family Mythic Quest The Neighborhood Pen15 The Politician Resident Alien Rutherford Falls Saved By The Bell Search Party Shameless Shrill Social Distance Special Staged Superstore Tacoma FD Ted Lasso Teenage Bounty Hunters The Unicorn United States Of Al The Upshaws Woke Young Rock Young Sheldon Younger Zoey's Extraordinary Playlist End of Category Outstanding Drama Series Absentia Age Of The Living Dead Alex Rider The Alienist: Angel Of Darkness All American All Creatures Great And Small (MASTERPIECE) All Rise American Gods Away Batwoman Big Shot Big Sky The Bite The Blacklist Blue Bloods The Bold Type The Boys Brave New World Bridgerton Bull Charmed The Chi Chicago Fire Chicago Med Chicago P.D. Chilling Adventures Of Sabrina City On A Hill Clarice The Crown Cruel Summer Cursed Debris Delilah Doom Patrol Double Cross The Equalizer The Expanse The Falcon And The Winter Soldier FBI FBI: Most Wanted Fear The Walking Dead Firefly Lane The Flash For All Mankind For Life Gangs Of London Ginny & Georgia Godfather Of Harlem The Good Doctor Good Girls Good Trouble Greenleaf Grey's Anatomy The Handmaid's Tale Hanna Helstrom His Dark Materials In The Dark In Treatment Industry The Irregulars Jupiter's Legacy Kung Fu L.A. -

In June 2020 Black Americans, Super Spreaders, Gene

PSA Celebrity Tracker 1 10/23/2020 Celebrity Name Status Additional Notes Demographic Dennis Quaid Accepted Lil Baby Pending Answer Arrested for reckless driving - Black Americans, Super spreaders, 2019, dropped "The Bigger General population Picture" in June 2020 Lil Uzi Vert Pending Answer Arrested for riding an unregistered Black Americans, Super spreaders, and uninsured dirt bike, against General population govt & politics and expressed indifference Lil Wayne Maybe; Follow-Up Discussing internally; big fan of Black Americans, Super spreaders, Obama, stated he does not like General population Republicans Beyoncé Pending Answer Has a net rating of 63% favorable Black Americans, Super spreaders, among democrats, and -3% among General population Rep. Cardi B Pending Answer Arrested with misdemeanor Black Americans, Super spreaders, assault and two counts of reckless General population endangerment in 2018, Endorsed Biden for President Eminem Pending Answer Arrested in 2001 for gun charge, Black Americans, Super spreaders, songs reflect his political views, General population against Republicans Roddy Ricch Pending Answer Arrested in 2019 on felony Black Americans, Super spreaders, domestic charges General population Ariana Grande Pending Answer Endorsed Bernie Sanders, General population, super spreaders expresses Democratic views Garth Brooks Pending Answer Stayed politically silent, General population confidently said "its always about serving" when reporter asked him if he would perform during Trumps inauguration PSA Celebrity Tracker -

Download Headshot + Resume

Theatrical Manager Voice SDB Partners 310.785.0060 323.860.0270 310.728.1081 ALY MAWJI TELEVISION CHANGES (pilot) series regular FOX/John Binkley SILICON VALLEY (seasons 1-3) recurring guest star HBO/Mike Judge WILL & GRACE guest star NBC/James Burrows 9-1-1 guest star FOX/Bradley Buecker PARKS AND RECREATION recurring NBC/Jay Karas LOOSELY EXACTLY NICOLE recurring guest star MTV/Linda Wallem, Daniella Eisman CASTLE guest star ABC/Bill Roe RIZZOLI & ISLES guest star TNT/Steve Robin GREY’S ANATOMY co-star ABC/Rob Corn FILM SIX FEET lead Prowler Films/R. Thakurathi & S. Thomas ALL MY PUNY SORROWS supporting Carousel Pictures/Michael McGowan THE DEVIL HAS A NAME supporting StoryBoard Media/Edward James Olmos LIZA KOSHY HOLIDAY SPECIAL lead YouTube/Pete Marquis & Jamie McCelland CHiPs supporting Warner Bros. Pictures/Dax Shepard REVERSION* supporting Girls with Glasses Prods/Mia Trachinger WORKER DRONE supporting ITVS (PBS)/Sharat Raju * Sundance Film Festival, official selection VOICEOVER SHARKDOG Royce (recurring) ViacomCBS/Netflix LIFE AFTER BOB Orlando/Alan Ian Cheng PINKY MALINKY Sam Nickelodeon/Netflix ABC MOUSE Jayesh ABCMouse.com THEATRE ELABORATE ENTRANCE OF CHAD DEITY V.P. Dallas Theater Center/Jaime Castañeda ANIMALS OUT OF PAPER* Suresh SF Playhouse (San Fran)/Amy Glazer BACK OF THE THROAT Asfoor Pasadena Playhouse/Damaso Rodriguez HOMELAND SECURITY Raj InterAct Theatre Co., PA/Seth Rozin ¡BOCON! Kiki/Calavera Henry Street Settlement, NYC/David Anzuelo DEAD END** Spit Henry Street Settlement, NYC/David Gaard TAMBURLAINE Theridamas -

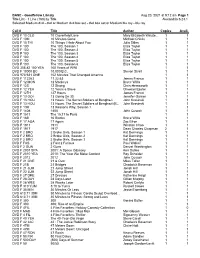

Goodfellow Library 1 Page Aug 20, 2021 at 9:12 Am Title List

BASE - Goodfellow Library Aug 20, 2021 at 9:12 am 1 Page Title List - 1 Line (160) by Title Alexandria 6.23.1 Selected:Medium dvd - dvd or Medium dvd box set - dvd box set or Medium blu ray - blu ray Call # Title Author Copies Avail. DVD F 10 CLO 10 Cloverfield Lane Mary Elizabeth Winste... 1 1 DVD F 10M 10 Minutes Gone Michael Chiklis 1 1 DVD F 10 THI 10 Things I Hate About You Julia Stiles 1 1 DVD F 100 The 100, Season 1 Eliza Taylor 1 1 DVD F 100 The 100, Season 2 Eliza Taylor 1 1 DVD F 100 The 100, Season 3 Eliza Taylor 1 1 DVD F 100 The 100, Season 4 Eliza Taylor 1 1 DVD F 100 The 100, Season 5 Eliza Taylor 1 1 DVD F 100 The 100, Season 6 Eliza Taylor 1 1 DVD 355.82 100 YEA 100 Years of WWI 1 1 DVD F 10000 BC 10,000 B.C. Steven Strait 1 1 DVD 973.931 ONE 102 Minutes That Changed America 1 1 DVD F 112263 11.22.63 James Franco 1 1 DVD F 12 MON 12 Monkeys Bruce Willis 1 1 DVD F 12S 12 Strong Chris Hemsworth 1 1 DVD F 12 YEA 12 Years a Slave Chiwetel Ejiofor 1 1 DVD F 127H 127 Hours James Franco 1 1 DVD F 13 GOI 13 Going On 30 Jennifer Garner 1 1 DVD F 13 HOU 13 Hours: The Secret Soldiers of Benghazi John Krasinski 1 1 DVD F 13 HOU 13 Hours: The Secret Soldiers of Benghazi (B..