ENNET: Inferring Large Gene Regulatory Networks from Expression Data Using Gradient Boosting

Janusz Slawek, Virginia Commonwealth University, [email protected]

Recommended publications

-

Dream Vs. Chicago

ATLANTA DREAM (4-3) vs. CHICAGO SKY (5-1) June 7, 2014 • 7 p.m. ET • TV: SPSO • RADIO: 92-9 THE GAME Philips Arena • Atlanta, Ga. Regular Season Game 8 • Home Game 5 2014 Schedule & Results PROBABLE STARTERS Date .........Opponent ....................Result/Time Pos. No. Player PPG RPG APG Notes May 11 ..... NEW YORK^ .......................W, 63-58 G 5 JASMINE THOMAS 9.7 2.6 1.9 Has scored in double figures in three of May 16 ..... SAN ANTONIO (SPSO) ....W, 79-75 5-9 • 145 • Duke last four games May 17 ..... at Indiana (FSS) .......W, 90-88 (2OT) Fourth in the league in 3-point field May 24 ..... at Chicago (NBA TV) .......... L, 73-87 8.9 2.1 1.1 G 15 TIFFANY HAYES goal pct. (.583) May 25 ..... INDIANA (SPSO) ...... L, 77-82 (OT) 5-10 • 155 • Connecticut May 30 ..... SEATTLE (SPSO) ................W, 80-69 G 35 ANGEL McCOUGHTRY 17.7 4.5 4.8 Fourth in the league in steals (2.5), June 1 ....... at Connecticut ....................... L, 76-85 seventh in scoring, ninth in assists June 3 ....... LOS ANGELES (ESPN2) ....W, 93-85 6-1 • 160 • Louisville June 7 ....... CHICAGO (SPSO) .....................7 pm F 20 SANCHO LYTTLE 10.9 9.3 2.4 Three double-doubles in last five June 13 .... MINNESOTA (SPSO) ...........7:30 pm 6-4 • 175 • Houton games June 15 .... at Washington .............................4 pm June 18 .... WASHINGTON (FSS) .............12 pm C 14 ERIKA DE SOUZA 18.3 9.7 1.3 Leads the WNBA in field goal percent- June 20 .... NEW YORK (SPSO) .............7:30 pm 6-5 • 190 • Brazil age (.683) June 22 ... -

The Origin of Dreams: a Psychobiological Approach

THE ORIGIN OF DREAMS: A PSYCHOBIOLOGICAL APPROACH Joseph Griffin B.Sc. The London School of Economics and Political Science 1996 Thesis submitted in partial fulfilment of the requirements for the degree of Master of Philosophy in the faculty of Science, University of London. - 1 - UMI Number: U615417 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. Dissertation Publishing UMI U615417 Published by ProQuest LLC 2014. Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, Ml 48106-1346 I i-hES ES F 71+os Corrections p line 33 17 unsuitable 38 4 keeper’ 39 last 1 ine If 42 20 insert ’told’ 43 5 there (not their) 49 3 from bottom delete ’releasing’ G3 G comma after discharge 123 13 spat ial 125 13 imagined 12 G 19 spat ial 13G last 1 ine Freud U3 13 Leopold 1G2 11 insert *u’ in stimulus 1G 7 7 th of quote I 17G 11 Hall 187 Strachey ABSTRACT This thesis puts forward three hypotheses concerning the origin, meaning and function of dreaming. Hypothesis no. 1. Dreams are the sensory analogue of emotionally arousing introspections from waking (activated drive - schemata) not manifested or acted out during waking. -

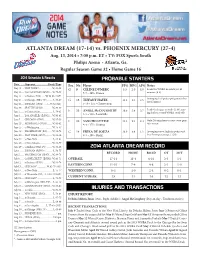

Aug. 13 Vs. Phoenix.Indd

ATLANTA DREAM (17-14) vs. PHOENIX MERCURY (27-4) Aug. 13, 2014 • 7:00 p.m. ET • TV: FOX Sports South Philips Arena • Atlanta, Ga. Regular Season Game 32 • Home Game 16 2014 Schedule & Results PROBABLE STARTERS Date .........Opponent ....................Result/Time Pos. No. Player PPG RPG APG Notes May 11 .....NEW YORK^ .......................W, 63-58 G 9 CÉLINE DUMERC 3.3 2.0 4.0 Leads the WNBA in assists per 40 May 16 .....SAN ANTONIO (SPSO) ....W, 79-75 5-7 • 145 • France minutes (8.9) May 17 .....at Indiana (FSS) .......W, 90-88 (2OT) Averaging 15.9 points per game in her May 24 .....at Chicago (NBA TV) .......... L, 73-87 G 15 TIFFANY HAYES 13.2 3.0 2.6 last 15 games May 25 .....INDIANA (SPSO) ...... L, 77-82 (OT) 5-10 • 155 • Connecticut May 30 .....SEATTLE (SPSO) ................W, 80-69 F 35 ANGEL McCOUGHTRY 19.0 5.4 3.7 Leads the league in steals (2.48), aim- June 1 .......at Connecticut .......................L, 76-85 ing for her second WNBA steals title June 3 .......LOS ANGELES (ESPN2) ....W, 93-85 6-1 • 160 • Louisville June 7 .......CHICAGO (SPSO) ..............W, 97-59 F 20 SANCHO LYTTLE 12.4 9.2 2.4 Only Dream player to start every game June 13 .... MINNESOTA (SPSO) .........W, 85-82 6-4 • 175 • Houton this season June 15 .... at Washington ......................W, 75-67 June 18 .... WASHINGTON (FSS) ........W, 83-73 C 14 ERIKA DE SOUZA 13.9 8.9 1.2 Averaging career highs in points and June 20 .... NEW YORK (SPSO) ...........W, 85-64 6-5 • 190 • Brazil free throw percentage (.720) June 22 ... -

CSR Report 2016 Web Edition

210mm 背幅:2mm(フル版) CSR Report 2016 Web Edition Make Your Dreams Happen この印刷物は責任ある管理がされた森林からの材を含む FSC® 認証紙を使用しています。 インキは環境負荷の少ない植物油インキを使用しています。 KANEKA CSR REPORT 2016 Contents CSR .................................................................................................................................................................... 2 Top Commitment ................................................................................................................................................. 4 Special Features ................................................................................................................................................... 6 Special FeatureⅠ Food Production Support ........................................................................................................... 7 Special Feature II Health Care ........................................................................................................................... 14 Stakeholder Dialogue (Full Report) ..................................................................................................................... 19 Communication Is Our Start Line ....................................................................................................................... 24 KANEKA at a glance ......................................................................................................................................... 27 CSR Topics 2015 ............................................................................................................................................ -

CSR Report 2016 Web Edition

210mm 背幅:2mm(フル版) CSR Report 2016 Web Edition Make Your Dreams Happen この印刷物は責任ある管理がされた森林からの材を含む FSC® 認証紙を使用しています。 インキは環境負荷の少ない植物油インキを使用しています。 KANEKA CSR REPORT 2016 Contents CSR .................................................................................................................................................................... 2 Top Commitment ................................................................................................................................................. 4 Special Features ................................................................................................................................................... 6 Special FeatureⅠ Food Production Support ........................................................................................................... 7 Special Feature II Health Care ........................................................................................................................... 14 Stakeholder Dialogue (Full Report) ..................................................................................................................... 19 Communication Is Our Start Line ....................................................................................................................... 24 KANEKA at a glance ......................................................................................................................................... 27 CSR Topics 2015 ............................................................................................................................................ -

Hdnet Schedule for Mon. March 19, 2012 to Sun. March 25, 2012

HDNet Schedule for Mon. March 19, 2012 to Sun. March 25, 2012 Monday March 19, 2012 3:30 PM ET / 12:30 PM PT 6:00 AM ET / 3:00 AM PT HDNet Fights IMAX - Dinosaurs: Giants of Patagonia 12 Fighters to Watch in 2012 - Michael Schiavello hosts a special highlighting twelve MMA This documentary-style narrative focuses on the history, evolution and extinction of the Fighters to watch on HDNet in 2012. dinosaurs. 5:00 PM ET / 2:00 PM PT 7:00 AM ET / 4:00 AM PT Prison Break Cheers Sweet Caroline - Michael pays the consequences, but finally comes face to face with the No Help Wanted - Influenced by the Cheers clan, Sam goes against his better judgements president; an unlikely alliance forms between Bellick and Sucre; T-Bag loses his money and and hires unhappy and unemployed Norm to do his taxes. C-Note’s offered an out if he double-crosses Mahone. 7:30 AM ET / 4:30 AM PT 6:00 PM ET / 3:00 PM PT Cheers JAG Coachie Makes Three - The omnipresent Coach is oblivious to the fact that he’s interfering Rogue - Ex-Navy SEAL Jack Raglan leads a nighttime raid on the Army garrison at Ft. Mon- with Sam and Diane’s love life. The trio winds up watching the late show together every roe, Virginia. The purpose: to test the garrison’s security measures against terrorist attack. evening at Diane’s apartment! 7:00 PM ET / 4:00 PM PT 8:00 AM ET / 5:00 AM PT JAG Smallville The Colonel’s Wife - In the high desert town of “Golden Hill,” California, a police SWAT team Recruit - In an effort to recruit Clark for the university’s football team, Metropolis University raids a modest suburban home. -

Kaneka Group CSR Report 2016

210mm 背幅:2mm(フル版) CSR Report 2016 Web Edition Make Your Dreams Happen この印刷物は責任ある管理がされた森林からの材を含む FSC® 認証紙を使用しています。 インキは環境負荷の少ない植物油インキを使用しています。 KANEKA CSR REPORT 2016 Contents CSR .................................................................................................................................................................... 2 Top Commitment ................................................................................................................................................. 4 Special Features ................................................................................................................................................... 6 Special FeatureⅠ Food Production Support ........................................................................................................... 7 Special Feature II Health Care ........................................................................................................................... 14 Stakeholder Dialogue (Full Report) ..................................................................................................................... 19 Communication Is Our Start Line ....................................................................................................................... 24 KANEKA at a glance ......................................................................................................................................... 27 CSR Topics 2015 ............................................................................................................................................ -

MMA Encyclopedia / Jonathan Snowden and Kendall Shields

00MMAEncycl_i-iv__ 02/09/10 3:54 PM Page i ECW Press 00MMAEncycl_i-iv__ 02/09/10 3:54 PM Page ii Copyright © Jonathan Snowden and Kendall Shields, 2010 Published by ECW Press 2120 Queen Street East, Suite 200, Toronto, Ontario, Canada m4e 1e2 416-694-3348 [email protected] All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form by any process — electronic, mechanical, photocopying, recording, or other- wise — without the prior written permission of the copyright owners and ECW Press. The scanning, uploading, and distribution of this book via the Internet or via any other means without the permission of the publisher is illegal and punishable by law. Please purchase only authorized electronic editions, and do not participate in or encourage electronic piracy of copyrighted materials. Your support of the authors’ rights is appreciated. library and archives canada cataloguing in publication Snowden, Jonathan, 1975- The MMA encyclopedia / Jonathan Snowden and Kendall Shields. Includes bibliographical references. isbn 978-1-55022-923-3 1. Mixed martial arts--Encyclopedias. i. Shields, Kendall ii. Title. gv1102.7.m59s65 2010 796.81503 c2010-901256-9 Developing Editor: Michael Holmes Cover Design: Dave Gee Text Design: Tania Craan Color Section Design: Rachel Ironstone Typesetting: Gail Nina Photos copyright © Peter Lockley, 2010 Printing: Solisco Tri-Graphic 1 2 3 4 5 The publication of The MMA Encyclopedia has been generously supported by the Government of Ontario through Ontario Book Publishing Tax Credit, by the OMDC Book Fund, an initiative of the Ontario Media Development Corporation, and by the Government of Canada through the Canada Book Fund. -

Indiana Standardbred Stallion Directory

PRSRT STD US POSTAGE PAID 2021 BOURBON IN PERMIT 29 Indiana Standardbred Association 311 American Legion Place Greenfield, IN 46140 2021 INDIANA STANDARDBRED STALLION DIRECTORY STALLION 2021 INDIANA STANDARDBRED INDIANA STANDARDBRED STALLION DIRECTORY Breeders Crown Champion Standing in Indiana in 2021 WORLD CLASS RACINGINDIANA ROOTS LIVE RACING 2021 MARCH 26 - DECEMBER 4 Live racing returns with an expanded season, faster BECKHAMSBECKHAMS ZZ TAMTAM Dean Gillette Photo action, richer rewards, bigger Always A Virgin – Sara’s Lucky Charm – Electric Yankee promotions, and a superior Always A Virgin – Sara’s Lucky Charm – Electric Yankee experience. It’s world-class p,2, Q1:58.2s; 3, 1:50.1s; 1:49s; BT 1:48-’19 ($800,074) racing with Indiana roots. Indiana racing fans will remember Beckhams Z Tam as a gritty racehorse that hit the board in nearly 70 percent of his career starts, featuring a wicked kick coming home. That explosive last quarter earned the son of Always A Virgin a Breeders Crown championship as a three year old, defeating favored Downbytheseaside in the $527,500 final. Beckhams Z Tam finished his career Visit HarrahsHoosierPark.com with 17 wins and a mark of 1:49, taken at age four. His feats of speed over 73 starts include: for more info. Pacing miles in 1:50 or faster 19 times, and miles in 1:51 or faster 38 times Pacing last quarters in under 27 seconds 31 times, including twice as a two year old Storming home in under 26 seconds in five career starts Beckhams Z Tam has a small crop of yearlings this season, and the early reports indicate they are athletic free-legged pacers with no hobbles needed. -

10.16.14 Kids Unbranded FIRST

Kids Unbranded TIPS FOR PARENTING IN A COMMERCIAL CULTURE Created and distributed by The Center for a New American Dream Find us at newdream.org "We have become a nation that places a lower priority on teaching its children how to thrive socially, intellectually, even spiritually, than it does on training them to consume. The long-term consequences of this development are ominous.” Juliet Schor 1 Contents 8 Children as Targets 13 Harming Our Children’s Well-Being 16 Commercialism and the Environment 19 Protecting Our Children: Who’s Responsible? 24 Commercial Television 31 Beyond the Tube 38 Print Media 43 Toys and Activities 50 Breaching the School’s Walls 55 Junk Food Marketing 62 Raising Your Kids in a Non-Commercial Culture 68 Changing Laws and Corporate Behavior 78 Resources 84 Endnotes This booklet was written and published by the Center for a New American Dream. Our mission is to help Americans reduce and shift their consumption to improve quality of life, protect the environment, and promote social justice. Since our founding in 1997, we have raised awareness of the negative impact of a hyper-consumer culture. We work with individuals, institutions, businesses, and communities to conserve natural resources, counter the commercialization of our culture, support community engagement, and promote positive changes in the way goods are produced and consumed. New Dream seeks to change social norms around consumption and consumerism and to support the local movement of individuals and communities pursuing lifestyle and community action. In a society often fixated on “more,” we focus on more of what matters: a cleaner environment, a higher quality of life, and a greater commitment to justice. -

ATLANTA DREAM Vs SEATTLE STORM May 14 Connecticut* L, 67-78 Twitter Atlanta, Ga

2021 REGULAR SEASON SCHEDULE Date Opponent Time (ET)/Result TV ATLANTA DREAM VS SEATTLE STORM May 14 Connecticut* L, 67-78 Twitter Atlanta, Ga. • Gateway Center Arena @ College Park May 19 Chicago* L, 77-85 Facebook Wednesday, June 9 at 7:00 p.m. ET May 21 at Indiana* W, 83-79 Twitter Game 9 • Bally Sports Southeast PxP: Angel Gray • Color: LaChina Robinson May 25 at Chicago* W, 90-83 CBSSN Explorer Rebel May 27 Dallas W, 101-95 BSSO May 29 at New York* W, 90-87 Prime Video June 4 at Minnesota L, 84-86 Facebook PROBABLE STARTERS June 6 at Minnesota L, 80-100 BSSE G #0 ODYSSEY SIMS 5-8 | 163 | Baylor June 9 Seattle 7:00 p.m. BSSE Led the team with 14 points in her Dream debut against Connecticut (5/14), adding on four rebounds, June 11 Seattle 8:00 p.m. CBSSN two assists and a steal ... Picked up two steals against Chicago (5/19) ... Scored 7 points with a sea- June 13 Washington* 3:30 p.m. BSSO/NBA TV son-high-tying 4 rebounds against Dallas (5/27) ... Started and led the team with 6 assists at MIN (6/4). June 17 at Washington* 7:00 p.m. June 23 Minnesota 7:00 p.m. BSSE Alt G #10 COURTNEY WILLIAMS 5-8 | 133 | South Florida June 26 New York* 7:00 p.m. BSSO 2021: Double-digit scoring in every one of the Dream’s six games so far this season ... Recorded her June 29 New York 7:00 p.m. -

Structural Dream Analysis Manual and Case Illustration the Aim of This

Prof. Dr. Christian Roesler [email protected] Structural dream analysis Manual and case illustration The aim of this research project is to create and apply a scientific method for identifying archetypal patterns in dream series from analytical psychotherapies. In Jungian psychology it is assumed that dreams appear as a product of the individuation process to guide the person towards greater wholeness. In psychotherapeutic processes it is assumed that dreams give hints from the unconscious on the central inner psychic problems and/or conflicts, but they also contain elements to cope with these problems. During the course of a psychotherapy the Jungian psychotherapist assumes that the series of dreams follows an inner structure of meaning. Structural dream analysis is a scientific method which tries to identify this inner structure of meaning from the series of dreams alone without referring to additional information about the dreamer, the psychodynamics or the course of psychotherapy. As a scientific method it aims at realizing as much objectivity as possible, a systematic and transparent application of a detailed manual of interpretive steps. As the dreams can only be understood in their meaning by the use of interpretation there is no alternative to using an interpretive method. In qualitative research systematic and objective methods of using interpretive steps have been developed and the method of structural dream analysis makes use of this development. It draws especially on narratological methods. In this sense the dreams are understood as narratives which makes the application of narrative interpretation possible. A detailed manual for the method has been developed which makes the process of interpretation transparent and independent from the interpreter.