Signature Redacted Author

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Satellite Regulatory and Usage in Indonesia

Ministry of Communication and Information Technology, Toward The Indonesian Information Society. Satellite Regulatory and Usage in Indonesia. ITU/MIC International Satellite Symposium 2015, 30 September - 1 October 2015, Danang City, Vietnam. Directorate General of Resource Management and Equipment Standard of Post and Information Technology.
Background: The largest archipelago country: 13,466 islands (already have coordinates and registered); source: Geospatial Information Indonesia (BIG), May 2014. Total land area: 1,919,440 km2 (land: 1,826,440 km2, inland water: 93,000 km2); source: Statistics Indonesia (BPS), May 2014.
The Role of Satellite in Indonesia (1/2): Backbone network in Indonesia: no access and terrestrial backbone. Access network (cellular) in Indonesia: still needed.
The Role of Satellite in Indonesia (2/2): Backbone Network: Fiber Optic • Lack of terrestrial backbone network in the eastern part of Indonesia due to geographical conditions • Terrestrial access network has not covered the entire Indonesian territory • Blank spot areas are served only by satellite infrastructure. Access Network: Cellular network • Satellite plays an important role in connecting Indonesia and serving the unserved areas • Indonesia is highly dependent on satellite.
Overview: Satellite Industry in Indonesia: Indonesian satellite operators: 119 C-band and 5 Ku-band transponders (txp). Not enough supply from national satellite operators. From 2009-2016: no added capacity from national operators. To meet demand, foreign satellites can provide service in Indonesia through national telco and broadcasting operators. There are 34 foreign satellites providing service in Indonesia.
[Bar chart: Number of Foreign Satellites Providing Service in Indonesia, 2009-2014; data labels 5, 9, 10, 18, 22, 34] -

MRA Leadership

MRA Leadership
President: Neil Van Dyke, Stowe Mountain Rescue
Vice President: Doug Wesson, Juneau Mountain Rescue
Past President: Charley Shimanski
Secretary/Treasurer: John Chang, Bay Area Mountain Rescue Unit
At-Large Member: Jim Frank, Santa Barbara County SAR
At-Large Member: Dave Clarke, Portland Mountain Rescue, Cell: 503-784-6341
Executive Secretary: Kayley Trujillo
Corporate correspondence to: Mountain Rescue Association, PO Box 880868, San Diego, CA 92168-0868
July 2010 contents: MRA Member Guides NASA On Undersea Exploration Analog Mission; About Steve Chappell; A Letter From Our New President; Suspension Syndrome; Commentary from MRA Medical Committee Chair Skeet Glatterer, M.D.; Five Colorado SAR Teams Receive Prestigious NASAR Award; 2010 MRA Spring Conference Report; Book Review: Mountain Responder; International Tech Rescue Symposium; Himalayan First: Standby Rescue Helicopters; Don't Just Do Something: Stand There!; National Search and Rescue Week Designated. Cover photo by NASA.
MRA Sponsors: Thanks to the corporate supporters of the MRA. Please support those that generously support us!
©2010 Mountain Rescue Association. All rights reserved. All content ©MRA or as otherwise noted. Permission to reprint granted to MRA units in good standing with the MRA.
MRA Member Guides NASA On Undersea Exploration Analog Mission. Parts reprinted with permission from NASA. From May 10 - 24, 2010, two astronauts, a veteran undersea engineer and an experienced scientist embarked on the 14th NASA Extreme Environment Mission Operations (NEEMO) undersea analog mission at the Aquarius undersea laboratory. -

Spotlight on Asia-Pacific

Worldwide Satellite Magazine, June 2008. SatMagazine: Spotlight On Asia-Pacific * The Asia-Pacific Satellite Market Segment * Expert analysis: Tara Giunta, Chris Forrester, Futron, Euroconsult, NSR and more... * Satellite Imagery: The Second Look * Diving Into the Beijing Olympics * Executive Spotlight, Andrew Jordan * The Pros Speak: Mark Dankberg, Bob Potter, Adrian Ballintine... * Checking Out CommunicAsia + O&GC3 * Thuraya-3 In Focus
SATMAGAZINE JUNE 2008 CONTENTS
COVER FEATURE: The Asia-Pacific Satellite Market, by Hartley & Pattie Lesser. The opportunities, and challenges, facing the Asia-Pacific satellite market are enormous.
EXECUTIVE SPOTLIGHT: Andrew Jordan, President & CEO, SAT-GE.
FEATURES: Let The Games Begin... by Silvano Payne, Hartley & Pattie Lesser, and Kevin and Michael Fleck. The Beijing Olympic Games are expected to find some 800,000 visitors arriving in town for the 17-day event.
Transforming Satellite Broadband, by Mark Dankberg. The first time the "radical" concept of a 100 Gbps satellite was introduced was four years ago,
Here's Looking At Everything, Part II, by Hartley & Pattie Lesser. The Geostationary Operational Environmental Satellites (GOES) continuously track evolution of weather over almost a hemisphere.
INSIGHT: High Stakes Patent Litigation, by Tara Giunta, Robert M. Masters, and Erin Sears. Like it or not, high stakes patent wars are waging in the global satellite sector, and it is safe to assume that they are here to stay.
TBS: Looking At Further Diversification, by Chris Forrester. Internationally, Turner Broadcasting has always walked hand-in-hand with the growth of satellite and cable, and now IPTV.
The Key To DTH Success In Asia, by Jose del Rosario. Some are eyeing Asia as a haven for economic safety or even economic growth amidst the current global -

Appendix Program Managers/Acknowledgments

Appendix: Flight Information; Program Managers/Acknowledgments; Selected Readings; Acronyms; Contributors' Biographies; Index; Image of a Legacy: The Final Re-entry.
Flight Information
Space Shuttle flights (STS flight no.; orbiter; crew members; launch date; approx. mission days):
1; Columbia; John Young (Cdr), Robert Crippen (Plt); 4/12/1981; 2
2; Columbia; Joe Engle (Cdr), Richard Truly (Plt); 11/12/1981; 2
3; Columbia; Jack Lousma (Cdr), Gordon Fullerton (Plt); 3/22/1982; 8
4; Columbia; Thomas Mattingly (Cdr), Henry Hartsfield (Plt); 6/27/1982; 7
5; Columbia; Vance Brand (Cdr), Robert Overmyer (Plt), William Lenoir (MS), Joseph Allen (MS); 11/11/1982; 5
6; Challenger; Paul Weitz (Cdr), Karol Bobko (Plt), Story Musgrave (MS), Donald Peterson (MS); 4/4/1983; 5
Orbiter Enterprise Approach and Landing Test Flights and Crew
Captive-Active Flights: High-speed taxi tests that proved the Shuttle Carrier Aircraft, mated to Enterprise, could steer and brake with the Orbiter perched on top of the airframe. These flights featured two-man crews. (Flight no.; crew members; test date; mission length):
1; Fred Haise (Cdr), Gordon Fullerton (Plt); 6/18/1977; 55 min 46 s
2; Joseph Engle (Cdr), Richard Truly (Plt); 6/28/1977; 62 min 0 s
3; Fred Haise (Cdr), Gordon Fullerton (Plt); 7/26/1977; 59 min 53 s
Free Flights: Flights during which Enterprise separated from the Shuttle Carrier Aircraft and landed at the hands of a two-man crew. (Flight no.; crew members; test date; mission length):
1; Fred Haise (Cdr), Gordon Fullerton (Plt); 8/12/1977; 5 min 21 s
2; Joseph Engle (Cdr), Richard Truly (Plt); 9/13/1977; 5 min 28 s
3; Fred Haise (Cdr), Gordon Fullerton (Plt); 9/23/1977; 5 min 34 s
4; Joseph Engle (Cdr), Richard Truly (Plt); 10/12/1977; 2 min 34 s
5; Fred Haise (Cdr), Gordon Fullerton (Plt); 10/26/1977; 2 min 1 s
The Space Shuttle Numbering System: The first nine Space Shuttle flights were numbered in sequence from STS-1 to STS-9. -

Characteristics of TLE Data and Their Processing

KARAKTERISTIK DATA TLE DAN PENGOLAHANNYA (Characteristics of TLE Data and Their Processing). Abd. Rachman, Researcher, Center for Space Science Utilization, LAPAN.
ABSTRACT: Historical two-line element (TLE) data from 117 satellites were processed with a computer program developed using the SGP4 model. The results reveal that historical TLE data often contain duplicate elsets. They also show that differences between orbital element values processed with the SGP4 model and those not processed with it occur mostly in eccentricity, argument of perigee, and mean anomaly. In addition, prediction using the SGP4 model is very sensitive to the input TLE.
1 INTRODUCTION: In satellite orbit research, a widely used data source is the two-line element set (TLE set) issued by NORAD (North American Aerospace Defense Command). These data are published on the internet through NASA/Goddard and can be accessed via www.space-track.org or www.celestrak. [...] satellite orbits, as in the study of the effect of changes in solar activity on LEO satellite orbits (Sinambela, 1996). As with any data used in research, TLE data also require processing. A dedicated study of TLE data is needed in order to obtain techniques -
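The duplicated elsets mentioned in the abstract can be illustrated with a small sketch. This is not the author's program: it assumes that two elsets count as duplicates when they share the same catalog number and epoch, read from the standard fixed columns of TLE line 1 (columns 3-7 and 19-32). The names `elset_key` and `drop_duplicate_elsets` are hypothetical.

```python
from typing import Iterable, List, Tuple

# One element set: (TLE line 1, TLE line 2).
Elset = Tuple[str, str]


def elset_key(line1: str) -> Tuple[str, str]:
    """Return (catalog number, epoch) from the fixed columns of TLE line 1.

    Columns 3-7 hold the satellite catalog number and columns 19-32 hold
    the epoch (two-digit year plus fractional day of year).
    """
    return line1[2:7].strip(), line1[18:32].strip()


def drop_duplicate_elsets(elsets: Iterable[Elset]) -> List[Elset]:
    """Keep the first elset seen for each (catalog number, epoch) pair.

    Assumption: "duplicate" means same satellite and same epoch; the
    source does not spell out the criterion it used.
    """
    seen = set()
    unique: List[Elset] = []
    for line1, line2 in elsets:
        key = elset_key(line1)
        if key in seen:
            continue  # same satellite and epoch already kept
        seen.add(key)
        unique.append((line1, line2))
    return unique
```

Keying on the epoch string rather than on a parsed float avoids rounding issues when comparing epochs, at the cost of treating differently formatted but equal epochs as distinct.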

Transfer of Ownership in Orbit: from Fiction to Problem

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. Space, Cyber, and Telecommunications Law Program Faculty Publications, Law, College of, 2017. Transfer of Ownership in Orbit: From Fiction to Problem. Frans von der Dunk. A chapter in Ownership of Satellites: 4th Luxembourg Workshop on Space and Satellite Communication Law, Mahulena Hofmann and Andreas Loukakis (editors), Baden-Baden, Germany: Nomos Verlagsgesellschaft and Hart Publishing, 2017, pp. 29–43. Copyright © 2017 Nomos Verlagsgesellschaft. Used by permission.
Abstract: For many years, the concept of transfer of ownership of a satellite in orbit was not something on the radar screen of anyone seriously involved in space law, if indeed it was not considered a concept of an essentially fictional nature. Space law after all developed, as far as the key UN treaties were concerned, in a period when only States – and only very few States at that – were interested in and possessed the capability of conducting space activities, and they did so for largely military/strategic or scientific purposes. -

Fiscal Year 2013 Activities

Aeronautics and Space Report of the President, Fiscal Year 2013 Activities. NP-2014-10-1283-HQ.
The National Aeronautics and Space Act of 1958 directed the annual Aeronautics and Space Report to include a “comprehensive description of the programmed activities and the accomplishments of all agencies of the United States in the field of aeronautics and space activities during the preceding calendar year.” In recent years, the reports have been prepared on a fiscal-year basis, consistent with the budgetary period now used in programs of the Federal Government. This year’s report covers activities that took place from October 1, 2012, through September 30, 2013. Please note that these activities reflect the Federal policies of that time and do not include subsequent events or changes in policy.
TABLE OF CONTENTS: National Aeronautics and Space Administration • Human Exploration and Operations Mission Directorate • Science Mission Directorate • Aeronautics Research Mission Directorate • Space Technology Mission Directorate; Department of Defense; Federal Aviation Administration; Department of Commerce; Department of the Interior; Federal Communications Commission; U.S. Department of Agriculture -

Consortium for National Space Resources Access (CNSA): A National Space Resources Access Strategy in Disruptive Time

CONSORTIUM FOR NATIONAL SPACE RESOURCES ACCESS (CNSA): A NATIONAL SPACE RESOURCES ACCESS STRATEGY IN DISRUPTIVE TIME. Meiditomo Sutyarjoko, Yuliawan Cahya Pamungkas, PT. Bank Rakyat Indonesia (Persero) Tbk.
ABSTRACT: The history of space technology development goes back to the World War II era and was fueled by the “space race”. Indonesia was one of the early adopters of GEO satellite communication technology, yet it remains largely a satellite operator, despite a national program to master space technology. Mastering space technology is not an easy task, and so far India is the only developing country that has been able to reach rank #13 on the Space Technology Ladder. The conservatism of space technology, particularly GEO satellite technology, which discourages innovation, appears to be shifting owing to recent structural change in the industry. Non-GSO satellites seem to lead the Disruptive Satellite Technology (DST), as is evident from the advancement of start-ups in the satellite industry. Increased leverage for satellite operators, proposed to be achieved through the CNSA model, should lead to better-rounded orchestration of satellite coordination and more benefit from the satellite procurement processes. Aggregating the satellite procurement processes should benefit the procurement investment amount; technology transfer in the form of training, program offset, or increased local content should be less challenging; and satellite operators who are members of the consortium may procure only the satellite capacity needed in their business plans.
Keywords: Space Resources, Space Access, Space Technology, Technology Disruption, Competitiveness -

United States Space Program Firsts

KSC Historical Report 18, KHR-18, Rev. December 2003. UNITED STATES SPACE PROGRAM FIRSTS: Robotic & Human Mission Firsts. Kennedy Space Center Library Archives, Kennedy Space Center, Florida.
Foreword: This summary of the United States space program firsts was compiled from various reference publications available in the Kennedy Space Center Library Archives. The list is divided into four sections: Robotic mission firsts, Human mission firsts, Space Shuttle mission firsts and Space Station mission firsts. Researched and prepared by: Barbara E. Green, Kennedy Space Center Library Archives, Kennedy Space Center, Florida 32899, phone: [321] 867-2407.
Contents: Robotic Mission Firsts (Satellites, missiles and rockets, 1950 - 1986); Early Human Spaceflight Firsts (Projects Mercury, Gemini, Apollo, Skylab and Apollo Soyuz Test Project, 1961 - 1975); Space Shuttle Firsts (Space Transportation System, 1977 - 2003); Space Station Firsts (International Space Station, 1998-2___); Bibliography.
DATE; ROBOTIC EVENTS; MISSION
07/24/1950; First missile launched at Cape Canaveral.; Bumper V-2
08/20/1953; First Redstone missile was fired.; Redstone 1
12/17/1957; First long range weapon launched.; Atlas ICBM
01/31/1958; First satellite launched by U.S.; Explorer 1
10/11/1958; First observations of Earth’s and interplanetary magnetic field.; Pioneer 1
12/13/1958; First capsule containing living cargo, squirrel monkey, Gordo. Although not a NASA mission, data was utilized in Project Mercury planning.; Bioflight 1
12/18/1958; First communications satellite placed in space. Once in place, Brigadier General Goodpaster passed a message to President Eisenhower; Project Score
02/17/1959; First fully instrumented Vanguard payload. -

Space Reporter's Handbook Mission Supplement

The CBS News Space Reporter's Handbook, Mission Supplement. Shuttle Mission STS-127/ISS-2JA: Station Assembly Enters the Home Stretch. Written and produced by William G. Harwood, CBS News Space Analyst. CBS News, 6/15/09.
Revision History. Editor's Note: Mission-specific sections of the Space Reporter's Handbook are posted as flight data becomes available. Readers should check the CBS News "Space Place" web site in the weeks before a launch to download the latest edition: http://www.cbsnews.com/network/news/space/current.html
DATE; RELEASE NOTES
06/10/09; Initial STS-127 release
06/15/09; Updating to reflect launch delay to 6/17/09
Introduction: This document is an outgrowth of my original UPI Space Reporter's Handbook, prepared prior to STS-26 for United Press International and updated for several flights thereafter due to popular demand. The current version is prepared for CBS News. As with the original, the goal here is to provide useful information on U.S. and Russian space flights so reporters and producers will not be forced to rely on government or industry public affairs officers at times when it might be difficult to get timely responses. All of these data are available elsewhere, of course, but not necessarily in one place. The STS-127 version of the CBS News Space Reporter's Handbook was compiled from NASA news releases, JSC flight plans, the Shuttle Flight Data and In-Flight Anomaly List, NASA Public Affairs and the Flight Dynamics office (abort boundaries) at the Johnson Space Center in Houston. -

China's Ambitions in Space

SECTION 3: CHINA’S AMBITIONS IN SPACE: CONTESTING THE FINAL FRONTIER
Key Findings
• China’s goal to establish a leading position in the economic and military use of outer space, or what Beijing calls its “space dream,” is a core component of its aim to realize the “great rejuvenation of the Chinese nation.” In pursuit of this goal, China has dedicated high-level attention and ample funding to catch up to and eventually surpass other spacefaring countries in terms of space-related industry, technology, diplomacy, and military power. If plans hold to launch its first long-term space station module in 2020, it will have matched the United States’ nearly 40-year progression from first human spaceflight to first space station module in less than 20 years.
• China views space as critical to its future security and economic interests due to its vast strategic and economic potential. Moreover, Beijing has specific plans not merely to explore space, but to industrially dominate the space within the moon’s orbit of Earth. China has invested significant resources in exploring the national security and economic value of this area, including its potential for space-based manufacturing, resource extraction, and power generation, although experts differ on the feasibility of some of these activities.
• Beijing uses its space program to advance its terrestrial geopolitical objectives, including cultivating customers for the Belt and Road Initiative (BRI), while also using diplomatic ties to advance its goals in space, such as by establishing an expanding network of overseas space ground stations. China’s promotion of launch services, satellites, and the Beidou global navigation system under its “Space Silk Road” is deepening participants’ reliance on China for space-based services. -

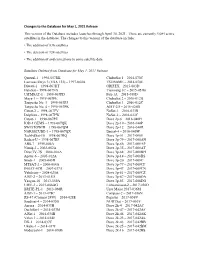

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

Changes to the Database for May 1, 2021 Release
This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include:
• The addition of 836 satellites
• The deletion of 124 satellites
• The addition of and corrections to some satellite data
Satellites Deleted from Database for May 1, 2021 Release
Quetzal-1 – 1998-057RK; ChubuSat 1 – 2014-070C; Lacrosse/Onyx 3 (USA 133) – 1997-064A; TSUBAME – 2014-070E; Diwata-1 – 1998-067HT; GRIFEX – 2015-003D; HaloSat – 1998-067NX; Tianwang 1C – 2015-051B; UiTMSAT-1 – 1998-067PD; Fox-1A – 2015-058D; Maya-1 – 1998-067PE; ChubuSat 2 – 2016-012B; Tanyusha No. 3 – 1998-067PJ; ChubuSat 3 – 2016-012C; Tanyusha No. 4 – 1998-067PK; AIST-2D – 2016-026B; Catsat-2 – 1998-067PV; ÑuSat-1 – 2016-033B; Delphini – 1998-067PW; ÑuSat-2 – 2016-033C; Catsat-1 – 1998-067PZ; Dove 2p-6 – 2016-040H; IOD-1 GEMS – 1998-067QK; Dove 2p-10 – 2016-040P; SWIATOWID – 1998-067QM; Dove 2p-12 – 2016-040R; NARSSCUBE-1 – 1998-067QX; Beesat-4 – 2016-040W; TechEdSat-10 – 1998-067RQ; Dove 3p-51 – 2017-008E; Radsat-U – 1998-067RF; Dove 3p-79 – 2017-008AN; ABS-7 – 1999-046A; Dove 3p-86 – 2017-008AP; Nimiq-2 – 2002-062A; Dove 3p-35 – 2017-008AT; DirecTV-7S – 2004-016A; Dove 3p-68 – 2017-008BH; Apstar-6 – 2005-012A; Dove 3p-14 – 2017-008BS; Sinah-1 – 2005-043D; Dove 3p-20 – 2017-008C; MTSAT-2 – 2006-004A; Dove 3p-77 – 2017-008CF; INSAT-4CR – 2007-037A; Dove 3p-47 – 2017-008CN; Yubileiny – 2008-025A; Dove 3p-81 – 2017-008CZ; AIST-2 – 2013-015D; Dove 3p-87 – 2017-008DA; Yaogan-18