Risk/Reward Analysis of Test-Driven Development by Susan Hammond

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


CITS5501 Software Testing and Quality Assurance Test Automation

CITS5501 Software Testing and Quality Assurance: Test Automation. Unit coordinator: Arran Stewart. Re-cap: We looked at testing concepts – failures, faults/defects, and erroneous states. We looked at specifications and APIs – these help us answer the question, “How do we know what to test against?” i.e., what is the correct behaviour for some piece of software? We have discussed what unit tests are, and what they look like. Questions: What’s the structure of a test? How do different types of test relate? How do we come up with tests? How do we know when we have enough tests? What are typical patterns and techniques when writing tests? How do we deal with difficult-to-test software (e.g. software components with many dependencies)? What sorts of things can be tested? What’s the structure of a test? Any test can be seen, roughly, as asking: “When set up appropriately – if the system (or some part of it) is asked to do X, does its actual behaviour match the expected behaviour?” Test structure: That is, we can see any test as consisting of two things. Test values: anything required to set up the system (or some part of it), “ask it to do” something, and observe the result. Expected values: what the system is expected to do. “Values” is being used in a very broad sense. Suppose we are designing system tests for a phone – then the “test values” might include, in some cases, physical actions to be done by a tester to put the phone in a particular state (e.g. -
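The split into test values and expected values described in this excerpt can be sketched with Python's standard `unittest` module. This is only an illustrative sketch: `word_count`, `WordCountTest`, and `run_suite` are hypothetical names chosen for the example and do not come from the slides.

```python
import unittest


def word_count(text: str) -> int:
    """Hypothetical unit under test: count whitespace-separated words."""
    return len(text.split())


class WordCountTest(unittest.TestCase):
    def test_counts_words_separated_by_mixed_whitespace(self):
        # Test values: whatever is needed to set the unit up and "ask it to do X".
        test_value = "to be\tor not\nto be"
        # Expected values: what the unit is expected to produce.
        expected = 6
        # The test passes only if actual behaviour matches expected behaviour.
        self.assertEqual(word_count(test_value), expected)


def run_suite() -> unittest.TestResult:
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(WordCountTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite().wasSuccessful()` reports whether the actual and expected values agreed; in a real project the same test class would normally be discovered by a test runner instead.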

Carmine Vassallo

Automated Testing. Carmine Vassallo [email protected] @ccvassallo. Recommended book: Gerard Meszaros, xUnit Test Patterns: Refactoring Test Code (Martin Fowler Signature Book), Addison-Wesley, 2007. Software Bugs: “A software bug is an error, flaw, failure or fault in a computer program or system that causes it to produce an incorrect or unexpected result, or to behave in unintended ways.” https://en.wikipedia.org/wiki/Software_bug How to detect bugs? Testing • Testing is the activity of finding out whether a piece of code (a method, class, or program) produces the intended behaviour. • We test software because we are sure it has bugs in it! • The feedback provided by testing is very valuable, but if it comes late in the development cycle, its value is greatly diminished. • We need to continuously check for defects in our code. The LEAN process http://thecommunitymanager.com/2012/08/01/the-lean-community/ Automated SW Testing • Automated Software Testing consists of using special software (separate from the software being tested) to control the execution of tests and the comparison of actual outcomes with predicted outcomes. • Test automation can automate some repetitive but necessary tasks in a formalized testing process already in place, or perform additional testing that would be difficult to do manually. • Test automation is critical for continuous delivery and continuous testing. Goals of Automated Testing (1/2) • Tests should help us improve quality. • If we are doing test-driven development or test-first development, the tests give us a way to capture what the SUT should be doing before we start building it. -

ABAP to the Future 864 Pages, 2019, $79.95 ISBN 978-1-4932-1761-8

This chapter covers eliminating dependencies in your existing programs, implementing test doubles, and using ABAP Unit to write and implement test classes. It also includes advice on how to improve test-driven development via automated frameworks. Paul Hardy, ABAP to the Future, 864 Pages, 2019, $79.95, ISBN 978-1-4932-1761-8, www.sap-press.com/4751. Chapter 5: ABAP Unit and Test-Driven Development. “Code without tests is bad code. It doesn’t matter how well-written it is; it doesn’t matter how pretty or object-oriented or well-encapsulated it is. With tests, we can change the behavior of our code quickly and verifiably. Without them, we really don’t know if our code is getting better or worse.” (Michael Feathers, Working Effectively with Legacy Code) Programs have traditionally been fragile; that is, any sort of change was likely to break something, potentially causing massive damage. You want to reverse this situation so that you can make as many changes as the business requires as fast as you can with zero risk. The way to do this is via test-driven development (TDD), supported by the ABAP Unit tool. This chapter explains what TDD is and how to enable it via the ABAP Unit framework. In the traditional development process, you write a new program or change an existing one, and after you’re finished you perform some basic tests, and then you pass the program on to QA to do some proper testing. -

Assessing Mock Classes: an Empirical Study

Assessing Mock Classes: An Empirical Study. Gustavo Pereira, Andre Hora. ASERG Group, Department of Computer Science (DCC), Federal University of Minas Gerais (UFMG), Belo Horizonte, Brazil. {ghapereira, [email protected] Abstract: During testing activities, developers frequently rely on dependencies (e.g., web services, etc) that make the test harder to be implemented. In this scenario, they can use mock objects to emulate the dependencies’ behavior, which contributes to make the test fast and isolated. In practice, the emulated dependency can be dynamically created with the support of mocking frameworks or manually hand-coded in mock classes. While the former is well-explored by the research literature, the latter has not yet been studied. Assessing mock classes would provide the basis to better understand how those mocks are created and consumed by developers and to detect novel practices and challenges. In this paper, we provide the first empirical study to assess mock classes. We analyze 12 popular software projects, detect 604 mock classes, and assess their content, design, and usage. [From the introduction, which the extraction had interleaved with the abstract:] SinonJS and Jest, which is supported by Facebook; Java developers can rely on Mockito while Python provides unittest.mock in its core library. The other solution to create mock classes is by hand, that is, manually creating emulated dependencies so they can be used in test cases. In this case, developers do not need to rely on any particular mocking framework since they can directly consume the mock class. For example, to facilitate web testing, the Spring web framework includes a number of classes dedicated to mocking. Similarly, the Apache Camel integration framework provides mocking classes to support distributed and asynchronous testing. That is, in those cases, instead of using -
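The two ways of emulating a dependency that this excerpt contrasts, a hand-coded mock class and a mock created dynamically by a framework, can be sketched side by side in Python. `unittest.mock` is the standard-library module the excerpt mentions; `WeatherService`, `clothing_advice`, and `MockWeatherService` are hypothetical names invented for this example and are not taken from the paper.

```python
from unittest.mock import Mock


class WeatherService:
    """Hypothetical dependency that would normally call a remote web service."""

    def temperature(self, city: str) -> float:
        raise RuntimeError("network access is not available in tests")


def clothing_advice(service, city: str) -> str:
    """Hypothetical code under test that relies on the dependency."""
    return "coat" if service.temperature(city) < 10.0 else "t-shirt"


class MockWeatherService(WeatherService):
    """Hand-coded mock class: emulates the dependency and records its calls."""

    def __init__(self, canned_temperature: float):
        self.canned_temperature = canned_temperature
        self.requested_cities = []

    def temperature(self, city: str) -> float:
        self.requested_cities.append(city)
        return self.canned_temperature


def check_with_mock_class() -> str:
    # No mocking framework is involved; the test consumes the class directly.
    mock = MockWeatherService(canned_temperature=3.0)
    advice = clothing_advice(mock, "Oslo")
    assert mock.requested_cities == ["Oslo"]
    return advice


def check_with_framework_mock() -> str:
    # Mock(spec=...) creates the emulated dependency dynamically at run time.
    mock = Mock(spec=WeatherService)
    mock.temperature.return_value = 25.0
    advice = clothing_advice(mock, "Belo Horizonte")
    mock.temperature.assert_called_once_with("Belo Horizonte")
    return advice
```

The hand-coded class can be reused across many tests without any framework, while the framework mock needs no extra class at all; which trade-off developers actually prefer is the kind of question the paper studies, and this sketch does not answer it.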

Mockator Pro

UNIVERSITY OF APPLIED SCIENCES RAPPERSWIL. Mockator Pro: Seams and Mock Objects for Eclipse CDT. MASTER THESIS: Fall and Spring Term 2011/12. Author: Michael Rüegg. Supervisor: Prof. Peter Sommerlad. “To arrive at the simple is difficult.” (Rashid Elisha) Abstract: Breaking dependencies is an important task in refactoring legacy code and putting this code under tests. Feathers’ seams help us here because they enable us to inject dependencies from outside. Although seams are a valuable technique, it is hard and cumbersome to apply them without automated refactorings and tool chain configuration support. We provide sophisticated support for seams with Mockator Pro, a plug-in for the Eclipse C/C++ development tooling project. Mockator Pro creates the boilerplate code and the necessary infrastructure for the four seam types object, compile, preprocessor and link seam. Although there are already various existing mock object libraries for C++, we believe that creating mock objects is still too complicated and time-consuming for developers. Mockator provides a mock object library and an Eclipse plug-in to create mock objects in a simple yet powerful way. Mockator leverages the new language facilities C++11 offers while still being compatible with C++98/03. Management Summary: In this report we discuss the development of an Eclipse plug-in to refactor towards seams in C++ and the engineered mock object solution. This master’s thesis is a continuation of a preceding term project at the University of Applied Sciences Rapperswil by the same author. Motivation: High coupling, hard-wired and cyclic dependencies lead to systems that are hard to change, test and deploy in isolation. -
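The idea of an object seam, a place where a dependency can be injected from outside, can be illustrated with a small sketch. The thesis targets C++ and Eclipse CDT; the Python below is only an analogue of the object seam, the compile, preprocessor, and link seams are not shown, and all names (`SystemClock`, `FixedClock`, `InvoiceChecker`) are hypothetical.

```python
import datetime


class SystemClock:
    """Production dependency: reads the real current date."""

    def today(self) -> datetime.date:
        return datetime.date.today()


class FixedClock:
    """Test replacement that always reports the same date."""

    def __init__(self, fixed: datetime.date):
        self._fixed = fixed

    def today(self) -> datetime.date:
        return self._fixed


class InvoiceChecker:
    """Hypothetical class under test."""

    def __init__(self, clock=None):
        # The constructor parameter is the seam: behaviour can be changed
        # from outside without editing is_overdue itself.
        self._clock = clock if clock is not None else SystemClock()

    def is_overdue(self, due_date: datetime.date) -> bool:
        return self._clock.today() > due_date
```

With the seam in place, a test can construct `InvoiceChecker(FixedClock(datetime.date(2012, 6, 1)))` and get the same answer on every run, whereas a hard-wired call to `datetime.date.today()` inside `is_overdue` would make the result depend on when the test is executed.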

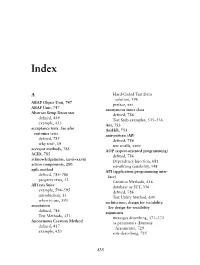

ABAP Object Unit, 747 ABAP Unit, 747 Abstract Setup Decorator Defined, 449 Example, 453 Acceptance Tests. See Also Customer Test

Index A Hard-Coded Test Data solution, 196 ABAP Object Unit, 747 preface, xxi ABAP Unit, 747 anonymous inner class Abstract Setup Decorator defi ned, 786 defi ned, 449 Test Stub examples, 535–536 example, 453 Ant, 753 acceptance tests. See also AntHill, 753 customer tests anti-pattern (AP) defi ned, 785 defi ned, 786 why test?, 19 test smells, xxxv accessor methods, 785 AOP (aspect-oriented programming) ACID, 785 defi ned, 786 acknowledgements, xxvii–xxviii Dependency Injection, 681 action components, 280 retrofi tting testability, 148 agile method API (application programming inter- defi ned, 785–786 face) property tests, 52 Creation Methods, 416 AllTests Suite database as SUT, 336 example, 594–595 defi ned, 786 introduction, 13 Test Utility Method, 600 when to use, 593 architecture, design for testability. annotation See design-for-testability defi ned, 786 arguments Test Methods, 351 messages describing, 371–372 Anonymous Creation Method as parameters (Dummy defi ned, 417 Arguments), 729 example, 420 role-describing, 725 835 836 Index Arguments, Dummy, 729 improperly coded in Neverfail Ariane 5 rocket, 218 Tests, 274 aspect-oriented programming (AOP) introduction, 77 defi ned, 786 Missing Assertion Messages, Dependency Injection, 681 226–227 retrofi tting testability, 148 reducing Test Code Duplication, Assertion Message 114–119 of Assertion Method, 364 refactoring, xlvi–xlix pattern description, 370–372 Self-Checking Tests, 107–108 Assertion Method unit testing, 6 Assertion Messages, 364 Verify One Condition per Test, calling built-in, 363–364 -

Development Testing

Software and Systems Verification (VIMIMA01): Development testing. Zoltan Micskei, Budapest University of Technology and Economics, Fault Tolerant Systems Research Group, Department of Measurement and Information Systems. Main topics of the course • Overview (1): V&V techniques, Critical systems • Static techniques (2): Verifying specifications, Verifying source code • Dynamic techniques: Testing (7): Developer testing, Test design techniques, Testing process and levels, Test generation, Automation • System-level verification (3): Verifying architecture, Dependability analysis, Runtime verification. Example: what/how/where to test? Tests through GUI (~ system test); tests through API (~ integration test); module/unit tests. UNIT TESTING. Learning outcomes • Explain characteristics of good unit tests (K2) • Write unit tests using a unit testing framework (K3). Module / unit testing • Module / unit: logically separable part; well-defined interface; can be a method / class / package / component… • Call hierarchy (ideal case): [Figure: call hierarchy of modules A, A1–A3, A31–A33, A311–A313]. Why do we need unit testing? • Goal: detect and fix defects during development (lowest level): tested modules can be integrated later; the developer of the unit can fix the defect fastest • Units can be tested separately: manage complexity; locate defects more easily, so the fix is cheaper; gives confidence for performing changes • Characteristics of unit tests: checks a well-defined functionality; defines a “contract” for the unit; can be used as an example; (not necessarily automatic). Unit test frameworks -

Testen Mit Mockito.Pdf

Testing with Mockito http://www.vogella.com/tutorials/Mockito/article.html Why test? Would this work without testing it beforehand? Definition of tests • A software test checks and evaluates software against the requirements defined for its use and measures its quality. The findings are used to detect and fix software defects. Tests during software development serve to put the software into operation with as few defects as possible. Types of tests • Unit test: test of the individual methods of a class • Integration test: test of several classes / modules • System test: test of the whole system (usually a GUI test) • Acceptance test: test by the customer of whether the product is usable • Regression test: evidence that, after a change to the system under test, previously executed tests still pass • Field test: test during operation • Load test • Stress test • Smoke tests: (random test) not systematic; only the function is tested Definitions • SUT … system under test • CUT … class under test TDD - Test Driven Development: Test-Driven Development with Mockito, Packt 2013 JUnit.org Hamcrest • Hamcrest is a library of matchers, which can be combined to create flexible expressions of intent in tests. They've also been used for other purposes • http://hamcrest.org/ • https://github.com/hamcrest/JavaHamcrest • http://www.leveluplunch.com/java/examples/hamcrest-collection-matchers-junit-testing/ • http://grepcode.com/file/repo1.maven.org/maven2/org.hamcrest/hamcrest-library/1.3/org/hamcrest/Matchers.java Test Doubles: Why test doubles? • A unit test should test a class in isolation. Side effects from other classes or the system should be eliminated if possible. Achieving this goal is typically complicated by the fact that Java classes usually depend on other classes. -

Unit Testing: Principles, Practices, and Patterns

Unit Testing: Principles, Practices, and Patterns. Vladimir Khorikov. Manning, Shelter Island. [Chapter map figure: relates unit tests, integration tests, mocks, in-process and out-of-process dependencies, managed and unmanaged dependencies, protection against regressions, resistance to refactoring, fast feedback, and maintainability to chapters 2, 4, 5, 7, and 8.] For online information and ordering of this and other Manning books, please visit www.manning.com. The publisher offers discounts on this book when ordered in quantity. For more information, please contact Special Sales Department, Manning Publications Co., 20 Baldwin Road, PO Box 761, Shelter Island, NY 11964. Email: [email protected] ©2020 by Manning Publications Co. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by means electronic, mechanical, photocopying, or otherwise, without prior written permission of the publisher. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in the book, and Manning Publications was aware of a trademark claim, the designations have been printed in initial caps or all caps. -

BONUS CHAPTER Contents

BONUS CHAPTER. Contents: preface ■ acknowledgments ■ about this book ■ about the cover illustration. PART 1: A TDD PRIMER. 1 The big picture. 1.1 The challenge: solving the right problem right: Creating poorly written code ■ Failing to meet actual needs. 1.2 Solution: being test-driven: High quality with TDD ■ Meeting needs with acceptance TDD ■ What’s in it for me? 1.3 Build it right: TDD: Test-code-refactor: the heartbeat ■ Developing in small increments ■ Keeping code healthy with refactoring ■ Making sure the software still works. 1.4 Build the right thing: acceptance TDD: What’s in a name? ■ Close collaboration ■ Tests as a shared language. 1.5 Tools for test-driven development: Unit-testing with xUnit ■ Test frameworks for acceptance TDD ■ Continuous integration and builds ■ Code coverage. 1.6 Summary. 2 Beginning TDD. 2.1 From requirements to tests: Decomposing requirements ■ What are good tests made of? ■ Working from a test list ■ Programming by intention. 2.2 Choosing the first test: Creating a list of tests ■ Writing the first failing test ■ Making the first test pass ■ Writing another test. 2.3 Breadth-first, depth-first: Faking details a little longer ■ Squeezing out the fake stuff. 2.4 Let’s not forget to refactor: Potential refactorings in test code ■ Removing a redundant test. 2.5 Adding a bit of error handling: Expecting an exception ■ Refactoring toward -

ABAP to the Future 801 Pages, 2016, $79.95 ISBN 978-1-4932-1410-5

Reading Sample: This sample chapter describes how to use ABAP Unit for test-driven development (TDD) when creating and changing custom programs to make your changes with minimal risk to the business. This chapter explains what TDD is and how to enable it via the ABAP Unit framework. Paul Hardy, ABAP to the Future, 801 Pages, 2016, $79.95, ISBN 978-1-4932-1410-5, www.sap-press.com/4161. Chapter 3: ABAP Unit and Test-Driven Development. “Code without tests is bad code. It doesn’t matter how well-written it is; it doesn’t matter how pretty or object-oriented or well-encapsulated it is. With tests, we can change the behavior of our code quickly and verifiably. Without them, we really don't know if our code is getting better or worse.” (Michael Feathers, Working Effectively with Legacy Code) Nothing is more important, during the process of creating and changing custom programs, than figuring out how to make such changes with minimal risk to the business. The way to do this is via test-driven development (TDD), and the tool to use is ABAP Unit. In the traditional development process, you write a new program or change an existing one, and after you’re finished you perform some basic tests, and then you pass the program on to QA to do some proper testing. Often, there isn’t enough time, and this aspect of the software development lifecycle is brushed over, with disastrous results. -

Unit Testing

Software Construction: Mock Testing. Jürg Luthiger, University of Applied Sciences Northwestern Switzerland, Institute for Mobile and Distributed Systems. Learning Target: You can describe the concepts behind mock testing and can use mock objects as an efficient way to do unit testing. Agenda: Introduction into Mock Testing; Introduction into EasyMock2. Unit Testing (Reminder!): Unit testing is done on each module in isolation to verify its behavior. A unit test will establish some kind of artificial environment, invoke routines in the module under test, and check the results against known values. Limits of Unit Testing: Some things can't be tested in isolation: configuration; JDBC code (testing for connection acquisition and release is not very interesting); O/R mappings; ... and, of course, how classes work together. Test Double in Unit Testing: Dummy objects are passed around but never actually used. Usually they are just used to fill parameter lists. Fake objects actually have working implementations, but usually take some shortcut which makes them not suitable for production (an in-memory database is a good example). Stubs provide canned answers to calls made during the test, usually not responding at all to anything outside what's programmed in for the test. Stubs may also record information about calls, such as an email gateway stub that remembers the messages it 'sent', or maybe only how many messages it 'sent'. Mocks are objects pre-programmed with expectations which form a specification of the calls they are expected to receive.
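The four kinds of test double listed above can be sketched in a few lines of Python. The slides introduce EasyMock for Java; this sketch uses Python's standard `unittest.mock` instead, and every class and function name in it (`InMemoryUserStore`, `EmailGatewayStub`, `register`) is hypothetical.

```python
from unittest.mock import Mock


class InMemoryUserStore:
    """Fake: a working implementation with a shortcut (a dict, not a database)."""

    def __init__(self):
        self._users = {}

    def save(self, name: str, email: str) -> None:
        self._users[name] = email

    def email_of(self, name: str) -> str:
        return self._users[name]


class EmailGatewayStub:
    """Stub: returns a canned answer and remembers the messages it 'sent'."""

    def __init__(self):
        self.sent = []

    def send(self, address: str, body: str) -> bool:
        self.sent.append((address, body))
        return True  # canned answer, nothing is really sent


def register(store, gateway, logger, name: str, email: str) -> bool:
    """Hypothetical code under test; `logger` is accepted but unused here."""
    store.save(name, email)
    return gateway.send(store.email_of(name), f"Welcome, {name}!")


def demo_dummy_fake_stub():
    dummy_logger = object()  # Dummy: only fills the parameter list.
    gateway = EmailGatewayStub()
    ok = register(InMemoryUserStore(), gateway, dummy_logger,
                  "ada", "ada@example.org")
    return ok, gateway.sent


def demo_mock() -> int:
    # Mock: the test states which call the object is expected to receive.
    # unittest.mock verifies this after the call rather than up front.
    gateway = Mock()
    gateway.send.return_value = True
    register(InMemoryUserStore(), gateway, object(), "ada", "ada@example.org")
    gateway.send.assert_called_once_with("ada@example.org", "Welcome, ada!")
    return gateway.send.call_count
```

The stub-based test checks the resulting state (what was recorded as sent), while the mock-based test checks the interaction itself; the same `register` function is exercised in both cases.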