Unit Testing: Principles, Practices, and Patterns

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER

Practical Unit Testing for Embedded Systems Part 1 PARASOFT WHITE PAPER Table of Contents Introduction 2 Basics of Unit Testing 2 Benefits 2 Critics 3 Practical Unit Testing in Embedded Development 3 The system under test 3 Importing uVision projects 4 Configure the C++test project for Unit Testing 6 Configure uVision project for Unit Testing 8 Configure results transmission 9 Deal with target limitations 10 Prepare test suite and first exemplary test case 12 Deploy it and collect results 12 Page 1 www.parasoft.com Introduction The idea of unit testing has been around for many years. "Test early, test often" is a mantra that concerns unit testing as well. However, in practice, not many software projects have the luxury of a decent and up-to-date unit test suite. This may change, especially for embedded systems, as the demand for delivering quality software continues to grow. International standards, like IEC-61508-3, ISO/DIS-26262, or DO-178B, demand module testing for a given functional safety level. Unit testing at the module level helps to achieve this requirement. Yet, even if functional safety is not a concern, the cost of a recall—both in terms of direct expenses and in lost credibility—justifies spending a little more time and effort to ensure that our released software does not cause any unpleasant surprises. In this article, we will show how to prepare, maintain, and benefit from setting up unit tests for a simplified simulated ASR module. We will use a Keil evaluation board MCBSTM32E with Cortex-M3 MCU, MDK-ARM with the new ULINK Pro debug and trace adapter, and Parasoft C++test Unit Testing Framework. -

Towards a Taxonomy of Sunit Tests *

Towards a Taxonomy of SUnit Tests ? Markus G¨alli a Michele Lanza b Oscar Nierstrasz a aSoftware Composition Group Institut f¨urInformatik und angewandte Mathematik Universit¨atBern, Switzerland bFaculty of Informatics University of Lugano, Switzerland Abstract Although unit testing has gained popularity in recent years, the style and granularity of individual unit tests may vary wildly. This can make it difficult for a developer to understand which methods are tested by which tests, to what degree they are tested, what to take into account while refactoring code and tests, and to assess the value of an existing test. We have manually categorized the test base of an existing object-oriented system in order to derive a first taxonomy of unit tests. We have then developed some simple tools to semi-automatically categorize tests according to this taxonomy, and applied these tools to two case studies. As it turns out, the vast majority of unit tests focus on a single method, which should make it easier to associate tests more tightly to the methods under test. In this paper we motivate and present our taxonomy, we describe the results of our case studies, and we present our approach to semi-automatic unit test categorization. 1 Key words: unit testing, taxonomy, reverse engineering 1 13th International European Smalltalk Conference (ESUG 2005) http://www.esug.org/conferences/thirteenthinternationalconference2005 ? We thank St´ephane Ducasse for his helpful comments and gratefully acknowledge the financial support of the Swiss National Science Foundation for the project “Tools and Techniques for Decomposing and Composing Software” (SNF Project No. -

Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems

University of Denver Digital Commons @ DU Electronic Theses and Dissertations Graduate Studies 1-1-2017 Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems Tosapon Pankumhang University of Denver Follow this and additional works at: https://digitalcommons.du.edu/etd Part of the Computer Sciences Commons Recommended Citation Pankumhang, Tosapon, "Parallel, Cross-Platform Unit Testing for Real-Time Embedded Systems" (2017). Electronic Theses and Dissertations. 1353. https://digitalcommons.du.edu/etd/1353 This Dissertation is brought to you for free and open access by the Graduate Studies at Digital Commons @ DU. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of Digital Commons @ DU. For more information, please contact [email protected],[email protected]. PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS __________ A Dissertation Presented to the Faculty of the Daniel Felix Ritchie School of Engineering and Computer Science University of Denver __________ In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy __________ by Tosapon Pankumhang August 2017 Advisor: Matthew J. Rutherford ©Copyright by Tosapon Pankumhang 2017 All Rights Reserved Author: Tosapon Pankumhang Title: PARALLEL, CROSS-PLATFORM UNIT TESTING FOR REAL-TIME EMBEDDED SYSTEMS Advisor: Matthew J. Rutherford Degree Date: August 2017 ABSTRACT Embedded systems are used in a wide variety of applications (e.g., automotive, agricultural, home security, industrial, medical, military, and aerospace) due to their small size, low-energy consumption, and the ability to control real-time peripheral devices precisely. These systems, however, are different from each other in many aspects: processors, memory size, develop applications/OS, hardware interfaces, and software loading methods. -

2.5 the Unit Test Framework (UTF)

Masaryk University Faculty of Informatics C++ Unit Testing Frameworks Diploma Thesis Brno, January 2010 Miroslav Sedlák i Statement I declare that this thesis is my original work of authorship which I developed individually. I quote properly full reference to all sources I used while developing. ...…………..……… Miroslav Sedlák Acknowledgements I am grateful to RNDr. Jan Bouda, Ph.D. for his inspiration and productive discussion. I would like to thank my family and girlfriend for the encouragement they provided. Last, but not least, I would like to thank my friends Ing.Michal Bella who was very supportive in verifying the architecture of the extension of Unit Test Framework and Mgr. Irena Zigmanová who helped me with proofreading of this thesis. ii Abstract The aim of this work is to gather, clearly present and test the use of existing UTFs (CppUnit, CppUnitLite, CppUTest, CxxTest and other) and supporting tools for software project development in the programming language C++. We will compare the advantages and disadvantages of UTFs and theirs tools. Another challenge is to design effective solutions for testing by using one of the UTFs in dependence on the result of analysis. Keywords C++, Unit Test (UT), Test Framework (TF), Unit Test Framework (UTF), Test Driven Development (TDD), Refactoring, xUnit, Standard Template Library (STL), CppUnit, CppUnitLite, CppUTest Run-Time Type Information (RTTI), Graphical User Interface (GNU). iii Contents 1 Introduction .................................................................................................................................... -

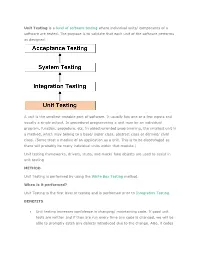

Unit Testing Is a Level of Software Testing Where Individual Units/ Components of a Software Are Tested. the Purpose Is to Valid

Unit Testing is a level of software testing where individual units/ components of a software are tested. The purpose is to validate that each unit of the software performs as designed. A unit is the smallest testable part of software. It usually has one or a few inputs and usually a single output. In procedural programming a unit may be an individual program, function, procedure, etc. In object-oriented programming, the smallest unit is a method, which may belong to a base/ super class, abstract class or derived/ child class. (Some treat a module of an application as a unit. This is to be discouraged as there will probably be many individual units within that module.) Unit testing frameworks, drivers, stubs, and mock/ fake objects are used to assist in unit testing. METHOD Unit Testing is performed by using the White Box Testing method. When is it performed? Unit Testing is the first level of testing and is performed prior to Integration Testing. BENEFITS Unit testing increases confidence in changing/ maintaining code. If good unit tests are written and if they are run every time any code is changed, we will be able to promptly catch any defects introduced due to the change. Also, if codes are already made less interdependent to make unit testing possible, the unintended impact of changes to any code is less. Codes are more reusable. In order to make unit testing possible, codes need to be modular. This means that codes are easier to reuse. Development is faster. How? If you do not have unit testing in place, you write your code and perform that fuzzy ‘developer test’ (You set some breakpoints, fire up the GUI, provide a few inputs that hopefully hit your code and hope that you are all set.) If you have unit testing in place, you write the test, write the code and run the test. -

Including Jquery Is Not an Answer! - Design, Techniques and Tools for Larger JS Apps

Including jQuery is not an answer! - Design, techniques and tools for larger JS apps Trifork A/S, Headquarters Margrethepladsen 4, DK-8000 Århus C, Denmark [email protected] Karl Krukow http://www.trifork.com Trifork Geek Night March 15st, 2011, Trifork, Aarhus What is the question, then? Does your JavaScript look like this? 2 3 What about your server side code? 4 5 Non-functional requirements for the Server-side . Maintainability and extensibility . Technical quality – e.g. modularity, reuse, separation of concerns – automated testing – continuous integration/deployment – Tool support (static analysis, compilers, IDEs) . Productivity . Performant . Appropriate architecture and design . … 6 Why so different? . “Front-end” programming isn't 'real' programming? . JavaScript isn't a 'real' language? – Browsers are impossible... That's just the way it is... The problem is only going to get worse! ● JS apps will get larger and more complex. ● More logic and computation on the client. ● HTML5 and mobile web will require more programming. ● We have to test and maintain these apps. ● We will have harder requirements for performance (e.g. mobile). 7 8 NO Including jQuery is NOT an answer to these problems. (Neither is any other js library) You need to do more. 9 Improving quality on client side code . The goal of this talk is to motivate and help you improve the technical quality of your JavaScript projects . Three main points. To improve non-functional quality: – you need to understand the language and host APIs. – you need design, structure and file-organization as much (or even more) for JavaScript as you do in other languages, e.g. -

Testing and Debugging Tools for Reproducible Research

Testing and debugging Tools for Reproducible Research Karl Broman Biostatistics & Medical Informatics, UW–Madison kbroman.org github.com/kbroman @kwbroman Course web: kbroman.org/Tools4RR We spend a lot of time debugging. We’d spend a lot less time if we tested our code properly. We want to get the right answers. We can’t be sure that we’ve done so without testing our code. Set up a formal testing system, so that you can be confident in your code, and so that problems are identified and corrected early. Even with a careful testing system, you’ll still spend time debugging. Debugging can be frustrating, but the right tools and skills can speed the process. "I tried it, and it worked." 2 This is about the limit of most programmers’ testing efforts. But: Does it still work? Can you reproduce what you did? With what variety of inputs did you try? "It's not that we don't test our code, it's that we don't store our tests so they can be re-run automatically." – Hadley Wickham R Journal 3(1):5–10, 2011 3 This is from Hadley’s paper about his testthat package. Types of tests ▶ Check inputs – Stop if the inputs aren't as expected. ▶ Unit tests – For each small function: does it give the right results in specific cases? ▶ Integration tests – Check that larger multi-function tasks are working. ▶ Regression tests – Compare output to saved results, to check that things that worked continue working. 4 Your first line of defense should be to include checks of the inputs to a function: If they don’t meet your specifications, you should issue an error or warning. -

CITS5501 Software Testing and Quality Assurance Test Automation

Test automation Test cases CITS5501 Software Testing and Quality Assurance Test Automation Unit coordinator: Arran Stewart 1 / 111 Test automation Test cases Re-cap We looked at testing concepts – failures, faults/defects, and erroneous states We looked at specifications and APIs – these help us answer the question, “How do we know what to test against?” i.e., What is the correct behaviour for some piece of software? We have discussed what unit tests are, and what they look like 2 / 111 Test automation Test cases Questions What’s the structure of a test? How do different types of test relate? How do we come up with tests? How do we know when we have enough tests? What are typical patterns and techniques when writing tests? How do we deal with difficult-to-test software? (e.g. software components with many dependencies) What sorts of things can be tested? 3 / 111 Test automation Test cases Questions What’s the structure of a test? Any test can be seen, roughly, as asking: “When set up appropriately – if the system (or some part of it) is asked to do X, does its actual behaviour match the expected behaviour?” 4 / 111 Test automation Test cases Test structure That is, we can see any test as consisting of two things: Test values: anything required to set up the system (or some part of it), and “ask it do” something, and observe the result. Expected values: what the system is expected to do. “Values” is being used in a very broad sense. Suppose we are designing system tests for a phone – then the “test values” might include, in some cases, physical actions to be done by a tester to put the phone in a particular state (e.g. -

Enhancement in Nunit Testing Framework by Binding Logging with Unit Testing

International Journal of Science and Research (IJSR), India Online ISSN: 2319-7064 Enhancement in Nunit Testing Framework by binding Logging with Unit testing Viraj Daxini1, D. A. Parikh2 1Gujarat Technological University, L. D. College of Engineering, Ahmedabad, Gujarat, India 2Associate Professor and Head, Department of Computer Engineering, L. D. College of Engineering, Ahmadabad, Gujarat, India Abstract: This paper describes the new testing library named log4nunit testing library which is enhanced version of nunit testing library as it is binded to logging functionality. NUnit is similar to xunit testing family in all that cases are built directly to the code of the project. Log4NUnit testing library provides the capability to log the results of tests along with testing classes. It also provides the capability to log the messages from within the testing methods. It supplies extension of NUnit’s Test class method in terms of logging the results. It has functionality to log the entity of test cases, based on the Logging framework, to document the test settings which is done at the time of testing, test case execution and it’s results. Attractive feature of new library is that it will redirect the log message to console at runtime if Log4net library is not present in the class path. It will make easy for those users who do not want to download Logging framework and put it in their class path. Log4NUnit also include a Log4net logger methods and implements utility of logging methods such as info, debug, warn, fatal etc. This work was prompted due to lack of published results concerning the implementation of Log4NUnit. -

From Zero to 1000 Tests in 6 Months

From Zero to 1000 tests in 6 months Or how not to lose your mind with 2 week iterations Name is Max Vasilyev Senior Developer and QA Manager at Solutions Aberdeen http://tech.trailmax.info @trailmax Business Does Not Care • Business does not care about tests. • Business does not care about internal software quality. • Business does not care about architecture. • Some businesses don’t care so much, they even don’t care about money. Don’t Tell The Business Just do it! Just write your tests, ask no one. Honestly, tomorrow in the office just create new project, add NUnit package and write a test. That’ll take you 10 minutes. Simple? Writing a test is simple. Writing a good test is hard. Main questions are: – What do you test? – Why do you test? – How do you test? Our Journey: Stone Age Started with Selenium browser tests: • Recording tool is OK to get started • Boss loved it! • Things fly about on the screen - very dramatic But: • High maintenance effort • Problematic to check business logic Our Journey: Iron Age After initial Selenium fever, moved on to integration tests: • Hook database into tests and part-test database. But: • Very difficult to set up (data + infrastructure) • Problematic to test logic Our Journey: Our Days • Now no Selenium tests • A handful of integration tests • Most of the tests are unit-ish* tests • 150K lines of code in the project • Around 1200 tests with 30% coverage** • Tests are run in build server * Discuss Unit vs Non-Unit tests later ** Roughly 1 line of test code covers 2 lines of production code Testing Triangle GUI Tests GUI Tests Integration Tests Integration Tests Unit Tests Unit Tests Our Journey: 2 Week Iterations? The team realised tests are not optional after first 2-week iteration: • There simply was no time to manually test everything at the end of iteration. -

Designpatternsphp Documentation Release 1.0

DesignPatternsPHP Documentation Release 1.0 Dominik Liebler and contributors Jul 18, 2021 Contents 1 Patterns 3 1.1 Creational................................................3 1.1.1 Abstract Factory........................................3 1.1.2 Builder.............................................8 1.1.3 Factory Method......................................... 13 1.1.4 Pool............................................... 18 1.1.5 Prototype............................................ 21 1.1.6 Simple Factory......................................... 24 1.1.7 Singleton............................................ 26 1.1.8 Static Factory.......................................... 28 1.2 Structural................................................. 30 1.2.1 Adapter / Wrapper....................................... 31 1.2.2 Bridge.............................................. 35 1.2.3 Composite............................................ 39 1.2.4 Data Mapper.......................................... 42 1.2.5 Decorator............................................ 46 1.2.6 Dependency Injection...................................... 50 1.2.7 Facade.............................................. 53 1.2.8 Fluent Interface......................................... 56 1.2.9 Flyweight............................................ 59 1.2.10 Proxy.............................................. 62 1.2.11 Registry............................................. 66 1.3 Behavioral................................................ 69 1.3.1 Chain Of Responsibilities................................... -

Introduction to Unit Testing

INTRODUCTION TO UNIT TESTING In C#, you can think of a unit as a method. You thus write a unit test by writing something that tests a method. For example, let’s say that we had the aforementioned Calculator class and that it contained an Add(int, int) method. Let’s say that you want to write some code to test that method. public class CalculatorTester { public void TestAdd() { var calculator = new Calculator(); if (calculator.Add(2, 2) == 4) Console.WriteLine("Success"); else Console.WriteLine("Failure"); } } Let’s take a look now at what some code written for an actual unit test framework (MS Test) looks like. [TestClass] public class CalculatorTests { [TestMethod] public void TestMethod1() { var calculator = new Calculator(); Assert.AreEqual(4, calculator.Add(2, 2)); } } Notice the attributes, TestClass and TestMethod. Those exist simply to tell the unit test framework to pay attention to them when executing the unit test suite. When you want to get results, you invoke the unit test runner, and it executes all methods decorated like this, compiling the results into a visually pleasing report that you can view. Let’s take a look at your top 3 unit test framework options for C#. MSTest/Visual Studio MSTest was actually the name of a command line tool for executing tests, MSTest ships with Visual Studio, so you have it right out of the box, in your IDE, without doing anything. With MSTest, getting that setup is as easy as File->New Project. Then, when you write a test, you can right click on it and execute, having your result displayed in the IDE One of the most frequent knocks on MSTest is that of performance.