Conference Guide



Recommended publications


Programme Booklet 2016

18th Slowind Festival 2016: PULSE OF LIGHT. Slovenian Philharmonic, Cankarjev dom, 22–29 October 2016. Artistic Director: Ivan Fedele

Andrea Manzoli • Pierre Boulez • Luciano Berio • Neville Hall • Lojze Lebič • Nina Šenk • Pasquale Corrado • Luka Juhart • Erik Satie • Claude Debussy • Ivan Fedele • Sergei Rachmaninoff • Bor Turel • Ottorino Respighi • Gregor Pirš • György Ligeti

Dear Listeners, While it was not a coincidence that we selected Vito Žuraj as the artistic director of the last Slowind Festival, we can say that this year’s choice also involved a certain amount of luck. About two years ago, we knocked on the door of Italian composer Ivan Fedele and proposed that Slowind perform at the Venice Biennale, of which he is the programme director. He generously invited us to the last edition of the festival and included a good measure of Slovenian contemporary music written for our ensemble on the programme. There followed discussions on a variety of challenges in the field of contemporary music, and a desire to invite him to Ljubljana soon matured within us. We gave him a copy of Mateja Kralj’s book entitled ... Wood, Wind, Metal ... which provides a thorough presentation of the philosophy of Slowind’s operation, while also giving creative people an indication of what still remains to be done with us after seventeen festivals. Ivan Fedele was glad to accept our invitation and, with his new festival programme, has invited us into the unknown – just where Slowind always likes to be. This year’s festival will primarily be permeated with Ivan Fedele’s own music for a wide range of instrumental combinations.

ISCM Svetovni glasbeni dnevi – Slovenija 2015: Tromostovje – tri smeri (ISCM World Music Days – Slovenia 2015: Three Bridges – Three Directions)

ISCM WORLD MUSIC DAYS – SLOVENIA 2015: THREE BRIDGES – THREE DIRECTIONS, 26 September – 2 October 2015

Honorary patron: Mr Mitja Bervar, President of the National Council of the Republic of Slovenia

The project is co-financed by: Ministry of Culture of the Republic of Slovenia, City of Ljubljana

Partners: Slovenian Philharmonic, RTV Slovenia Symphony Orchestra, Ljubljana Conservatory of Music and Ballet

In cooperation with: Academy of Music of the University of Ljubljana, Cankarjev dom, Glasbena matica Ljubljana, Kulturni dom Nova Gorica, Department of Musicology of the Faculty of Arts of the University of Ljubljana, MoTA - Museum of Transitory Art, SIGIC, SNG Opera in balet Ljubljana, Zavod Sploh, Zveza Glasbene mladine Slovenije

Sponsors: Krka d.d., SAZAS, Four Points by Sheraton Ljubljana Mons, CICERO Begunje d.o.o, Dnevnik d.d., Dream Cymbals and Gongs Inc., Europlakat d.o.o., GoOpti, LPP d.o.o, Galeria River, Gorenje d.d., Plečnikov hram, Promotion d.o.o., Silič d.o.o, Zois Apartments

Media sponsor: Delo d. d.

Artistic director: Pavel Mihelčič
Programme committee: Pavel Mihelčič (chair), Snježana Drevenšek, Nenad Firšt, Maja Kojc, Sonja Kralj Bervar, Gregor Pompe, Nina Šenk
Organising committee: Nenad Firšt (chair), Sabina Dečman, Uroš Mijošek, Aleksij Valentinčič, Klemen Weiss, Tinka Zadravec

Compiled and edited by: Tinka Zadravec
Translation: Mojca Šorli (Slovenian), Neville Hall (English)
Design: Klemen Kunaver
Published by: Društvo slovenskih skladateljev (Society of Slovene Composers)
For the publisher: Klemen Weiss, September 2015
Printed by: CICERO Begunje d.o.o
Print run: 500 copies

Contact: Društvo slovenskih skladateljev, Trg francoske revolucije 6/I, SI-1000 Ljubljana, Slovenija. T: +386 1 241 56 60, F: +386 1 241 56 66, M: +368 41 562 144, E: [email protected]

CIP - Cataloguing-in-publication record, Narodna in univerzitetna knjižnica (National and University Library), Ljubljana: 78:7.079(497.4Ljubljana)“2015“ ISCM Svetovni glasbeni dnevi (2015 ; Slovenija) Tromostovje - tri smeri = Three Bridges - three directions / ISCM Svetovni glasbeni dnevi, Slovenija 2015 = ISCM World Music Days, Slovenia 2015, 26.

Utrip svetlobe (Pulse of Light)

18th Slowind Festival 2016: UTRIP SVETLOBE (PULSE OF LIGHT). Slovenian Philharmonic, Cankarjev dom, 22–29 October 2016. Artistic Director: Ivan Fedele

Andrea Manzoli • Pierre Boulez • Luciano Berio • Neville Hall • Lojze Lebič • Nina Šenk • Pasquale Corrado • Luka Juhart • Erik Satie • Claude Debussy • Ivan Fedele • Sergei Rachmaninoff • Bor Turel • Ottorino Respighi • Gregor Pirš • György Ligeti

Dear Listeners, While it was no coincidence that we chose Vito Žuraj as the artistic director of last year’s Slowind Festival, we can say that this year’s choice also owed something to luck. About two years ago, we knocked on the door of Italian composer Ivan Fedele and proposed that Slowind perform at the Venice Biennale, of which he is the programme director. He generously invited us to last year’s edition of the festival and placed a good measure of Slovenian contemporary music written for our ensemble on the concert programme. Discussions followed on a wide range of challenges in the field of contemporary music, and the wish to invite him to Ljubljana soon matured within us. We gave him Mateja Kralj’s book entitled ... les, veter, kovina ... (Wood, Wind, Metal), which thoroughly reveals to the reader the philosophy behind Slowind’s work and also shows creative people what can still be done with us after seventeen festivals. Ivan Fedele gladly accepted our invitation and, with the new festival programme, has invited us into the unknown, which is where Slowind likes to go time and again. This year’s festival will be permeated above all with his music for a wide variety of ensembles. The concerts are therefore conceived quite classically in terms of performing forces. The first concert is devoted entirely to two pianos, percussion and electronics, the second to violin with electronics alone, the third to wind quintet, the fourth to string quartet with a singer, and the last, somewhat more in the spirit of Slowind, to a variety of mixed ensembles.

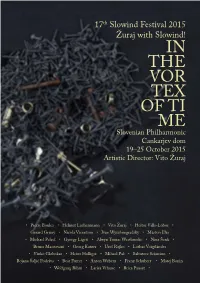

In the Vortex of Time

17th Slowind Festival 2015: Žuraj with Slowind! IN THE VORTEX OF TIME. Slovenian Philharmonic, Cankarjev dom, 19–25 October 2015. Artistic Director: Vito Žuraj

Pierre Boulez • Helmut Lachenmann • Vito Žuraj • Heitor Villa-Lobos • Gérard Grisey • Nicola Vicentino • Ivan Wyschnegradsky • Márton Illés • Michael Pelzel • György Ligeti • Alwyn Tomas Westbrooke • Nina Šenk • Bruno Mantovani • Georg Katzer • Uroš Rojko • Lothar Voigtländer • Vinko Globokar • Heinz Holliger • Mihael Paš • Salvatore Sciarrino • Bojana Šaljić Podešva • Beat Furrer • Anton Webern • Franz Schubert • Matej Bonin • Wolfgang Rihm • Larisa Vrhunc • Brice Pauset

Valued Listeners, It is no coincidence that we in Slowind decided almost three years ago to entrust the artistic direction of our festival to the penetrating, successful and (still) young composer Vito Žuraj. And it is precisely in the last three years that Vito has achieved even greater success, recently being capped off with the prestigious Prešeren Fund Prize in 2014, as well as a series of performances of his compositions at a diverse range of international music venues. Vito’s close collaboration with composers and performers of various stylistic orientations was an additional impetus for us to prepare the Slowind Festival under the leadership of a Slovenian artistic director for the first time! This autumn, we will put ourselves at the mercy of the vortex of time and again celebrate to some extent. On this occasion, we will mark the 90th birthday of Pierre Boulez as well as the 80th birthday of Helmut Lachenmann and Georg Katzer. In addition to works by these distinguished composers, the 17th Slowind Festival will also present a range of prominent composers of our time, including Bruno Mantovani, Brice Pauset, Michael Pelzel, Heinz Holliger, Beat Furrer and Márton Illés, as well as Slovenian composers Larisa Vrhunc, Nina Šenk, Bojana Šaljić Podešva, Uroš Rojko, and Matej Bonin, and, of course, the festival’s artistic director Vito Žuraj.

October 2016 Events

Events October 2016

Index: Festivals, Exhibitions, Music, Opera, theatre, dance, Miscellaneous, Sports

Ljubljana European Green Capital 2016

Get to know Ljubljana, the European Green Capital 2016, on a special city tour run as part of a series of green, sustainability-themed tours of Ljubljana. The tours will take visitors to Tivoli Park or the Ljubljana Botanic Garden, two of the city’s major green attractions. On each tour, a Ljubljana Tourism guide will introduce visitors to the sustainability projects while taking them around the city’s green nooks and crannies.

15 October: Wood - a precious gift of nature (Jesenko Forest Trail)
Departure point: at 10:00 from Ljubljana Tourist Information Centre - TIC, next to the Triple Bridge.
Language options: Slovenian, English
Price: adults € 10.00, children from 4 to 12 years € 5.00
Information: Ljubljana Tourist Information Centre - TIC, Tel. 306 12 35, [email protected], www.visitljubljana.com

Highlights in October

1 Oct, Medieval Day: The Medieval Day features a large costumed procession of medieval …
14 Oct, Cirque du Soleil: Varekai: Don’t miss the famous Canadian artistic ensemble who “reinvented the circus” …
30 Oct, Ljubljana Marathon: The Ljubljana Marathon is a mammoth celebration of running.

Cover photo: J. Skok
Edited and published by: Ljubljana Tourism, Krekov trg 10, SI-1000 Ljubljana, T: +386 1 306 45 83, E: [email protected], W: www.visitljubljana.com
Photography: Archives of Ljubljana Tourism and individual event organisers
Prepress: Prajs d.o.o.
Printed by: Partner Graf d.o.o., Ljubljana, October 2016

Ljubljana Tourism is not liable for any inaccurate information appearing in the calendar. Committed to caring for the environment, we print on recycled paper. Share our commitment by sharing this publication with your friends.

Composers of the 17th Slowind Festival 2015

Márton Illés (b. 1975)

Márton Illés, born in Budapest, received his early training in piano, composition and percussion at various Kodály schools in Győr. From 1994 to 2001 he attended the Basle Academy of Music, where he studied with László Gyimesi (piano) and Detlev Müller-Siemens (composition). This was followed from 2001 to 2005 by studies in Karlsruhe with Wolfgang Rihm (composition) and Michael Reudenbach (theory). Later he received fellowships to the Villa Massimo in Rome (2009), the Villa Concordia in Bamberg (2011) and the Civitella Ranieri Centre in Umbria (2012). His catalogue of works includes pieces for solo instrument, chamber music, string quartets, vocal works, ensemble compositions, electronic music, two pieces of music theatre and works for string orchestra and full orchestra. He has been performed at leading international festivals and concert halls including the Rome Auditorium, Cité des Arts (Paris), Klangspuren (Schwaz, Austria), Kings Place (London), the Berlin Konzerthaus, Musica Strasbourg, the Munich Biennale, the Schleswig-Holstein Festival, the Tokyo Summer Festival, Ultraschall (Berlin), Eclat Festival (Stuttgart) and the Witten Contemporary Chamber Music Festival.

… Modern, the Ensemble Intercontemporain, the Minguet Quartet and the Munich Chamber Orchestra. In 2001 he played the solo part of his piano concerto Rajzok II in the Cologne Philharmonie with the Bamberg Symphony Orchestra, conducted by Jonathan Nott. He has taught theory at Karlsruhe University of Music since 2005 and composition at Würzburg University of Music since 2011. His works are published by Breitkopf & Härtel. Among his awards and honours are the Christoph and Stephan Kaske Prize (2005), the composers’ prize of the Ernst von Siemens Music Foundation (2008), the Schneider-Schott Prize and the Paul Hindemith Prize.

Letopis SAZU 2020

LETOPIS SLOVENSKE AKADEMIJE ZNANOSTI IN UMETNOSTI, 71. KNJIGA, 2020 / THE YEARBOOK OF THE SLOVENIAN ACADEMY OF SCIENCES AND ARTS, VOLUME 71/2020 / ANNALES ACADEMIAE SCIENTIARUM ET ARTIUM SLOVENICAE, LIBER LXXI (2020)

Ljubljana 2021. ISSN 0374-0315. Price: 15 €.

Adopted at the session of the Presidency of the Slovenian Academy of Sciences and Arts on 2 February 2021.

Address: SLOVENSKA AKADEMIJA ZNANOSTI IN UMETNOSTI, SI-1000 LJUBLJANA, Novi trg 3, p.p. 323, telephone (01) 470-61-00, e-mail: [email protected], website: www.sazu.si

CONTENTS

I SASA Organization: Assembly; full, associate and corresponding members
II Report on the Work of SASA
The Slovenian Academy of Sciences and Arts in 2020
Work of the SASA Assembly
I. Section for Historical and Social Sciences
II. Section for Philological and Literary Sciences
III. Section for Mathematical, Physical,

Sozvočje Svetov

Sozvočje svetov XV / Harmony of the Spheres XV. Narodna galerija / National Gallery of Slovenia, 2015–2016

Društvo Komorni godalni orkester Slovenske filharmonije (Chamber String Orchestra of the Slovenian Philharmonic Society), Mestni trg 17, 1000 Ljubljana. Telephone / fax: +386 (0)1 426 1420, [email protected], www.drustvo-kgosf.si. Represented by: Boris Šinigoj

Narodna galerija / National Gallery of Slovenia, Puharjeva 9, 1000 Ljubljana, Slovenija. Telephone: +386 (0)1 24 15 434, Fax: +386 (0)1 24 15 403, [email protected], www.ng-slo.si. Represented by: Barbara Jaki, director

Cover: Gregorio Lazzarini (1657–1730), Rinaldo in Armida / Rinaldo and Armida, oil, canvas, Inv. no. NG S 3057, Narodna galerija / National Gallery of Slovenia

Editors: Andrej Smrekar, Klemen Hvala
Text: Andrej Smrekar, Klemen Hvala
Translation: Andrej Smrekar
Editing: Alenka Klemenc
Graphic design: original design Miljenko Licul, mutation Kristina Kurent
Photography: Fototeka Narodne galerije, Ljubljana / Photo Library of the National Gallery of Slovenia
Printing: SOLOS d. o. o.
Print run: 600 copies
© Narodna galerija, Ljubljana, September 2015

The following made the Harmony of the Spheres XV possible: General sponsor; REPUBLIKA SLOVENIJA, MINISTRSTVO ZA KULTURO (Republic of Slovenia, Ministry of Culture)

The works commissioned by the Chamber String Orchestra of the Slovenian Philharmonic (Tomaž Bajželj's "Kontra bas" for double bass and strings, Nina Šenk's Concertino for cello and strings, and Matej Bonin's new work for accordion and strings) were supported in full by the Ernst von Siemens Music Foundation.

Izidor Kokovnik CV In

Slovenian accordion player Izidor Kokovnik started his first accordion lessons when he was 14 years old. He enrolled in the music school in the Slovenian town of Velenje. After studying music under Prof. Zmago Štih, Kokovnik went abroad to study under Prof. Ivan Koval at the Liszt School of Music Weimar, Germany. He graduated in 2008 with a diploma in education and continued his studies in art education under Prof. Ivan Koval. In October 2010, Kokovnik resumed his studies at the Academy of Music in Ljubljana (Slovenia) with Prof. Primož Parovel and two years under Prof. Luka Juhart until receiving his master’s degree. Some of Kokovnik’s most important performances are the concerts under the patronage of Jeunesses Musicales International of Slovenia, his re-creation of Rej (the composition by L. Lebič) at the Župančič Award Ceremony where composer Prof. Lojze Lebič received the Župančič Lifetime Achievement Award, his solo recital at the Nei Suoni dei Luoghi international music festival (Italy) and his solo recital in Velenje (in the context of the European Capital of Culture Maribor 2012). He performed with the Slovenian Philharmonic Orchestra (conductor Loris Voltolini) and he played at the Composer’s Evening event of Uroš Rojko and Slavko L. Šuklar. Kokovnik performed at the World Première of compositions by Marianne Richter (with the Ensemble for Contemporary Music Weimar, Germany), Prof. Wolf-Günter Leidel and Slovenian composer Blaženka Arnič-Lemež. Izidor Kokovnik has attended several seminars by accordionists such as Matti Rantanen, Iñaki Alberdi, Teodoro Anzellotti, Stefan Hussong, Hugo Noth, Margit Kern, Roman Pechmann, Corrado Rojac, harpsichordist Egon Mihajlović, composer Alan Bern and others.

Daily Schedule: IRZU Institute for Sonic Arts Research, Daily Guide for the International Computer Music Conference

DAILY SCHEDULE. IRZU _INSTITUTE FOR SONIC ARTS RESEARCH. DAILY GUIDE FOR THE INTERNATIONAL COMPUTER MUSIC CONFERENCE, 9. - 14. SEPTEMBER 2012, LJUBLJANA, SLOVENIA

Conference Chair: Miha Ciglar
Paper Chair: Matija Marolt
Paper Co-Chair: Martin Kaltenbrunner
Music Chair: Mauricio Valdes san Emeterio
Music Co-Chairs: Steven Loy, Gregor Pompe, Brane Zorman
Proceedings Co-Editors: Matija Marolt, Martin Kaltenbrunner, Miha Ciglar

IRZU _Institute for Sonic Arts Research, Ljubljana, Slovenia

SUPPORT

The Organizing committee of ICMC2011 acknowledge the generous support of:

CONTENTS

WELCOME NOTES
WELCOME FROM THE ICMA: TAE HONG PARK
THE _ICMC2012 ORGANIZING COMMITTEE
TECHNICAL SUPERVISORS
REGISTRATION DESK
WELCOME FROM IRZU _INSTITUTE FOR SONIC ARTS RESEARCH: MIHA CIGLAR
WELCOME FROM THE PAPER PROGRAM CHAIRS: MATIJA MAROLT AND MARTIN KALTENBRUNNER
WELCOME FROM THE MUSIC PROGRAM CHAIR: MAURICIO VALDÉS
_ICMC2012 VENUES & LOCAL TRANSPORT
SCHEDULE AND CONFERENCE OVERVIEW
PAPER SESSIONS
INSTALLATIONS
VIDEO LISTENING ROOM
LISTENING ROOM
CONCERTS
COMPOSER / PERFORMER BIOGRAPHIES
ACCOMPANYING EVENTS IN KIBERPIPA / CYBERPIPE
CD

WELCOME NOTES

WELCOME FROM THE ICMA

Dear 2012 ICMC Delegates, On behalf of the ICMA, it is a great pleasure to welcome you to the 2012 ICMC. Since 1974, we have convened annually at the ICMC conferences at various locations and countries all over the globe including Canada, China, Cuba, Denmark, France, Ireland, Japan, Germany, Greece, Netherlands, Scotland, Singapore, Spain, and the United States. We are excited that Slovenia has joined the expanding list of countries that have hosted our annual meeting as it shows that computer music is reaching more diverse audiences and communities.

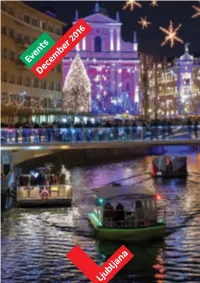

December 2016 Events

Events December 2016

Index: December in Ljubljana, Exhibitions, Music, Opera, theatre, dance, Miscellaneous, Sports

Guided tours of festive Ljubljana

December Tour of Festively Decorated Ljubljana

Romantic and magical. This is the best description of Ljubljana during the days of December. You will take a walk through festively decorated Ljubljana with a guide, look for a unique gift at the holiday fair and get to know the story and concept of the decorations, the creative talent behind which has been, for many years, the renowned painter Zmago Modic.

Schedule: 26 November 2016 - 8 January 2017 daily at 17:00
Duration: Two hours.
Language options: Slovenian, English and Italian. Other language options are available by arrangement with the tour organiser.
Departure point: Ljubljana Tourist Information Centre (Stritarjeva ulica street)
Price: €10.00 for adults, €5.00 for children aged between 4 and 12

Highlights in December

Kurzschluss: The Kurzschluss festival of electronic music presents a string of top-quality club events featuring …
December in Ljubljana, 27 Nov - 3 Jan: The festively decorated old city centre will see a range of free open-air …
Festive Fair, 27 Nov - 3 Jan: Each year in December, Ljubljana’s Festive Fair becomes the centre of the …

Cover photo: D. Wedam
Edited and published by: Ljubljana Tourism, Krekov trg 10, SI-1000 Ljubljana, T: +386 1 306 45 83, E: [email protected], W: www.visitljubljana.com
Photography: Archives of Ljubljana Tourism and individual event organisers
Prepress: Prajs d.o.o.
Printed by: Partner Graf d.o.o., Ljubljana, December 2016

Ljubljana Tourism is not liable for any inaccurate information appearing in the calendar. Committed to caring for the environment, we print on recycled paper.

Trumpet, at 6.30 pm; Bojana Karuza, piano

Knights' Hall, Križanke and Slovenian Philharmonic, 8. 10. 2020–1. 4. 2021

MLADI VIRTUOZI / YOUNG VIRTUOSI: International Music Cycle of the Festival Ljubljana 2020/2021

The programme is supported by: The Festival Ljubljana was founded by the City of Ljubljana.

Information: FESTIVAL LJUBLJANA, Trg francoske revolucije 1, 1000 Ljubljana, Tel.: + 386 (0)1 / 241 60 00, ljubljanafestival.si; [email protected], www.facebook.com/ljubljanafestival, www.instagram.com/festival_ljubljana/, www.youtube.com/user/TheFestivalLjubljana

The Ljubljana Festival reserves the right to alter the programme.

Published by: Festival Ljubljana, October 2020
For the Publisher: Darko Brlek, General and Artistic Director, Honorary member of the European Festivals Association
Edited by: Samantha Reich, Evelin Frčec, Katja Bogovič
Translation: Amidas, d. o. o.
Design: Art design, d. o. o.
Printing: Bucik, d.o.o.
Circulation: 1.500

28th YOUNG VIRTUOSI, 8. 10. 2020–1. 4. 2021

Join us for magical evenings full of youthful energy! The Young Virtuosi International Music Cycle offers opportunities to nurture the young listeners and performers on whom today's world of culture and the arts depends. It was founded in 1992 by Darko Brlek, General and Artistic Director of the Festival Ljubljana, with the vision of giving rising young musical talents a serious concert presentation. This autumn we thus proudly step into this musical story for the 28th …