Comparison of Artificial Intelligence Techniques for Project Conceptual Cost Prediction


Recommended publications

GAO-20-195G, Cost Estimating and Assessment Guide

COST ESTIMATING AND ASSESSMENT GUIDE: Best Practices for Developing and Managing Program Costs. GAO-20-195G, March 2020. Contents: Preface; Introduction; Chapter 1, Why Government Programs Need Cost Estimates and the Challenges in Developing Them (Cost Estimating Challenges); Chapter 2, Cost Analysis and Cost Estimates (Types of Cost Estimates; Significance of Cost Estimates; Cost Estimates in Acquisition; The Importance of Cost Estimates in Establishing Budgets; Cost Estimates and Affordability); Chapter 3, The Characteristics of Credible Cost Estimates and a Reliable Process for Creating Them (The Four Characteristics of a Reliable Cost Estimate; Best Practices Related to Developing and Maintaining a Reliable Cost Estimate; Cost Estimating Best Practices and the Estimating Process); Chapter 4, Step 1: Define the Estimate’s Purpose (Scope; Including All Costs in a Life Cycle Cost Estimate; Survey of Step 1); Chapter 5, Step 2: Developing the Estimating Plan (Team Composition and Organization; Study Plan and Schedule; Cost Estimating Team; Certification and Training for Cost Estimating and EVM Analysis; Survey of Step 2); Chapter 6, Step 3: Define the Program - Technical Baseline Description (Definition and Purpose; Process; Contents; Key System Characteristics and Performance Parameters; Survey of Step 3); Chapter 7, Step 4: Determine the Estimating Structure - Work Breakdown Structure: WBS Concepts; Common WBS Elements; WBS Development

Introduction to Estimating

CHAPTER ONE: INTRODUCTION TO ESTIMATING. 1–1 GENERAL INTRODUCTION. Building construction estimating is the determination of probable construction costs of any given project. Many items influence and contribute to the cost of a project; each item must be analyzed, quantified, and priced. Because the estimate is prepared before the actual construction, much study and thought must be put into the construction documents. The estimator who can visualize the project and accurately determine its cost will become one of the most important persons in any construction company. For projects constructed with the design-bid-build delivery system, it is necessary for contractors to submit a competitive cost estimate for the project. The competition in construction bidding is intense, with multiple firms vying for a single project. The estimator is responsible for including everything contained in the drawings and the project manual in the submitted bid. Because of the complexity of the drawings and the project manual, coupled with the potential cost of an error, the estimator must read everything thoroughly and recheck all items. Initially, the plans and the project manual must be checked to ensure that they are complete. Then the estimator can begin the process of quantifying all of the materials presented. Every item included in the estimate must contain as much information as possible. The quantities determined for the estimate will ultimately be used to order and purchase the needed materials. The estimated quantities and their associated projected costs will become the basis of project controls (e.g., budget and baseline schedule) in the field. Estimating the ultimate cost of a project requires the in-

Estimating Project Cost Contingency – a Model and Exploration of Research Questions

Estimating Project Cost Contingency – A Model and Exploration of Research Questions. David Baccarini, Department of Construction Management, Curtin University of Technology, PO Box U198, Perth 6845, Western Australia, Australia. The cost performance of building construction projects is a key success criterion for project sponsors. Projects require budgets to set the sponsor’s financial commitment and provide the basis for cost control and measurement of cost performance. A key component of a project budget is cost contingency. A literature review of the concept of project cost contingency is presented from which a model for the estimating of project cost contingency is derived. This model is then used to stimulate a range of important research questions in regard to estimating project cost contingency and the measurement of its accuracy. Keywords: cost contingency, project cost performance, project risk management. INTRODUCTION The cost performance of construction projects is a key success criterion for project sponsors. Project cost overruns are commonplace in construction (Touran 2003). Cost contingency is included within a budget estimate so that the budget represents the total financial commitment for the project sponsor. Therefore the estimation of cost contingency and its ultimate adequacy is of critical importance to projects. The primary focus of this paper is a literature review, leading to a tentative model for the concept of project cost contingency. The literature on project cost contingency tends to focus predominantly upon the micro-process of estimation. There is no model that sets out a macro perspective to provide a holistic understanding of the estimating process for project cost contingency. A model for project cost contingency, from the perspective of the project, is presented to stimulate the identification of research questions regarding the estimating of project cost contingency and measurement of its accuracy.

Cost Estimating Guide

U.S. Department of Energy, Washington, D.C. ADMIN CHANGE. DOE G 413.3-21 Chg 1: 10-22-2015. SUBJECT: ADMINISTRATIVE CHANGE TO DOE G 413.3-21, COST ESTIMATING GUIDE. 1. EXPLANATION OF CHANGES. These Administrative Changes are limited to changing the Office of Primary Interest (OPI) to the Office of Project Management Oversight and Assessments. 2. LOCATIONS OF CHANGES: Page: Title. Changed: "INITIATED BY: Office of Management". To: "INITIATED BY: Office of Project Management Oversight & Assessments". NOT MEASUREMENT SENSITIVE. DOE G 413.3-21, Approved 5-9-2011, Chg 1 (Admin Chg) 10-22-2015. Cost Estimating Guide [This Guide describes suggested non-mandatory approaches for meeting requirements. Guides are not requirements documents and are not to be construed as requirements in any audit or appraisal for compliance with the parent Policy, Order, Notice, or Manual.] U.S. Department of Energy, Washington, D.C. 20585. AVAILABLE ONLINE AT: https://www.directives.doe.gov. INITIATED BY: Office of Project Management Oversight & Assessments. FOREWORD This Department of Energy (DOE) Guide may be used by all DOE elements. This Guide provides uniform guidance and best practices that describe the methods and procedures that could be used in all programs and projects at DOE for preparing cost estimates. This guidance applies to all phases of the Department’s acquisition of capital asset life-cycle management activities. Life-cycle costs (LCCs) are the sum total of the direct, indirect, recurring, nonrecurring, and other costs incurred or estimated to be incurred in the design, development, production, operation, maintenance, support, and final disposition of a system over its anticipated useful life span.

Cost Estimating Requirements Handbook, November 2007

Cost Estimating Requirements Handbook, National Park Service, November 2007. Table of Contents: Chapter 1: Introduction (1.1 Purpose; 1.2 Application; 1.3 Cost Management Policies); Chapter 2: Design Estimating Submissions (2.1 Submission Levels; 2.2 Phased Projects; 2.3 Multi-Structure (Multi-Asset) Estimates; 2.4 Optional Contract Line Items; 2.5 Resolution of NPS Comments); Chapter 3: Cost Estimating Practices (3.1 Cost Management; 3.2 Estimating Formats [Work Breakdown Structure (WBS)]; 3.3 English Units; 3.4 Unit Pricing; 3.5 Cost Estimate Data Sources; 3.6 Estimate Mark-ups; 3.7 Adjusting for Escalation); Chapter 4: Standards of Conduct (4.1 Standards; 4.2 False Statements); Appendix (A. Conceptual (Class C) Estimates; B. Budgetary (Class B) Estimates; C. Actual Construction (Class A) Estimate; D. Market Survey; E. Scope and Cost Validation Report; F. Cost Comparability Analysis; G. Asset Categories; H. Uniformat II; I. CSI MasterFormat; J. Federal Acquisition Regulation; K. Direct/Net/Gross Construction Costs and Total Project Costs); Samples (1. Class A Construction Cost Estimate; 2. Class B Construction Cost Estimate; 3. Class C Construction Cost Estimate; 4. Cost Comparability Analysis Worksheet; 5. Cost Comparability Data Checklist). CHAPTER 1. Introduction. 1.1 Purpose. This handbook supports construction programs within the National Park Service. Technical and administrative requirements are presented for the development, preparation and submittal of cost estimates during a construction project’s planning, design, and construction process.

Compatibility Between BIM Software and Cost Estimate Tools: a Comparison Between Two Directions of Solutions

PM World Journal, Vol. VIII, Issue VIII, September 2019 (www.pmworldjournal.com). Featured Paper: Compatibility between BIM Software and Cost Estimating Tools, by Ying Xu. Compatibility Between BIM Software and Cost Estimate Tools: A Comparison between Two Directions of Solutions. Ying XU. ABSTRACT Inaccuracy and redundant work are major issues in cost estimating. The use of a Building Information Model (BIM) can increase efficiency and reduce some human errors. However, many BIM software packages, cost estimating tools, and cost databases exist across different companies, and these platforms all differ in their features, so exchanging data between them remains difficult. It is important to find a solution to this incompatibility. This paper introduces two directions of solutions, “from BIM to cost estimating” and “from cost estimating to BIM”, and explains in detail the pros and cons of each. Using a Multi-Attribute Decision Making (MADM) method, the two directions are compared through both quantitative and qualitative analysis. The analysis shows that “From BIM to cost estimation” is the better solution. The key to realizing compatibility with this solution is a universally adaptable coding system. Keywords: Compatibility, Building Information Modeling, Cost Estimation, Bill of Quantity, Material Take-off, Software. INTRODUCTION 1. The inaccuracy of cost estimation. Statistics show that in 2015, fewer than one-third of projects managed to come within 10 percent of the planned budget. A large percentage of projects face the problem of cost overrun.

Cost Estimating and Best Practices

Appendix B: Cost Estimating and Best Practices. DISCLAIMER: This is a working draft document for discussion purposes only. All information contained herein is subject to change upon further review by the U.S. Nuclear Regulatory Commission. Contents: Abbreviations and Acronyms; Purpose; Guidance Overview (B.2.1 Purpose of the Cost Estimate; B.2.2 Overview of the Cost Estimating Process); Cost Estimating Inputs (B.3.1 Project Requirements; B.3.2 Documentation Requirements); Cost Estimating Characteristics and Classifications: B.4.1 Planning the Cost Estimates; B.4.2 Cost Estimate

NDOT Risk Management and Risk-Based Cost Estimation

NEVADA DEPARTMENT OF TRANSPORTATION. RISK MANAGEMENT AND RISK-BASED COST ESTIMATION GUIDELINES, August 2021. The goal of this manual is to provide guidance to NDOT personnel and consultants in best practice methods of risk management and risk-based cost estimation. It is recognized that guidelines cannot provide entirely complete and practical guidance applicable to all situations and cannot replace experience and sound judgment. As such, deviations from these guidelines may, in some cases, be unavoidable or otherwise justifiable. Procedure for Guideline Revisions: The guideline will be periodically revised or updated. For edits or updates, contact the Project Management Division at (775) 888-7321. All updates will be available on the Project Management Division SharePoint Portal, which should be visited regularly for updated information. Responsibility: The Project Management Division is responsible for edits and updates to this document. Temporary Revisions: Temporary revisions will be issued by the Project Management Division to reflect updated/revised procedures. These will be reflected on dated errata sheet(s) posted on the Project Management Division SharePoint Portal. Scheduled Revisions: As deemed necessary by the Project Management Chief, the Project Management Chief will assemble a Review Team for reviewing the guidelines and errata sheets to determine if a revised edition of the guidelines is required. The Project Management Division Chief will evaluate and approve the recommendations of the team for revisions to the guidelines. Foreword: These guidelines address the first step in NDOT project management’s vision of achieving statewide uniformity and consistency of project cost estimates and department-wide priority on estimating, managing, and controlling costs.

Project Estimating Requirements for the Public Buildings Service

GSA Public Buildings Service, P-120: project estimating requirements for the public buildings service. U.S. General Services Administration, Office of the Chief Architect, January 2007. table of contents: introduction; document organization; acknowledgements. 1 general requirements and principles: 1 general philosophy; 2 estimator qualification and ethics (requirements independent government estimate (IGE) ethics due diligence expectations penalties); 3 cost estimating and management practices (cost management principles estimating formats); 4 estimating requirements (general warm-lit shell versus tenant improvement (TI) cost estimates contents and degree of detail cost-estimating and cost-management tools). 2 prospectus-level projects product/deliverable requirements: 1 preliminary planning and programming project requirements (project planning guide level and format requirements); 2 design and construction phase cost estimating (basic concept: for all phases cost estimates and summaries market survey cost growth report space-type cost analysis life-cycle cost analyses value-engineering studies budget analysis requirements for bid submission construction-award bid analysis for prospectus-level projects cost database construction modifications and claims analysis value engineering change proposals (VECP’s) risk management occupancy agreements and tenant-improvements pricing). 3 delivery methods and deliverables: 1 overview

Cost Estimating Guide

NOT MEASUREMENT SENSITIVE. DOE G 413.3-21A, 6-6-2018. Cost Estimating Guide [This Guide describes suggested non-mandatory approaches for meeting requirements. Guides are not requirements documents and are not to be construed as requirements in any audit or appraisal for compliance with the parent Policy, Order, Notice, or Manual.] U.S. Department of Energy, Washington, D.C. 20585. AVAILABLE ONLINE AT: www.directives.doe.gov. INITIATED BY: Office of Project Management. FOREWORD A strong cost estimating foundation is essential to achieving program and project success. Every Federal cost estimating practitioner is challenged to strive for high quality cost estimates by using the preferred best practices, methods and procedures contained in this Department of Energy (DOE) Cost Estimating Guide. Content in this guide supersedes DOE Guide 413.3-21, Chg1, Cost Estimating Guide, 10-22-2015. The Guide is applicable to all phases of the Department’s acquisition of capital asset life-cycle management activities and may be used by all DOE elements, programs and projects. When considering unique attributes, technology, and complexity, DOE personnel are advised to carefully compare alternate methods or tailored approaches against this uniform, comprehensive cost estimating guidance. Programs may specify more specific processes and procedures that augment or replace those in this guide (e.g. NNSA Life Extension Programs (LEPs) fall under the process/timeline in the Phase 6.X process). Guides provide non-mandatory supplemental information and additional guidance regarding executing the Department’s Policies, Orders, Notices, and regulatory standards. Guides may also provide acceptable methods for implementing these requirements.

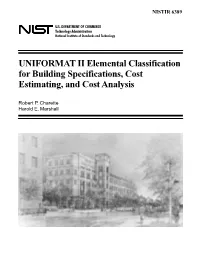

UNIFORMAT II Elemental Classification for Building Specifications, Cost Estimating, and Cost Analysis

NISTIR 6389. U.S. DEPARTMENT OF COMMERCE, Technology Administration, National Institute of Standards and Technology. UNIFORMAT II Elemental Classification for Building Specifications, Cost Estimating, and Cost Analysis. Robert P. Charette, Harold E. Marshall.

ASTM Uniformat II Classification for Building Elements (E1557-97)

| Level 1: Major Group Elements | Level 2: Group Elements | Level 3: Individual Elements |
|---|---|---|
| A SUBSTRUCTURE | A10 Foundations | A1010 Standard Foundations; A1020 Special Foundations; A1030 Slab on Grade |
| A SUBSTRUCTURE | A20 Basement Construction | A2010 Basement Excavation; A2020 Basement Walls |
| B SHELL | B10 Superstructure | B1010 Floor Construction; B1020 Roof Construction |
| B SHELL | B20 Exterior Enclosure | B2010 Exterior Walls; B2020 Exterior Windows; B2030 Exterior Doors |
| B SHELL | B30 Roofing | B3010 Roof Coverings; B3020 Roof Openings |
| C INTERIORS | C10 Interior Construction | C1010 Partitions; C1020 Interior Doors; C1030 Fittings |
| C INTERIORS | C20 Stairs | C2010 Stair Construction; C2020 Stair Finishes |
| C INTERIORS | C30 Interior Finishes | C3010 Wall Finishes; C3020 Floor Finishes; C3030 Ceiling Finishes |
| D SERVICES | D10 Conveying | D1010 Elevators & Lifts; D1020 Escalators & Moving Walks; D1090 Other Conveying Systems |
| D SERVICES | D20 Plumbing | D2010 Plumbing Fixtures; D2020 Domestic Water Distribution; D2030 Sanitary Waste; D2040 Rain Water Drainage; D2090 Other Plumbing Systems |
| D SERVICES | D30 HVAC | D3010 Energy Supply; D3020 Heat Generating Systems; D3030 Cooling Generating Systems; D3040 Distribution Systems; D3050 Terminal & Package Units; D3060 Controls & Instrumentation; D3070 Systems Testing & Balancing; D3090 Other HVAC Systems & Equipment |
| D SERVICES | D40 Fire Protection | D4010 |