The Sellers-Chell Method: a Standardized Technique for Assessing and Selecting a Model-Based Systems Engineering (MBSE) Tool

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

07 Requirements What About RFP/RFB/Rfis?

CMPSCI520/620 07 Requirements & UML Intro 07 Requirements SW Requirements Specification • Readings • How do we communicate the Requirements to others? • [cK99] Cris Kobryn, Co-Chair, “Introduction to UML: Structural and Use Case Modeling,” UML Revision Task Force Object Modeling with OMG UML Tutorial • It is common practice to capture them in an SRS Series © 1999-2001 OMG and Contributors: Crossmeta, EDS, IBM, Enea Data, • But an SRS doesn’t need to be a single paper document Hewlett-Packard, IntelliCorp, Kabira Technologies, Klasse Objecten, Rational Software, Telelogic, Unisys http://www.omg.org/technology/uml/uml_tutorial.htm • Purpose • [OSBB99] Gunnar Övergaard, Bran Selic, Conrad Bock and Morgan Björkande, “Behavioral Modeling,” UML Revision Task Force, Object Modeling with OMG UML • Contractual requirements Tutorial Series © 1999-2001 OMG and Contributors: Crossmeta, EDS, IBM, Enea elicitation Data, Hewlett-Packard, IntelliCorp, Kabira Technologies, Klasse Objecten, Rational • Baseline Software, Telelogic, Unisys http://www.omg.org/technology/uml/uml_tutorial.htm • for evaluating subsequent products • [laM01] Maciaszek, L.A. (2001): Requirements Analysis and System Design. • for change control requirements Developing Information Systems with UML, Addison Wesley Copyright © 2000 by analysis Addison Wesley • Audience • [cB04] Bock, Conrad, Advanced Analysis and Design with UML • Users, Purchasers requirements http://www.kabira.com/bock/ specification • [rM02] Miller, Randy, “Practical UML: A hands-on introduction for developers,” -

Activity Diagram Inheritance1

Activity Diagram Inheritance1 Arnd Schnieders, Frank Puhlmann Hasso-Plattner-Institute for IT Systems Engineering at the University of Potsdam {schnieders, puhlmann}@hpi.uni-potsdam.de Abstract This paper outlines the ongoing work on the realization of a flexible inheritance mechanism for Activity Diagrams that assures the maintenance of syntactical correctness for the derived Activity Diagrams. The objective is to support the reuse of process models especially by applying Activity Diagram inheritance as a variability mechanism in the context of product line oriented software development. Keywords: Activity Diagrams, domain engineering, process inheritance, variability mechanism 1. Introduction In industry similar products are frequently developed and produced as product lines. One of the main advantages is a gain of efficiency in development and production since parts, which are common for several product line members, can be reused optimally. This approach has been transferred successfully to software development and is also known by the name domain engineering. Variability mechanisms are thereby important for the effectiveness of domain engineering. A great number of variability mechanisms has already been published [5, 9, 11, 13, 18]. Unfortunately, existing variability mechanisms only refer to the static aspects of a software system’s design while the impact of variability mechanisms on the process view on the system has been strongly neglected. Therefore, the first contribution of this paper is to contribute to closing this gap by making the important variability mechanism inheritance available for process design models in order to derive process model variants. The second contribution of this paper is to show how the defined process inheritance mechanism is realized concretely for UML 2.0 Activity Diagrams. -

Lecture for Chapter 2, Modeling With

09/10/2019 Overview: modeling with UML Modeling with UML What is modeling? What is UML? Use case diagrams Class diagrams Oriented Software Engineering - Object What is modeling? Example: street map Modeling consists of building an abstraction of reality. Abstractions are simplifications because: They ignore irrelevant details and They only represent the relevant details. What is relevant or irrelevant depends on the purpose of the model. Why model software? Systems, Models and Views Why model software? A model is an abstraction describing a subset of a system A view depicts selected aspects of a model Software is getting increasingly more complex A notation is a set of graphical or textual rules for depicting views Windows XP > 40 million lines of code Views and models of a single system may overlap each other A single programmer cannot manage this amount of code in its entirety. Code is not easily understandable by developers who did not Examples: write it System: Aircraft We need simpler representations for complex systems Models: Flight simulator, scale model Modeling is a mean for dealing with complexity Views: All blueprints, electrical wiring, fuel system 1 09/10/2019 Systems, Models and Views Models, Views and Systems (UML) Flightsimulator Blueprints * * System Model View Aircraft Described by Depicted by Model 2 View 2 View 1 System Airplane: System View 3 Model 1 Scale Model: Model Flight Simulator: Model Electrical Wiring Scale Model Blueprints: View Fuel System: View Electrical Wiring: View What is UML? What is UML? UML (Unified Modeling Language) The Unified Modeling Language (UML) is a language for Specifying An emerging standard for modeling object-oriented software. -

The Guide to Succeeding with Use Cases

USE-CASE 2.0 The Guide to Succeeding with Use Cases Ivar Jacobson Ian Spence Kurt Bittner December 2011 USE-CASE 2.0 The Definitive Guide About this Guide 3 How to read this Guide 3 What is Use-Case 2.0? 4 First Principles 5 Principle 1: Keep it simple by telling stories 5 Principle 2: Understand the big picture 5 Principle 3: Focus on value 7 Principle 4: Build the system in slices 8 Principle 5: Deliver the system in increments 10 Principle 6: Adapt to meet the team’s needs 11 Use-Case 2.0 Content 13 Things to Work With 13 Work Products 18 Things to do 23 Using Use-Case 2.0 30 Use-Case 2.0: Applicable for all types of system 30 Use-Case 2.0: Handling all types of requirement 31 Use-Case 2.0: Applicable for all development approaches 31 Use-Case 2.0: Scaling to meet your needs – scaling in, scaling out and scaling up 39 Conclusion 40 Appendix 1: Work Products 41 Supporting Information 42 Test Case 44 Use-Case Model 46 Use-Case Narrative 47 Use-Case Realization 49 Glossary of Terms 51 Acknowledgements 52 General 52 People 52 Bibliography 53 About the Authors 54 USE-CASE 2.0 The Definitive Guide Page 2 © 2005-2011 IvAr JacobSon InternationAl SA. All rights reserved. About this Guide This guide describes how to apply use cases in an agile and scalable fashion. It builds on the current state of the art to present an evolution of the use-case technique that we call Use-Case 2.0. -

OMG Systems Modeling Language (OMG Sysml™) Tutorial 25 June 2007

OMG Systems Modeling Language (OMG SysML™) Tutorial 25 June 2007 Sanford Friedenthal Alan Moore Rick Steiner (emails included in references at end) Copyright © 2006, 2007 by Object Management Group. Published and used by INCOSE and affiliated societies with permission. Status • Specification status – Adopted by OMG in May ’06 – Finalization Task Force Report in March ’07 – Available Specification v1.0 expected June ‘07 – Revision task force chartered for SysML v1.1 in March ‘07 • This tutorial is based on the OMG SysML adopted specification (ad-06-03-01) and changes proposed by the Finalization Task Force (ptc/07-03-03) • This tutorial, the specifications, papers, and vendor info can be found on the OMG SysML Website at http://www.omgsysml.org/ 7/26/2007 Copyright © 2006,2007 by Object Management Group. 2 Objectives & Intended Audience At the end of this tutorial, you should have an awareness of: • Benefits of model driven approaches for systems engineering • SysML diagrams and language concepts • How to apply SysML as part of a model based SE process • Basic considerations for transitioning to SysML This course is not intended to make you a systems modeler! You must use the language. Intended Audience: • Practicing Systems Engineers interested in system modeling • Software Engineers who want to better understand how to integrate software and system models • Familiarity with UML is not required, but it helps 7/26/2007 Copyright © 2006,2007 by Object Management Group. 3 Topics • Motivation & Background • Diagram Overview and Language Concepts • SysML Modeling as Part of SE Process – Structured Analysis – Distiller Example – OOSEM – Enhanced Security System Example • SysML in a Standards Framework • Transitioning to SysML • Summary 7/26/2007 Copyright © 2006,2007 by Object Management Group. -

PART 3: UML Dynamic Modelling Notations • State Machines/Statecharts • Collaboration Diagrams • Sequence Diagrams • Activity Diagrams

PART 3: UML Dynamic Modelling Notations • State machines/statecharts • Collaboration diagrams • Sequence diagrams • Activity diagrams. Chapter 19 of the textbook is relevant to this part. 1 State machines • State machines describe dynamic behaviour of objects, show life history of objects over time + object communications. • Used for real-time system design (eg., robotics); GUI design (states represent UI screens/modes). Example shows simple state machine with two states, On and Off and transitions between them. 2 Switch Off swon swoff On Simple state machine 3 State machines Elements of a state machine are: States: Rounded-corner boxes, containing state name. Transitions: Arrows from one state, source of transition, to another, target, labelled with event that causes transition. Default initial state: State of object at start of its life history. Shown as target of transition from initial pseudostate (black filled circle). Termination of state machine can be shown by `bullseye' symbol. 4 State machines UML divides state machines into two kinds: 1. Protocol state machines { describe allowed life histories of objects of a class. Events on transitions are operations of that class, transitions may have pre and post conditions. Transitions cannot have generated actions (although we will allow this). 2. Behaviour state machines { describe operation execution/implementation of object behaviour. Transitions do not have postconditions, but can have actions. State machines describe behaviour of objects of a particular class, or execution processing of an operation. 5 State machines • Class diagrams describe system data, independently of time. • State machines show how system/objects can change over time. • Switch state machine is protocol state machine for objects of Switch class. -

UML Tutorial: Sequence Diagrams

UML Tutorial: Sequence Diagrams. Robert C. Martin Engineering Notebook Column April, 98 In my last column, I described UML Collaboration diagrams. Collaboration diagrams allow the designer to specify the sequence of messages sent between objects in a collaboration. The style of the diagram emphasizes the relationships between the objects as opposed to the sequence of the messages. In this column we will be discussing UML Sequence diagrams. Sequence diagrams contain the same information as Collaboration diagrams, but emphasize the sequence of the messages instead of the relationships between the objects. The Cellular Phone Revisited Here again is the final collaboration diagram from last column’s Cellular Phone example. (See Figure 1.) 1*:ButtonPressed() :DigitButton Digit:Button 1.1:Digit(code) Adapter 2:ButtonPressed() :SendButton Send:Button :Dialler Adapter 2.1:Send() 1.1.2:EmitTone(code) 2.1.1:Connect(pno) 1.1.1:DisplayDigit(code) 2.1.1.1:InUse() :Cellular display display:Dialler :Speaker Radio :CRDisplay Display Figure 1: Collaboration diagram of Cellular Phone. The Sequence diagram that corresponds to this model is shown in Figure 2. It is pretty easy to see what the diagrams in Figure 2 are telling us, especially when we compare them to Figure 1. Let’s walk through the features. First of all, there are two sequence diagrams present. The first one captures the course of events that takes place when a digit button is pressed. The second captures what happens when the user pushes the ‘send’ button in order to make a call. At the top of each diagram we see the rectangles that represent objects. -

How to Build a UML Model Announcements Rational Unified

Announcements How to build a UML model ❚ HW3 – Phase 1 due on Feb 6th, 5:00pm (need to create new pairs, accounts) ❚ Feedback on M2: turn procedural code RUP into OO code, Planning game (show tables Steriotypes, packages, and with features, subtasks, estimates, object diagrams actuals, pair-programming partners) Case study ❚ Register for the Feb 18 Industry Reception 1 CS361 7-2 Rational Unified Process How RUP builds a model ❚ Designed to work with UML ❚ Gather use cases from customer ❚ No longer being promoted by IBM ❚ Make initial object model ❚ Roles - (out of 20 or so) ❚ For each use case: ❙ Architect ❙ step through use case, ❙ UI designer ❙ note the objects it requires ❙ Use case specifier ❙ note the operations it uses ❙ Use case engineer ❙ Component engineer ❚ Clean up the model CS361 7-3 CS361 7-4 Architect UI design ❚ Determine which use cases need to be ❚ Logical design developed first. ❙ Which user-interface elements are needed for ❚ High priority use cases each use case? ❙ describe important and critical functionality ❙ What information does the actor need to receive from or give to the system? ❘ security ❘ database ❚ Prototyping ❙ hard to retrofit later ❙ Often is on paper. ❙ Test on real users CS361 7-5 CS361 7-6 1 Requirements Specification Analysis model ❚ Not all requirements go in a use case. ❚ Class diagrams ❙ Example: security ❙ vague interfaces (“responsibilities”) ❙ Example: global performance ❙ vague associations (ignore navigability) ❚ Requirements document describes all ❙ stereotype classes: other requirements -

Plantuml Language Reference Guide (Version 1.2021.2)

Drawing UML with PlantUML PlantUML Language Reference Guide (Version 1.2021.2) PlantUML is a component that allows to quickly write : • Sequence diagram • Usecase diagram • Class diagram • Object diagram • Activity diagram • Component diagram • Deployment diagram • State diagram • Timing diagram The following non-UML diagrams are also supported: • JSON Data • YAML Data • Network diagram (nwdiag) • Wireframe graphical interface • Archimate diagram • Specification and Description Language (SDL) • Ditaa diagram • Gantt diagram • MindMap diagram • Work Breakdown Structure diagram • Mathematic with AsciiMath or JLaTeXMath notation • Entity Relationship diagram Diagrams are defined using a simple and intuitive language. 1 SEQUENCE DIAGRAM 1 Sequence Diagram 1.1 Basic examples The sequence -> is used to draw a message between two participants. Participants do not have to be explicitly declared. To have a dotted arrow, you use --> It is also possible to use <- and <--. That does not change the drawing, but may improve readability. Note that this is only true for sequence diagrams, rules are different for the other diagrams. @startuml Alice -> Bob: Authentication Request Bob --> Alice: Authentication Response Alice -> Bob: Another authentication Request Alice <-- Bob: Another authentication Response @enduml 1.2 Declaring participant If the keyword participant is used to declare a participant, more control on that participant is possible. The order of declaration will be the (default) order of display. Using these other keywords to declare participants -

Sysml Distilled: a Brief Guide to the Systems Modeling Language

ptg11539604 Praise for SysML Distilled “In keeping with the outstanding tradition of Addison-Wesley’s techni- cal publications, Lenny Delligatti’s SysML Distilled does not disappoint. Lenny has done a masterful job of capturing the spirit of OMG SysML as a practical, standards-based modeling language to help systems engi- neers address growing system complexity. This book is loaded with matter-of-fact insights, starting with basic MBSE concepts to distin- guishing the subtle differences between use cases and scenarios to illu- mination on namespaces and SysML packages, and even speaks to some of the more esoteric SysML semantics such as token flows.” — Jeff Estefan, Principal Engineer, NASA’s Jet Propulsion Laboratory “The power of a modeling language, such as SysML, is that it facilitates communication not only within systems engineering but across disci- plines and across the development life cycle. Many languages have the ptg11539604 potential to increase communication, but without an effective guide, they can fall short of that objective. In SysML Distilled, Lenny Delligatti combines just the right amount of technology with a common-sense approach to utilizing SysML toward achieving that communication. Having worked in systems and software engineering across many do- mains for the last 30 years, and having taught computer languages, UML, and SysML to many organizations and within the college setting, I find Lenny’s book an invaluable resource. He presents the concepts clearly and provides useful and pragmatic examples to get you off the ground quickly and enables you to be an effective modeler.” — Thomas W. Fargnoli, Lead Member of the Engineering Staff, Lockheed Martin “This book provides an excellent introduction to SysML. -

VPUML Data Sheet

Datasheet Visual Paradigm for UML 7.0 Model-Code-Deploy Platform Boost Productivity with Innovative and Intuitive Technologies – From Requirements to Code Datasheet Visual Paradigm for UML 7.0 What VP-UML Provides? UML Modeling Business Process Modeling Visualize, design, communicate and Visualize, understand, analyze and document UML 2.2 diagrams with improve business process with BPMN, intuitive and sophisticated visual Process Map, Data Flow Diagram and modeling environment. Organization Chart. Database Modeling Requirement Modeling Design, generate, reverse and synchronize Capture, organize, manage and realize database with sophisticated ERD. requirements with SysML Requirement Generate professional documentation and Diagram. Build or customize your own Java Object-Relational Mapping layer requirement types. (Hibernate). Mind Mapping Diagramming Toolset Brainstorm, outline, share and discuss Create and maintain business and concepts and idea with mind mapping engineering diagrams with Visual tool. Mind Mapping diagram nodes can Paradigm cutting edge visual modeling link to any BPMN or UML elements to toolset such as resource-centric interface, keep the traceability. mouse gesture, sweeper, magnet, styles and layer. Page 2 of 6 Datasheet Visual Paradigm for UML 7.0 What VP-UML Provides? Modeling Toolset Documentation Generation Beyond diagramming there are various Design and generate professional toolsets to improve the quality of visual documentation in PDF, MS Word 2007 modeling. Nicknamer helps maintain and HTML formats. Keeping database, single element with multiple names for program and technical specification multi-national team. Visual Diff shows up-to-date with minimal effort. differences between diagrams and revisions visually. Animating UML diagram with Animacian. Team Collaboration Interoperability & Integration Modeling collaboratively and Export, import and merge with XML, simultaneously with VP Teamwork XMI, MS Excel formats. -

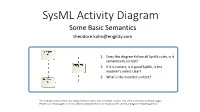

Sysml Activity Diagram Some Basic Semantics [email protected]

SysML Activity Diagram Some Basic Semantics [email protected] 1. Does this diagram follow all SysML rules, is it semantically correct? 2. If it is correct, is it good SysML, is the modeler’s intent clear? 3. What is the modeler’s intent? The semantics discussed are not comprehensive; rather, they are meant to cover only some of the most common usages. Readers are encouraged to consult additional documentation to obtain a fuller understanding of the SysML grammar. Activity Edges Control and Object Flows Control Flow Object Flow The most basic function of Activity Diagrams is the Object flow Pin. Control flow activity edge, commutation of information activity edge, solid line. among Actions. This is dashed line. accomplished by using either or both types of activity edges, as required: Best Practices 1. Use Object Flows whenever possible as they explicitly specify what is being communicated between Actions. 2. The two object flow constructs on the right are semantically identical. In general, the use of pins is preferred as it is more concise and allows for typecasting, should that be required. Activity Edge Semantics 1. An Action cannot start until all Control and Object tokens are received at the Action edge. 2. When an Action finishes, it provides all Control and Object tokens to their respective flows. Diagram Flow Discussion 1. When A finishes, it provides The Activity Diagram on the Control tokens to B and C at the left is grammatically correct. same time. However, the Control Flow 2. B then starts. When B finishes, it between A and B is provides a Control token to C.