Mixed-Signal and DSP Design Techniques, Digital Filters

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Moving Average Filters

CHAPTER 15: Moving Average Filters (from The Scientist and Engineer's Guide to Digital Signal Processing)

The moving average is the most common filter in DSP, mainly because it is the easiest digital filter to understand and use. In spite of its simplicity, the moving average filter is optimal for a common task: reducing random noise while retaining a sharp step response. This makes it the premier filter for time domain encoded signals. However, the moving average is the worst filter for frequency domain encoded signals, with little ability to separate one band of frequencies from another. Relatives of the moving average filter include the Gaussian, Blackman, and multiple-pass moving average. These have slightly better performance in the frequency domain, at the expense of increased computation time.

Implementation by Convolution

As the name implies, the moving average filter operates by averaging a number of points from the input signal to produce each point in the output signal. In equation form, this is written:

$$y[i] = \frac{1}{M} \sum_{j=0}^{M-1} x[i+j]$$

EQUATION 15-1. Equation of the moving average filter. In this equation, x[ ] is the input signal, y[ ] is the output signal, and M is the number of points used in the moving average. This equation only uses points on one side of the output sample being calculated.

Where x[ ] is the input signal, y[ ] is the output signal, and M is the number of points in the average. For example, in a 5 point moving average filter, point 80 in the output signal is given by:

$$y[80] = \frac{x[80] + x[81] + x[82] + x[83] + x[84]}{5}$$

As an alternative, the group of points from the input signal can be chosen symmetrically around the output point:

$$y[80] = \frac{x[78] + x[79] + x[80] + x[81] + x[82]}{5}$$

This corresponds to changing the summation in Eq. -
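The one-sided and symmetric averages described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not the book's implementation: the function name `moving_average`, the `symmetric` flag, the use of `None` for outputs that would need samples outside the input, and the ramp test signal are all assumptions made here.

```python
def moving_average(x, m, symmetric=False):
    """Return the M-point moving average of the list x.

    Outputs that would need samples outside x are left as None.
    symmetric=False averages x[i] ... x[i + m - 1] (one-sided form).
    symmetric=True averages m points centred on x[i]; m must be odd.
    """
    if m < 1 or m > len(x):
        raise ValueError("m must be between 1 and len(x)")
    if symmetric and m % 2 == 0:
        raise ValueError("the symmetric form needs an odd m")
    half = (m - 1) // 2 if symmetric else 0
    y = [None] * len(x)
    for i in range(half, len(x) - (m - 1) + half):
        start = i - half                      # first input sample in the window
        y[i] = sum(x[start:start + m]) / m    # plain average of m samples
    return y


ramp = [float(n) for n in range(100)]         # illustrative input: x[n] = n
one_sided = moving_average(ramp, 5)           # y[80] averages x[80]..x[84]
centred = moving_average(ramp, 5, symmetric=True)  # y[80] averages x[78]..x[82]
```

On a ramp the symmetric form returns the input value itself at each valid point, while the one-sided form is shifted by two samples. That shift is the practical difference between the two index choices.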

Discrete-Time Fourier Transform 7

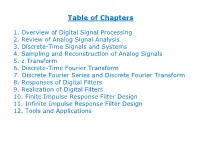

Table of Chapters: 1. Overview of Digital Signal Processing; 2. Review of Analog Signal Analysis; 3. Discrete-Time Signals and Systems; 4. Sampling and Reconstruction of Analog Signals; 5. z Transform; 6. Discrete-Time Fourier Transform; 7. Discrete Fourier Series and Discrete Fourier Transform; 8. Responses of Digital Filters; 9. Realization of Digital Filters; 10. Finite Impulse Response Filter Design; 11. Infinite Impulse Response Filter Design; 12. Tools and Applications.

Chapter 1: Overview of Digital Signal Processing (H. C. So)

Chapter Intended Learning Outcomes: (i) Understand basic terminology in digital signal processing; (ii) Differentiate digital signal processing and analog signal processing; (iii) Describe basic digital signal processing application areas.

Signal: anything that conveys information, e.g., speech, electrocardiogram (ECG), radar pulse, DNA sequence, stock price, code division multiple access (CDMA) signal, image, video.

[Fig. 1.1: Speech (vowel of "a", amplitude versus time in s)]

[Fig. 1.2: ECG (amplitude versus time in s)]

[Fig. 1.3: Transmitted & received radar waveforms (transmitted pulse and received pulse, amplitude versus time)]

The radar transceiver sends a 1-D sinusoidal pulse at time 0. It then receives the echo reflected by an object at some range. The reflected signal is noisy and has a time delay that corresponds to the round-trip propagation time of the radar pulse. Given the signal propagation speed, the time delay is simply related to the range by Eq. (1.1). As a result, the radar pulse contains the object range information. -
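The symbols and the body of Eq. (1.1) are missing from this excerpt. As a hedged sketch of the standard round-trip relation it describes, the pulse travels to the object and back, so the delay equals twice the range divided by the propagation speed. The function names and the default speed (the speed of light in vacuum) are assumptions made here, not taken from the notes.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, assumed propagation speed


def delay_from_range(range_m, speed=SPEED_OF_LIGHT):
    """Round-trip delay in seconds for an object at range_m metres."""
    return 2.0 * range_m / speed


def range_from_delay(delay_s, speed=SPEED_OF_LIGHT):
    """Object range in metres recovered from a measured round-trip delay."""
    return speed * delay_s / 2.0


# A one-microsecond echo delay corresponds to a range of roughly 150 m.
example_range = range_from_delay(1e-6)
```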

Designing Filters Using the Digital Filter Design Toolkit Rahman Jamal, Mike Cerna, John Hanks

National Instruments, Application Note 097: Designing Filters Using the Digital Filter Design Toolkit. Rahman Jamal, Mike Cerna, John Hanks.

Introduction

The importance of digital filters is well established. Digital filters, and more generally digital signal processing algorithms, are classified as discrete-time systems. They are commonly implemented on a general purpose computer or on a dedicated digital signal processing (DSP) chip. Due to their well-known advantages, digital filters are often replacing classical analog filters. In this application note, we introduce a new digital filter design and analysis tool implemented in LabVIEW with which developers can graphically design classical IIR and FIR filters, interactively review filter responses, and save filter coefficients. In addition, real-world filter testing can be performed within the digital filter design application using a plug-in data acquisition board.

Digital Filter Design Process

Digital filters are used in a wide variety of signal processing applications, such as spectrum analysis, digital image processing, and pattern recognition. Digital filters eliminate a number of problems associated with their classical analog counterparts and thus are preferably used in place of analog filters. Digital filters belong to the class of discrete-time LTI (linear time invariant) systems, which are characterized by the properties of causality, recursibility, and stability. They can be characterized in the time domain by their unit-impulse response, and in the transform domain by their transfer function. Obviously, the unit-impulse response sequence of a causal LTI system could be of either finite or infinite duration, and this property determines their classification into either finite impulse response (FIR) or infinite impulse response (IIR) systems. -

Finite Impulse Response (FIR) Digital Filters (II) Ideal Impulse Response Design Examples Yogananda Isukapalli

Finite Impulse Response (FIR) Digital Filters (II): Ideal Impulse Response Design Examples. Yogananda Isukapalli.

FIR Filter Design Problem

Given $H(z)$ or $H(e^{j\omega})$, find filter coefficients $\{b_0, b_1, b_2, \ldots, b_{N-1}\}$, which are equal to $\{h_0, h_1, h_2, \ldots, h_{N-1}\}$ in the case of FIR filters.

[Figure: tapped delay line with input x[n], unit delays z^{-1}, tap weights h_0, h_1, h_2, h_3, ..., h_{N-2}, h_{N-1}, and output y[n]]

Consider a general (infinite impulse response) definition:

$$H(z) = \sum_{n=-\infty}^{\infty} h[n]\, z^{-n}$$

From complex variable theory, the inverse transform is:

$$h[n] = \frac{1}{2\pi j} \oint_C H(z)\, z^{n-1}\, dz$$

where C is a counterclockwise closed contour in the region of convergence of $H(z)$ and encircling the origin of the z-plane.

Evaluating $H(z)$ on the unit circle ($z = e^{j\omega}$):

$$H(e^{j\omega}) = \sum_{n=-\infty}^{\infty} h[n]\, e^{-jn\omega}$$

$$h[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega, \quad \text{where } dz = j e^{j\omega}\, d\omega$$

Design of an ideal low pass FIR digital filter

[Figure: ideal low-pass response H(e^{jω}) of height K between -ω_c and ω_c, plotted against ω from -2π to 2π]

Find the ideal low pass impulse response $\{h[n]\}$:

$$h_{LP}[n] = \frac{1}{2\pi} \int_{-\pi}^{\pi} H(e^{j\omega})\, e^{jn\omega}\, d\omega = \frac{1}{2\pi} \int_{-\omega_c}^{\omega_c} K e^{jn\omega}\, d\omega$$

Hence

$$h_{LP}[n] = \frac{K}{n\pi} \sin(n\omega_c), \quad n = 0, \pm 1, \pm 2, \ldots, \pm\infty$$

Let $K = 1$, $\omega_c = \pi/4$, $n = 0, \pm 1, \ldots, \pm 10$. The impulse response coefficients are: h[0] = 0.25, h[±1] = 0.225, h[±2] = 0.159, h[±3] = 0.075, h[±4] = 0, h[±5] = -0.045, h[±6] = -0.053, h[±7] = -0.032, h[±8] = 0, h[±9] = 0.025, h[±10] = 0.032.

Non Causal FIR Impulse Response

We can make it causal if we shift $h_{LP}[n]$ by 10 units to the right:

$$h_{LP}[n] = \frac{K}{(n-10)\pi} \sin((n-10)\omega_c), \quad n = 0, 1, 2, \ldots$$ -
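The ideal low-pass coefficients and the 10-sample shift described in this excerpt can be evaluated directly. The sketch below assumes K = 1 and a cutoff of π/4 as in the slides, and takes the n = 0 value as the limit K·ω_c/π, because the closed form is 0/0 there. The function names `ideal_lowpass` and `causal_taps` are hypothetical.

```python
import math


def ideal_lowpass(n, cutoff, gain=1.0):
    """Sample n of the ideal low-pass impulse response (cutoff in rad/sample)."""
    if n == 0:
        return gain * cutoff / math.pi        # limit of gain*sin(n*wc)/(n*pi)
    return gain * math.sin(n * cutoff) / (n * math.pi)


def causal_taps(cutoff, half_length, gain=1.0):
    """Truncate to n = -half_length..half_length, then shift right to make it causal."""
    return [ideal_lowpass(n - half_length, cutoff, gain)
            for n in range(2 * half_length + 1)]


taps = causal_taps(math.pi / 4, 10)           # 21 taps, centre tap at index 10
for index, value in enumerate(taps):
    print(f"h[{index:2d}] = {value: .3f}")
```

The truncated response is symmetric about the centre tap, so the shifted filter has linear phase with a delay of 10 samples.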

Signals and Systems Lecture 8: Finite Impulse Response Filters

Signals and Systems Lecture 8: Finite Impulse Response Filters. Dr. Guillaume Ducard, Fall 2018. Based on materials from Prof. Dr. Raffaello D'Andrea, Institute for Dynamic Systems and Control, ETH Zurich, Switzerland.

Outline: 1. Finite Impulse Response Filters (Definition; General Properties). 2. Moving Average (MA) Filter (MA filter as a simple low-pass filter; Fast MA filter implementation; Weighted Moving Average Filter). 3. Non-Causal Moving Average Filter (Non-Causal Moving Average Filter; Non-Causal Weighted Moving Average Filter). 4. Important Considerations (Phase is Important; Differentiation using FIR Filters; Frequency-domain observations; Higher derivatives).

FIR filters: definition

The class of causal, LTI finite impulse response (FIR) filters can be captured by the difference equation

$$y[n] = \sum_{k=0}^{M-1} b_k\, u[n-k],$$

where (1) $M$ is the number of filter coefficients (also known as filter length), (2) $M - 1$ is often referred to as the filter order, and (3) $b_k \in \mathbb{R}$ are the filter coefficients that describe the dependence on current and previous inputs. -
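The FIR difference equation above translates almost literally into code. This is a minimal sketch that assumes the input is zero before n = 0. The function name `fir_filter` and the three coefficient values are illustrative only and do not come from the lecture.

```python
def fir_filter(b, u):
    """Causal FIR filter: y[n] = sum over k of b[k] * u[n - k], with u[n] = 0 for n < 0."""
    y = []
    for n in range(len(u)):
        # Only the current and previous inputs contribute to y[n].
        y.append(sum(b[k] * u[n - k] for k in range(len(b)) if n - k >= 0))
    return y


b = [0.5, 0.3, 0.2]                   # hypothetical coefficients: length M = 3, order M - 1 = 2
impulse = [1.0, 0.0, 0.0, 0.0, 0.0]
impulse_response = fir_filter(b, impulse)
```

Feeding in a unit impulse returns the coefficients themselves followed by zeros, which is why the impulse response of such a filter has exactly M nonzero-capable samples.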

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation

Computers, Materials & Continua, Tech Science Press. DOI: 10.32604/cmc.2021.014752. Article.

Windowing Techniques, the Welch Method for Improvement of Power Spectrum Estimation. Dah-Jing Jwo (1, *), Wei-Yeh Chang (1) and I-Hua Wu (2). (1) Department of Communications, Navigation and Control Engineering, National Taiwan Ocean University, Keelung, 202-24, Taiwan. (2) Innovative Navigation Technology Ltd., Kaohsiung, 801, Taiwan. * Corresponding Author: Dah-Jing Jwo. Email: [email protected]. Received: 01 October 2020; Accepted: 08 November 2020.

Abstract: This paper revisits the characteristics of windowing techniques with various window functions involved, and successively investigates spectral leakage mitigation utilizing the Welch method. The discrete Fourier transform (DFT) is ubiquitous in digital signal processing (DSP) for the spectrum analysis and can be efficiently realized by the fast Fourier transform (FFT). The sampling signal will result in distortion and thus may cause unpredictable spectral leakage in discrete spectrum when the DFT is employed. Windowing is implemented by multiplying the input signal with a window function and windowing amplitude modulates the input signal so that the spectral leakage is evened out. Therefore, windowing processing reduces the amplitude of the samples at the beginning and end of the window. In addition to selecting appropriate window functions, a pretreatment method, such as the Welch method, is effective to mitigate the spectral leakage. Due to the noise caused by imperfect, finite data, the noise reduction from Welch's method is a desired treatment. The nonparametric Welch method is an improvement on the periodogram spectrum estimation method where the signal-to-noise ratio (SNR) is high and mitigates noise in the estimated power spectra in exchange for frequency resolution reduction. -
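The abstract's two ingredients, multiplying each block by a window and averaging periodograms of overlapping blocks, can be sketched as follows. This is an illustrative outline only, not the paper's procedure: the Hann window, the 50% overlap, the normalization by the window energy, and the plain O(N²) DFT are all assumptions made here to keep the example free of dependencies.

```python
import cmath
import math


def hann(length):
    """Symmetric Hann window (length >= 2); it tapers both ends of a block to zero."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (length - 1))
            for i in range(length)]


def periodogram(block, window):
    """Windowed periodogram of one block for bins 0 .. len(block) // 2."""
    n = len(block)
    tapered = [s * w for s, w in zip(block, window)]
    energy = sum(w * w for w in window)       # compensates for the window's power loss
    return [abs(sum(tapered[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))) ** 2 / energy
            for k in range(n // 2 + 1)]


def welch(x, block_length, overlap=0.5):
    """Average the windowed periodograms of overlapping blocks of x."""
    step = max(1, int(block_length * (1 - overlap)))
    window = hann(block_length)
    blocks = [x[s:s + block_length]
              for s in range(0, len(x) - block_length + 1, step)]
    if not blocks:
        raise ValueError("x is shorter than one block")
    estimate = [0.0] * (block_length // 2 + 1)
    for block in blocks:
        for k, power in enumerate(periodogram(block, window)):
            estimate[k] += power / len(blocks)
    return estimate


# Test tone placed on bin 8 of a 64-sample block (illustrative signal).
tone = [math.sin(2 * math.pi * 8 * t / 64) for t in range(256)]
spectrum = welch(tone, 64)
```

Shorter blocks give more periodograms to average, which lowers the variance of the estimate, but each block then resolves fewer frequency bins. This is the resolution trade-off the abstract mentions.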

Exploring Anti-Aliasing Filters in Signal Conditioners for Mixed-Signal, Multimodal Sensor Conditioning by Arun T

Texas Instruments Incorporated. Amplifiers: Op Amps.

Exploring anti-aliasing filters in signal conditioners for mixed-signal, multimodal sensor conditioning. By Arun T. Vemuri, Systems Architect, Enhanced Industrial.

Introduction

Some sensor-signal conditioners are used to process the output of multiple sense elements. This processing is often provided by multimodal, mixed-signal conditioners that can handle the outputs from several sense elements at the same time. This article analyzes the operation of anti-aliasing filters in such sensor-signal conditioners.

[Figure 1. Multimodal, mixed-signal sensor-signal conditioner. Blocks: Sense Element 1, Amplifier 1, ADC 1, Digital Filter 1; Sense Element 2, Amplifier 2, ADC 2, Digital Filter 2; Intelligent Compensation; Processed Output. Regions: Analog Domain, Digital Domain.]

Basics of sensor-signal conditioners

Sense elements, or transducers, convert a physical quantity of interest into electrical signals. Examples include piezoresistive bridges used to measure pressure, piezoelectric transducers used to detect ultrasonic waves, and electrochemical cells used to measure gas concentrations. The electrical signals produced by sense elements are small and exhibit nonidealities, such as temperature drifts and nonlinear transfer functions. Sensor analog front ends such as the Texas Instruments (TI) LMP91000 and sensor-signal conditioners such as TI's PGA400/450 are used to amplify the small signals produced … than one sense element is processed by the same signal conditioner is called multimodal signal conditioning.

Mixed-signal signal conditioning

Another aspect of sensor-signal conditioning is the electrical domain in which the signal conditioning occurs. TI's PGA309 is an example of a device where the signal conditioning of resistive-bridge sense elements occurs in the analog domain. -

Transformations for FIR and IIR Filters' Design

Symmetry. Article.

Transformations for FIR and IIR Filters' Design. V. N. Stavrou (1, *), I. G. Tsoulos (2) and Nikos E. Mastorakis (1, 3). (1) Hellenic Naval Academy, Department of Computer Science, Military Institutions of University Education, 18539 Piraeus, Greece. (2) Department of Informatics and Telecommunications, University of Ioannina, 47150 Kostaki Artas, Greece; [email protected]. (3) Department of Industrial Engineering, Technical University of Sofia, Bulevard Sveti Kliment Ohridski 8, 1000 Sofia, Bulgaria; mastor@tu-sofia.bg. * Correspondence: [email protected].

Abstract: In this paper, the transfer functions related to one-dimensional (1-D) and two-dimensional (2-D) filters have been theoretically and numerically investigated. The finite impulse response (FIR), as well as the infinite impulse response (IIR) are the main 2-D filters which have been investigated. More specifically, methods like the Windows method, the bilinear transformation method, the design of 2-D filters from appropriate 1-D functions and the design of 2-D filters using optimization techniques have been presented.

Keywords: FIR filters; IIR filters; recursive filters; non-recursive filters; digital filters; constrained optimization; transfer functions.

Citation: Stavrou, V.N.; Tsoulos, I.G.; Mastorakis, N.E. Transformations for FIR and IIR Filters' Design. Symmetry 2021, 13, 533. https://doi.org/10.3390/sym13040533

1. Introduction

There are two types of digital filters: the Finite Impulse Response (FIR) filters or Non-Recursive filters and the Infinite Impulse Response (IIR) filters or Recursive filters [1–5]. In the non-recursive filter structures the output depends only on the input, and in the recursive filter structures the output depends both on the input and on the previous outputs. -

ELEG 5173L Digital Signal Processing Ch. 5 Digital Filters

Department of Electrical Engineering, University of Arkansas. ELEG 5173L Digital Signal Processing, Ch. 5 Digital Filters. Dr. Jingxian Wu, [email protected].

OUTLINE: FIR and IIR Filters; Filter Structures; Analog Filters; FIR Filter Design; IIR Filter Design.

FIR vs. IIR

LTI discrete-time system. Difference equation in the time domain:

$$y(n) + \sum_{k=1}^{N} a_k\, y(n-k) = \sum_{k=0}^{M} b_k\, x(n-k)$$

Transfer function in the z-domain:

$$Y(z) + \sum_{k=1}^{N} a_k\, Y(z)\, z^{-k} = \sum_{k=0}^{M} b_k\, X(z)\, z^{-k}$$

$$H(z) = \frac{Y(z)}{X(z)} = \frac{\sum_{k=0}^{M} b_k z^{-k}}{1 + \sum_{k=1}^{N} a_k z^{-k}}$$

Finite impulse response (FIR). Difference equation in the time domain:

$$y(n) = \sum_{k=0}^{M} b_k\, x(n-k)$$

Transfer function in the z-domain:

$$H(z) = \frac{Y(z)}{X(z)} = \sum_{k=0}^{M} b_k z^{-k}$$

Impulse response: $h(n) = [b_0, b_1, \ldots, b_M]$. The impulse response is of finite length, hence the name finite impulse response.

Infinite impulse response (IIR). Difference equation in the time domain:

$$y(n) + \sum_{k=1}^{N} a_k\, y(n-k) = \sum_{k=0}^{M} b_k\, x(n-k)$$

Transfer function in the z-domain:

$$H(z) = \frac{Y(z)}{X(z)} = \frac{\sum_{k=0}^{M} b_k z^{-k}}{1 + \sum_{k=1}^{N} a_k z^{-k}}$$

The impulse response can be obtained through the inverse z-transform, and it has infinite length.

Example: Find the impulse response of the following system. Is it an FIR or IIR filter? Is it stable?

$$y(n) - \frac{1}{4}\, y(n-2) = x(n)$$ -
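The finite versus infinite distinction is easy to see by running a difference equation on a unit impulse. The sketch below assumes the convention in which the feedback terms sit on the left-hand side, so they are subtracted when solving for y(n). That sign convention, the function name, and both coefficient sets are assumptions for illustration rather than values confirmed by the slides.

```python
def difference_equation(b, a, x):
    """Solve y(n) + sum_k a[k-1]*y(n-k) = sum_k b[k]*x(n-k) with zero initial state.

    b holds b_0..b_M; a holds a_1..a_N (a_0 = 1 is implied). An empty a gives an FIR filter.
    """
    y = []
    for n in range(len(x)):
        feedforward = sum(b[k] * x[n - k] for k in range(len(b)) if n - k >= 0)
        feedback = sum(a[k - 1] * y[n - k]
                       for k in range(1, len(a) + 1) if n - k >= 0)
        y.append(feedforward - feedback)
    return y


impulse = [1.0] + [0.0] * 9

# Non-recursive (FIR): the response is the coefficient list, then exactly zero.
fir_response = difference_equation([1.0, 0.5, 0.25], [], impulse)

# Recursive (IIR), hypothetical coefficients a_1 = 0, a_2 = -0.25:
# every second sample is a quarter of the one two steps earlier, never exactly zero.
iir_response = difference_equation([1.0], [0.0, -0.25], impulse)
```

With these illustrative coefficients the recursive response decays geometrically, so its samples are absolutely summable and the system is stable even though the response never terminates.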

Digital Signal Processing Filter Design

2065-27. Advanced Training Course on FPGA Design and VHDL for Hardware Simulation and Synthesis, 26 October - 20 November, 2009. Digital Signal Processing: Filter Design. Massimiliano Nolich, DEEI, Facolta' di Ingegneria, Universita' degli Studi di Trieste, via Valerio, 10, 34127 Trieste, Italy.

Filter design

1 Design considerations: a framework

[Figure: tolerance scheme for |H(f)| versus f, showing passband limits 1 + δp and 1 − δp, stopband limit δs, band edges fp and fs, and the regions Passband, Transition band, Stopband]

The design of a digital filter involves five steps:

Specification: The characteristics of the filter often have to be specified in the frequency domain. For example, for frequency selective filters (lowpass, highpass, bandpass, etc.) the specification usually involves tolerance limits as shown above.

Coefficient calculation: Approximation methods have to be used to calculate the values h[k] for a FIR implementation, or a_k, b_k for an IIR implementation. Equivalently, this involves finding a filter which has H(z) satisfying the requirements.

Realisation: This involves converting H(z) into a suitable filter structure. Block or flow diagrams are often used to depict filter structures, and show the computational procedure for implementing the digital filter.

Analysis of finite wordlength effects: In practice one should check that the quantisation used in the implementation does not degrade the performance of the filter to a point where it is unusable.

Implementation: The filter is implemented in software or hardware. The criteria for selecting the implementation method involve issues such as real-time performance, complexity, processing requirements, and availability of equipment.

2 Finite impulse response (FIR) filter design

A FIR filter is characterised by the equations

$$y[n] = \sum_{k=0}^{N-1} h[k]\, x[n-k]$$

$$H(z) = \sum_{k=0}^{N-1} h[k]\, z^{-k}.$$

The following are useful properties of FIR filters: They are always stable: the system function contains no poles. -
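The specification step can be made concrete by evaluating a candidate FIR filter on the unit circle and comparing it with the tolerance limits. The following is a rough sketch under stated assumptions: frequency is normalized to cycles per sample, the check samples a finite grid rather than proving the bound everywhere, and the 4-tap averaging filter and all tolerance values are hypothetical.

```python
import cmath
import math


def magnitude_response(h, f):
    """|H| of an FIR filter at normalized frequency f (cycles per sample, 0 to 0.5)."""
    return abs(sum(hk * cmath.exp(-2j * math.pi * f * k)
                   for k, hk in enumerate(h)))


def meets_spec(h, f_pass, f_stop, delta_p, delta_s, points=200):
    """Check the passband ripple and stopband limit on a grid of frequencies."""
    for i in range(points + 1):
        f = f_pass * i / points                       # 0 .. f_pass
        if abs(magnitude_response(h, f) - 1.0) > delta_p:
            return False
    for i in range(points + 1):
        f = f_stop + (0.5 - f_stop) * i / points      # f_stop .. 0.5
        if magnitude_response(h, f) > delta_s:
            return False
    return True


h = [0.25] * 4                                        # hypothetical 4-tap averager
loose = meets_spec(h, f_pass=0.02, f_stop=0.2, delta_p=0.02, delta_s=0.3)
tight = meets_spec(h, f_pass=0.02, f_stop=0.2, delta_p=0.02, delta_s=0.1)
```

A failed check sends the designer back to the coefficient calculation step, for example with a longer filter or a different approximation method.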

Lecture 2 - Digital Filters

Lecture 2 - Digital Filters

2.1 Digital filtering

Digital filtering can be implemented either in hardware or software; in the first case, the numerical processor is either a special-purpose chip or it is assembled out of a set of digital integrated circuits which provide the essential building blocks of a digital filtering operation: storage, delay, addition/subtraction and multiplication by constants. On the other hand, a general-purpose mini- or micro-computer can also be programmed as a digital filter, in which case the numerical processor is the computer's CPU, GPU and memory. Filtering may be applied to signals which are then transformed back to the analogue domain using a digital to analogue convertor or worked with entirely in the digital domain.

2.1.1 Reasons for using a digital rather than an analogue filter

• The numerical processor can easily be (re-)programmed to implement a number of different filters.

• The accuracy of a digital filter is dependent only on the round-off error in the arithmetic. This has two advantages: (a) the accuracy is predictable and hence the performance of the digital signal processing algorithm is known a priori; (b) the round-off error can be minimized with appropriate design techniques and hence digital filters can meet very tight specifications on magnitude and phase characteristics (which would be almost impossible to achieve with analogue filters because of component tolerances and circuit noise).

• The widespread use of mini- and micro-computers in engineering has greatly increased the number of digital signals recorded and processed. Power supply and temperature variations have no effect on a programme stored in a computer. -
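The claim that round-off error is predictable can be illustrated with coefficient quantization. The sketch assumes round-to-nearest onto a fixed-point grid with a chosen number of fractional bits and ignores overflow. The helper names and the coefficient values are hypothetical and are not part of the lecture.

```python
def quantize(value, frac_bits):
    """Round value to the nearest multiple of 2**-frac_bits (overflow is ignored)."""
    scale = 1 << frac_bits
    return round(value * scale) / scale


def worst_case_error(frac_bits):
    """Round-to-nearest never moves a value by more than half of one step."""
    return 2.0 ** -(frac_bits + 1)


coefficients = [0.25, 0.225, 0.159, 0.075]     # hypothetical filter coefficients
bits = 8
errors = [abs(quantize(c, bits) - c) for c in coefficients]
bound = worst_case_error(bits)                 # known before any coefficient is seen
```

Because the bound depends only on the word length, the designer can budget the error in advance. Analogue component tolerances offer no comparable guarantee.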

Digital Signal Processing Tom Davinson

First SPES School on Experimental Techniques with Radioactive Beams, INFN LNS Catania, November 2011. Experimental Challenges, Lecture 4: Digital Signal Processing. Tom Davinson, School of Physics & Astronomy.

Objectives & Outline

Practical introduction to DSP concepts and techniques. Emphasis on nuclear physics applications. I intend to keep it simple … even if it's not … I don't intend to teach you VHDL!

• Sampling Theorem
• Aliasing
• Filtering? Shaping? What's the difference? … and why do we do it?
• Digital signal processing
• Digital filters: semi-gaussian, moving window deconvolution
• Hardware
• To DSP or not to DSP?
• Summary
• Further reading

Sampling

Periodic measurement of analogue input signal by ADC.

Sampling Theorem

An analogue input signal limited to a bandwidth $f_{BW}$ can be reproduced from its samples with no loss of information if it is regularly sampled at a frequency $f_s \geq 2 f_{BW}$. The sampling frequency $f_s = 2 f_{BW}$ is called the Nyquist frequency (rate). Note: in practice the sampling frequency is usually more than 5x the signal bandwidth.

Aliasing: the problem

Continuous, sinusoidal signal of frequency $f$ sampled at frequency $f_s$ ($f_s < f$). Aliasing misrepresents the frequency as a lower frequency $f < 0.5 f_s$.

Aliasing: the solution

Use a low-pass filter to restrict the bandwidth of the input signal to satisfy the Nyquist criterion $f_s \geq 2 f_{BW}$.

Digital Signal Processing … what next?

Digital signal processing is the software controlled processing of sequential data derived from a digitised analogue signal. Some of the advantages of digital signal processing are:

• functionality: possible to implement functions which are difficult, impractical or impossible to achieve using hardware, e.g.
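The aliasing statement can be checked numerically. For a pure sinusoid, the apparent frequency after sampling is the distance from the true frequency to the nearest integer multiple of the sampling frequency. The sketch below applies that standard folding rule; the function names and the example frequencies are assumptions made for illustration.

```python
def satisfies_nyquist(sampling_frequency, bandwidth):
    """True when the sampling frequency is at least twice the signal bandwidth."""
    return sampling_frequency >= 2.0 * bandwidth


def alias_frequency(signal_frequency, sampling_frequency):
    """Apparent frequency of a sampled sinusoid; always at most half the sampling rate."""
    nearest_multiple = round(signal_frequency / sampling_frequency)
    return abs(signal_frequency - nearest_multiple * sampling_frequency)


# A 130 Hz tone sampled at 100 Hz is indistinguishable from a 30 Hz tone.
apparent = alias_frequency(130.0, 100.0)
```

A tone already below half the sampling rate is returned unchanged, which is why an anti-aliasing low-pass filter placed before the ADC is sufficient to prevent the misrepresentation.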