Web Spam, Propaganda and Trust

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Full Circle Magazine #33

full circle, Issue #33, January 2010. CREATE A MEDIA CENTER WITH UBUNTU, AN ACER REVO & BOXEE. Contents: Ubuntu Women p.28; Program In Python - Pt7 p.08; Ubuntu Games p.31; My Story p.19; MOTU Interview p.24; Ubuntu, Revo & Boxee p.13; Command & Conquer p.05; The Perfect Server - Pt3 p.15; Review - Exaile p.23; Letters p.26; Top 5 - Sync. Clients p.35. Read how Ubuntu is used in public education, and why one man made the switch to Linux. The articles contained in this magazine are released under the Creative Commons Attribution-Share Alike 3.0 Unported license. This means you can adapt, copy, distribute and transmit the articles but only under the following conditions: You must attribute the work to the original author in some way (at least a name, email or URL) and to this magazine by name ('full circle magazine') and the URL www.fullcirclemagazine.org (but not attribute the article(s) in any way that suggests that they endorse you or your use of the work). If you alter, transform, or build upon this work, you must distribute the resulting work under the same, similar or a compatible license. EDITORIAL Welcome to another issue of Full Circle magazine. Last month, Andrew gave us his Top 5 Media Center applications. This month I've written a How-To on using Ubuntu on an Acer Aspire Revo to create the foundation for Boxee. For under £150 I've created a fantastic media center which not only looks great, it's fully customizable! That's my media center story, but don't forget to read the My Story articles which this month focus on Ubuntu, Linux and open-source in public education, as well as how one man went from using old (modern at the time) computers, to using Ubuntu.

Linux Shell Scripting with Bash

Linux Shell Scripting with Bash. Ken O. Burtch. DEVELOPER’S LIBRARY. Sams Publishing, 800 East 96th Street, Indianapolis, Indiana 46240. Copyright © 2004 by Sams Publishing. All rights reserved. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the publisher. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein. International Standard Book Number: 0-672-32642-6. Library of Congress Catalog Card Number: 2003112582. Printed in the United States of America. First Printing: February 2004. 07 06 05 04 4 3 2 1. Trademarks: All terms mentioned in this book that are known to be trademarks or service marks have been appropriately capitalized. Credits: Acquisitions Editor, Scott Meyers; Managing Editor, Charlotte Clapp; Project Editor, Elizabeth Finney; Copy Editor, Kezia Endsley; Indexer, Ken Johnson; Proofreader, Leslie Joseph; Technical Editor, John Traenkenschuh; Publishing Coordinator, Vanessa Evans; Multimedia Developer

Stanley Labounty Takes Big Steps to Lower Their Carbon Footprint

February 2020, www.clpower.com. In this issue: Stanley LaBounty taking big steps, pg 1; What is all that gear for?, pg 3; District #1 nominating meeting, pg 5; Board minutes summary, pg 6. Stanley LaBounty Takes Big Steps to Lower Their Carbon Footprint. Carey Hogenson, Marketing Manager. This past September, Stanley LaBounty of Two Harbors contacted Cooperative Light & Power (CLP) looking for ways to reduce the company’s carbon footprint. CLP teamed up with its wholesale power provider, Great River Energy (GRE), to see what options were available to help Stanley LaBounty accomplish its goal. CLP and GRE did a walkthrough of the Stanley LaBounty plant and discussed a few possible changes where CLP and GRE could assist. One of the options was a new program that allows CLP members with a qualifying fleet of forklifts to try a new electric forklift for a month-long trial period. Multiple studies have shown that using electric forklifts is a great way to reduce fuel and maintenance costs when compared to internal combustion (IC) forklifts. ... current forklift vendor and covered the rental fees for a month-long rental of an electric forklift. During that time, the electric forklift usage, electric consumption, and other useful information was tracked using a data logger. At the end of the month, the data was collected and compared to the company’s cost of its propane forklifts. Stanley LaBounty was able to show it would achieve significant long-term savings by replacing its existing IC forklifts with electric lifts and optimizing the number of forklifts in the fleet to better match the workload of the lifts. Additionally, Stanley LaBounty was able to capture a substantial CO2 reduction from converting two of its IC lifts to electric as part of the first

The Global Times

THE GLOBAL TIMES, MONDAY, JUNE 28, 2021, www.theglobaltimes.in. INSIDE: A carrot affair, P4; History this week, P7. It’s outdated! The Rise And Fall Of The Technological Elements We Used To Swear By. Aanya Narula, AIS Vas 1, X A. The innovative prowess of mankind has brought about ground-breaking strides, with these in turn becoming a catalyst in the technologically-driven world we inhabit today. To be precise, the bygone decade itself saw the rise of many technological services and applications that gave rise to amazing advancements, changing the course of what we do and how we do it. However, with such accelerating technological advancements, come the accelerated rate ... 95 per cent of the browser market, for about 2 decades. The fall: Recently, Microsoft announced that it would cut off its support for Internet Explorer 11 across all of its applications starting August 2021. Following the massive user base and popularity that the web browser once enjoyed, it eventually became slow and filled with ... broadly questionable name in the internet landscape, Internet Explorer was our good old friend. And today as we advance into the virtual realm, we pull the exit doors on the one that helped us foray into the vast world of web, in the first place. ... blazing for its times, it instantly became a fitting computer application, attracting a plethora of people who were still exploring the web as a new medium for creativity. Poll: Do you think Google’s cloud partnership with Reliance Jio will boost India’s 5G plans? a) Yes b) No

Web Design for Developers

What Readers Are Saying About Web Design for Developers This is the book I wish I had had when I started to build my first website. It covers web development from A to Z and will answer many of your questions while improving the quality of the sites you produce. Shae Murphy CTO, Social Brokerage As a web developer, I thought I knew HTML and CSS. This book helped me understand that even though I may know the basics, there’s more to web design than changing font colors and adding margins. Mike Weber Web application developer If you’re ready to step into the wonderful world of web design, this book explains the key concepts clearly and effectively. The comfortable, fun writing style makes this book as enjoyable as it is enlightening. Jeff Cohen Founder, Purple Workshops This book has something for everyone, from novice to experienced designers. As a developer, I found it extremely helpful for my day-to-day work, causing me to think before just putting content on a page. Chris Johnson Solutions developer From conception to launch, Mr. Hogan offers a complete experience and expertly navigates his audience through every phase of development. Anyone from beginners to seasoned veterans will gain valuable insight from this polished work that is much more than a technical guide. Neal Rudnik Web and multimedia production manager, Aspect This book arms application developers with the knowledge to help blur the line that some companies place between a design team and a development team. After all, just because someone is a “coder” doesn’t mean he or she can’t create an attractive and usable site.

Event Based Propagation Approach to Constraint Configuration Problems

San Jose State University SJSU ScholarWorks Master's Theses Master's Theses and Graduate Research 2009 Event based propagation approach to constraint configuration problems Rajhdeep Jandir San Jose State University Follow this and additional works at: https://scholarworks.sjsu.edu/etd_theses Recommended Citation Jandir, Rajhdeep, "Event based propagation approach to constraint configuration problems" (2009). Master's Theses. 3659. DOI: https://doi.org/10.31979/etd.g49s-e6we https://scholarworks.sjsu.edu/etd_theses/3659 This Thesis is brought to you for free and open access by the Master's Theses and Graduate Research at SJSU ScholarWorks. It has been accepted for inclusion in Master's Theses by an authorized administrator of SJSU ScholarWorks. For more information, please contact [email protected]. EVENT BASED PROPAGATION APPROACH TO CONSTRAINT CONFIGURATION PROBLEMS A Thesis Presented to The Faculty of the Department of Computer Science San Jose State University In Partial Fulfillment of the Requirements for the Degree Master of Science by Rajhdeep Jandir May 2009 UMI Number: 1470965 INFORMATION TO USERS The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed-through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. UMI Microform 1470965 Copyright 2009 by ProQuest LLC All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code.

Personal Knowledge Models with Semantic Technologies

Max Völkel. Personal Knowledge Models with Semantic Technologies. Bibliographic information: Detailed bibliographic data are available on the Internet at http://pkm.xam.de. Cover design: Stefanie Miller. Production and publisher: Books on Demand GmbH, Norderstedt. © 2010 Max Völkel, Ritterstr. 6, 76133 Karlsruhe. This work is licensed under the Creative Commons Attribution-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Dissertation approved by the Faculty of Economics of the Karlsruhe Institute of Technology (KIT) for the academic degree of Doctor of Economics (Dr. rer. pol.), by Dipl.-Inform. Max Völkel. Date of oral examination: 14 July 2010. Referee: Prof. Dr. Rudi Studer. Co-referee: Prof. Dr. Klaus Tochtermann. Examiner: Prof. Dr. Gerhard Satzger. Chair of the examination committee: Prof. Dr. Christine Harbring. Abstract Following the ideas of Vannevar Bush (1945) and Douglas Engelbart (1963), this thesis explores how computers can help humans to be more intelligent. More precisely, the idea is to reduce limitations of cognitive processes with the help of knowledge cues, which are external reminders about previously experienced internal knowledge. A knowledge cue is any kind of symbol, pattern or artefact, created with the intent to be used by its creator, to re-evoke a previously experienced mental state, when used. The main processes in creating, managing and using knowledge cues are analysed. Based on the resulting knowledge cue life-cycle, an economic analysis of costs and benefits in Personal Knowledge Management (PKM) processes is performed.

Adult Autologous Transplant Manual

Table of Contents: IMPORTANT PHONE NUMBERS, 5; CHAPTER 1 – WELCOME, 7; STEPS OF AUTOLOGOUS TRANSPLANT, 9; PREPARATION, 10; CONDITIONING, 13; TRANSPLANT, 18; BEFORE ENGRAFTMENT, 19; AFTER ENGRAFTMENT

Upgrade Issues

Upgrade issues Graph of new conflicts libsiloh5-0 libhdf5-lam-1.8.4 (x 3) xul-ext-dispmua (x 2) liboss4-salsa-asound2 (x 2) why sysklogd console-cyrillic (x 9) libxqilla-dev libxerces-c2-dev iceape xul-ext-adblock-plus gnat-4.4 pcscada-dbg Explanations of conflicts pcscada-dbg libpcscada2-dev gnat-4.6 gnat-4.4 Similar to gnat-4.4: libpolyorb1-dev libapq-postgresql1-dev adacontrol libxmlada3.2-dev libapq1-dev libaws-bin libtexttools2-dev libpolyorb-dbg libnarval1-dev libgnat-4.4-dbg libapq-dbg libncursesada1-dev libtemplates-parser11.5-dev asis-programs libgnadeodbc1-dev libalog-base-dbg liblog4ada1-dev libgnomeada2.14.2-dbg libgnomeada2.14.2-dev adabrowse libgnadecommon1-dev libgnatvsn4.4-dbg libgnatvsn4.4-dev libflorist2009-dev libopentoken2-dev libgnadesqlite3-1-dev libnarval-dbg libalog1-full-dev adacgi0 libalog0.3-base libasis2008-dbg libxmlezout1-dev libasis2008-dev libgnatvsn-dev libalog0.3-full libaws2.7-dev libgmpada2-dev libgtkada2.14.2-dbg libgtkada2.14.2-dev libasis2008 ghdl libgnatprj-dev gnat libgnatprj4.4-dbg libgnatprj4.4-dev libaunit1-dev libadasockets3-dev libalog1-base-dev libapq-postgresql-dbg libalog-full-dbg Weight: 5 Problematic packages: pcscada-dbg hostapd initscripts sysklogd Weight: 993 Problematic packages: hostapd | initscripts initscripts sysklogd Similar to initscripts: conglomerate libnet-akamai-perl erlang-base screenlets xlbiff plasma-widget-yawp-dbg fso-config-general gforge-mta-courier libnet-jifty-perl bind9 libplack-middleware-session-perl libmail-listdetector-perl masqmail libcomedi0 taxbird ukopp

This Is the Original Ubuntuguide. You Are Free to Copy This Guide but Not to Sell It Or Any Derivative of It. Copyright Of

* This is the original Ubuntuguide. You are free to copy this guide but not to sell it or any derivative of it. Copyright of the names Ubuntuguide and Ubuntu Guide reside solely with this site. This guide is neither sold nor distributed in any other medium. Beware of copies that are for sale or are similarly named; they are neither endorsed nor sanctioned by this guide. Ubuntuguide is not associated with Canonical Ltd nor with any commercial enterprise.
* Ubuntu allows a user to accomplish tasks from either a menu-driven Graphical User Interface (GUI) or from a text-based command-line interface (CLI). In Ubuntu, the command-line-interface terminal is called Terminal, which is started: Applications -> Accessories -> Terminal. Text inside the grey dotted box like this should be put into the command-line Terminal.
* Many changes to the operating system can only be done by a User with Administrative privileges. 'sudo' elevates a User's privileges to the Administrator level temporarily (i.e. when installing programs or making changes to the system). Example: sudo bash
* 'gksudo' should be used instead of 'sudo' when opening a Graphical Application through the "Run Command" dialog box. Example: gksudo gedit /etc/apt/sources.list
* The "man" command can be used to find the help manual for a command. For example, "man sudo" will display the manual page for the "sudo" command: man sudo
* While "apt-get" and "aptitude" are fast ways of installing programs/packages, you can also use the Synaptic Package Manager, a GUI method for installing programs/packages. Most (but not all) programs/packages available with apt-get install will also be available from the Synaptic Package Manager.

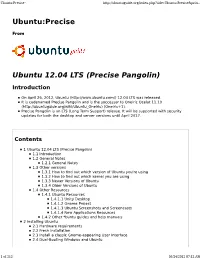

Ubuntu:Precise Ubuntu 12.04 LTS (Precise Pangolin)

Ubuntu:Precise - http://ubuntuguide.org/index.php?title=Ubuntu:Precise&prin... Ubuntu:Precise From Ubuntu 12.04 LTS (Precise Pangolin) Introduction On April 26, 2012, Ubuntu (http://www.ubuntu.com/) 12.04 LTS was released. It is codenamed Precise Pangolin and is the successor to Oneiric Ocelot 11.10 (http://ubuntuguide.org/wiki/Ubuntu_Oneiric) (Oneiric+1). Precise Pangolin is an LTS (Long Term Support) release. It will be supported with security updates for both the desktop and server versions until April 2017. Contents 1 Ubuntu 12.04 LTS (Precise Pangolin) 1.1 Introduction 1.2 General Notes 1.2.1 General Notes 1.3 Other versions 1.3.1 How to find out which version of Ubuntu you're using 1.3.2 How to find out which kernel you are using 1.3.3 Newer Versions of Ubuntu 1.3.4 Older Versions of Ubuntu 1.4 Other Resources 1.4.1 Ubuntu Resources 1.4.1.1 Unity Desktop 1.4.1.2 Gnome Project 1.4.1.3 Ubuntu Screenshots and Screencasts 1.4.1.4 New Applications Resources 1.4.2 Other *buntu guides and help manuals 2 Installing Ubuntu 2.1 Hardware requirements 2.2 Fresh Installation 2.3 Install a classic Gnome-appearing User Interface 2.4 Dual-Booting Windows and Ubuntu 2.5 Installing multiple OS on a single computer 2.6 Use Startup Manager to change Grub settings 2.7 Dual-Booting Mac OS X and Ubuntu 2.7.1 Installing Mac OS X after Ubuntu 2.7.2 Installing Ubuntu after Mac OS X 2.7.3 Upgrading from older versions 2.7.4 Reinstalling applications after

July/Aug 2021

JULY/AUGUST 2021 CONNECTION. SEEING IS BELIEVING: Louisa Eye Care ventures into aesthetics. STARS AND STRIPES: Kentucky couple creates American flag art. ALL THE WORLD’S A STAGE: Broadband extends art beyond the theatre. INDUSTRY NEWS. Rural Connections, by SHIRLEY BLOOMFIELD, CEO, NTCA–The Rural Broadband Association. Partnering to fend off cyberattacks. In recent years, we’ve learned even the biggest of corporations, including Microsoft, Target and Marriott, are vulnerable to cyberattack. Then, last year, the pandemic increased the number of remote workers, moving more technology from the office into homes. “The pandemic gave cybercriminals the opportunity to discover new malware families, successful new tactics and ‘double extortion’ strategies,” says Roxanna Barboza, our Industry and Cybersecurity Policy analyst. “And since then, they have further honed their skills to exploit fear, gather intelligence and attack.” If this sounds like the trailer for a horror film you have no interest in seeing, I promise you, the possible impacts of a cyber breach ... TIPS FOR SECURE ONLINE SHOPPING. Convenience and a seemingly endless supply of options drive online shopping, which is safe as long as you take a few straightforward precautions. The Cybersecurity & Infrastructure Security Agency offers a few straightforward tips to ensure that no one uses your personal or financial information for their gain. THE THREATS 1. Unlike visiting a physical store, shopping online opens the doors to threats like malicious websites or bogus email messages. Some might appear as charities, particularly after a natural disaster or during the holidays. 2. Vendors who do not properly secure (encrypt) their online systems may allow an attacker to intercept your information.