Privacy in the Age of Facebook: Discourse, Architecture, Consequences

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Transcript of Proceedings Held on 06/23/08, Before Judge Ware. Court

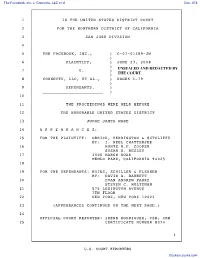

The Facebook, Inc. v. Connectu, LLC et al, Doc. 474. IN THE UNITED STATES DISTRICT COURT FOR THE NORTHERN DISTRICT OF CALIFORNIA, SAN JOSE DIVISION. THE FACEBOOK, INC., PLAINTIFF, V. CONNECTU, LLC, ET AL., DEFENDANTS. C-07-01389-JW. JUNE 23, 2008. SEALED; UNSEALED AND REDACTED BY THE COURT. PAGES 1-79. THE PROCEEDINGS WERE HELD BEFORE THE HONORABLE UNITED STATES DISTRICT JUDGE JAMES WARE.

APPEARANCES: FOR THE PLAINTIFF: ORRICK, HERRINGTON & SUTCLIFFE, BY: I. NEEL CHATTERJEE, MONTE M.F. COOPER, SUSAN D. RESLEY, 1000 MARSH ROAD, MENLO PARK, CALIFORNIA 94025. FOR THE DEFENDANTS: BOIES, SCHILLER & FLEXNER, BY: DAVID A. BARRETT, EVAN ANDREW PARKE, STEVEN C. HOLTZMAN, 575 LEXINGTON AVENUE, 7TH FLOOR, NEW YORK, NEW YORK 10022. (APPEARANCES CONTINUED ON THE NEXT PAGE.) OFFICIAL COURT REPORTER: IRENE RODRIGUEZ, CSR, CRR, CERTIFICATE NUMBER 8074. U.S. COURT REPORTERS.

APPEARANCES (CONT'D): FOR THE DEFENDANTS: FINNEGAN, HENDERSON, FARABOW, GARRETT & DUNNER, BY: SCOTT R. MOSKO, JOHN F. HORNICK, STANFORD RESEARCH PARK, 3300 HILLVIEW AVENUE, PALO ALTO, CALIFORNIA 94304. FENWICK & WEST, BY: KALAMA LUI-KWAN, 555 CALIFORNIA STREET, 12TH FLOOR, SAN FRANCISCO, CALIFORNIA 94104. ALSO PRESENT: BLOOMBERG NEWS, BY: JOEL ROSENBLATT, PIER 3, SUITE 101, SAN FRANCISCO, CALIFORNIA 94111. THE MERCURY NEWS, BY: CHRIS O'BRIEN, SCOTT DUKE HARRIS, 750 RIDDER PARK DRIVE, SAN JOSE, CALIFORNIA 94190. THE RECORDER, BY: ZUSHA ELINSON, 10 UNITED NATIONS PLAZA, SUITE 300, SAN FRANCISCO, CALIFORNIA 94102. CNET NEWS, BY: DECLAN MCCULLAGH, 1935 CALVERT STREET, NW #1, WASHINGTON, DC 20009. -

Top 50 Twitter Terms

NetLingo Top 50 Twitter Terms

1. @reply - A way to say something directly back to another person on Twitter @username, so it’s public for all to see
2. #word - When you put a hashtag before a word, it adds and sorts tweets into a category to display what's trending
3. bieber baiting - Using Justin Bieber’s name in posts to drive traffic to your online accounts (it’s actually illegal)
4. blogosphere - The shared space of blogs, crogs, flogs, microblogs, moblogs, placeblogs, plogs, splogs, vlogs
5. digital dirt - Unflattering information you may have written on social networking sites that can later haunt you
6. DM - Direct Message, a message between only you and the person you are sending it to; it is considered private
7. flash mob - A large group of people who gather suddenly in a public place, do something, then quickly disperse
8. FOMO - Fear Of Missing Out, online junkies paying partial attention to everything while scrolling through feeds
9. hashtag - When the hash sign (#) is added to a word or phrase, it lets users search for tweets similarly tagged
10. hashtag activism - Using Twitter's hashtags for Internet activism, for example #metoo #occupywallstreet
11. HT - Hat Tip, an abbreviation you use to attribute a link to one of your tweeps
12. indigenous content - User-generated content created by the digital natives for themselves
13. influencer - Active Twitter users who have influence on others due to their large number of followers
14. Larry the Bird - The name of the Twitter bird, in honor of the Celtics basketball legend Larry Bird
15. -

Do We Still Want Privacy in the Information Age? Marvin Gordon

Do we still want privacy in the information age? Marvin Gordon-Lickey PROLOGUE All those who can remember how we lived before 1970 can readily appreciate the many benefits we now enjoy that spring from the invention of digital computing. The computer and its offspring, the internet, have profoundly changed our lives. For the most part the changes have been for the better, and they have enhanced democracy. But we know from history that such large scale transformations in the way we live are bound to cause some collateral damage. And the computer revolution has been no exception. One particular casualty stands out starkly above the sea of benefits: we are in danger of losing our privacy. In the near future it will become technically possible for businesses, governments and other institutions to observe and record all the important details of our personal lives, our whereabouts, our buying habits, our income, our social and religious activities and our family life. It will be possible to track everyone, not just suspected criminals or terrorists. Even now, information about us is being detected, stored, sorted and analyzed by machine and on a vast scale at low cost. Information flows freely at light speed around the world. Spying is being automated. High tech scanners can see through our clothes, and we have to submit to an x-ray vision strip search every time we board an airplane. Although we have laws that are intended to protect us against invasion of privacy, the laws are antiquated and in most cases were written before the computer age. -

Social Media in Government

Social Media in Government Alex Howard Government 2.0 Correspondent O’Reilly Media Agenda • A brief history of social media • e-government, open government & “We government” • The growth and future of “Gov 2.0” What is social media? Try today’s Wikipedia entry: social media is media for social interaction, using highly accessible and scalable publishing techniques A read-write Web Think of it another way: Social media are messages, text, video or audio published on digital platforms that enable the community to create the content Social media isn’t new • Consider the Internet before the Web (1969-1991) • Used by military, academia and hackers • Unix-to-Unix Copy (UUCP), Telnet, e-mail Bulletin Board Services (BBS) (1979) Ward Christensen and the First BBS Usenet (1979) • First conceived of by Tom Truscott and Jim Ellis. • Usenet let users post articles or posts to newsgroups. Commercial online services (1979) Online chat rooms (1980) Internet Relay Chat (1988) • IRC was followed by ICQ in the mid-90s. • First IM program for PCs. World Wide Web (1991) Blogs (1994) • Blogging rapidly grew in use in 1999, when Blogger and LiveJournal launched. Wikis (1994) • Ward Cunningham started development on the first wiki in 1994 and installed it on c2.com in 1995. • Cunningham was in part inspired by Apple’s HyperCard • Cunningham developed Vannevar Bush’s ideas of “allowing users to comment on and change one another’s text” America Online (1995) Social networks (1997) From one to hundreds of millions • Six Degrees was the first modern social network. • Friendster followed in 2002. • MySpace founded in 2003. -

Facebook Adds Anonymous Login, in Move to Build Trust (Update) 30 April 2014, by Glenn Chapman

Facebook adds anonymous login, in move to build trust (Update) 30 April 2014, by Glenn Chapman

[Photo caption: Facebook CEO Mark Zuckerberg delivers the opening keynote at the Facebook f8 conference on April 30, 2014 in San Francisco, California]

Facebook moved Wednesday to bolster the trust of its more than one billion users by providing new controls on how much information is shared on the world's leading social network. In a major shift away from the notion long preached by Facebook co-founder and chief Mark Zuckerberg of having a single known identity online, people will be able to use applications anonymously at Facebook. The social network also provided a streamlined way for people to control which data applications can access and began letting people rein in what [...] time use them more," Zuckerberg told an audience of about 1,700 conference attendees. "That is positive for everyone."

In a statement Facebook explained its new "Anonymous Login" as an easy way for people to try an app without sharing personal information from Facebook. "People tell us they're sometimes worried about sharing information with apps and want more choice and control over what personal information apps receive," the company said. "Today's announcements put power and control squarely in people's hands."

People are scared

In coming weeks, Facebook will also roll out a redesigned dashboard to give users a simple way to manage or remove third-party applications linked to their profiles at the social network. "This is really big from a user standpoint," JibJab chief executive Gregg Spiridellis said of what he heard during the keynote presentation that opened the one-day Facebook conference. "I think they are seeing people are scared. They realize that long-term, they need to be trusted." A JibJab application that can synch with Facebook lets people personalize digital greeting cards with images of themselves or friends. -

La Protección De La Intimidad Y Vida Privada En Internet La Integridad

19th edition of the Personal Data Protection Research Prize of the Agencia Española de Protección de Datos (Spanish Data Protection Agency), 2015 prize. La protección de la intimidad y vida privada en internet: la integridad contextual y los flujos de información en las redes sociales (2004-2014), by Amaya Noain Sánchez.

Social networks have become a routine tool for communication and contact for millions of people around the world. In fact, it is estimated that more than 75% of people who regularly go online have at least one profile on a social network. In this text the author asks whether the companies that own these services give users enough information about what data they collect, what they will use it for, and whether it will be passed on to third parties. In a later reflection, she proposes as possible solutions that these companies adopt shared technical guidelines and a regulatory adaptation, built mainly on privacy by design, privacy by default, and informed consent. The result would be a layered information system in which users gradually learn the conditions under which their personal information is processed.

This book explains how a social network works, starting with the creation of the user profile in which data is supplied, and analyzes how the business structure is based on the monetization of personal data, with systems such as targeting (classifying users by their interests, characteristics, and preferences) and tracking down (cross-referencing information inside and outside the network). -

{PDF} the Facebook Effect: the Real Inside Story of Mark Zuckerberg

THE FACEBOOK EFFECT: THE REAL INSIDE STORY OF MARK ZUCKERBERG AND THE WORLDS FASTEST GROWING COMPANY PDF, EPUB, EBOOK David Kirkpatrick | 384 pages | 01 Feb 2011 | Ebury Publishing | 9780753522752 | English | London, United Kingdom The Facebook Effect: The Real Inside Story of Mark Zuckerberg and the Worlds Fastest Growing Company PDF Book The cover of the plus-page hardcover tome is the silhouette of a face made of mirror-like, reflective paper. Not bad for a Harvard dropout who later became a visionary and technologist of this digital era. Using the kind of computer code otherwise used to rank chess players perhaps it could also have been used for fencers , he invited users to compare two different faces of the same sex and say which one was hotter. View all 12 comments. There was a lot of time for bull sessions, which tended to center on what kind of software should happen next on the Internet. He searched around online and found a hosting company called Manage. Even for those not so keen on geekery and computers, the political wrangling of the company supplies plenty of drama. As Facebook spreads around the globe, it creates surprising effects—even becoming instrumental in political protests from Colombia to Iran. But there are kinks in the storytelling. In little more than half a decade, Facebook has gone from a dorm-room novelty to a company with million users. Sheryl Sandberg, COO: Sandberg is an elegant, slightly hyper, light-spirited forty- year-old with a round face whose bobbed black hair reaches just past her shoulders. -

Netflix and the Development of the Internet Television Network

Syracuse University SURFACE Dissertations - ALL SURFACE May 2016 Netflix and the Development of the Internet Television Network Laura Osur Syracuse University Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons Recommended Citation Osur, Laura, "Netflix and the Development of the Internet Television Network" (2016). Dissertations - ALL. 448. https://surface.syr.edu/etd/448 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract When Netflix launched in April 1998, Internet video was in its infancy. Eighteen years later, Netflix has developed into the first truly global Internet TV network. Many books have been written about the five broadcast networks – NBC, CBS, ABC, Fox, and the CW – and many about the major cable networks – HBO, CNN, MTV, Nickelodeon, just to name a few – and this is the fitting time to undertake a detailed analysis of how Netflix, as the preeminent Internet TV network, has come to be. This book, then, combines historical, industrial, and textual analysis to investigate, contextualize, and historicize Netflix's development as an Internet TV network. The book is split into four chapters. The first explores the ways in which Netflix's development during its early years as a DVD-by-mail company – 1998-2007, a period I am calling "Netflix as Rental Company" – laid the foundations for the company's future iterations and successes. During this period, Netflix adapted DVD distribution to the Internet, revolutionizing the way viewers receive, watch, and choose content, and built a brand reputation on consumer-centric innovation. -

Peter Thiel: What the Future Looks Like

Peter Thiel: What The Future Looks Like Peter Thiel: Hello, this is Peter. James Altucher: Hey, Peter. This is James Altucher. Peter Thiel: Hi, how are you doing? James Altucher: Good, Peter. Thanks so much for taking the time. I’m really excited for this interview. Peter Thiel: Absolutely. Thank you so much for having me on your show. James Altucher: Oh, no problem. So I’m gonna introduce you, but first I wanna mention congratulations your book – by the time your podcast comes out, your book will have just come out, Zero to One: Notes on Startups or How to Build a Future, and, Peter, we’re just gonna dive right into it. Peter Thiel: That’s awesome. James Altucher: So I want – before I break – I wanna actually, like, break down the title almost word by word, but before I do that, I want you to tell me what the most important thing that’s happened to you today because I feel like you’re – like, every other day you’re starting, like a Facebook or a PayPal or a SpaceX or whatever. What happened to you today that you looked at? Like, what interesting things do you do on a daily basis? Peter Thiel: Well, it’s – I don’t know that there’s a single thing that’s the same from day to day, but there’s always an inspiring number of great technology ideas, great science ideas that people are working on, and so even though there are many different reasons that I have concerns about the future and trends that I don’t like in our society and in the larger world, one of the things that always gives me hope is how much people are still trying to do, how many new technologies they’re trying to build. -

How Syrian Refugee Families Are Coping During COVID-19

Tragedy Tears Through Lebanon. Syrians Are Marginalized More Than Ever. As told by Syrian refugees whose lives remain in British Columbia, but compassion flies back home. Syrian refugee family’s youngest daughters, Nour Khalaf (nine years old) standing behind her little sister, Sarah Khalaf (three years old). PRINCE GEORGE, Canada - An eager ray of Saturday sunshine trickles in through Syrian refugee Aliyah Kora's window. The week has finally presented its gift. She instinctively goes downstairs, decamping to her living room. With her husband selling food at the local market and her five children fast asleep, this is the time she has for herself, her one respite, to help quiet the onslaught of worries that plague her thoughts. "Mama?" Her voice makes the turbulent journey to Turkey via WhatsApp, before reaching the ear of her mother. Though she dearly wishes to be in Syria with the rest of her children, Aliyah's mother seeks medical attention, a practically impossible phenomenon in her hometown, Aleppo, stemming from the sheer enormity of the cost. Aliyah's mother is in dire need of heart surgery, having been turned away from Lebanon she remains on the outskirts of Turkey. Aliyah spends the rest of the morning connecting with her brothers and sisters, who have been scattered helplessly throughout Syria, with some edging towards the borders, where food is scarce and death prevailing. The usual tranquility this morning brings her, is however, clouded. Burdened by a fresh layer of misery, afflicting displaced Syrians today, that she so narrowly escaped. This haze of sorrow came about on 4th August 2020 when an explosion fuelled by a large amount of ammonium nitrate stored at the port of Lebanon's capital city, Beirut, ripped through the city. -

Remembering World War II in the Late 1990s

REMEMBERING WORLD WAR II IN THE LATE 1990S: A CASE OF PROSTHETIC MEMORY By JONATHAN MONROE BULLINGER A dissertation submitted to the Graduate School-New Brunswick Rutgers, The State University of New Jersey In partial fulfillment of the requirements For the degree of Doctor of Philosophy Graduate Program in Communication, Information, and Library Studies Written under the direction of Dr. Susan Keith and approved by Dr. Melissa Aronczyk, Dr. Jack Bratich, Dr. Susan Keith, Dr. Yael Zerubavel New Brunswick, New Jersey January 2017 ABSTRACT OF THE DISSERTATION Remembering World War II in the Late 1990s: A Case of Prosthetic Memory JONATHAN MONROE BULLINGER Dissertation Director: Dr. Susan Keith This dissertation analyzes the late 1990s US remembrance of World War II utilizing Alison Landsberg’s (2004) concept of prosthetic memory. Building upon previous scholarship regarding World War II and memory (Beidler, 1998; Wood, 2006; Bodnar, 2010; Ramsay, 2015), this dissertation analyzes key works including Saving Private Ryan (1998), The Greatest Generation (1998), The Thin Red Line (1998), Medal of Honor (1999), Band of Brothers (2001), Call of Duty (2003), and The Pacific (2010) in order to better understand the version of World War II promulgated by Stephen E. Ambrose, Tom Brokaw, Steven Spielberg, and Tom Hanks. Arguing that this time period and its World War II representations -

Homeschooling, Freedom of Conscience, and the School As Republican Sanctuary

Homeschooling, freedom of conscience, and the school as republican sanctuary: An analysis of arguments representing polar conceptions of the secular state and religious neutrality. P. J. Oh. Master’s Thesis in Educational Leadership, Fall Term 2016, Department of Education, University of Jyväskylä.

ABSTRACT

Oh, Paul. 2016. Homeschooling, freedom of conscience, and the school as republican sanctuary: An analysis of arguments representing polar conceptions of the secular state and religious neutrality. Master's Thesis in Educational Leadership. University of Jyväskylä. Department of Education.

This paper examines how stances and understandings pertaining to whether home education is civically legitimate within liberal democratic contexts can depend on how one conceives normative roles of the secular state and the religious neutrality that is commonly associated with it. For the purposes of this paper, home education is understood as a manifestation of an educational philosophy ideologically based on a given conception of the good.

Two polar conceptions of secularism, republican and liberal-pluralist, are explored. Republican secularists declare that religious expressions do not belong in the public sphere and justify this exclusion by promoting religious neutrality as an end in itself. But liberal-pluralists claim that religious neutrality is only the means to ensure protection of freedom of conscience and religion, which are moral principles. Each conception is associated with its own stance on whether exemptions or accommodations on account of religious beliefs have special legal standing and are thereby warranted. The indeterminate nature of religion and allegedly biased exclusion of secular beliefs, cited by some when denying religious exemptions, can be overcome by understanding all religious and conscientious beliefs as having equal standing as conceptions of the good.