Color Image Processing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Management

Photoshop 5.0 was justifiably praised as a groundbreaking upgrade when it was released in the summer of 1998, although the changes made to the color management setup were less well received in some quarters. This was because the revised system was perceived to be complex and unnecessary. Bruce Fraser once said of the Photoshop 5.0 color management system ‘it’s push-button simple, as long as you know which of the 60 or so buttons to push!’ Attitudes have changed since then (as has the interface) and it is fair to say that most people working today in the pre-press industry are now using ICC color profile managed workflows. The aim of this chapter is to introduce the basic concepts of color management before looking at the color management interface in Photoshop and the various color management settings.

Adobe Photoshop CS6 for Photographers: www.photoshopforphotographers.com

The need for color management

An advertising agency art buyer was once invited to address a meeting of photographers. The chair, Mike Laye, suggested we could ask him anything we wanted, except ‘Would you like to see my book?’ And if he had already seen your book, we couldn’t ask him why he hadn’t called it back in again. And if he had called it in again we were not allowed to ask why we didn’t get the job. And finally, if we did get the job we were absolutely forbidden to ask why the color in the printed ad looked nothing like the original photograph! That in a nutshell is a problem which has bugged many of us throughout our working lives, and it is one which will be familiar to anyone who has ever experienced the difficulty of matching colors on a computer display with the original or a printed output.

Color Models

Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceivable

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l’Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• The irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should always end up at the same point)

Color Gamut
• The range of color representation of a display device

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is ‘bloody red’ in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black) – CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| cyan | red | blue and green |
| magenta | green | blue and red |
| yellow | blue | red and green |
| black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
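The excerpt names the RGB and CMY conversion without giving the formula. A minimal Python sketch follows, assuming the common idealized relation in which each subtractive primary is the complement of one additive primary (C = 1 - R, M = 1 - G, Y = 1 - B) with channels normalized to the range 0 to 1. The function names are illustrative and not taken from the slides; real printing inks need device-specific correction that this ignores.

```python
def rgb_to_cmy(r, g, b):
    """Idealized conversion: each dye absorbs exactly one additive primary."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same idealized assumption."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


# Pure red reflects no green or blue, so it needs full magenta and yellow.
red_as_cmy = rgb_to_cmy(1.0, 0.0, 0.0)
```

This agrees with the absorption table: cyan (1, 0, 0 in CMY) absorbs red and maps back to RGB (0, 1, 1), that is, blue and green.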

Chapter 6: Color Image Processing

CCU, Taiwan, Wen-Nung Lie

Color Fundamentals
• Spectrum that covers visible colors: 400 ~ 700 nm
• Three basic quantities
  – Radiance: energy that flows from the light source (measured in watts)
  – Luminance: a measure of the energy an observer perceives from a light source (in lumens)
  – Brightness: a subjective descriptor that is difficult to measure

About human eyes
• Primary colors for standardization: blue: 435.8 nm, green: 546.1 nm, red: 700 nm
• Not all visible colors can be produced by mixing these three primaries in various intensity proportions
• Cones in human eyes are divided into three sensing categories: 65% are sensitive to red light, 33% sensitive to green light, 2% sensitive to blue (but most sensitive)
• The R, G, and B colors perceived by human eyes cover a range of the spectrum

Primary and secondary colors of light and pigments
• Secondary colors of light: magenta (R+B), cyan (G+B), yellow (R+G); R+G+B = white
• Primary colors of pigments: magenta, cyan, and yellow; M+C+Y = black

Chromaticity
• Hue + saturation = chromaticity
  – hue: an attribute associated with the dominant wavelength or dominant colors perceived by an observer
  – saturation: relative purity, or the amount of white light mixed with a hue (the degree of saturation is inversely proportional to the amount of added white light)
• Color = brightness + chromaticity
• Tristimulus values (the amount of R, G, and B needed to form any particular color: X, Y, Z trichromatic

Color

13 Color

This section describes the toolbox functions that help you work with color image data. Note that “color” includes shades of gray; therefore much of the discussion in this chapter applies to grayscale images as well as color images. Topics covered include:

| Topic | Description |
|---|---|
| Terminology | Provides definitions of image processing terms used in this section |
| Working with Different Screen Bit Depths | Describes how to determine the screen bit depth of your system and provides recommendations if you can change the bit depth |
| Reducing the Number of Colors in an Image | Describes how to use imapprox and rgb2ind to reduce the number of colors in an image, including information about dithering |
| Converting to Other Color Spaces | Defines the concept of image color space and describes how to convert images between color spaces |

Terminology

An understanding of the following terms will help you to use this chapter.

| Term | Definition |
|---|---|
| Approximation | The method by which the software chooses replacement colors in the event that direct matches cannot be found. The methods of approximation discussed in this chapter are colormap mapping, uniform quantization, and minimum variance quantization. |
| Indexed image | An image whose pixel values are direct indices into an RGB colormap. In MATLAB, an indexed image is represented by an array of class uint8, uint16, or double. The colormap is always an m-by-3 array of class double. We often use the variable name X to represent an indexed image in memory, and map to represent the colormap. |
| Intensity image | An image consisting of intensity (grayscale) values. |
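The indexed-image definition above can be illustrated with a small lookup. This is a hedged Python sketch, not MATLAB toolbox code: it assumes zero-based indices and plain nested lists, reuses the variable names X and map from the excerpt (written `cmap` here to avoid shadowing Python's built-in `map`), and the helper name `indexed_to_rgb` is hypothetical.

```python
def indexed_to_rgb(X, cmap):
    """Replace every index in X with the RGB triple stored at that row of cmap."""
    return [[cmap[i] for i in row] for row in X]


# A 3-entry colormap (an m-by-3 table of values in the range 0 to 1).
cmap = [
    (0.0, 0.0, 0.0),  # index 0: black
    (1.0, 0.0, 0.0),  # index 1: red
    (1.0, 1.0, 1.0),  # index 2: white
]

# A 2-by-2 indexed image: each pixel stores an index, not a color.
X = [[0, 1],
     [2, 1]]

rgb = indexed_to_rgb(X, cmap)
```

Storing one small index per pixel plus a shared colormap is what makes reducing the number of colors in an image a space saving.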

What Resolution Should Your Images Be?

The best way to determine the optimum resolution is to think about the final use of your images. For publication you’ll need the highest resolution, for desktop printing lower, and for web or classroom use, lower still. The following table is a general guide; detailed explanations follow.

| Use | Pixel Size | Resolution | Preferred File Format | Approx. File Size |
|---|---|---|---|---|
| Projected in class | About 1024 pixels wide for a horizontal image; or 768 pixels high for a vertical one | 102 DPI | JPEG | 300–600 K |
| Web site | About 400–600 pixels wide for a large image; 100–200 for a thumbnail image | 72 DPI | JPEG | 20–200 K |
| Printed in a book or art magazine | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1800 x 1200 pixels. | 300 DPI | EPS or TIFF | 6–10 MB |
| Printed on a laserwriter | Multiply intended print size by resolution; e.g. an image to be printed as 6” W x 4” H would be 1200 x 800 pixels. | 200 DPI | EPS or TIFF | 2–3 MB |

Digital Camera Photos

Digital cameras have a range of preset resolutions which vary from camera to camera.

| Designation | Resolution | Max. Image Size at 300 DPI | Printable Size on a Color Printer |
|---|---|---|---|
| 4 Megapixels | 2272 x 1704 pixels | 7.5” x 5.7” | 12” x 9” |
| 3 Megapixels | 2048 x 1536 pixels | 6.8” x 5” | 11” x 8.5” |
| 2 Megapixels | 1600 x 1200 pixels | 5.3” x 4” | 6” x 4” |
| 1 Megapixel | 1024 x 768 pixels | 3.5” x 2.5” | 5” x 3 |

If you can, you generally want to shoot larger than you need, then sharpen the image and reduce its size in Photoshop.
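The rule "multiply intended print size by resolution" is simple arithmetic, sketched below in Python. The function names are illustrative; the inputs are taken from the guide's own examples.

```python
def pixels_for_print(width_in, height_in, dpi):
    """Pixel dimensions needed to print at the given size (inches) and DPI."""
    return (round(width_in * dpi), round(height_in * dpi))


def print_size_at(width_px, height_px, dpi):
    """Largest print size in inches for an image at the given DPI."""
    return (width_px / dpi, height_px / dpi)


book = pixels_for_print(6, 4, 300)      # book or art magazine example
laser = pixels_for_print(6, 4, 200)     # laserwriter example
four_mp = print_size_at(2272, 1704, 300)
```

Here `book` is 1800 x 1200 pixels and `laser` is 1200 x 800 pixels, matching the table, and `four_mp` comes out at roughly 7.6 x 5.7 inches, close to the 7.5” x 5.7” listed for a 4-megapixel camera.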

CIELab Color Space

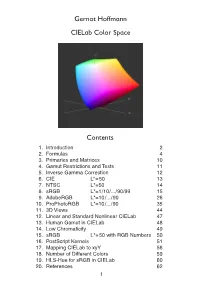

Gernot Hoffmann

Contents
1. Introduction
2. Formulas
3. Primaries and Matrices
4. Gamut Restrictions and Tests
5. Inverse Gamma Correction
6. CIE L*=50
7. NTSC L*=50
8. sRGB L*=/0/.../90/99
9. AdobeRGB L*=0/.../90
10. ProPhotoRGB L*=0/.../90
11. 3D Views
12. Linear and Standard Nonlinear CIELab
13. Human Gamut in CIELab
14. Low Chromaticity
15. sRGB L*=50 with RGB Numbers
16. PostScript Kernels
17. Mapping CIELab to xyY
18. Number of Different Colors
19. HLS-Hue for sRGB in CIELab
20. References

1.1 Introduction

CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X, Y, Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*, a*, b*.

The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces.

The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*, a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
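Two statements in the introduction translate directly into formulas: the projection of XYZ onto the xy chromaticity plane, and deltaE as a Euclidean distance in CIELab. The Python sketch below uses the standard chromaticity definitions x = X/(X+Y+Z) and y = Y/(X+Y+Z), which the excerpt itself does not spell out; the function names are illustrative.

```python
import math


def xyz_to_xy(X, Y, Z):
    """Project XYZ onto the chromaticity plane; luminance is discarded."""
    total = X + Y + Z
    if total == 0:
        raise ValueError("chromaticity is undefined for X = Y = Z = 0")
    return (X / total, Y / total)


def delta_e(lab1, lab2):
    """Color difference as the Euclidean distance between two (L*, a*, b*) triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


# Scaling X, Y, Z together changes intensity but not the chromaticity point.
same_point = xyz_to_xy(0.2, 0.4, 0.4) == xyz_to_xy(0.1, 0.2, 0.2)

# Two colors with equal lightness that differ by 3 in a* and 4 in b*.
difference = delta_e((50.0, 0.0, 0.0), (50.0, 3.0, 4.0))
```

This plain distance is the original CIE 1976 form of deltaE; later refinements weight the terms differently and are outside the scope of the excerpt.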

Indexed Color

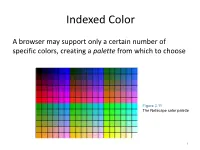

• A browser may support only a certain number of specific colors, creating a palette from which to choose

Figure 3.11 The Netscape color palette

QUIZ
How many bits are needed to represent this palette? Show your work.

How to digitize a picture
• Sample it → Represent it as a collection of individual dots called pixels
• Quantize it → Represent each pixel as one of 2^24 possible colors (TrueColor)
Resolution = the number of pixels used to represent a picture

Digitized Images and Graphics
Whole picture

Figure 3.12 A digitized picture composed of many individual pixels

Digitized Images and Graphics
Magnified portion of the picture. See the pixels?
Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800

QUIZ: Images
A low-res image has 200 rows and 300 columns of pixels.
• What is the resolution?
• If the pixels are represented in True-Color, what is the size of the file?
• Same question in High-Color

Two types of image formats
• Raster Graphics = Storage on a pixel-by-pixel basis
• Vector Graphics = Storage in vector (i.e. mathematical) form

Raster Graphics

GIF format
• Each image is made up of only 256 colors (indexed color – similar to palette!)
• But they can be a different 256 for each image!
• Supports animation! Example
• Optimal for line art

PNG format (“ping” = Portable Network Graphics)
• Like GIF but achieves greater compression with a wider range of color depth
• No animations

Bitmap format
• Contains the pixel color
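The quiz questions come down to two small calculations, sketched here in Python. The sketch assumes True-Color means 24 bits per pixel and High-Color means 16 bits per pixel, counts raw pixel data only (no file header, no compression), and uses illustrative function names. The palette figure is not reproduced in this excerpt, so the 256-entry palette below is the GIF limit mentioned in the slides, not the Netscape palette size.

```python
def bits_for_palette(num_colors):
    """Smallest number of bits that can give every palette entry a distinct index."""
    if num_colors < 1:
        raise ValueError("a palette needs at least one color")
    return (num_colors - 1).bit_length()


def raw_image_bytes(rows, cols, bits_per_pixel):
    """Uncompressed pixel data size in bytes, rounded up to whole bytes."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8


gif_index_bits = bits_for_palette(256)        # 256-color GIF palette
true_color = raw_image_bytes(200, 300, 24)    # 200 rows x 300 columns
high_color = raw_image_bytes(200, 300, 16)
```

For the 200 x 300 image this gives 60,000 pixels, so 180,000 bytes in True-Color and 120,000 bytes in High-Color; a 256-color palette needs 8 bits per index.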

The Effects of Learning Rate Schedules on Color Quantization

THE EFFECTS OF LEARNING RATE SCHEDULES ON COLOR QUANTIZATION

by Garrett Brown

A thesis presented to the Department of Computer Science and the Graduate School of the University of Central Arkansas in partial fulfillment of the requirements for the degree of Master of Science in Computer Science

Conway, Arkansas
May 2020

ProQuest Number: 27834147

All rights reserved

INFORMATION TO ALL USERS
The quality of this reproduction is dependent on the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion.

ProQuest 27834147
Published by ProQuest LLC (2020). Copyright of the Dissertation is held by the Author. All Rights Reserved. This work is protected against unauthorized copying under Title 17, United States Code. Microform Edition © ProQuest LLC.

ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346

TO THE OFFICE OF GRADUATE STUDIES:
The members of the Committee approve the thesis of Garrett Brown presented on March 31, 2020.

M. Emre Celebi, Ph.D., Committee Chairperson
Ahmad Patooghy, Ph.D.
Mahmut Karakaya, Ph.D.

PERMISSION

Title: The Effects of Learning Rate Schedules on Color Quantization
Department: Computer Science
Degree: Master of Science

In presenting this thesis/dissertation in partial fulfillment of the requirements for a graduate degree from the University of Central Arkansas, I agree that the Library of this University shall make it freely available for inspections. I further agree that permission for extensive copying for scholarly purposes may be granted by the professor who supervised my thesis/dissertation work, or, in the professor’s absence, by the Chair of the Department or the Dean of the Graduate School.

Jupiter Litho

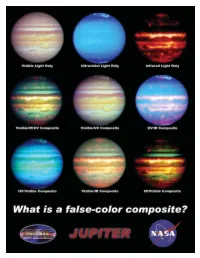

What Is a False-Color Composite?

When most of us think of light, we naturally think about the light we can see with our eyes. But did you know that visible light is only a tiny fraction of the electromagnetic spectrum? The human eye is only sensitive to certain wavelengths of light, and there are many other wavelengths, including radio waves, infrared light, ultraviolet light, x-rays, and gamma rays. When astronomers observe the cosmos, some objects are better observed at wavelengths that we cannot see.

To help us visualize what we're looking at, scientists and artists will sometimes create a false-color image. Basically, the wavelengths of light that our eyes can't see are represented by a color (or a shade of grey) that we can see. Even though these false-color images do not show us what the object we're observing would actually look like, they can still provide us with a great deal of insight about the object.

A composite is an image which combines different wavelengths into the same image. Since a composite isn't what an object really looks like, the same object can be represented in countless different ways to emphasize different features.

On the reverse are nine images of the planet Jupiter. The first image is a visible-light image, and shows the planet as it might look to our eyes. This image is followed by two false-color images, one showing the planet in the ultraviolet spectrum, and one showing it in the infrared spectrum. The six composites that follow are all made from the first three images, and you can see how different the planet can look!

Definitions

composite – An image that combines several different wavelengths of light that humans cannot see into one picture.
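One simple way to build such a composite is to assign each single-wavelength image to one display channel. The Python sketch below is an illustration under stated assumptions, not the method used for the Jupiter lithograph: the band-to-channel assignment (infrared to red, visible to green, ultraviolet to blue) is hypothetical, the tiny 2 x 2 arrays are made-up sample data, and `make_composite` is an illustrative name.

```python
def make_composite(red_band, green_band, blue_band):
    """Stack three same-sized grayscale images into one image of (R, G, B) pixels."""
    if not (len(red_band) == len(green_band) == len(blue_band)):
        raise ValueError("all bands must have the same number of rows")
    return [
        list(zip(r_row, g_row, b_row))
        for r_row, g_row, b_row in zip(red_band, green_band, blue_band)
    ]


# Made-up 2 x 2 brightness values (0 to 255) for three wavelength bands.
infrared = [[200, 10], [30, 40]]
visible = [[50, 60], [70, 80]]
ultraviolet = [[0, 255], [90, 100]]

# Hypothetical assignment: infrared shown as red, visible as green, ultraviolet as blue.
composite = make_composite(infrared, visible, ultraviolet)
```

Swapping the arguments produces a different composite from the same three source images, which is why one object can be represented in many ways.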

Foveon FO18-50-F19 4.5 MP X3 Direct Image Sensor

Foveon® FO18-50-F19 4.5 MP X3 Direct Image Sensor

The Foveon FO18-50-F19 is a 1/1.8-inch CMOS direct image sensor that incorporates breakthrough Foveon X3 technology. Foveon X3 direct image sensors capture full-measured color images through a unique stacked pixel sensor design. By capturing full-measured color images, the need for color interpolation and artifact-reducing blur filters is eliminated. The Foveon FO18-50-F19 features the powerful VPS (Variable Pixel Size) capability. VPS provides the on-chip capability of grouping neighboring pixels together to form larger pixels that are optimal for high frame rate, reduced noise, or dual mode still/video applications. Other advanced features include low fixed pattern noise, ultra-low power consumption, and integrated digital control.

Features

Foveon X3® Technology
• A stack of three pixels captures superior color fidelity by measuring full color at every point in the captured image.
• Images have improved sharpness and immunity to color artifacts (moiré).
• Foveon X3 technology directly converts light of all colors into useful signal information at every point in the captured image; no light-absorbing filters are used to block out light.

Variable Pixel Size (VPS) Capability
• Neighboring pixels can be grouped together on-chip to obtain the effect of a larger pixel.
• Enables flexible video capture at a variety of resolutions.
• Enables higher ISO mode at lower resolutions.
• Reduces noise by combining pixels.

On-Chip A/D Conversion
• Integrated 12-bit A/D converter running at up to 40 MHz.

[Block diagram: pixel array, 1440 columns x 1088 rows x 3 layers, with row readout control, row reset control, analog biases, analog supplies, and digital supplies]
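The effect of grouping neighboring pixels can be imitated in software. The Python sketch below averages square blocks of pixel values in one layer; it is only an analogy under stated assumptions, since the datasheet excerpt does not say how the sensor combines pixels on-chip, and the function name and sample values are hypothetical.

```python
def bin_pixels(layer, factor):
    """Average each factor-by-factor block of pixel values into one larger pixel.

    Rows and columns that do not fill a complete block are dropped.
    """
    rows = len(layer) - len(layer) % factor
    cols = len(layer[0]) - len(layer[0]) % factor
    binned = []
    for r in range(0, rows, factor):
        out_row = []
        for c in range(0, cols, factor):
            block = [
                layer[r + dr][c + dc]
                for dr in range(factor)
                for dc in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        binned.append(out_row)
    return binned


# Made-up 2 x 4 layer of pixel values, grouped into 2 x 2 blocks.
layer = [[1, 3, 10, 10],
         [5, 7, 10, 14]]
larger_pixels = bin_pixels(layer, 2)
```

Averaging several readings is one reason combined pixels are less noisy, at the cost of resolution: with 2 x 2 grouping, a 1440 x 1088 layer would become 720 x 544.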

False Color Photography Effect Using Hoya UV&IR Cut Filters Feature White Balance

MATEC Web of Conferences 197, 15008 (2018), AASEC 2018
https://doi.org/10.1051/matecconf/201819715008

False Color Photography Effect using Hoya UV&IR Cut Filters Feature White Balance

Setya Chendra Wibawa (1, 6, *), Asep Bayu Dani Nandyanto (2), Dodik Arwin Dermawan (3), Alim Sumarno (4), Naim Rochmawati (5), Dewa Gede Hendra Divayana (6), and Amadeus Bonaventura (6)

1 Universitas Negeri Surabaya, Educational in Information Technology, Informatics Engineering, 20231, Indonesia
2 Universitas Pendidikan Indonesia, Chemistry Department, Bandung, 40154, Indonesia
3 Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia
4 Universitas Negeri Surabaya, Educational Technology, 60231, Indonesia
5 Universitas Negeri Surabaya, Informatics Engineering, 60231, Indonesia
6 Universitas Pendidikan Ganesha, Educational in Information Technology, Informatics Engineering, 81116, Indonesia

Abstract. This research was inspired by modifying a DSLR camera to produce a false color photography effect. False color photography is a technique that gives results resembling near-infrared images. Infrared photography involves capturing invisible light to produce a striking image. The objective of this research is to determine the effect of converting a digital single-lens reflex (D-SLR) camera to false color. One can either add a filter and make minor adjustments, or modify the camera permanently by removing the hot mirror. This experiment replaces the usual hot mirror with a Hoya UV&IR Cut Filter in front of the CMOS sensor. The result is a false color effect when using the Auto White Balance feature: the colors of the photographed object shift toward reddish, purplish, or aged tones, and in point of fact the skin of the model appears smoother, depending on the White Balance level. The implication of this study is a wider variety of effects in photography.

1 Introduction

The camera sensor is, in essence, monochromatic.

Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location

Missouri University of Science and Technology, Scholars' Mine
Electrical and Computer Engineering Faculty Research & Creative Works, Electrical and Computer Engineering
01 Nov 2015

Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location

Ravneet Kaur, P. P. Albano, Justin G. Cole, Jason R. Hagerty, et al.
For a complete list of authors, see https://scholarsmine.mst.edu/ele_comeng_facwork/3045

Follow this and additional works at: https://scholarsmine.mst.edu/ele_comeng_facwork
Part of the Chemistry Commons, and the Electrical and Computer Engineering Commons

Recommended Citation
R. Kaur et al., "Real-Time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location," Skin Research and Technology, vol. 21, no. 4, pp. 466-473, John Wiley & Sons, Nov 2015. The definitive version is available at https://doi.org/10.1111/srt.12216

This Article - Journal is brought to you for free and open access by Scholars' Mine. It has been accepted for inclusion in Electrical and Computer Engineering Faculty Research & Creative Works by an authorized administrator of Scholars' Mine. This work is protected by U. S. Copyright Law. Unauthorized use including reproduction for redistribution requires the permission of the copyright holder. For more information, please contact [email protected].

Published in final edited form as: Skin Res Technol. 2015 November; 21(4): 466–473. doi:10.1111/srt.12216.

Real-time Supervised Detection of Pink Areas in Dermoscopic Images of Melanoma: Importance of Color Shades, Texture and Location

Ravneet Kaur, MS, Department of Electrical and Computer Engineering, Southern Illinois University Edwardsville, Campus Box 1801, Edwardsville, IL 62026-1801, Telephone: 618-210-6223, [email protected]

Peter P.