Signature Redacted
Signature Redacted

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Flag Football Rules

Divisions: Men’s and Women’s Leagues are offered. Subdivisions may be created as needed based on skill level.
1. Team Requirements
1.1 A team shall consist of seven players. A team can play with a minimum of 6 players.
1.2 The offensive team must have 4 players within 1 yard of the line of scrimmage at the time of the snap.
1.3 All players must have checked in with the scorekeeper and be recorded on the game sheet before they are allowed to participate.
1.4 Substitutions are allowed between plays and during time-outs.
1.5 All games shall be played on the date and hour scheduled. BE ON TIME.
2. Equipment and Facilities
2.1 All players must wear shoes.
2.2 Rubber cleated shoes will be allowed. No metal screw-in cleats, open-toe, open-heel or hard-soled shoes will be allowed.
2.3 Each player must wear pants or shorts without any belt(s), belt loop(s), pocket(s) or exposed drawstrings. A player may turn his/her shorts inside-out or tape his/her pockets in order to play.
2.4 All jewelry must be removed before participating.
2.5 Towels may not be worn; a towel may be kept behind the play.
2.6 Equipment such as helmets, billed hats, pads or braces worn above the waist, leg and knee braces made of hard, unyielding substances, or casts is strictly prohibited. Knee braces made of hard, unyielding substances covered on both sides with all edges overlapped, and any other hard substances covered with at least 2 inch of slow-recovery rubber or similar material, will be allowed.

WFFN National League Rules

***Credit given to the AFFL organization for the creation of these rules. Covid compliance guidelines will supersede any rules that may conflict with those guidelines.
WFFN National League Rules 2021
TABLE OF CONTENTS
1. Field
2. Pre-Game
3. The Throw-Off
4. Offensive Play, Sets of Downs and Huddles
5. Scoring
6. Time
7. Possessions & Formations
8. Penalties & Remedies
9. Ball
10. Rosters & Uniforms
11. Referees and Staff
12. Definitions
13. Miscellaneous Rules
14. Referee Signals
1. FIELD
1.1. The playing field shall be 120 yards long, with a width of 53 1/3 yards.
1.2. The four intersections of Goal Lines and Sidelines must be marked at inside corners of the End Zone and the Goal Line by pylons. Pylons must be placed at inside edges of white lines and should not touch the Field of Play. Pylons should also be placed at the two intersections of the Sidelines and the 50-yard Line, as well as the four intersections of Sidelines and 25-yard Lines.
1.3. All lines must be marked with a material that is not harmful to a person’s eyes or skin, and should be four inches wide, except for the Goal Lines, which should be eight inches wide.
1.4. All benches and other rigid features must be 10 yards or further back from the Out of Bounds line. If space permits, these features may be placed further back.
1.5. The playing surface shall consist of grass or artificial turf.
1.6. The field will be as represented in Figure 1.

Legacy Statewide 7V7 League - Official Rules 1

1. FIELD LENGTH:
A. 50 TOTAL YARDS: 40 YARDS WITH A 10-YARD END ZONE.
2. STARTING EACH GAME:
A. THE DESIGNATED HOME TEAM (2ND ON SCHEDULE) WILL BEGIN POSSESSION OF THE BALL ON THE +40 YARD LINE, WITH CHOICE OF HASH.
B. A HORN WILL BEGIN AND END EACH GAME.
C. EACH GAME LASTS 25 MINUTES, RUNNING TIME.
D. THE OFFICIAL WILL DECLARE WHEN THE CLOCK GOES UNDER THREE MINUTES.
E. EACH TEAM WILL BE ALLOWED ONE TIMEOUT PER GAME, TO STOP THE PLAY CLOCK ONLY. THE GAME CLOCK WILL NOT STOP FOR ANY REASON OTHER THAN A MAJOR INJURY. TIMEOUTS CANNOT BE TAKEN UNDER THREE MINUTES.
3. OFFENSE & MOVING THE BALL:
A. THE OFFENSE ALWAYS STARTS ON THE +40 WITH ITS CHOICE OF HASH AFTER ANY CHANGE OF POSSESSION.
B. THE OFFICIAL’S WHISTLE SIGNIFIES “READY TO PLAY” AFTER THE BALL IS SPOTTED.
C. EACH TEAM WILL HAVE 25 SECONDS TO PUT THE BALL INTO PLAY. DELAY OF GAME IS A LOSS OF DOWN.
D. THE OFFENSIVE TEAM IS RESPONSIBLE FOR RETRIEVING AND RETURNING THE BALL TO THE OFFICIAL. ANY DELAY BY THE OFFENSE IN RETRIEVING AND RETURNING THE BALL TO THE OFFICIAL WILL RESULT IN DELAY OF GAME AND LOSS OF DOWN.
E. THE QB IS ALLOWED FOUR SECONDS TO THROW. ONE-ONE THOUSAND...TWO-ONE THOUSAND...THREE-ONE THOUSAND...FOUR-ONE THOUSAND! REFEREES WILL STOP PLAY AT THE FOUR-SECOND MARK, WHICH WILL RESULT IN A LOSS OF DOWN.
F. THE OFFENSE HAS THREE DOWNS TO GAIN A FIRST DOWN. FIRST DOWN MARKERS WILL BE AT THE +25 AND +10 YARD LINES.

Guide for Statisticians © Copyright 2021, National Football League, All Rights Reserved

This document is the property of the NFL. It may not be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or information storage and retrieval systems, or the information therein disseminated to any parties other than the NFL, its member clubs, or their authorized representatives, for any purpose, without the express permission of the NFL.
Last Modified: July 9, 2021
Guide for Statisticians
Revisions to the Guide for the 2021 Season
Revisions to the Guide for the 2020 Season
Revisions to the Guide for the 2019 Season
Revisions to the Guide for the 2018 Season
Revisions to the Guide for the 2017 Season
Revisions to the Guide for the 2016 Season
Revisions to the Guide for the 2012 Season
Revisions to the Guide for the 2008 Season
Revisions to

Flag Football Rules Warning

Warning: Your participation in this sport could result in physical injury, which could be serious or fatal! The University of North Carolina Wilmington assumes no responsibility for injuries and related medical expenses incurred during Intramural Sports activities. Students, faculty and staff are reminded that Intramural participation is completely voluntary. It is strongly recommended that all participants have a physical examination and secure adequate medical insurance prior to participation.
All questions about rules/procedures not found in this document should be directed to the Competitive Sports staff. Staff may be contacted by email ([email protected]), by phone (910-962-PLAY (7529)), or in person in the Student Recreation Center, Room 162.
I. Requirements (valid UNCW One Card, uniform (flag football: shorts/pants without pockets or exposed drawstrings), etc.)
a. All participants must present a valid UNCW One Card before participating in any Intramural game.
b. Steel or metal cleats may not be used on the facilities (IM Fields, Gazebo, etc.).
c. All participants must wear appropriate athletic gear, including NO SLEEVELESS SHIRTS unless the team brings its own jerseys.
d. All shorts/pants must be free of belt loops, pockets, and exposed drawstrings.
e. Jewelry: NO JEWELRY! Earrings, rings, watches, bracelets and necklaces may not be worn during intramural competition. There will be no exceptions to this rule. Participants will be required to remove anything deemed harmful by the IM staff (this can include rubber bands, neckties, etc.). Any player wearing jewelry that cannot be removed quickly must leave the game and cannot return until the next dead-ball situation after the ball is returned to play.

Football Rules and Interpretations 2018 Edition

INTERNATIONAL FEDERATION OF AMERICAN FOOTBALL
FOOTBALL RULES AND INTERPRETATIONS 2018 EDITION
2018.2.2
Foreword
The rules are revised each year by IFAF to improve the sport’s level of safety and quality of play, and to clarify the meaning and intent of rules where needed. The principles that govern all rule changes are that they must:
• be safe for the participants;
• be applicable at all levels of the sport;
• be coachable;
• be administrable by the officials;
• maintain a balance between offense and defence;
• be interesting to spectators;
• not have a prohibitive economic impact; and
• retain some affinity with the rules adopted by NCAA in the USA.
IFAF statutes require all member federations to play by IFAF rules, except in the following regards:
1. national federations may adapt Rule 1 to meet local needs and circumstances, provided no adaption reduces the safety of the players or other participants;
2. competitions may adjust the rules according to (a) the age group of the participants and (b) the gender of the participants;
3. competition authorities have the right to amend certain specific rules (listed on page 13);
4. national federations may restrict the above so that the same regulations apply to all competitions under their jurisdiction.
These rules apply to all IFAF organised competitions and take effect from 1st March 2018. National federations may adopt them earlier for their domestic competitions.
For brevity, male pronouns are used extensively in this book, but the rules are equally applicable to female and male participants.
Table of

Football Manual 2020-21.Pdf

~2019-2020 STATE FOOTBALL CHAMPIONS~
Blum High School: 1A 6-Man Division I State Champion
Mart High School: 2A Division II State Champion
Richland Springs High School: 1A 6-Man Division II State Champion
Grandview High School: 3A Division I State Champion
Refugio High School: 2A Division I State Champion
Gunter High School: 3A Division II State Champion
Carthage High School: 4A Division I State Champion
Aledo High School: 5A Division II State Champion
Texarkana Pleasant Grove High School: 4A Division II State Champion
Galena Park North Shore High School: 6A Division I State Champion
Alvin Shadow Creek High School: 5A Division I State Champion
Austin Westlake High School: 6A Division II State Champion
~UIL PERSONNEL~
Dr. Susan Elza, UIL Director of Athletics, [email protected], 512-471-5883
Brian Polk, UIL Associate AD (Football Director), [email protected], 512-471-5883
Joseph Garmon, UIL Assistant AD, [email protected], 512-471-5883
Brandy Belk, UIL Assistant AD, [email protected], 512-471-5883
AJ Martinez, UIL Assistant AD, [email protected], 512-471-5883
~TABLE OF CONTENTS~
UIL Regulations
Football Calendar
UIL Rule Changes

Rule 14 Penalty Enforcement

(Governing all cases not otherwise specifically provided for)
Section 1 Spot From Which Penalty in Foul is Enforced
SPOTS OF ENFORCEMENT
Article 1 The general provisions of Rule 14 govern all spots of enforcement.
Note: The spot of enforcement for fouls by players or the actual distance penalty or both, when not specific, are subordinate to the specific rules governing a foul during a fumble, pass or kick. These in turn are both subordinate to Rule 14.
A.R. 14.1 Second-and-15 on A4. Quarterback A1 throws a legal pass which is incomplete. A2 held in end zone.
Ruling: Safety or A’s ball third-and-15 on A4.
FOULS BETWEEN DOWNS
Article 2 When a foul by a player occurs between downs, enforcement is from the succeeding spot (14-5-S.N. 3).
FOULS BY NON-PLAYERS
Article 3 Penalties for fouls committed by non-players shall be enforced as specifically provided under Rule 13.
ENFORCEMENT SPOT NOT GOVERNED
Article 4 When the spot of enforcement is not governed by a general or specific rule, it is the spot of the foul.
BASIC SPOTS OF ENFORCEMENT
Article 5 The basic spots of enforcement (3-11-1) are:
(a) The previous spot for a forward pass (8-6-11); a scrimmage kick (9-5-1); or a free kick (6-3-1).
(b) The dead ball spot on a running play (14-1-12).
(c) The spot of snap, backward pass, or fumble (8-7).
(d) The spot of the foul (14-1-4 and 14-1-13).

The Official Advanced Playing Rules of Electric Football

SPECIFICATIONS, PROCEDURES, REGULATIONS, AND GUIDELINES
Originally published 19 October 2015
POSITIONS AND RESPONSIBILITIES
COMMISSIONER
Provide supervision of all league activities. Provide an environment for committee activities. Provide final determination on league actions.
COMMITTEE (Chairman)
Answer questions relating to league activities. Pre-approve any substance in question. Grant waivers on a case-by-case basis for player deficiencies. Submit rules and guidelines for league play. Provide the commissioner with feedback. Solicit tournament directors’ feedback.
TOURNAMENT DIRECTOR
Oversee all tournament functions. Provide any equipment specific to the event that all coaches need to compete. Provide guidance to all members on any provisions not stated and/or exceptions to the rules. Supervise tournament officials.
TOURNAMENT OFFICIAL
Ensure all equipment and players are in compliance throughout the event. Perform pre-tournament inspections of equipment and players by various means. Determine which equipment and players are fit for competition. Assign/supervise referees.
REFEREE
Officiate the assigned game in accordance with tournament rules. Approve/set the proper vibrating speed. Ensure etiquette, fair play and sportsmanship are enforced throughout the game. Provide updated status on the game.
TIMEKEEPER/SCOREBOARD OPERATOR
Operate the clock and/or scoreboard at the direction of the referee. Notify all of time status.
MEMBER COACHES
Ensure all equipment and players are in compliance throughout the event, and provide them for inspection. Play the game in accordance with the rules. Establish the speed of the board. Declare to the inspector the metal element and its location on the figure prior to detection. Establish who will be responsible for moving both the yard markers and the 10-yard chain.

See Official Rules

AMERICAN FLAG FOOTBALL LEAGUE™ OFFICIAL RULES 2021
TABLE OF CONTENTS
1. Field
2. Ball
3. Rosters & Uniforms
4. Definitions
5. Time
6. Overtime
7. Scoring
8. Pre-Game
9. The Throw-Off
10. Set of Downs
11. Game Play and Formations
12. Punts
13. Possessions
14. Miscellaneous Rules
15. Penalties & Remedies
16. Referees and Staff
17. Referee Signals
1. FIELD
1.1. The playing field shall be 120 yards long, with a width of 53 1/3 yards.
1.2. The four intersections of Goal Lines and Sidelines must be marked at inside corners of the End Zone and the Goal Line by pylons. Pylons must be placed at inside edges of white lines and should not touch the Field of Play. Pylons should also be placed at the two intersections of the Sidelines and the 50-yard Line, as well as the four intersections of Sidelines and 25-yard Lines.
1.3. All lines must be marked with a material that is not harmful to a person’s eyes or skin, and should be four inches wide, except for the Goal Lines, which should be eight inches wide.
1.4. All benches and other rigid features must be 10 yards or further back from the Out of Bounds line. If space permits, these features may be placed further back.
1.5. The playing surface shall consist of grass or artificial turf.
1.6. The field will be as represented in Figure 1.
1.7. All markings or decorations on the field must not hinder the Players in any way.

Flag Football Rules Will Govern Play for Any Rules Not Mentioned in the Following Intramural Rules

SFP Flag Football League Rules
*Game time is forfeit time! The winning team of a forfeited game will receive fourteen points for the point differential of that game. Teams with fewer than the required number of participants at the scheduled start time will forfeit the contest.
*The officiating will be done by officials who are in absolute control of the game.
*Teams are responsible for keeping their spectators under control. Misconduct of spectators, players or coaches can result in assessment of a penalty, ejection or forfeiture of the game. Spectators must also remain in the area designated by the officials.
*The official and/or supervisor have the power to make decisions on any matters or questions not specifically covered in the rules.
*Each team is required to provide a scorekeeper and a down marker operator, who will be assigned by the IM Supervisor.
*The NIRSA Flag Football Rules will govern play for any rules not mentioned in the following intramural rules:
1. Team Requirements
A team shall consist of seven players. A team can play with a minimum of 5 players. The offensive team must have any 4 players on the line of scrimmage at the time of the snap. Substitutions are allowed between plays and during time-outs. All players must be recorded on the roster and waiver form (with personal signature) before they are allowed to participate. The designated team area is located between the 20-yard lines, two yards off the sideline. This area is for all players and a maximum of two coaches. The spectator area is designated as five yards from the sideline.

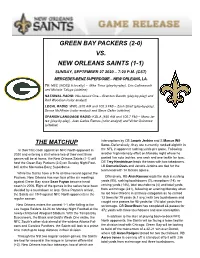

2020-3-New Orleans.Pub

GREEN BAY PACKERS (2-0) VS. NEW ORLEANS SAINTS (1-1)
SUNDAY, SEPTEMBER 27, 2020 – 7:20 P.M. (CST)
MERCEDES-BENZ SUPERDOME – NEW ORLEANS, LA.
TV: NBC (WDSU 6 locally) – Mike Tirico (play-by-play), Cris Collinsworth and Michele Tafoya (sideline)
NATIONAL RADIO: Westwood One – Brandon Gaudin (play-by-play) and Rod Woodson (color analyst)
LOCAL RADIO: WWL (870 AM and 105.3 FM) – Zach Strief (play-by-play), Deuce McAllister (color analyst) and Steve Geller (sideline)
SPANISH LANGUAGE RADIO: KGLA (830 AM and 105.7 FM) – Mario Jerez (play-by-play), Juan Carlos Ramos (color analyst) and Victor Quinonez (sideline)
THE MATCHUP
In their first clash against an NFC North opponent in 2020 and entering a stint where two of their next three games will be at home, the New Orleans Saints (1-1) will host the Green Bay Packers (2-0) on Sunday Night Football at the Mercedes-Benz Superdome.
While the Saints have a 9-16 all-time record against the Packers, New Orleans has won four of the six meetings against Green Bay since Sean Payton became head coach in 2006.
…interceptions by CB Janoris Jenkins and S Marcus Williams. Defensively, they are currently ranked eighth in the NFL in opponent rushing yards per game. Following another high-intensity effort on Monday night in which he posted five solo tackles, one sack and one tackle for loss, DE Trey Hendrickson leads the team with two takedowns. LB Demario Davis and Janoris Jenkins are tied for the team lead with 14 tackles apiece.
Offensively, RB Alvin Kamara leads the club in rushing yards (95), rushing touchdowns (3), receptions (14), re-