Data Integration Methods for Studying Animal Population Dynamics

Total Page:16



Recommended publications

-

Aboriginal Pantheon

ABORIGINAL PANTHEON There are many creation stories from the Aboriginals in Australia mainly because Australia is so large. The creation myth represented here is from the Bandicoot Aboriginal clan, in which Ka-Ro-Ra is the creator god. Aboriginals throughout Australia believe in Dreamtime (Alchera). This is the period long ago when the ancestral spirits of aboriginal tribes walked the earth and living creatures came into being. These ancestors are believed to have returned to their homes underground. Dreamtime is the source of all life. Below are some of the major gods found among the Aboriginals from different parts of Australia. ALINGA was the sun goddess. BUNJIL was a sky god (often painted as a great Eagle hawk). He was the creator god in the West of South Australia. He made the world and all things on it. He taught humans to sing and dance, and under his guidance humans gradually became wise in all things. In some stories it is said that he made men out of clay while his brother, Bat, made women out of water. DJANGGAO (sometimes spelled Junkgowa) and her sister Djunkgao were fertility goddesses. They created the ocean and all the fish found there. In Arnhemland (in Australia) the fertility goddesses are known as Wawalag. GIDJA was the god of the moon. He was in love with Yalma, the Evening Star. Yalma gave birth to the morning star, Lilga. When she died in an accident it was the first time anybody had died. People blamed Gidja for this and tried to kill him. They failed and Gidja cursed the people and told them that when they died they would stay dead. -

The Astronomy of the Kamilaroi and Euahlayi Peoples and Their Neighbours

The Astronomy of the Kamilaroi and Euahlayi Peoples and Their Neighbours By Robert Stevens Fuller A thesis submitted to the Faculty of Arts at Macquarie University for the degree of Master of Philosophy November 2014 © Robert Stevens Fuller I certify that the work in this thesis entitled “The Astronomy of the Kamilaroi and Euahlayi Peoples and Their Neighbours” has not been previously submitted for a degree nor has it been submitted as part of requirements for a degree to any other university or institution other than Macquarie University. I also certify that the thesis is an original piece of research and it has been written by me. Any help and assistance that I have received in my research work and the preparation of the thesis itself has been appropriately acknowledged. In addition, I certify that all information sources and literature used are indicated in the thesis. The research presented in this thesis was approved by Macquarie University Ethics Review Committee reference number 5201200462 on 27 June 2012. Robert S. Fuller (42916135) Contents: Dedication; Acknowledgements; Publications -

DOCUMENT RESUME ED 085 006 HE 004 900 TITLE the Teacher's

DOCUMENT RESUME ED 085 006 HE 004 900 TITLE The Teacher's Perspective. Selections From Reports by The Teachers of The Thirteen-College Curriculum Program 1968-69. INSTITUTION Institute for Services to Education, Washington, D.C. PUB DATE Mar 70 NOTE 196p. EDRS PRICE MF-$0.65 HC-$6.58 DESCRIPTORS Curriculum Development; *Educational Programs; *Evaluation; *Higher Education; *Negro Institutions; *Teacher Attitudes; Teacher Developed Materials; Teachers IDENTIFIERS Institute for Services to Education; ISE; TCCP; *Thirteen College Curriculum Program ABSTRACT The purpose of this document is to show how the teachers in the Thirteen-College Curriculum Program (TCCP) perceive what they are doing and how things are working out. The teachers in these reports present their own views on the values of the TCCP based on their own experiences. In some cases there seems to be general agreement concerning the value of a particular endeavor or aspect of the program, although a few teachers may disagree. The selections are grouped by the following fields: ideas and their expression; quantitative and analytical thinking; social institutions; biology and the physical sciences; humanities and philosophy; new questions, doubts, and hopes; and effect of the project on teachers. Within each field the selections are grouped under headings consisting of certain basic types: themes and approaches; surveys of the year; anecdotes; comparisons; reviews of books and films; general impressions and appraisals. Two sections of general comments are included at the end. (Author/PG) FILMED FROM BEST AVAILABLE COPY THE TEACHER'S PERSPECTIVE SELECTIONS FROM REPORTS BY THE TEACHERS OF THE THIRTEEN-COLLEGE CURRICULUM PROGRAM 1968-69 March 1970 Institute for Services to Education 1527 New Hampshire Avenue, N.W. -

Spellonyms As Linguo-Cultural Onomastic Units in Indigenous Folklore

E3S Web of Conferences 284, 08013 (2021) https://doi.org/10.1051/e3sconf/202128408013 TPACEE-2021 Spellonyms as linguo-cultural onomastic units in indigenous folklore Viktoriya Oschepkova1 and Nataliya Solovyeva1,* 1Moscow Region State University, 141014, Very Voloshinoy str, 24, city of Mytishi, Moscow Region, Russia Abstract. The undertaken research replenishes the pool of knowledge about folklore texts and the functions of spellonyms as signs saturated with national and cultural meanings. The study establishes and compares linguistic and cultural characteristics of spellonyms in the onomasticon of Australian and Nanaian aetiological tales. The authors proposed a typology of spellonyms which includes 5 thematic groups: nominations of deities of different nature; nominations of celestial bodies transformed from representatives of the tribe; nominations of objects of worship and magical rituals; nominations of magical natural phenomena; nominations of magical creatures. The results of the research demonstrate a significant prevalence in the number of deity nominations among Australian spellonyms, while the majority of Nanaian spellonyms refer to magical artefacts. The research has also proved the utmost significance of the water element in the folk worldview of Australian Aborigines and the equivalent importance of the water, land and air elements in the Nanai folk worldview. The obvious preference in both folklore traditions is given to nominations of native origin transcribed into the language of translation. The structural types of spellonyms vary from group to group, with the majority of monolexemic nominations. 1 Introduction Folklore works are closely related to national and regional traditions of a society, embody values and worldview of their creators, and act as a driving force for creativity and innovation. -

Abhiyoga Jain Gods

A babylonian goddess of the moon A-a mesopotamian sun goddess A’as hittite god of wisdom Aabit egyptian goddess of song Aakuluujjusi inuit creator goddess Aasith egyptian goddess of the hunt Aataentsic iroquois goddess Aatxe basque bull god Ab Kin Xoc mayan god of war Aba Khatun Baikal siberian goddess of the sea Abaangui guarani god Abaasy yakut underworld gods Abandinus romano-celtic god Abarta irish god Abeguwo melanesian rain goddess Abellio gallic tree god Abeona roman goddess of passage Abere melanesian goddess of evil Abgal arabian god Abhijit hindu goddess of fortune Abhijnaraja tibetan physician god Abhimukhi buddhist goddess Abhiyoga jain gods Abonba romano-celtic forest goddess Abonsam west african malicious god Abora polynesian supreme god Abowie west african god Abu sumerian vegetation god Abuk dinkan goddess of women and gardens Abundantia roman fertility goddess Anzu mesopotamian god of deep water Ac Yanto mayan god of white men Acacila peruvian weather god Acala buddhist goddess Acan mayan god of wine Acat mayan god of tattoo artists Acaviser etruscan goddess Acca Larentia roman mother goddess Acchupta jain goddess of learning Accasbel irish god of wine Acco greek goddess of evil Achiyalatopa zuni monster god Acolmitztli aztec god of the underworld Acolnahuacatl aztec god of the underworld Adad mesopotamian weather god Adamas gnostic christian creator god Adekagagwaa iroquois god Adeona roman goddess of passage Adhimukticarya buddhist goddess Adhimuktivasita buddhist goddess Adibuddha buddhist god Adidharma buddhist goddess -

Vol. 1, No. 2 September 2011

‘Research for Peace and Development’ SAVAP International Academic Research International Vol. 1, No. 2 September 2011 SAVAP International Bright Home, Lodhran City - 59320, PAKISTAN. URL: http:// www.journals.savap.org.pk ISSN-L: 2223-9553 ACADEMIC RESEARCH ISSN: 2223-9944 Print ISSN: 2223: 9952 CD INTERNATIONAL Academic Research International is open access journal. Any part of this journal may be reprinted or reproduced for academic and Vol. 1, No. 2, September 2011 research purpose only. EDITORIAL TEAM EDITORIAL BOARD Prof. Dr. T. F. "Tim" McLaughlin, Prof. Dr. Muhammad Aslam Adeeb, Editor: Gonzaga University, WA, USA. The Islamia University of Bhawalpur, Muhammad Ashraf Malik PAKISTAN. Prof. Dr. Hong Lin, Chairman, SAVAP International University of Houston-Downtown, Professor Dr. Ugur DEMIRAY, Houston, Texas, USA. Anadolu University, TURKEY. Associate Editor : Prof. Dr. Noraini Binti Idris, Prof. Dr. Chris Atkin, Prof. Hafiz Habib Ahmed University of Malaya, MALAYSIA. Liverpool Hope University, UK. Assistant Editors : Prof. Dr. Ghulam Shabir, Prof. Dr. Ken Kawan Soetato, Mian Muhammad Furqan The Islamia University of Bahawalpur, Waseda University, Tokyo, JAPAN. PAKISTAN. Rana Muhammad Dilshad Prof. Dr. Sinan Olkun, Prof. Dr Azman Bin Che Mat, Ankra University, TURKEY. Muhammad Rafiq Khwaja Universiti Teknologi Mara, MALAYSIA. Prof. Dr.Tahir Abbas, Hameedullah B. Z. University, Multan, PAKISTAN. Khawaja Shahid Mehmood Prof. Dr. Ata ATUN, Near East University, Prof. Dr. Rosnani Hashim, Salman Ali Khan NORTH CYPRUS. International Islamic University Kuala Lumpur, MALAYSIA. Malik Azhar Hussain Prof. Dr. Kyung-Sung Kim, Seoul National University of Prof. Dr. Alireza Jalilifar, Muhammad Akbar Education, SOUTH KOREA. Shahid Chamran University of Ahvaz, IRAN. Ms. Rafia Sultana Prof. -

Australian Legendary Tales

Cornell University Library. The original of this book is in the Cornell University Library. There are no known copyright restrictions in the United States on the use of the text. http://www.archive.org/details/cu31924029909060 AUSTRALIAN LEGENDARY TALES: FOLK-LORE OF THE NOONGAHBURRAHS AS TOLD TO THE PICCANINNIES. COLLECTED BY Mrs. K. LANGLOH PARKER, WITH INTRODUCTION BY ANDREW LANG, M.A. ILLUSTRATIONS BY A NATIVE ARTIST, AND A SPECIMEN OF THE NATIVE TEXT. LONDON: DAVID NUTT, 270-271, STRAND. MELBOURNE: MELVILLE, MULLEN & SLADE. 1896. [All rights reserved] DEDICATED TO PETER HIPPI, KING OF THE NOONGAHBURRAHS. Contents: PREFACE; INTRODUCTION, BY ANDREW LANG, M.A.; DINEWAN THE EMU, AND GOOMBLEGUBBON THE BUSTARD; THE GALAH, AND OOLAH THE LIZARD; BAHLOO THE MOON, AND THE DAENS; THE ORIGIN OF THE NARRAN LAKE; GOOLOO THE MAGPIE, AND THE WAHROOGAH; THE WEEOOMBEENS AND THE PIGGIEBILLAH; BOOTOOLGAH THE CRANE AND GOONUR THE KANGAROO RAT, THE FIRE MAKERS; WEEDAH THE MOCKING BIRD; THE GWINEEBOOS THE REDBREASTS; MEAMEI THE SEVEN SISTERS; THE COOKOOBURRAHS AND THE GOOLAHGOOL; THE MAYAMAH; THE BUNBUNDOOLOOEYS; OONGNAIRWAH AND GUINAREY; NARAHDARN THE BAT; MULLYANGAH THE MORNING STAR; GOOMBLEGUBBON, BEEARGAH, AND OUYAN; MOOREGOO THE MOPOKE, AND BAHLOO THE MOON; OUYAN THE CURLEW; DINEWAN THE EMU, AND WAHN THE CROWS; GOOLAHWILLEEL THE TOPKNOT PIGEONS -

Resistance of Inbred Lines of Corn (Zea Mays L.) to the Second Brood

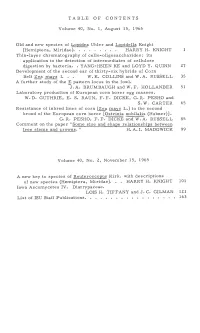

TABLE OF CONTENTS Volume 40, No. 1, August 15, 1965 Old and new species of Lopidea Uhler and Lopidella Knight (Hemiptera, Miridae). HARRY H. KNIGHT Thin-layer chromatography of cello-oligosaccharides: its application to the detection of intermediates of cellulose digestion by bacteria. YANG-HSIEN KE and LOYD Y. QUINN Development of the second ear of thirty-six hybrids of Corn Belt Zea mays L. W. K. COLLINS and W. A. RUSSELL A further study of the ~ pattern locus in the fowl. J. A. BRUMBAUGH and W. F. HOLLANDER Laboratory production of European corn borer egg masses. W. D. GUTHRIE, E. S. RAUN, F. F. DICKE, G. R. PESHO and S. W. CARTER Resistance of inbred lines of corn (Zea mays L.) to the second brood of the European corn borer [Ostrinia nubilalis (Hubner)]. G. R. PESHO, F. F. DICKE and W. A. RUSSELL Comment on the paper "Some size and shape relationships between tree stems and crowns." H. A. I. MADGWICK Volume 40, No. 2, November 15, 1965 A new key to species of Reuteroscopus Kirk. with descriptions of new species (Hemiptera, Miridae). HARRY H. KNIGHT Iowa Ascomycetes IV. Diatrypaceae. LOIS H. TIFFANY and J. C. GILMAN List of ISU Staff Publications Volume 40, No. 3, February 15, 1966 A contribution to the anatomical development of the acorn in Quercus L. H. LLOYD MOGENSEN Morphological investigations of the internal anatomy of the fifth larval instar of the European corn borer. H. G. DRECKTRAH, K. L. KNIGHT and T. -

Coordinated Bird Monitoring: Technical Recommendations for Military Lands

Prepared in cooperation with the DoD Natural Resources Program, Arlington, Virginia; Great Basin Bird Observatory, Reno, Nevada; U.S. Army Engineer Research and Development Center, Environmental Laboratory, Vicksburg, Mississippi; DoD Partners in Flight, Warrenton, Virginia Coordinated Bird Monitoring: Technical Recommendations for Military Lands Open-File Report 2010–1078 U.S. Department of the Interior U.S. Geological Survey Coordinated Bird Monitoring: Technical Recommendations for Military Lands By Jonathan Bart and Ann Manning, U.S. Geological Survey; Leah Dunn, Great Basin Bird Observatory; Richard Fischer and Chris Eberly, Department of Defense Partners in Flight Prepared in cooperation with the DoD Natural Resources Program, Arlington, Virginia; Great Basin Bird Observatory, Reno, Nevada; U.S. Army Engineer Research and Development Center, Environmental Laboratory, Vicksburg, Mississippi; DoD Partners in Flight, Warrenton, Virginia A Report Prepared for the Department of Defense Legacy Resource Management Program Legacy Project # 05-246, 06-246, 07-246 Open-File Report 2010–1078 U.S. Department of the Interior U.S. Geological Survey U.S. Department of the Interior KEN SALAZAR, Secretary U.S. Geological Survey Marcia K. McNutt, Director U.S. Geological Survey, Reston, Virginia: 2012 For more information on the USGS—the Federal source for science about the Earth, its natural and living resources, natural hazards, and the environment, visit http://www.usgs.gov or call 1-888-ASK-USGS. For an overview of USGS information products, including maps, imagery, and publications, visit http://www.usgs.gov/pubprod To order this and other USGS information products, visit http://store.usgs.gov The DoD Legacy Resource Management Program funded this project. -

HERBICIDES in Asian Rice: Transitions in Weed Management, Ed

DEDICATION To Keith Moody WEED SCIENTIST As an agronomist and leading weed scientist at two major centers in the CG System—the International Rice Research Institute (IRRI) from 1975 to 1995 and the International Institute of Tropical Agriculture (IITA) from 1969 to 1975—Keith Moody has played a critical role in designing strategies for weed management as part of the Green Revolution for rice. He has worked with scientists in nearly all countries of Asia and West Africa where rice is grown, and he has published more than 200 research papers in internationally refereed journals. His professional memberships include International Weed Science Society (president, 1984-88), Asian-Pacific Weed Science Society (treasurer, 1984-90), and Weed Science Society of the Philippines (president, 1984-85; vice-president 1983-84, member of the board of directors, 1976-86 and 1988). His professional service includes Weed Abstracts (editorial advisory board member), Crop Protection (international editorial board member), and Journal of Plant Protection in the Tropics (panel of reviewers-members). He coordinated IRRI’s weed science training short course and guided more than 30 degree candidates and research fellows. Keith Moody has challenged weed scientists throughout Asia and Africa to pay close attention to farmers and their traditional methods of weed control. He has sought to develop an integrated weed management strategy for rice production that is scientifically sophisticated, compatible with traditional pest management practices, profitable for farmers, and nondamaging to the environment. His knowledge of farmers’ behavior and field conditions with respect to weeds is unsurpassed. It is to his excitement for weed science and to his deep concern for the welfare of rice farmers and the health of rice-based ecosystems that this volume is dedicated. -

Syllabus of Biotechnology

Syllabus of Biotechnology (B. Sc. I, II & III Year) Session 2018-2019, 2019-2020, 2020-2021 B.Sc.-I BIOTECHNOLOGY BoS approved syllabus for B.Sc. Biotechnology (Academic session 2018-19, 2019-20 and 2020-21) B.Sc.-I BIOTECHNOLOGY PAPER-I BIOCHEMISTRY, BIOSTATISTICS AND COMPUTERS UNIT-I 1. Introduction to Biochemistry: History, Scope and Development. 2. Carbohydrates: Classification, Structure and Function of Mono, Oligo and Polysaccharides. 3. Lipids: Structure, Classification and Function. UNIT-II 1. Amino acids and Proteins: Classification, Structure and Properties of amino acids, Types of Proteins and their Classification and Function. 2. Enzymes: Nomenclature and Classification of enzymes, Mechanism of enzyme action, Enzyme Kinetics and Factors affecting enzyme action. Immobilization of enzymes and their application. UNIT-III 1. Hormones: Plant Hormone-Auxin and Gibberellins and Animal Hormone-Pancreas and Thyroid. 2. Carbohydrates, Proteins and Lipid Metabolism - Glycolysis, Glycogenesis, Gluconeogenesis, Glycogenolysis and Krebs cycle. Electron Transport Chain and β-oxidation of Fatty acids. UNIT-IV 1. Scope of Biostatistics, Samples and Population concept, Collection of data-sampling techniques, Processing and Presentation of data. 2. Measures of Central Tendency: Mean, Median and Mode and Standard Deviation. 3. Probability Calculation: Definition of probability, Theorem on total and compound probability. UNIT-V 1. Computers - General introduction, Organization of computer, Digital and Analogue Computers and Computer Algorithm. 2. Concept of Hardware and Software, Input and Output Devices. 3. Application of computer in co-ordination of solute concentration, pH and Temperature etc., of a Fermenter in operation and Internet application. List of Books 1. -

On the Astronomical Knowledge and Traditions of Aboriginal Australians

On the Astronomical Knowledge and Traditions of Aboriginal Australians By Duane Willis Hamacher II A thesis submitted in fulfilment of the requirements for the degree of Doctor of Philosophy | December 2011 © Duane Willis Hamacher II. Typeset in LaTeX 2ε. Except where acknowledged in the customary manner, the material presented in this thesis is, to the best of my knowledge, original and has not been submitted in whole or part for a degree in any university. Duane Willis Hamacher II Contents: Dedication; Acknowledgements; Abstract; Preface; List of Publications; List of Figures; List of Tables; 1 Introduction; 1.1 Hypotheses; 1.2 Aims; 1.3 Objectives; 1.4 Structure; 2 Discipline, Theory, & Methodology; 2.1 Cultural Astronomy; 2.1.1 Archaeoastronomy & Ethnoastronomy; 2.1.2 Historical Astronomy; 2.1.3 Geomythology; 2.2 Theory; 2.3 Methodology; 2.4 Rigour; 3 Indigenous History & Culture; 3.1 Human Migration to Australia; 3.2 Language & The Dreaming; 3.3 Indigeneity & Self-Identification; 3.4 Researching Indigenous Knowledge; 4 Review of Aboriginal Astronomy; 4.1 What is Aboriginal Astronomy?; 4.2 Why Study Aboriginal Astronomy?; 4.3 Literature on Aboriginal Astronomy; 4.4 Examples of Aboriginal Astronomy; 4.4.1 Timekeeping & Written Records; 4.4.2 Observations of Planetary Motions; 4.4.3 Astronomy in Stone Arrangements & Rock Art; 5 Dating Techniques; 5.1 Introduction