Google Search Algorithm Updates Against Web Spam

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Search Engine Optimization: A Survey of Current Best Practices

Grand Valley State University, ScholarWorks@GVSU. Technical Library, School of Computing and Information Systems, 2013.

Search Engine Optimization: A Survey of Current Best Practices. Niko Solihin, Grand Valley State University, Grand Rapids, MI, USA, [email protected]

Follow this and additional works at: https://scholarworks.gvsu.edu/cistechlib

ScholarWorks Citation: Solihin, Niko, "Search Engine Optimization: A Survey of Current Best Practices" (2013). Technical Library. 151. https://scholarworks.gvsu.edu/cistechlib/151

This Project is brought to you for free and open access by the School of Computing and Information Systems at ScholarWorks@GVSU. It has been accepted for inclusion in Technical Library by an authorized administrator of ScholarWorks@GVSU. For more information, please contact [email protected].

A project submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Information Systems at Grand Valley State University, April, 2013.

ABSTRACT: With the rapid growth of information on the web, search engines have become the starting point of most web-related tasks. In order to reach more viewers, a website must improve its organic ranking in search engines. This paper introduces the concept of search engine optimization (SEO) and provides an architectural overview of the predominant search engine, Google.

2. Build and maintain an index of sites' keywords and links (indexing)
3. Present search results based on reputation and relevance to users' keyword combinations (searching)

The primary goal is to effectively present high-quality, precise search results while efficiently handling a potentially ...

Click Trajectories: End-To-End Analysis of the Spam Value Chain

Click Trajectories: End-to-End Analysis of the Spam Value Chain

Kirill Levchenko, Andreas Pitsillidis, Neha Chachra, Brandon Enright, Márk Félegyházi, Chris Grier, Tristan Halvorson, Chris Kanich, Christian Kreibich, He Liu, Damon McCoy, Nicholas Weaver, Vern Paxson, Geoffrey M. Voelker, Stefan Savage

Affiliations: Department of Computer Science and Engineering, University of California, San Diego; Computer Science Division, University of California, Berkeley; International Computer Science Institute, Berkeley, CA; Laboratory of Cryptography and System Security (CrySyS), Budapest University of Technology and Economics

Abstract: Spam-based advertising is a business. While it has engendered both widespread antipathy and a multi-billion dollar anti-spam industry, it continues to exist because it fuels a profitable enterprise. We lack, however, a solid understanding of this enterprise's full structure, and thus most anti-spam interventions focus on only one facet of the overall spam value chain (e.g., spam filtering, URL blacklisting, site takedown). In this paper we present a holistic analysis that quantifies the full set of resources employed to monetize spam email (including naming, hosting, payment and fulfillment) using extensive measurements of three months of diverse spam data, broad crawling of naming and hosting infrastructures, and over 100 purchases from spam-advertised sites.

... it is these very relationships that capture the structural dependencies (and hence the potential weaknesses) within the spam ecosystem's business processes. Indeed, each distinct path through this chain (registrar, name server, hosting, affiliate program, payment processing, fulfillment) directly reflects an "entrepreneurial activity" by which the perpetrators muster capital investments and business relationships to create value. Today we lack insight into even the most basic characteristics of this activity. How many organizations are complicit in the spam ecosystem? Which ...

SEO Techniques for Various Applications - a Comparative Analyses and Evaluation

IOSR Journal of Computer Engineering (IOSR-JCE), e-ISSN: 2278-0661, p-ISSN: 2278-8727, PP 20-24, www.iosrjournals.org

SEO Techniques for various Applications - A Comparative Analyses and Evaluation

Sandhya Dahake ([email protected], G.H.R.I.T., Nagpur, RTM Nagpur University, India); Dr. V. M. Thakare ([email protected], P.G. Dept of Computer Science, SGB Amravati University, Amravati, India); Dr. Pradeep Butey ([email protected], Head, Dept of Computer Science, Kamla Nehru College, Nagpur, India)

Abstract: In this information age, website management is becoming a hot topic, and search engines have a growing influence on websites. Many website managers are therefore trying to make efforts toward Search Engine Optimization (SEO). Different SEO techniques are used so that search engines transfer information efficiently and accurately and so that web search ranking improves. The purpose of this paper is a comparative study of applications of SEO techniques used for increasing the page rank of undeserving websites. With the help of specific SEO techniques, SEO aims to optimize the website to increase its search engine ranking and number of web visits, and eventually to increase its ability to sell and publicize, through the study of how search engines scrape internet pages, index them, and determine the ranking for a specific keyword. These techniques are combined with the page rank algorithm, which helps to discover the target page. Search Engine Optimization, as a kind of optimization technique that can promote a website's ranking in the search engine, will also get more and more attention. Therefore, the continuous improvement of network technology is also one of the focuses of search engine optimization.

Social Information Retrieval Systems: Emerging Technologies and Applications for Searching the Web Effectively

Social Information Retrieval Systems: Emerging Technologies and Applications for Searching the Web Effectively Dion Goh Nanyang Technological University, Singapore Schubert Foo Nanyang Technological University, Singapore INFORMATION SCIENCE REFERENCE Hershey • New York Acquisitions Editor: Kristin Klinger Development Editor: Kristin Roth Senior Managing Editor: Jennifer Neidig Managing Editor: Sara Reed Copy Editor: Maria Boyer Typesetter: Cindy Consonery Cover Design: Lisa Tosheff Printed at: Yurchak Printing Inc. Published in the United States of America by Information Science Reference (an imprint of IGI Global) 701 E. Chocolate Avenue, Suite 200 Hershey PA 17033 Tel: 717-533-8845 Fax: 717-533-8661 E-mail: [email protected] Web site: http://www.igi-global.com/reference and in the United Kingdom by Information Science Reference (an imprint of IGI Global) 3 Henrietta Street Covent Garden London WC2E 8LU Tel: 44 20 7240 0856 Fax: 44 20 7379 0609 Web site: http://www.eurospanonline.com Copyright © 2008 by IGI Global. All rights reserved. No part of this publication may be reproduced, stored or distributed in any form or by any means, electronic or mechanical, including photocopying, without written permission from the publisher. Product or company names used in this set are for identification purposes only. Inclusion of the names of the products or companies does not indicate a claim of ownership by IGI Global of the trademark or registered trademark. Library of Congress Cataloging-in-Publication Data Social information retrieval systems -

SEO On-Page Optimization

SEO ON-PAGE OPTIMIZATION: Equipping Your Website to Become a Powerful Marketing Platform. Copyright 2016 SEO GLOBAL MEDIA.

TABLE OF CONTENTS: Introduction; Chapter I: Codes, Tags, and Metadata; Chapter II: Landing Pages and Buyer Psychology; Chapter III: Conclusion.

INTRODUCTION

Being indexed and ranked on the search engine results pages (SERPs) depends on many factors, beginning with the different elements on each page of your website. Optimizing these factors helps search engine crawlers find your website, index the pages appropriately, and rank it according to your desired keywords. On-page optimization plays a big role in ensuring your online marketing campaign's success. In this definitive guide, you will learn how to successfully optimize your website to ensure that it is indexed and ranked on the SERPs. In addition, you'll learn how to make your web pages convert.

CODES, MARK-UPS, AND METADATA

Let's get technical. Having clean code, optimized HTML tags and metadata helps search engines crawl your site better and index and rank your pages according to the relevant search terms. Make sure to check the following:

Source Codes: Your source code is the backbone of your website. The crawlers find everything they need in order to index your website here. Make sure your source code is devoid of any problems by checking the following:

INCORRECTLY IMPLEMENTED TAGS: Examples of these are rel=canonical tags, authorship mark-up, or redirects. These could prove catastrophic, especially the canonical code, which can end up in duplicate content penalties.
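The canonical-tag check mentioned in the excerpt above can be illustrated with a small Python sketch that uses only the standard library. It is not taken from the guide: the class `CanonicalFinder`, the helpers `find_canonicals` and `canonical_problem`, and the two problem labels are hypothetical names chosen for this illustration, and the sketch only flags missing or conflicting canonical links rather than every way a tag can be implemented incorrectly.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag (hypothetical helper)."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        # rel may hold several space-separated tokens; values can be None.
        rel_tokens = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_tokens and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html):
    """Return all canonical URLs declared in the given HTML string."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


def canonical_problem(html):
    """Return a short problem label, or None if the page declares one canonical URL."""
    found = find_canonicals(html)
    if not found:
        return "no canonical link"
    if len(set(found)) > 1:
        return "conflicting canonical links"
    return None
```

A page with exactly one canonical URL passes; a page with none, or with two different canonical URLs, gets a label that an audit script could report.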

ICT as a Significant Factor of Competitiveness (ICT jako významný faktor konkurenceschopnosti)

Czech University of Life Sciences Prague (Česká zemědělská univerzita v Praze), Faculty of Economics and Management, Department of Information Technologies. ICT as a Significant Factor of Competitiveness, doctoral dissertation. Author: Ing. Pavel Šimek. Supervisor: Doc. PhDr. Ivana Švarcová, CSc. © 2007

Declaration: I declare that I wrote the dissertation on the topic "ICT as a Significant Factor of Competitiveness" independently and that I used the sources listed in the attached bibliography. Prague, 21 September 2007, Pavel Šimek

Acknowledgements: I would like to take this opportunity to thank my supervisor, Doc. PhDr. Ivana Švarcová, CSc., for her willingness and expert guidance, not only during the writing of this thesis but throughout my studies. I also thank Ing. Karel Jiránek and Bc. Irena Krupičková for their help with the practical implementation of the methodical document optimization procedure on several real projects.

Summary: This dissertation deals with the optimization of documents and entire websites for full-text search engines and is divided into two main parts. The first part covers the theoretical basis: the principles and capabilities of the World Wide Web service, the principles and capabilities of full-text search engines, an overview of known Search Engine Optimization techniques, the latest development trends in Search Engine Marketing, and an analysis of how various factors influence a search engine's assessment of a web page's relevance. The second part fulfills the main goal of the dissertation, namely proposing a methodical and verified procedure for optimizing a document for specific keywords for the most widely used full-text search engines, from the very beginning, ...

Internet Multimedia Information Retrieval Based on Link

Internet Multimedia Information Retrieval based on Link Analysis, by Chan Ka Yan. Supervised by Prof. Christopher C. Yang. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Philosophy in Division of Systems Engineering and Engineering Management. © The Chinese University of Hong Kong, August 2004. The Chinese University of Hong Kong holds the copyright of this thesis. Any person(s) intending to use a part or whole material in the thesis in proposed publications must seek copyright release from the Dean of Graduate School.

Acknowledgement: I wish to gratefully acknowledge the major contribution made to this paper by Prof. Christopher C. Yang, my supervisor, for providing me with the idea to initiate the project as well as guiding me through the whole process; Prof. Wei Lam and Prof. Jeffrey X. Yu, my internal markers, and my external marker, for giving me invaluable advice during the oral examination to improve the project. I would also like to thank my classmates and friends, Tony in particular, for their help and encouragement shown throughout the production of this paper. Finally, I have to thank my family members for their patience and consideration and for doing my share of the domestic chores while I worked on this paper.

Abstract: Ever since the invention of the World Wide Web (WWW), which can be regarded as an electronic library storing billions of information sets with different types of media, enhancing the efficiency of searching the WWW has become the major challenge in the Internet world, while different web search engines are the tools for obtaining the necessary materials through various information retrieval algorithms.

PIA National Agency Marketing Guide!

Special Report: Agents' Guide to Internet Marketing. PIA National Agency Marketing Guide 2011. A product of the PIA Branding Program.

Welcome to the second edition of the PIA National Agency Marketing Guide! The 2011 edition of this PIA Branding Program publication follows the highly acclaimed 2010 edition, which focused on agent use of social media. While social media continues to be of major interest to agents across America, many of the questions coming in to PIA National's office this year had to do with the Internet. PIA members wanted to know how to best position their agency website, how to show up in search engines, how to use email marketing, and more. Our special report, the Agents' Guide to Internet Marketing, helps to answer these questions. Whether you handle most of your agency's marketing in-house, or whether you outsource many of these functions, you'll find a treasure trove of things to implement, things to consider and things to watch out for.

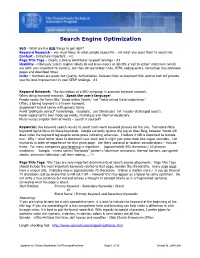

SEO - What Are the BIG Things to Get Right? Keyword Research – You Must Focus on What People Search for - Not What You Want Them to Search For

Search Engine Optimization (SEO): What are the BIG things to get right?

Keyword Research: You must focus on what people search for, not what you want them to search for (#1).
Content: Extremely important (#2).
Page Title Tags: Clearly a strong contributor to good rankings (#3).
Usability: Obviously search engine robots do not know colors or identify a 'call to action' statement (which are both very important to visitors), but they do see broken links, HTML coding errors, contextual links between pages and download times.
Links: Numbers are good, but quality, authoritative, relevant links on keyword-rich anchor text will provide you the best improvement in your SERP rankings.

#1 Keyword Research: The foundation of an SEO campaign is accurate keyword research. When doing keyword research: Speak the user's language! People search for terms like "cheap airline tickets," not "value-priced travel experience." Often, a boring keyword is a known keyword. Supplement brand names with generic terms. Avoid "politically correct" terminology (example: use 'blind users', not 'visually challenged users'). Favor legacy terms over made-up words, marketese and internal vocabulary. Plural versus singular form of words: search it yourself!

Keywords: Use keyword search results to select multi-word keyword phrases for the site. Formulate the Meta Keyword tag to focus on these keywords. Google currently ignores the tag, as does Bing; however, Yahoo still indexes the keyword tag despite some press indicating otherwise. I believe it is still important to include one. Why? What better place to document your work, and it might just come back into vogue someday. List keywords in order of importance for that given page.

The Anatomy of a Large-Scale Social Search Engine

WWW 2010, Full Paper, April 26-30, Raleigh, NC, USA

The Anatomy of a Large-Scale Social Search Engine. Damon Horowitz, Aardvark, [email protected]; Sepandar D. Kamvar, Stanford University, [email protected]

ABSTRACT: We present Aardvark, a social search engine. With Aardvark, users ask a question, either by instant message, email, web input, text message, or voice. Aardvark then routes the question to the person in the user's extended social network most likely to be able to answer that question. As compared to a traditional web search engine, where the challenge lies in finding the right document to satisfy a user's information need, the challenge in a social search engine like Aardvark lies in finding the right person to satisfy a user's information need. Further, while trust in a traditional search engine is based on authority, in a social search engine like Aardvark, trust is based on intimacy.

The differences in how people find information in a library versus a village suggest some useful principles for designing a social search engine. In a library, people use keywords to search, the knowledge base is created by a small number of content publishers before the questions are asked, and trust is based on authority. In a village, by contrast, people use natural language to ask questions, answers are generated in real-time by anyone in the community, and trust is based on intimacy. These properties have cascading effects: for example, real-time responses from socially proximal responders tend to elicit (and work well for) highly contextualized and subjective queries. For example, the query "Do you have ...

What Is Pairs Trading

Lync: PageRank, LocalRank, Hilltop, HITS, AT(k), NORM(p), more »

Searching for a better search… LYNC Search. I'm Feeling Luckier. Radhika Gupta, Nalin Moniz, Sudipto Guha. CSE 401 Senior Design. April 11th, 2005.

"for" is a very common word and was not included in your search. [details]

Table of Contents: Pages 1 – 31 for Searching for a better search (2 Semesters)

Abstract: A summary of the topics covered in our paper. Pages 1 – 2 - Cached - Similar pages

Introduction and Definitions: An introduction to web searching algorithms and the Link Analysis Rank Algorithms space as well as a detailed list of key definitions used in the paper. Pages 3 – 7 - Cached - Similar pages

Survey of the Literature: A detailed survey of the different classes of Link Analysis Rank algorithms including PageRank based algorithms, local interconnectivity algorithms, and HITS and the affiliated family of algorithms. This section also includes a detailed discussion of the theoretical drawbacks and benefits of each algorithm. Pages 8 – 31 - Cached - Similar pages

Page Ranking Algorithms: An examination of the idea of a simple page rank algorithm and some of the theoretical difficulties with page ranking, as well as a discussion and analysis of Google's PageRank algorithm.

Sponsored Links: PROBLEM Solved. Beta Power! www.PROBLEM.com. Free CANDDE. Come get it. Don't be left dangling! www.CANDDE.gov. A PAT on the Back. The Best Authorities on Every Subject. www.PATK.edu. Paging PAGE. The shortest path to ...

Henry Bramwell, President, Visionary Graphics

#sbdcbootcamp

By understanding who your target audience is and where they spend their time, you can build an organic presence by focusing on content that speaks to their needs and wants.

What Is SEO? Search engine optimization (SEO) is the process of affecting the visibility of a website or a web page in a search engine's unpaid results, often referred to as "natural," "organic," or "earned" results.

SEO Approach: Black Hat vs. White Hat

Black Hat SEO
• Exploits weaknesses in search engine algorithms to obtain high rankings for a website.
• Keyword stuffing, cloaking, hidden text and links, and link spam.
• Quick, unpredictable and short-lasting growth in rankings.

White Hat SEO
• Utilizes techniques and methods to improve search rankings in accordance with the search engine's guidelines.
• High-quality content, website HTML optimization, relevant inbound links, social authority.
• Steady, gradual, lasting growth in rankings.

Meta Tags: Meta tags explain to search engines and (sometimes) searchers themselves what your page is about. Meta tags are inserted into every page of your website code.

How SEO Has Changed

Old SEO: focus on singular keywords. New SEO: focus on long tail keywords, keyword intent and searcher's needs.

• When search engines were first created, early search marketers were able to easily find ways to make the search engine think that their client's site was the one that should rank well.
• In some cases it was as simple as putting in some code on the website called a meta keywords tag. The meta keywords tag would tell search engines what the page was about.
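To make the meta tag description above concrete, here is a minimal Python sketch, using only the standard library, of how a program might read the title and named meta tags from a page. It is an illustration only and not how any particular search engine works; the names `MetaTagCollector` and `read_meta` and the sample markup in the usage note are assumptions made for this example.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Gather the <title> text and every <meta name=... content=...> pair."""

    def __init__(self):
        super().__init__()
        self.title_parts = []
        self.meta = {}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attr.get("name"):
            # Meta names are case-insensitive, so normalise them to lower case.
            self.meta[attr["name"].lower()] = attr.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title_parts.append(data)


def read_meta(html):
    """Return a dict with the page title plus each named meta tag's content."""
    collector = MetaTagCollector()
    collector.feed(html)
    return {"title": "".join(collector.title_parts).strip(), **collector.meta}
```

For a page whose head contains `<meta name="keywords" content="cheap airline tickets, flights">`, `read_meta(page)["keywords"]` returns that content string, which is the kind of signal the meta keywords tag was originally meant to provide.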