Computer Language & Compilers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Derivatives of Parsing Expression Grammars

Derivatives of Parsing Expression Grammars Aaron Moss Cheriton School of Computer Science University of Waterloo Waterloo, Ontario, Canada [email protected] This paper introduces a new derivative parsing algorithm for recognition of parsing expression gram- mars. Derivative parsing is shown to have a polynomial worst-case time bound, an improvement on the exponential bound of the recursive descent algorithm. This work also introduces asymptotic analysis based on inputs with a constant bound on both grammar nesting depth and number of back- tracking choices; derivative and recursive descent parsing are shown to run in linear time and constant space on this useful class of inputs, with both the theoretical bounds and the reasonability of the in- put class validated empirically. This common-case constant memory usage of derivative parsing is an improvement on the linear space required by the packrat algorithm. 1 Introduction Parsing expression grammars (PEGs) are a parsing formalism introduced by Ford [6]. Any LR(k) lan- guage can be represented as a PEG [7], but there are some non-context-free languages that may also be represented as PEGs (e.g. anbncn [7]). Unlike context-free grammars (CFGs), PEGs are unambiguous, admitting no more than one parse tree for any grammar and input. PEGs are a formalization of recursive descent parsers allowing limited backtracking and infinite lookahead; a string in the language of a PEG can be recognized in exponential time and linear space using a recursive descent algorithm, or linear time and space using the memoized packrat algorithm [6]. PEGs are formally defined and these algo- rithms outlined in Section 3. -

Adaptive LL(*) Parsing: the Power of Dynamic Analysis

Adaptive LL(*) Parsing: The Power of Dynamic Analysis Terence Parr Sam Harwell Kathleen Fisher University of San Francisco University of Texas at Austin Tufts University [email protected] [email protected] kfi[email protected] Abstract PEGs are unambiguous by definition but have a quirk where Despite the advances made by modern parsing strategies such rule A ! a j ab (meaning “A matches either a or ab”) can never as PEG, LL(*), GLR, and GLL, parsing is not a solved prob- match ab since PEGs choose the first alternative that matches lem. Existing approaches suffer from a number of weaknesses, a prefix of the remaining input. Nested backtracking makes de- including difficulties supporting side-effecting embedded ac- bugging PEGs difficult. tions, slow and/or unpredictable performance, and counter- Second, side-effecting programmer-supplied actions (muta- intuitive matching strategies. This paper introduces the ALL(*) tors) like print statements should be avoided in any strategy that parsing strategy that combines the simplicity, efficiency, and continuously speculates (PEG) or supports multiple interpreta- predictability of conventional top-down LL(k) parsers with the tions of the input (GLL and GLR) because such actions may power of a GLR-like mechanism to make parsing decisions. never really take place [17]. (Though DParser [24] supports The critical innovation is to move grammar analysis to parse- “final” actions when the programmer is certain a reduction is time, which lets ALL(*) handle any non-left-recursive context- part of an unambiguous final parse.) Without side effects, ac- free grammar. ALL(*) is O(n4) in theory but consistently per- tions must buffer data for all interpretations in immutable data forms linearly on grammars used in practice, outperforming structures or provide undo actions. -

Lecture 3: Recursive Descent Limitations, Precedence Climbing

Lecture 3: Recursive descent limitations, precedence climbing David Hovemeyer September 9, 2020 601.428/628 Compilers and Interpreters Today I Limitations of recursive descent I Precedence climbing I Abstract syntax trees I Supporting parenthesized expressions Before we begin... Assume a context-free struct Node *Parser::parse_A() { grammar has the struct Node *next_tok = lexer_peek(m_lexer); following productions on if (!next_tok) { the nonterminal A: error("Unexpected end of input"); } A → b C A → d E struct Node *a = node_build0(NODE_A); int tag = node_get_tag(next_tok); (A, C, E are if (tag == TOK_b) { nonterminals; b, d are node_add_kid(a, expect(TOK_b)); node_add_kid(a, parse_C()); terminals) } else if (tag == TOK_d) { What is the problem node_add_kid(a, expect(TOK_d)); node_add_kid(a, parse_E()); with the parse function } shown on the right? return a; } Limitations of recursive descent Recall: a better infix expression grammar Grammar (start symbol is A): A → i = A T → T*F A → E T → T/F E → E + T T → F E → E-T F → i E → T F → n Precedence levels: Nonterminal Precedence Meaning Operators Associativity A lowest Assignment = right E Expression + - left T Term * / left F highest Factor No Parsing infix expressions Can we write a recursive descent parser for infix expressions using this grammar? Parsing infix expressions Can we write a recursive descent parser for infix expressions using this grammar? No Left recursion Left-associative operators want to have left-recursive productions, but recursive descent parsers can’t handle left recursion -

Packrat Parsers Can Support Left Recursion

Packrat Parsers Can Support Left Recursion Alessandro Warth, James R. Douglass, Todd Millstein To be published as part of ACM SIGPLAN 2008 Workshop on Partial VPRI Technical Report TR-2007-002 Evaluation and Program Manipulation (PEPM ’08) January 2008. Viewpoints Research Institute, 1209 Grand Central Avenue, Glendale, CA 91201 t: (818) 332-3001 f: (818) 244-9761 Packrat Parsers Can Support Left Recursion ∗ Alessandro Warth James R. Douglass Todd Millstein University of California, Los Angeles The Boeing Company University of California, Los Angeles and Viewpoints Research Institute [email protected] [email protected] [email protected] Abstract ing [10], eliminates the need for moded lexers [9] when combin- Packrat parsing offers several advantages over other parsing tech- ing grammars (e.g., in Domain-Specific Embedded Language niques, such as the guarantee of linear parse times while supporting (DSEL) implementations). backtracking and unlimited look-ahead. Unfortunately, the limited Unfortunately, “like other recursive descent parsers, packrat support for left recursion in packrat parser implementations makes parsers cannot support left-recursion” [6], which is typically used them difficult to use for a large class of grammars (Java’s, for exam- to express the syntax of left-associative operators. To better under- ple). This paper presents a modification to the memoization mech- stand this limitation, consider the following rule for parsing expres- anism used by packrat parser implementations that makes it possi- sions: ble for them to support (even indirectly or mutually) left-recursive rules. While it is possible for a packrat parser with our modification expr ::= <expr> "-" <num> / <num> to yield super-linear parse times for some left-recursive grammars, Note that the first alternative in expr begins with expr itself. -

Parsing, Lexical Analysis, and Tools

CS 345 Parsing, Lexical Analysis, and Tools William Cook 1 2 Parsing techniques Top-Down • Begin with start symbol, derive parse tree • Match derived non-terminals with sentence • Use input to select from multiple options Bottom Up • Examine sentence, applying reductions that match • Keep reducing until start symbol is derived • Collects a set of tokens before deciding which production to use 3 Top-Down Parsing Recursive Descent • Interpret productions as functions, nonterminals as calls • Must predict which production will match – looks ahead at a few tokens to make choice • Handles EBNF naturally • Has trouble with left-recursive, ambiguous grammars – left recursion is production of form E ::= E … Also called LL(k) • scan input Left to right • use Left edge to select productions • use k symbols of look-ahead for prediction 4 Recursive Descent LL(1) Example Example E ::= E + E | E – E | T note: left recursion T ::= N | ( E ) N ::= { 0 | 1 | … | 9 } { … } means repeated Problems: • Can’t tell at beginning whether to use E + E or E - E – would require arbitrary look-ahead – But it doesn’t matter because they both begin with T • Left recursion in E will never terminate… 5 Recursive Descent LL(1) Example Example E ::= T [ + E | – E ] [ … ] means optional T ::= N | ( E ) N ::= { 0 | 1 | … | 9 } Solution • Combine equivalent forms in original production: E ::= E + E | E – E | T • There are algorithms for reorganizing grammars – cf. Greibach normal form (out of scope of this course) 6 LL Parsing Example E•23+7 E ::= T [ + E | – E ] T•23+7 T ::= N | ( E ) N ::= { 0 | 1 | … | 9 } N•23+7 23 +7 • • = Current location 23+•7 Preduction 23+E•7 indent = function call 23+T•7 23+N•7 23+7 • Intuition: Growing the parse tree from root down towards terminals. -

CS412/CS413 Introduction to Compilers Tim Teitelbaum

CS412/CS413 Introduction to Compilers Tim Teitelbaum Lecture 7: LL parsing and AST construction February 4, 2008 CS 412/413 Spring 2008 Introduction to Compilers 1 LL(1) Parsing •Last time: – how to build a parsing table for an LL(1) grammar (use FIRST/FOLLOW sets) – how to construct a recursive-descent parser from the parsing table • Grammars may not be LL(1) – Use left factoring when grammar has multiple productions starting with the same symbol. – Other problematic cases? CS 412/413 Spring 2008 Introduction to Compilers 2 if-then-else • How to write a grammar for if stmts? S → if (E) S S → if (E) S else S S → other Is this grammar ok? CS 412/413 Spring 2008 Introduction to Compilers 3 No—Ambiguous! •How to parse? S → if (E) S S → if (E) S else S if (E1) if (E2) S1 else S2 S → other S → if (E) S if → if (E) if (E) S else S E1 if E2 S1 S2 S → if (E) S else S if → if (E) if (E) S else S E1 if S2 E 2 S1 Which “if” is the “else” attached to? CS 412/413 Spring 2008 Introduction to Compilers 4 Grammar for Closest-if Rule • Want to rule out if (E) if (E) S else S • Impose that unmatched “if” statements occur only on the “else” clauses statement → matched | unmatched matched → if (E) _______ else __________ | other unmatched → if (E) ________ | if (E) _______ else __________ CS 412/413 Spring 2008 Introduction to Compilers 5 Grammar for Closest-if Rule • Want to rule out if (E) if (E) S else S • Impose that unmatched “if” statements occur only on the “else” clauses statement → matched | unmatched matched → if (E) matched else matched | other -

Parsing I: Earley Parser

Parsing I: Earley Parser CMSC 35100 Natural Language Processing May 1, 2003 Roadmap • Parsing: – Accepting & analyzing – Combining top-down & bottom-up constraints • Efficiency – Earley parsers • Probabilistic CFGs – Handling ambiguity – more likely analyses – Adding probabilities • Grammar • Parsing: probabilistic CYK • Learning probabilities: Treebanks & Inside-Outside • Issues with probabilities Representation: Context-free Grammars • CFGs: 4-tuple – A set of terminal symbols: Σ – A set of non-terminal symbols: N – A set of productions P: of the form A -> α • Where A is a non-terminal and α in ( Σ U N)* – A designated start symbol S • L = W|w in Σ* and S=>*w – Where S=>*w means S derives w by some seq Representation: Context-free Grammars • Partial example – Σ: the, cat, dog, bit, bites, man – N: NP, VP, AdjP, Nominal – P: S-> NP VP; NP -> Det Nom; Nom-> N Nom|N –S S NP VP Det Nom V NP N Det Nom N The dog bit the man Parsing Goals • Accepting: – Legal string in language? • Formally: rigid • Practically: degrees of acceptability • Analysis – What structure produced the string? • Produce one (or all) parse trees for the string Parsing Search Strategies • Top-down constraints: – All analyses must start with start symbol: S – Successively expand non-terminals with RHS – Must match surface string • Bottom-up constraints: – Analyses start from surface string – Identify POS – Match substring of ply with RHS to LHS – Must ultimately reach S Integrating Strategies • Left-corner parsing: – Top-down parsing with bottom-up constraints – Begin -

Modular and Efficient Top-Down Parsing for Ambiguous Left

Modular and Efficient Top-Down Parsing for Ambiguous Left-Recursive Grammars Richard A. Frost and Rahmatullah Hafiz Paul C. Callaghan School of Computer Science Department of Computer Science University of Windsor University of Durham Canada U.K. [email protected] [email protected] Abstract parser combinators, and Frost 2006 for a discussion of their use in natural-language processing) and def- In functional and logic programming, inite clause grammars (DCGs) respectively. For ex- parsers can be built as modular executable ample, consider the following grammar, in which specifications of grammars, using parser s stands for sentence, np for nounphrase, vp for combinators and definite clause grammars verbphrase, and det for determiner: respectively. These techniques are based on s ::=npvp top-down backtracking search. Commonly np ::= noun | det noun vp ::= verb np used implementations are inefficient for det ::= 'a' | 't' ambiguous languages, cannot accommodate noun ::= 'i' | 'm' | 'p' | 'b' left-recursive grammars, and require expo- verb ::= 's' nential space to represent parse trees for A set of parsers for this grammar can be con- highly ambiguous input. Memoization is structed in the Haskell functional programming lan- known to improve efficiency, and work by guage as follows, where term, `orelse`, and other researchers has had some success in `thenS` are appropriately-defined higher-order accommodating left recursion. This paper functions called parser combinators. (Note that combines aspects of previous approaches backquotes surround infix functions in Haskell). and presents a method by which parsers can s = np `thenS` vp be built as modular and efficient executable np = noun `orelse` (det `thenS` noun) vp = verb `thenS` np specifications of ambiguous grammars det = term 'a' `orelse` term 't' containing unconstrained left recursion. -

On the Covering Problem for Left-Recursive

CORE Metadata, citation and similar papers at core.ac.uk Provided by Elsevier - Publisher Connector Theoretical Computer Science II ( 1979) l-l 1 @ North-Holland Publishing Company ON THE COVERING PROBLEM FOR LEFT-RECURSIVE Eljas SOISALON-SOININEN Department of Computer Science, University of Helsinki, Tiiiiliinkatu 1 I, SF~OOIOOHeisin ki IO. Finland Communicated by Arto Salomaa Received 15 December 1977 Abstract. Two new proofs of the fact that proper left-recursive grammars can be covered by non-left-recursive grammars are presented. The first proof is based on a simple trick inspired by the over ten-year-old idea that semantic Information hanged on tkc: productions can be carried along in the transformations. The second proof involves a new method for eliminating kft recursion from a proper context-free grammar in such a way thyr the covering grammar is obtained directly. 1. Introduction In a recent paper ‘51,,__ Nijholt pointed out that some conjectures expressed in [l] and [3] on the elimination of left recursion from context-free grammars are not valid: Nijholt [S] gave an algorithm that removes left recursion from proper context-free grammars in such a way that the resulting grammar right covers the original grammar; i.e. the right parses in the original grammar are homomorphic images of the right parses in the resulting grammar without left recursion” In addition, since the motivation of the elimination of left recursion is that simple tog-down parsing algorithms do not tolerate left recursion, Nijholt [ 51 proved by an additional transformation that left-recursive proper grammars can be, as he says, l$t-to-right/ covered by non-left-recursive grammars; i.e. -

Generalised Recursive Descent Parsing and Follow-Determinism

Generalised Recursive Descent Parsing and Follow-Determinism Adrian Johnstone and Elizabeth Scott* Royal Holloway, University of London Abstract. This paper presents a construct for mapping arbitrary non- left recursive context-free grammars into recursive descent parsers that: handle ambiguous granunars correctly; perform with LL(1 ) efficiency on LL (1) grammars; allow straightforward implementation of both inherit ed and synthesized attributes; and allow semantic actions to be added at any point in the grammar. We describe both the basic algorithm and a tool, GRDP, which generates parsers which use this technique. Modifi- cations of the basic algorithm to improve efficiency lead to a discussion of/ollow-deterrainisra, a fundamental property that gives insights into the behavioux of both LL and LR parsers. 1 Introduction Practical parsers for computer languages need to display near-linear parse times because we routinely expect compilers and interpreters to process inputs that are many thousands of language tokens long. Presently available practical (near- linear) parsing methods impose restrictions on their input grammars which have led to a 'tuning' of high level language syntax to the available efficient pars- ing algorithms, particularly LR bottom-up parsing and, for the Pascal family languages, to LL(1) parsers. The key observation was that, although left to themselves humans would use notations that were very difficult to parse, there exist notations that could be parsed in time proportional to the length of the string being parsed whilst still being easily comprehended by programmers. It is our view that this process has gone a little too far, and the feedback from theory into engineering practice has become a constraint on language design, and particularly on the design of prototype and production language parsers. -

Parsing Expression Grammars Made Practical

Parsing Expression Grammars Made Practical Nicolas Laurent ∗ Kim Mens ICTEAM, Universite´ catholique de Louvain, ICTEAM, Universite´ catholique de Louvain, Belgium Belgium [email protected] [email protected] Abstract this task. These include parser generators (like the venerable Parsing Expression Grammars (PEGs) define languages by Yacc) and more recently parser combinator libraries [5]. specifying a recursive-descent parser that recognises them. Most of the work on parsing has been built on top of The PEG formalism exhibits desirable properties, such as Chomsky’s context-free grammars (CFGs). Ford’s parsing closure under composition, built-in disambiguation, unifica- expression grammars (PEGs) [3] are an alternative formal- tion of syntactic and lexical concerns, and closely matching ism exhibiting interesting properties. Whereas CFGs use programmer intuition. Unfortunately, state of the art PEG non-deterministic choice between alternative constructs, parsers struggle with left-recursive grammar rules, which are PEGs use prioritized choice. This makes PEGs unambiguous not supported by the original definition of the formalism and by construction. This is only one of the manifestations of a can lead to infinite recursion under naive implementations. broader philosophical difference between CFGs and PEGs. Likewise, support for associativity and explicit precedence CFGs are generative: they describe a language, and the is spotty. To remedy these issues, we introduce Autumn, a grammar itself can be used to enumerate the set of sen- general purpose PEG library that supports left-recursion, left tences belonging to that language. PEGs on the other hand and right associativity and precedence rules, and does so ef- are recognition-based: they describe a predicate indicating ficiently. -

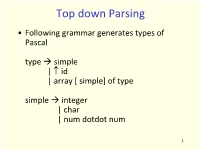

Top Down Parsing • Following Grammar Generates Types of Pascal

Top down Parsing • Following grammar generates types of Pascal type simple | id | array [ simple] of type simple integer | char | num dotdot num 1 Example … • Construction of a parse tree is done by starting the root labeled by a start symbol • repeat following two steps – at a node labeled with non terminal A select one of the productions of A and construct children nodes (Which production?) – find the next node at which subtree is Constructed (Which node?) 2 • Parse array [ num dotdot num ] of integer Start symbol type Expanded using the rule type simple simple • Cannot proceed as non terminal “simple” never generates a string beginning with token “array”. Therefore, requires back-tracking. • Back-tracking is not desirable, therefore, take help of a “look-ahead” token. The current token is treated as look- ahead token. (restricts the class of grammars) 3 array [ num dotdot num ] of integer Start symbol look-ahead Expand using the rule type type array [ simple ] of type array [ simple ] of type Left most non terminal Expand using the rule Simple num dotdot num num dotdot num simple all the tokens exhausted Left most non terminal integer Parsing completed Expand using the rule type simple Left most non terminal Expand using the rule simple integer 4 Recursive descent parsing First set: Let there be a production A then First() is the set of tokens that appear as the first token in the strings generated from For example : First(simple) = {integer, char, num} First(num dotdot num) = {num} 5 Define a procedure for each non terminal