LUJAN-THESIS-2020.Pdf (4.693Mb)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Songs by Title

Songs by Title Title Artist Title Artist #1 Goldfrapp (Medley) Can't Help Falling Elvis Presley John Legend In Love Nelly (Medley) It's Now Or Never Elvis Presley Pharrell Ft Kanye West (Medley) One Night Elvis Presley Skye Sweetnam (Medley) Rock & Roll Mike Denver Skye Sweetnam Christmas Tinchy Stryder Ft N Dubz (Medley) Such A Night Elvis Presley #1 Crush Garbage (Medley) Surrender Elvis Presley #1 Enemy Chipmunks Ft Daisy Dares (Medley) Suspicion Elvis Presley You (Medley) Teddy Bear Elvis Presley Daisy Dares You & (Olivia) Lost And Turned Whispers Chipmunk Out #1 Spot (TH) Ludacris (You Gotta) Fight For Your Richard Cheese #9 Dream John Lennon Right (To Party) & All That Jazz Catherine Zeta Jones +1 (Workout Mix) Martin Solveig & Sam White & Get Away Esquires 007 (Shanty Town) Desmond Dekker & I Ciara 03 Bonnie & Clyde Jay Z Ft Beyonce & I Am Telling You Im Not Jennifer Hudson Going 1 3 Dog Night & I Love Her Beatles Backstreet Boys & I Love You So Elvis Presley Chorus Line Hirley Bassey Creed Perry Como Faith Hill & If I Had Teddy Pendergrass HearSay & It Stoned Me Van Morrison Mary J Blige Ft U2 & Our Feelings Babyface Metallica & She Said Lucas Prata Tammy Wynette Ft George Jones & She Was Talking Heads Tyrese & So It Goes Billy Joel U2 & Still Reba McEntire U2 Ft Mary J Blige & The Angels Sing Barry Manilow 1 & 1 Robert Miles & The Beat Goes On Whispers 1 000 Times A Day Patty Loveless & The Cradle Will Rock Van Halen 1 2 I Love You Clay Walker & The Crowd Goes Wild Mark Wills 1 2 Step Ciara Ft Missy Elliott & The Grass Wont Pay -

Love & Wedding

651 LOVE & WEDDING THE O’NEILL PLANNING RODGERS BROTHERS – THE MUSIC & ROMANCE A DAY TO REMEMBER FOR YOUR WEDDING 35 songs, including: All at PIANO MUSIC FOR Book/CD Pack Once You Love Her • Do YOUR WEDDING DAY Cherry Lane Music I Love You Because You’re Book/CD Pack The difference between a Beautiful? • Hello, Young Minnesota brothers Tim & good wedding and a great Lovers • If I Loved You • Ryan O’Neill have made a wedding is the music. With Isn’t It Romantic? • My Funny name for themselves playing this informative book and Valentine • My Romance • together on two pianos. accompanying CD, you can People Will Say We’re in Love They’ve sold nearly a million copies of their 16 CDs, confidently select classical music for your wedding • We Kiss in a Shadow • With a Song in My Heart • performed for President Bush and provided music ceremony regardless of your musical background. Younger Than Springtime • and more. for the NBC, ESPN and HBO networks. This superb The book includes piano solo arrangements of each ______00313089 P/V/G...............................$16.99 songbook/CD pack features their original recordings piece, as well as great tips and tricks for planning the of 16 preludes, processionals, recessionals and music for your entire wedding day. The CD includes ROMANCE: ceremony and reception songs, plus intermediate to complete performances of each piece, so even if BOLEROS advanced piano solo arrangements for each. Includes: you’re not familiar with the titles, you can recognize FAVORITOS Air on the G String • Ave Maria • Canon in D • Jesu, your favorites with just one listen! The book is 48 songs in Spanish, Joy of Man’s Desiring • Ode to Joy • The Way You divided into selections for preludes, processionals, including: Adoro • Always Look Tonight • The Wedding Song • and more, with interludes, recessionals and postludes, and contains in My Heart • Bésame bios and photos of the O’Neill Brothers. -

RCA Records to Release American Idol: Greatest Moments on October 1

THE RCA RECORDS LABEL ___________________________________________ RCA RECORDS TO RELEASE AMERICAN IDOL: GREATEST MOMENTS ON OCTOBER 1 On the heels of crowning Kelly Clarkson as America's newest pop superstar, RCA Records is proud to announce the release of American Idol: Greatest Moments on Oct. 1. The first full-length album of material from Fox's smash summer television hit, "American Idol," will include four songs by Clarkson, two by runner-up Justin Guarini, a song each by the remaining eight finalists, and “California Dreamin’” performed by all ten. All 14 tracks on American Idol: Greatest Moments, produced by Steve Lipson (Backstreet Boys, Annie Lennox), were performed on "American Idol" during the final weeks of the reality-based television barnburner. In addition to Kelly and Justin, the show's remaining eight finalists featured on the album include Nikki McKibbon, Tamyra Gray, EJay Day, RJ Helton, AJ Gil, Ryan Starr, Christina Christian, and Jim Verraros. Producer Steve Lipson, who put the compilation together in an unprecedented two-weeks time, finds a range of pop gems on the album. "I think Kelly's version of ‘Natural Woman' is really strong. Her vocals are brilliant. She sold me on the song completely. I like Christina's 'Ain't No Sunshine.' I think she got the emotion of it across well. Nikki is very good as well -- very underestimated. And Tamyra is brilliant too so there's not much more to say about her." RCA Records will follow American Idol: Greatest Moments with the debut album from “American Idol” champion Kelly Clarkson in the first quarter of 2003. -

D3.2 – Predictive Analytics and Recommendation Framework V2

D3.2 – Predictive analytics and recommendation framework v2 August 31st, 2019 Authors: Thomas Lidy (MMAP), Adrian Lecoutre (MMAP), Khalil Boulkenafet (MMAP), Manos Schinas (CERTH), Christos Koutlis (CERTH), Symeon Papadopoulos (CERTH) Contributor/s: Vasiliki Gkatziaki (CERTH), Emmanouil Krasanakis (CERTH), Polychronis Charitidis (CERTH) Deliverable Lead Beneficiary: MMAP This project has been co-funded by the HORIZON 2020 Programme of the European Union. This publication reflects the views only of the author, and the Commission cannot be held responsible for any use, which may be made of the information contained therein. Multimodal Predictive Analytics and Recommendation Services for the Music Industry 2 Deliverable number or D3.2 Predictive analytics and recommendation framework supporting document title Type Report Dissemination level Public Publication date 31-08-2019 Author(s) Thomas Lidy (MMAP), Adrian Lecoutre (MMAP), Khalil Boulkenafet (MMAP), Manos Schinas (CERTH), Christos Koutlis (CERTH), Symeon Papadopoulos (CERTH) Contributor(s) Emmanouil Krasanakis (CERTH), Vasiliki Gkatziaki (CERTH), Polychronis Charitidis (CERTH) Reviewer(s) Rémi Mignot (IRCAM) Keywords Track popularity, artist popularity, music genre popularity, track recognition estimation, emerging artist discovery, popularity forecasting Website www.futurepulse.eu CHANGE LOG Version Date Description of change Responsible V0.1 25/06/2019 First deliverable draft version, table of contents Thomas Lidy (MMAP) V0.2 18/07/2019 Main contribution on track recognition estimation -

By Song Title

Solar Entertainments Karaoke Song Listing By Song Title 3 Britney Spears 2000s 17 MK 2010s 22 Lily Allen 2000s 39 Queen 1970s 679 Fetty Wap 2010s 711 Beyonce 2010s 1973 James Blunt 2000s 1999 Prince 1980s 2002 Anne Marie 2010s #ThatPower Will.I.Am & Justin Bieber 2010s 007 (Shanty Town) Desmond Dekker & The Aces 1960s 1 800 273 8255 Logic & Alessia Cara & Khalid 2010s 1 Thing Amerie 2000s 10/10 Paolo Nutini 2010s 10000 Hours Dan & Shay & Justin Bieber 2010s 18 & Life Skid Row 1980s 2 Become 1 Spice Girls 1990s 2 Hearts Kylie Minogue 2000s 20th Century Boy T Rex 1970s 21 Guns Green Day 2000s 21st Century Breakdown Green Day 2000s 21st Century Christmas Cliff Richard 2000s 22 (Twenty Two) Taylor Swift 2010s 24K Magic Bruno Mars 2010s 2U David Guetta & Justin Bieber 2010s 3 AM Busted 2000s 3 Nights Dominic Fike 2010s 3 Words Cheryl Cole 2000s 30 Days Saturdays 2010s 34+35 Ariana Grande 2020s 4 44 Jay Z 2010s 4 In The Morning Gwen Stefani 2000s 4 Minutes Madonna & Justin Timberlake 2000s 5 Colours In Her Hair McFly 2000s 5,6,7,8 Steps 1990s 500 Miles (I'm Gonna Be) Proclaimers 1980s 7 Rings Ariana Grande 2010s 7 Things Miley Cyrus 2000s 7 Years Lukas Graham 2010s 74 75 Connells 1990s 9 To 5 Dolly Parton 1980s 90 Days Pink & Wrabel 2010s 99 Red Balloons Nena 1980s A Bad Dream Keane 2000s A Blossom Fell Nat King Cole 1950s A Change Would Do You Good Sheryl Crow 1990s A Cover Is Not The Book Mary Poppins Returns Soundtrack 2010s A Design For Life Manic Street Preachers 1990s A Different Beat Boyzone 1990s A Different Corner George Michael 1980s -

The Brookings Institution Responding to an Historic

CRISIS-2009/03/13 1 THE BROOKINGS INSTITUTION RESPONDING TO AN HISTORIC ECONOMIC CRISIS: THE OBAMA PROGRAM Washington, D.C. Friday, March 13, 2009 PARTICIPANTS: Welcome and Introduction: MARTIN BAILY, Senior Fellow The Brookings Institution Featured Speaker: LAWRENCE SUMMERS, Director White House National Economic Council * * * * * P R O C E E D I N G S ANDERSON COURT REPORTING 706 Duke Street, Suite 100 Alexandria, VA 22314 Phone (703) 519-7180 Fax (703) 519-7190 CRISIS-2009/03/13 2 MR. BAILY: Welcome to Brookings. This is really a great occasion. My name is Martin Baily and I lead the Business and Public Policy Initiative here at Brookings. We're very fortunate that we had Christine Romer this week and now Larry Summers. It's a great pleasure and privilege. The format is I'm just going to give a short introduction, Larry is going to give his talk, and then he has agreed to take questions. Larry earned his Ph.D. at Harvard and in 1983 at age 28 became the youngest tenured professor in Harvard's history. He was Chief Economist at the World Bank where he played a key role in designing strategies to assist developing countries. He then served as Under Secretary of the Treasury for International Affairs, Deputy Secretary of the Treasury, and then from 1999 to 2001 as the seventy-first United States Secretary of the Treasury. I was lucky enough to be one of his colleagues in the administration at least during some of those years. After the Treasury he served as the twenty-seventh President of Harvard University, and until joining the Obama Administration he was the Charles W. -

$40 a Day Episode Title: Nantucket Episode # AD1D01 Script Version: 1st Writer: Don Colliver

$40 A Day Episode Title: Nantucket Episode # AD1D01 Script Version: 1st Writer: Don Colliver COLD OPEN N40104 04.13.21, RR OC VO: Hi everybody, I’m Rachael Ray N40106 11.45.23, Flower to Nan sign and today I’m visiting Nantucket N40110 13.41.52, Whaling paint4 Island, Massachusetts. Now, this N40106 11.47.29, Walking on cbblest. formal whaling village is known for its N40107 12.04.03, Old cottage cobblestone streets, and its cute N40109 14.49.15, Driving past cottage cottages and its many lighthouses. All N40104 04.27.59, Lighthouse CU this New England charm draws N40109 14.43.44, Lighthouse pull vacationers from all around who come N40107 12.00.32, Arno’s rest. Sign here to sail, to swim, to shop, and to N40109 14.51.58, Toppers sign dine. Nantucket has many marvelous N40107 12.10.22, Outdoor café restaurants serving up some fabulous N40106 11.44.15, Kids window shop fare, but how fair are the prices? N40106 11.47.51, Mob passes bldg. N40108 15.06.51, Caviar beauty N40108 15.11.03, Lady dines N40104 04.14.09, RR OC RR: Well, I’m about to find out, cause N40104 04.15.42, $ CU I’ve got just 24 hours and 40 dollars. MONEY-MONEY-MONEY! N40104 04.14.13, RR OC RR: Will I find a whale of a deal, or will my budget sleep with the fishes? You’ve got to come back to find out. GRPX OPENING TITLES MUSIC N40101 01.37.18, RR OC RR: Yum-oh. -

The Symbolic Rape of Representation: a Rhetorical Analysis of Black Musical Expression on Billboard's Hot 100 Charts

THE SYMBOLIC RAPE OF REPRESENTATION: A RHETORICAL ANALYSIS OF BLACK MUSICAL EXPRESSION ON BILLBOARD'S HOT 100 CHARTS Richard Sheldon Koonce A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY December 2006 Committee: John Makay, Advisor William Coggin Graduate Faculty Representative Lynda Dee Dixon Radhika Gajjala ii ABSTRACT John J. Makay, Advisor The purpose of this study is to use rhetorical criticism as a means of examining how Blacks are depicted in the lyrics of popular songs, particularly hip-hop music. This study provides a rhetorical analysis of 40 popular songs on Billboard’s Hot 100 Singles Charts from 1999 to 2006. The songs were selected from the Billboard charts, which were accessible to me as a paid subscriber of Napster. The rhetorical analysis of these songs will be bolstered through the use of Black feminist/critical theories. This study will extend previous research regarding the rhetoric of song. It also will identify some of the shared themes in music produced by Blacks, particularly the genre commonly referred to as hip-hop music. This analysis builds upon the idea that the majority of hip-hop music produced and performed by Black recording artists reinforces racial stereotypes, and thus, hegemony. The study supports the concept of which bell hooks (1981) frequently refers to as white supremacist capitalist patriarchy and what Hill-Collins (2000) refers to as the hegemonic domain. The analysis also provides a framework for analyzing the themes of popular songs across genres. The genres ultimately are viewed through the gaze of race and gender because Black male recording artists perform the majority of hip-hop songs. -

JOHN HEBER STANSFIELD the Story of a Shepherd Artist

JOHN HEBER STANSFIELD The Story of a Shepherd Artist by Jesse Serena Stansfield Christensen and Jacqueline Christensen Larsen Contents Preface vii Chapter One. The Stansfield Lineage, 1822-1872 1 Chapter Two. The Danish Lineage, 1823-1886 5 Chapter Three. John Stansfield and Ane Sophie Nielsen, 1869-1904 8 Chapter Four. John Heber Stansfield and Elvina Elvira Jensen, 1905-1916 15 Chapter Five. Winters in California, 1917-1920 27 Chapter Six. The County Infirmary, 1921-1923 35 Chapter Seven. Realizing Success, 1924-1928 45 Chapter Eight. Returning Home, 1928-1935 56 Chapter Nine. Art Instructor at Snow College, 1935-1945 68 Chapter Ten. Echo Lodge, 1945-1953 83 Epilogue 101 Compiled List of Known Paintings Index 2 Utah I have trod the valleys of Utah, her rocks, her sand, and her sage. I have crossed her clear, cool mountain streams. I have watched her rivers rage. I have climbed her cedar-crested hills where the crow and the blue jay dwell. I have smelled the quaint, sweet odor of sage and chaparral. I have ascended her lofty mountains clothed in scarlet frocks. I have heard the eagle screeching from its perch upon the rocks. I have tarried in the aspen groves with hummingbird and bee. I have carved my name in the tender bark of the slender aspen tree. I have sauntered her shaded woodland among the fern and columbine. I have routed the bear, black and cinnamon tip, and the crouching mountain lion. I have eaten her wild strawberries, and I have eaten her cherrychokes. I have seen the gray ground strewn with acorns from the oaks. -

Karaoke with a Message – August 16, 2019 – 8:30PM

Another Protest Song: Karaoke with a Message – August 16, 2019 – 8:30PM a project of Angel Nevarez and Valerie Tevere for SOMA Summer 2019 at La Morenita Canta Bar (Puente de la Morena 50, 11870 Ciudad de México, MX) karaoke provided by La Morenita Canta Bar songbook edited by Angel Nevarez and Valerie Tevere ( ) 18840 (Ghost) Riders In The Sky Johnny Cash 10274 (I Am Not A) Robot Marina & Diamonds 00005 (I Can't Get No) Satisfaction Rolling Stones 17636 (I Hate) Everything About You Three Days Grace 15910 (I Want To) Thank You Freddie Jackson 05545 (I'm Not Your) Steppin' Stone Monkees 06305 (It's) A Beautiful Mornin' Rascals 19116 (Just Like) Starting Over John Lennon 15128 (Keep Feeling) Fascination Human League 04132 (Reach Up For The) Sunrise Duran Duran 05241 (Sittin' On) The Dock Of The Bay Otis Redding 17305 (Taking My) Life Away Default 15437 (Who Says) You Can't Have It All Alan Jackson # 07630 18 'til I Die Bryan Adams 20759 1994 Jason Aldean 03370 1999 Prince 07147 2 Legit 2 Quit MC Hammer 18961 21 Guns Green Day 004-m 21st Century Digital Boy Bad Religion 08057 21 Questions 50 Cent & Nate Dogg 00714 24 Hours At A Time Marshall Tucker Band 01379 25 Or 6 To 4 Chicago 14375 3 Strange Days School Of Fish 08711 4 Minutes Madonna 08867 4 Minutes Madonna & Justin Timberlake 09981 4 Minutes Avant 18883 5 Miles To Empty Brownstone 13317 500 Miles Peter Paul & Mary 00082 59th Street Bridge Song Simon & Garfunkel 00384 9 To 5 Dolly Parton 08937 99 Luftballons Nena 03637 99 Problems Jay-Z 03855 99 Red Balloons Nena 22405 1-800-273-8255 -

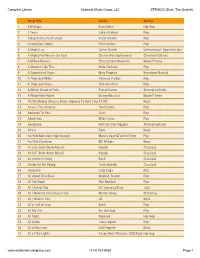

String Repertoire

Complete Library Ocdamia Music Group, LLC STRINGS (Duet, Trio Quartet) Song Title Artists Genres 1 24k Magic Bruno Mars Hip-Hop 2 7 Years Lukas Graham Pop 3 A Day in the Life of a Fool Frank Sinatra Pop 4 A Hard Day's Night Peter Sellers Pop 5 A Media Luz Carlos Gardel International - Spanish/Latin 6 A Mighty Fortress Is Our God Church Worship/Hymnal Christian/Catholic 7 A Million Dreams The Greatest Showman Movie/Theme 8 A Moment Like This Kelly Clarkson Pop 9 A Spoonful of Sugar Mary Poppins Broadway Musical 10 A Thousand Miles Vanessa Carlton Pop 11 A Thousand Years Christina Perri Pop 12 A Whiter Shade of Pale Procol Harum Alternative/Indie 13 A Whole New World Disney/Musical Movie/Theme 14 AC/DC Medley (Back in Black, Highway To Hell, Thunderstruck)AC/DC Rock 15 Across The Universe The Beatles Pop 16 Addicted To You Avicii Pop 17 Adore You Miley Cyrus Pop 18 Aeroplane Red Hot Chili Peppers Alternative/Indie 19 Africa Toto Rock 20 Ain't No Mountain High Enough Marvin Gaye &Tammi Terrel Pop 21 Ain't No Sunshine Bill Withers Rock 22 Air in D (from Water Music) Handel Classical 23 Air in F (from Water Music) Handel Classical 24 Air on the G string Bach Classical 25 Album for the Young Tschaikovsky Classical 26 Alejandro Lady Gaga Pop 27 All About That Bass Meghan Trainor Pop 28 All Fall Down One Republic Pop 29 All I Ask of You Arr. Lynne Latham Jazz 30 All I Want for Christmas Is You Mariah Carey Christmas 31 All I Want Is You U2 Rock 32 All is Full of Love Bjork Pop 33 All My Life Kci and Jojo Pop 34 All Night Beyoncé Hip-Hop 35 All -

Songs by Title

Andromeda II DJ Entertainment Songs by Title www.adj2.com Title Artist Title Artist #1 Nelly A Dozen Roses Monica (I Got That) Boom Boom Britney Spears & Ying Yang Twins A Heady Tale Fratellis, The (I Just Wanna) Fly Sugar Ray A House Is Not A Home Luther Vandross (I'll Never Be) Maria Magdalena Sandra A Long Walk Jill Scott + 1 Martin Solveig & Sam White A Man Without Love Englebert Humperdinck 1 2 3 Miami Sound Machine A Matter Of Trust Billy Joel 1 2 3 4 I Love You Plain White T's A Milli Lil Wayne 1 2 Step Ciara & Missy Elliott A Moment Like This,zip Leona 1 Thing Amerie A Moument Like This Kelly Clarkson 10 Million People Example A Mover El Cu La Sonora Dinamita 10,000 Hours Dan + Shay, Justin Bieber A Night To Remember High School Musical 3 10,000 Nights Alphabeat A Song For Mama Boyz Ii Men 100% Pure Love Crystal Waters A Sunday Kind Of Love Reba McEntire 12 Days Of X Various A Team, The Ed Sheeran 123 Gloria Estefan A Thin Line Between Love & Hate H-Town 1234 Feist A Thousand Miles Vanessa Carlton 16 @ War Karina A Thousand Years Christina Perri 17 Forever Metro Station A Whole New World Zayn, Zhavia Ward 19-2000 Gorillaz ABC Jackson 5 1973 James Blunt Abc 123 Levert 1982 Randy Travis Abide With Me West Angeles Gogic 1985 Bowling For Soup About A Girl Sugababes 1999 Prince About You Now Sugababes 2 Become 1 Spice Girls, The Abracadabra Steve Miller Band 2 Hearts Kylie Minogue Absolutely (Story Of A Girl) Nine Days 20 Years Ago Kenny Rogers Acapella Kelis 21 Guns Green Day According To You Orianthi 21 Questions 50 Cent Achy Breaky Heart Billy Ray Cyrus 21st Century Breakdown Green Day Act A Fool Ludacris 21st Century Girl Willow Smith Actos De Un Tonto Conjunto Primavera 22 Lily Allen Adam Ant Goody Two Shoes 22 Taylor Swift Adams Song Blink 182 24K Magic Bruno Mars Addicted To You Avicii 29 Palms Robert Plant Addictive Truth Hurts & Rakim 2U David Guetta Ft.