1 How Philosophy of Mind Can Shape the Future

Total Page:16



Recommended publications

-

17. Idealism, Or Something Near Enough Susan Schneider (In

17. Idealism, or Something Near Enough Susan Schneider (In Pearce and Goldschmidt, OUP, in press.) Some observations: 1. Mathematical entities do not come for free. There is a field, philosophy of mathematics, and it studies their nature. In this domain, many theories of the nature of mathematical entities require ontological commitments (e.g., Platonism). 2. Fundamental physics (e.g., string theory, quantum mechanics) is highly mathematical. Mathematical entities purport to be abstract: they purport to be non-spatial, atemporal, immutable, acausal and non-mental. (Whether they truly are abstract is a matter of debate, of course.) 3. Physicalism, to a first approximation, holds that everything is physical, or is composed of physical entities. As such, physicalism is a metaphysical hypothesis about the fundamental nature of reality. In Section 1 of this paper, I urge that it is reasonable to ask the physicalist to inquire into the nature of mathematical entities, for they are doing heavy lifting in the physicalist’s approach, describing and identifying the fundamental physical entities that everything that exists is said to reduce to (supervene on, etc.). I then ask whether the physicalist in fact has the resources to provide a satisfying account of the nature of these mathematical entities, given existing work in philosophy of mathematics on nominalism and Platonism that she would likely draw from. And the answer will be: she does not. I then argue that this deflates the physicalist program in significant ways: physicalism lacks the traditional advantages of being the most economical theory, and having a superior story about mental causation, relative to competing theories. -

Neural Lace" Company

5 Neuroscience Experts Weigh in on Elon Musk's Mysterious "Neural Lace" Company By Eliza Strickland Posted 12 Apr 2017 | 21:15 GMT Elon Musk has a reputation as the world’s greatest doer. He can propose crazy ambitious technological projects, like reusable rockets for Mars exploration and hyperloop tunnels for transcontinental rapid transit, and people just assume he’ll pull it off. So his latest venture, a new company called Neuralink that will reportedly build brain implants both for medical use and to give healthy people superpowers, has gotten the public excited about a coming era of consumer-friendly neurotech. Even neuroscientists who work in the field, who know full well how difficult it is to build working brain gear that passes muster with medical regulators, feel a sense of potential. “Elon Musk is a person who’s going to take risks and inject a lot of money, so it will be exciting to see what he gets up to,” says Thomas Oxley, a neural engineer who has been developing a medical brain implant since 2010 (he hopes to start its first clinical trial in 2018). Neuralink is still mysterious. An article in The Wall Street Journal (https://www.wsj.com/articles/elon-musk-launches-neuralink-to-connect-brains-with-computers-1490642652) announced the company’s formation and first hires, while also spouting vague verbiage about “cranial computers” that would serve as “a layer of artificial intelligence inside the brain.” So IEEE Spectrum asked the experts about what’s feasible in this field, and what Musk might be planning. -

Comment Attachment

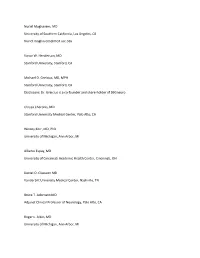

Nuriel Moghavem, MD, University of Southern California, Los Angeles, CA; Victor W. Henderson, MD, Stanford University, Stanford, CA; Michael D. Greicius, MD, MPH, Stanford University, Stanford, CA (Disclosure: Dr. Greicius is a co-founder and share-holder of SBGneuro); Chrysa Cheronis, MD, Stanford University Medical Center, Palo Alto, CA; Wesley Kerr, MD, PhD, University of Michigan, Ann Arbor, MI; Alberto Espay, MD, University of Cincinnati Academic Health Center, Cincinnati, OH; Daniel O. Claassen, MD, Vanderbilt University Medical Center, Nashville, TN; Bruce T. Adornato, MD, Adjunct Clinical Professor of Neurology, Palo Alto, CA; Roger L. Albin, MD, University of Michigan, Ann Arbor, MI; R. Ryan Darby, MD, Vanderbilt University Medical Center, Nashville, TN; Graham Garrison, MD, University of Cincinnati, Cincinnati, OH; Lina Chervak, MD, University of Cincinnati Medical Center, Cincinnati, OH; Abhimanyu Mahajan, MD, MHS, Rush University Medical Center, Chicago, IL; Richard Mayeux, MD, Columbia University, New York, NY; Lon Schneider, MD, Keck School of Medicine of the University of Southern California, Los Angeles, CA; Sami Barmada, MD, PhD, University of Michigan, Ann Arbor, MI; Evan Seevak, MD, Alameda Health System, Oakland, CA; Will Mantyh, MD, University of Minnesota, Minneapolis, MN; Bryan M. Spann, DO, PhD, Consultant, Cognitive and Neurobehavior Disorders, Scottsdale, AZ; Kevin Duque, MD, University of Cincinnati, Cincinnati, OH; Lealani Mae Acosta, MD, MPH, Vanderbilt University Medical Center, Nashville, TN; Marie Adachi, MD, Alameda Health System, Oakland, CA; Marina Martin, MD, MPH, Stanford University School of Medicine, Palo Alto, CA; Matthew Schrag, MD, PhD, Vanderbilt University Medical Center, Nashville, TN; Matthew L. Flaherty, MD, University of Cincinnati, Cincinnati, OH; Stephanie Reyes, MD, Durham, NC; Hani Kushlaf, MD, University of Cincinnati, Cincinnati, OH; David B Clifford, MD, Washington University in St Louis, St Louis, MO; Marc Diamond, MD, UT Southwestern Medical Center, Dallas, TX. Disclosure: Dr. -

KNOWLEDGE ACCORDING to IDEALISM Idealism As a Philosophy

KNOWLEDGE ACCORDING TO IDEALISM Idealism as a philosophy had its greatest impact during the nineteenth century. It is a philosophical approach that has as its central tenet that ideas are the only true reality, the only thing worth knowing. In a search for truth, beauty, and justice that is enduring and everlasting, the focus is on conscious reasoning in the mind. The main tenet of idealism is that ideas and knowledge are the truest reality. Many things in the world change, but ideas and knowledge are enduring. Idealism was often referred to as “idea-ism”. Idealists believe that ideas can change lives. The most important part of a person is the mind. It is to be nourished and developed. Etymologically, its origin is Greek idea “form, shape,” from weid-, also the origin of the “his” in his-tor “wise, learned” underlying English “history.” In Latin this root became videre “to see” and related words. It is the same root in Sanskrit veda “knowledge,” as in the Rig-Veda. The stem entered Germanic as witan “know,” seen in Modern German wissen “to know” and in English “wisdom” and “twit,” a shortened form of Middle English atwite derived from æt “at” + witen “reproach.” In short, idealism is a philosophical position which adheres to the view that nothing exists except as it is an idea in the mind of man or the mind of God. The idealist believes that the universe has intelligence and a will; that all material things are explainable in terms of a mind standing behind them. PHILOSOPHICAL RATIONALE OF IDEALISM a) The Universe (Ontology or Metaphysics) To the idealist, the nature of the universe is mind; it is an idea. -

Mind-Crafting: Anticipatory Critique of Transhumanist Mind-Uploading in German High Modernist Novels Nathan Jensen Bates a Disse

Mind-Crafting: Anticipatory Critique of Transhumanist Mind-Uploading in German High Modernist Novels Nathan Jensen Bates A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Washington 2018 Reading Committee: Richard Block, Chair Sabine Wilke Ellwood Wiggins Program Authorized to Offer Degree: Germanics ©Copyright 2018 Nathan Jensen Bates University of Washington Abstract Mind-Crafting: Anticipatory Critique of Transhumanist Mind-Uploading in German High Modernist Novels Nathan Jensen Bates Chair of the Supervisory Committee: Professor Richard Block Germanics This dissertation explores the question of how German modernist novels anticipate and critique the transhumanist theory of mind-uploading in an attempt to avert binary thinking. German modernist novels simulate the mind and expose the indistinct limits of that simulation. Simulation is understood in this study as defined by Jean Baudrillard in Simulacra and Simulation. The novels discussed in this work include Thomas Mann’s Der Zauberberg; Hermann Broch’s Die Schlafwandler; Alfred Döblin’s Berlin Alexanderplatz: Die Geschichte von Franz Biberkopf; and, in the conclusion, Irmgard Keun’s Das Kunstseidene Mädchen is offered as a field of future inquiry. These primary sources disclose at least three aspects of the mind that are resistant to discrete articulation; that is, the uploading or extraction of the mind into a foreign context. A fourth is proposed, but only provisionally, in the conclusion of this work. The aspects resistant to uploading are defined and discussed as situatedness, plurality, and adaptability to ambiguity. Each of these aspects relates to one of the three steps of mind-uploading summarized in Nick Bostrom’s treatment of the subject. -

Superhuman Enhancements Via Implants: Beyond the Human Mind

philosophies Article Superhuman Enhancements via Implants: Beyond the Human Mind Kevin Warwick Office of the Vice Chancellor, Coventry University, Priory Street, Coventry CV1 5FB, UK. Received: 16 June 2020; Accepted: 7 August 2020; Published: 10 August 2020 Abstract: In this article, a practical look is taken at some of the possible enhancements for humans through the use of implants, particularly into the brain or nervous system. Some cognitive enhancements may not turn out to be practically useful, whereas others may turn out to be mere steps on the way to the construction of superhumans. The emphasis here is the focus on enhancements that take such recipients beyond the human norm rather than any implantations employed merely for therapy. This is divided into what we know has already been tried and tested and what remains at this time as more speculative. Five examples from the author’s own experimentation are described. Each case is looked at in detail, from the inside, to give a unique personal experience. The premise is that humans are essentially their brains and that bodies serve as interfaces between brains and the environment. The possibility of building an Interplanetary Creature, having an intelligence and possibly a consciousness of its own, is also considered. Keywords: human–machine interaction; implants; upgrading humans; superhumans; brain–computer interface 1. Introduction The future life of superhumans with fantastic abilities has been extensively investigated in philosophy, literature and film. Despite this, the concept of human enhancement can often be merely directed towards the individual, particularly someone who is deemed to have a disability, the idea being that the enhancement brings that individual back to some sort of human norm. -

Is AI Intelligent, Really? Bruce D

Seattle Pacific University Digital Commons @ SPU SPU Works Summer August 23rd, 2019 Is AI intelligent, really? Bruce D. Baker Seattle Pacific University Follow this and additional works at: https://digitalcommons.spu.edu/works Part of the Artificial Intelligence and Robotics Commons, Comparative Methodologies and Theories Commons, Epistemology Commons, Philosophy of Science Commons, and the Practical Theology Commons Recommended Citation Baker, Bruce D., "Is AI intelligent, really?" (2019). SPU Works. 140. https://digitalcommons.spu.edu/works/140 This Article is brought to you for free and open access by Digital Commons @ SPU. It has been accepted for inclusion in SPU Works by an authorized administrator of Digital Commons @ SPU. Bruce Baker August 23, 2019 Is AI intelligent, really? Good question. On the surface, it seems simple enough. Assign any standard you like as a demonstration of intelligence, and then ask whether you could (theoretically) set up an AI to perform it. Sure, it seems common sense that given sufficiently advanced technology you could set up a computer or a robot to do just about anything that you could define as being doable. But what does this prove? Have you proven the AI is really intelligent? Or have you merely shown that there exists a solution to your predetermined puzzle? Hmmm. This is why AI futurist Max Tegmark emphasizes the difference between narrow (machine-like) and broad (human-like) intelligence. And so the question remains: Can the AI be intelligent, really, in the same broad way its creator is? Why is this question so intractable? Because intelligence is not a monolithic property. -

Copyrighted Material

Index affordances 241–2; afterlife 194, 227, 261; Agar, Nicholas 15, 162, 313; Age of Spiritual Machines, The 47, 54; AGI (Artificial General Intelligence) 61–86, 98–9, 245, 266–7, 298, 311; AGI Nanny 84, 311; aging 61, 207, 222, 225, 259, 315; AI (artificial intelligence) 3, 9, 12, 22, 30, 35–6, 38–44, 46–58, 72, 91, 95, 99, 102, 114–15, 119, 146–7, 150, 153, 157, 198, 284, 305, 311, 315; AI, non-determinism problem 95–7; AI, predictions of 46–58, 311; altruism 8; Amazon Elastic Compute Cloud 40–1; Andreadis, Athena 223; androids 44, 131, 194, 197, 235, 244, 265, 268–70, 272–5, 315; animal experimentation 24, 279–81, 286–8, 292; animal rights 281–2, 285–8, 316; Anissimov, Michael 12, 311; apps 11, 27–33, 244–5; Ariely, Dan 28; Aristotle 154, 255; Arkin, Ronald 64; Armstrong, Stuart 12, 311; Arp, Hans/Jean 240–2, 246; artificial realities, see virtual reality; Asimov, Isaac 45, 268, 274; avatars 73, 76, 201, 244–5, 248–9; Banks, Iain M. 235; Barabási, Albert-László 311; Barrat, James 22; Berger, T.W. 92; Bernal, J.D. 264–5; biological naturalism 124–5, 128, 312; biological theories of consciousness 14, 104–5, 121–6, 194, 312; Blackford, Russell 16–21, 318; Blade Runner 21; Block, Ned 117, 265; Blue Brain Project 2, 180, 194, 196; Bodington, James 16, 316; body, attitudes to 4–5, 222–9, 314–15; “Body by Design” 244, 246. Intelligence Unbound: The Future of Uploaded and Machine Minds, First Edition. Edited by Russell Blackford and Damien Broderick. -

Coal Crash Sinks Mine Giant

THURSDAY, APRIL 14, 2016 ~ VOL. CCLXVII NO. 87 WSJ.com $3.00. DJIA 17908.28 ▲ 187.03 1.1%; NASDAQ 4947.42 ▲ 1.55%; STOXX 600 343.06 ▲ 2.5%; 10-YR. TREAS. ▲ 6/32, yield 1.760%; OIL $41.76 ▼ $0.41; GOLD $1,246.80 ▼ $12.60; EURO $1.1274; YEN 109.32. A Fare Change For Business Fliers (THE MIDDLE SEAT | D1). Jeffrey Herbst: Facebook’s Algorithm Is a News Editor (OPINION | A15). Migrants Trying to Leave Greece Meet Resistance at Border (ASSOCIATED PRESS). Big Bank’s Earnings Stoke Optimism BY EMILY GLAZER AND PETER RUDEGEAIR J.P. Morgan Chase & Co. delivered a reassuring report on the state of U.S. consumers and corporations Wednesday, raising hopes that strength in the economy will help banks offset weakness in their Wall Street trading businesses. Shares of the New York bank, which reported better- [...] What’s News, Business & Finance: J.P. Morgan posted better-than-expected results, delivering a reassuring report on its business and the U.S. economy. A1 The Dow climbed 187.03 points to 17908.28, its highest level since November, as J.P. Morgan’s earnings ignited gains in financial shares. C1 Citigroup was the only bank whose “living will” plan wasn’t rejected by either the Fed or the FDIC, while Wells Fargo drew a rebuke. -

Maybe Not--But You Can Have Fun Trying

Permanent Address: http://www.scientificamerican.com/article.cfm?id=e-zimmer-can-you-live-forever Can You Live Forever? Maybe Not--But You Can Have Fun Trying In this chapter from his new e-book, journalist Carl Zimmer tries to reconcile the visions of techno-immortalists with the exigencies imposed by real-world biology By Carl Zimmer | Wednesday, December 22, 2010 Editor's Note: Carl Zimmer, author of this month's article, "100 Trillion Connections," has just brought out a much-acclaimed e-book, Brain Cuttings: 15 Journeys Through the Mind (Scott & Nix), that compiles a series of his writings on neuroscience. In this chapter, adapted from an article that was first published in Playboy, Zimmer takes the reader on a tour of the 2009 Singularity Summit in New York City. His ability to contrast the fantastical predictions of speakers at the conference with the sometimes more skeptical assessments from other scientists makes his account a fascinating read. Let's say you transfer your mind into a computer, not all at once but gradually, having electrodes inserted into your brain and then wirelessly outsourcing your faculties. Someone reroutes your vision through cameras. Someone stores your memories on a net of microprocessors. Step by step your metamorphosis continues until at last the transfer is complete. As engineers get to work boosting the performance of your electronic mind so you can now think as a god, a nurse heaves your fleshy brain into a bag of medical waste. As you (for now let's just call it "you") start a new chapter of existence exclusively within a machine, an existence that will last as long as there are server farms and hard-disk space and the solar power to run them, are "you" still actually you? This question was being considered carefully and thoroughly by a 43-year-old man standing on a giant stage backed by high black curtains. -

William Gibson's Neuromancer and Its Relevance to Culture and

“KALAM” International Journal of Faculty of Arts and Culture, South Eastern University of Sri Lanka William Gibson’s Neuromancer and Its Relevance to Culture and Technology Noeline Shirome G. South Eastern University of Sri Lanka Abstract We live in a time when technology is growing rapidly. These advancements have brought various changes to the cultural sphere. Computers have begun to drastically influence people's lifestyles through various systems of information technology. Thus, within a fraction of time anyone can access any sort of resource on the planet. This advancement is in a way shrinking the division between mind and machine, as most people depend heavily on outside sources of intelligence through technological devices. The concepts of mind uploading, trans-humans, cyber space, virtual reality, etc. have interested the cyberpunk writers and influenced them to project these ideas in their literary texts. Thus the present paper explores the relationship between William Gibson’s cyberpunk science fiction Neuromancer and its relevance to culture and technology. It first focuses on the novel Neuromancer, then goes on to its cultural and technological relevance to the present time, and discusses the close connection between reality and cyberpunk literature. Key words Cyberpunk, cyber space, mind uploading, mindclones, mindfile, William Gibson Introduction The link between computer technology and literature plays an important role in society, as literary texts reflect the contemporary situation. In this context, cyberpunk science fictions play an important role in exploring [...] in the novel named Henry Dorsett Case is a hacker who eventually gets into the digitalised system and logs into the Matrix where the copy of his consciousness exists forever. The similar idea of this mind uploading is now an emerging concept in the twenty-first century. -

Living in a Matrix, She Thinks to Herself

LIVING IN A MATRIX: A PHILOSOPHY PROFESSOR’S LIFE IN A WEB OF QUESTIONS by Peter Nichols By most accounts, Susan Schneider lives a pretty normal life. An assistant professor of philosophy, she teaches classes, writes academic papers and travels occasionally to speak at a philosophy or science lecture. At home, she shuttles her daughter to play dates, cooks dinner and goes mountain biking. Then suddenly, right in the middle of this ordinary American existence, it happens. Maybe we’re all living in a Matrix, she thinks to herself. It’s like in the movie when the question, What is the Matrix? keeps popping up on Neo’s computer screen and sets him to wondering. Schneider starts considering all the what-ifs and the maybes that now pop up all around her. Maybe the warm sun on my face, the trees swishing by and the car I’m driving down the road are actually code streaming through the circuits of a great computer. What if this world, which feels so compellingly real, is only a simulation thrown up by a complex and clever computer game? If I’m in it, how could I even know? What if my real-seeming self is nothing more than an algorithm on a computational network? What if everything is a computer-generated dream world – a momentary assemblage of information clicking and switching and running away on a grid laid down by some advanced but unknown intelligence? It’s like that time, after earning an economics degree as an undergrad at Berkeley, she suddenly switched to philosophy.