Feature Modeling and Automated Analysis for an Embedded Software Product Family

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

United States Patent (19) 11 Patent Number: 5,526,129 Ko (45) Date of Patent: Jun

US005526129A United States Patent (19) 11 Patent Number: 5,526,129 Ko (45) Date of Patent: Jun. 11, 1996 54). TIME-BASE-CORRECTION IN VIDEO 56) References Cited RECORDING USINGA FREQUENCY-MODULATED CARRIER U.S. PATENT DOCUMENTS 4,672,470 6/1987 Morimoto et al. ...................... 358/323 75) Inventor: Jeone-Wan Ko, Suwon, Rep. of Korea 5,062,005 10/1991 Kitaura et al. ....... ... 358/320 5,142,376 8/1992 Ogura ............... ... 358/310 (73) Assignee: Sam Sung Electronics Co., Ltd., 5,218,449 6/1993 Ko et al. ...... ... 358/320 Suwon, Rep. of Korea 5,231,507 7/1993 Sakata et al. ... 358/320 5,412,481 5/1995 Ko et al. ...................... ... 359/320 21 Appl. No.: 203,029 Primary Examiner-Thai Q. Tran Assistant Examiner-Khoi Truong 22 Filed: Feb. 28, 1994 Attorney, Agent, or Firm-Robert E. Bushnell Related U.S. Application Data (57) ABSTRACT 63 Continuation-in-part of Ser. No. 755,537, Sep. 6, 1991, A circuit for recording and reproducing a TBC reference abandoned. signal for use in video recording/reproducing systems includes a circuit for adding to a video signal to be recorded (30) Foreign Application Priority Data a TBC reference signal in which the TBC reference signal Nov. 19, 1990 (KR) Rep. of Korea ...................... 90/18736 has a period adaptively varying corresponding to a synchro nizing variation of the video signal; and a circuit for extract (51 int. Cl. .................. H04N 9/79 ing and reproducing a TBC reference signal from a video (52) U.S. Cl. .......................... 358/320; 358/323; 358/330; signal read-out from the recording medium in order to 358/337; 360/36.1; 360/33.1; 348/571; correct time-base errors in the video signal. -

Analog/SDI to SDI/Optical Converter with TBC/Frame Sync User Guide

Analog/SDI to SDI/Optical Converter with TBC/Frame Sync User Guide ENSEMBLE DESIGNS Revision 6.0 SW v1.0.8 This user guide provides detailed information for using the BrightEye™1 Analog/SDI to SDI/Optical Converter with Time Base Corrector and Frame Sync. The information in this user guide is organized into the following sections: • Product Overview • Functional Description • Applications • Rear Connections • Operation • Front Panel Controls and Indicators • Using The BrightEye Control Application • Warranty and Factory Service • Specifications • Glossary BrightEye-1 BrightEye 1 Analog/SDI to SDI/Optical Converter with TBC/FS PRODUCT OVERVIEW The BrightEye™ 1 Converter is a self-contained unit that can accept both analog and digital video inputs and output them as optical signals. Analog signals are converted to digital form and are then frame synchronized to a user-supplied video reference signal. When the digital input is selected, it too is synchronized to the reference input. Time Base Error Correction is provided, allowing the use of non-synchronous sources such as consumer VTRs and DVD players. An internal test signal generator will produce Color Bars and the pathological checkfield test signals. The processed signal is output as a serial digital component television signal in accordance with ITU-R 601 in both electrical and optical form. Front panel controls permit the user to monitor input and reference status, proper optical laser operation, select video inputs and TBC/Frame Sync function, and adjust video level. Control and monitoring can also be done using the BrightEye PC or BrightEye Mac application from a personal computer with USB support. -

Designline PROFILE 42

High Performance Displays FLAT TV SOLUTIONS DesignLine PROFILE 42 Plasma FlatTV 106cm / 42" WWW.CONRAC.DE HIGH PERFORMANCE DISPLAYS FLAT TV SOLUTIONS DesignLine PROFILE 42 (106cm / 42 Zoll Diagonale) Neu: Verarbeitet HD-Signale ! New: HD-Compliant ! Einerseits eine bestechend klare Linienführung. Andererseits Akzente durch die farblich gestalteten Profilleisten in edler Metallic-Lackierung. Das Heimkino-Erlebnis par Excellence. Impressively clear lines teamed with decorative aluminium strips in metallic finish provide coloured highlights. The ultimate home cinema experience. Für höchste Ansprüche: Die FlatTVs der DesignLine kombinieren Hightech mit einzigartiger Optik. Die komplette Elektronik sowie die hochwertigen Breitband-Stereolautsprecher wurden komplett ins Gehäuse integriert. Der im Lieferumfang enthaltene Design-Standfuß aus Glas lässt sich für die Wandmontage einfach und problemlos entfernen, so dass das Display noch platzsparender wie ein Bild an der Wand angebracht werden kann. Die extrem flachen Bildschirme bieten eine unübertroffene Bildbrillanz und -schärfe. Das lüfterlose Konzept basiert auf dem neuesten Stand der Technik: Ohne störende Nebengeräusche hören Sie nur das, was Sie hören möchten. Einfaches Handling per Fernbedienung und mit übersichtlichem On-Screen-Menü. Die Kombination aus Flachdisplay-Technologie, einer High Performance Scaling Engine und einem zukunftsweisenden De-Interlacer* mit speziellen digitalen Algorithmen zur optimalen Darstellung bewegter Bilder bietet Ihnen ein unvergleichliches Fernseherlebnis. Zusätzlich vermittelt die Noise Reduction eine angenehme Bildruhe. For the most decerning tastes: DesignLine flat panel TVs combine advanced technology with outstanding appearance. All the electronics and the high-quality broadband stereo speakers have been fully integrated in the casing. The design glass stand included in the scope of supply can easily be removed for wall assembly, allowing the display to be mounted to the wall like a picture to save even more space. -

Electronic Services Capability Listing

425 BAYVIEW AVENUE, AMITYVILLE, NEW YORK 11701-0455 TEL: 631-842-5600 FSCM 26269 www.usdynamicscorp.com e-mail: [email protected] ELECTRONIC SERVICES CAPABILITY LISTING OEM P/N USD P/N FSC NIIN NSN DESCRIPTION NHA SYSTEM PLATFORM(S) 00110201-1 5998 01-501-9981 5998-01-501-9981 150 VDC POWER SUPPLY CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110204-1 5998 01-501-9977 5998-01-501-9977 FILAMENT POWER SUPPLY CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110213-1 5998 01-501-5907 5998-01-501-5907 IGBT SWITCH CIRCUIT CARD ASSEMBLY AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 00110215-1 5820 01-501-5296 5820-01-501-5296 RADIO TRANSMITTER MODULATOR AN/MSQ-T43 THREAT RADAR SYSTEM SIMULATOR 004088768 5999 00-408-8768 5999-00-408-8768 HEAT SINK MSP26F/TRANSISTOR HEAT EQUILIZER B-1B 0050-76522 * * *-* DEGASSER ENDCAP * * 01-01375-002 5985 01-294-9788 5985-01-294-9788 ANTENNA 1 ELECTRONICS ASSEMBLY AN/APG-68 F-16 01-01375-002A 5985 01-294-9788 5985-01-294-9788 ANTENNA 1 ELECTRONICS ASSEMBLY AN/APG-68 F-16 015-001-004 5895 00-964-3413 5895-00-964-3413 CAVITY TUNED OSCILLATOR AN/ALR-20A B-52 016024-3 6210 01-023-8333 6210-01-023-8333 LIGHT INDICATOR SWITCH F-15 01D1327-000 573000 5996 01-097-6225 5996-01-097-6225 SOLID STATE RF AMPLIFIER AN/MST-T1 MULTI-THREAT EMITTER SIMULATOR 02032356-1 570722 5865 01-418-1605 5865-01-418-1605 THREAT INDICATOR ASSEMBLY C-130 02-11963 2915 00-798-8292 2915-00-798-8292 COVER, SHIPPING J-79-15/17 ENGINE 025100 5820 01-212-2091 5820-01-212-2091 RECEIVER TRANSMITTER (BASEBAND -

Medion Pure Retro

Medion Pure Retro This symbol signifies a possible danger to your life and health, if specific requests to take action are not complied with or if appropriate precautionary measures are not taken. This symbol warns against wrong behavior that will have the consequence of environmental damage. This symbol provides information about handling of the product or about the relevant part of the operating instructions to which particular attention should be paid. Copyright © 2004 All rights reserved. This manual is protected by Copyright. The Copyright is owned by Medion®. Trademarks: MS-DOS® and Windows® are registered trademarks of Microsoft®. Pentium® is a registered trademark of Intel®. Other trademarks are the property of their respective owners. The information in this document is subject to change without notice. [2950-4042-C217 Rev.00] Inhaltsverzeichnis 1. Richtlinien ........................................................................ 2 2. Sicherheitshinweise ............................................................... 2 2.1 Hinweise für Ihre Sicherheit.................................................... 2 DEUTSCH 2.2 Allgemeine Hinweise.......................................................... 3 3. Übersicht ......................................................................... 5 3.1 Lieferumfang ................................................................. 5 3.2 Anschlüsse .................................................................. 6 3.3 Bedientasten und Funktionen der Fernbedienung................................ -

Advanced PAL Comb Filter-II (APCF-II) MC141627

MOTOROLA Order this document SEMICONDUCTOR TECHNICAL DATA by MC141627/D MC141627 Product Preview Advanced PAL Comb Filter-II FT SUFFIX (APCF-II) QFP PACKAGE CASE 898 The Advanced PAL Comb Filter–II is a video signal processor for VCRs, 48 1 LDPs, and TVs. It separates the Luminance Y and Chrominance C signal from the NTSC/PAL composite signal by using digital signal processing techniques ORDERING INFORMATION which minimize dot–crawl and cross–color. The built–in 4xFSC PLL circuit MC141627FT Quad Flat Package (QFP) allows a subcarrier signal input, which generates 4xFSC clock for video signal processing. This filter allows a video signal input of an extended frequency bandwidth by using a 4xFSC clock. The built–in vertical enhancer circuit reduces noise and dot crawl on the Luminance Y signal. The built–in A/D and D/A converters allow easy connection to analog video circuits. • Built–In High Speed 8–Bit A/D Converter • Four Line Memories (4540 Bytes) • Advanced Comb–II Process • Built–In Vertical Enhancer • Vertical Dot Reduction Process • Two Built–In High Speed 8–Bit D/A Converters • Built–In 4xFSC PLL Circuit • Built–In Clamp Circuit • Digital Interface Mode • On–Chip Reference Voltage for A/D Converter PIN ASSIGNMENT D5 D6 D7 C0 C1 D4 C3 C2 C4 C5 C6 C7 36 25 D3 37 24 TE1 D2 TE0 D1 MODE1 D0 MODE0 BYPASS CLK(AD) VH GND(D) GND(D) NC VCC(D) CLC FSC CLout N/M Vin PAL/NTSC RBT RTP Comb/BPF 48 13 1 12 out out CC bias Y C PCO BIAS I FILIN OV CC(DA) CC(AD) V REF(DA) V GND(AD) GND(DA) NC = NO CONNECTION This document contains information on a product under development. -

TELE INTERNATIONAL SATELLITE IFA Special All Satellites All Frequencies All Footprints

TELE INTERNATIONAL SATELLITE IFA Special All Satellites All Frequencies All Footprints NOKIATest: 9800 S http://www.TELE-satellite.com B 9318 E ISSN 0931-4733 07-08 3 Inhalt Content 1999/08 Advertisers Index STRONG 2 Satellite Venues SCaT, Mumbai 4 KBS Media Enterprises 7 Leserbriefe 10 Letter To The Editor Anton Kathrein 22 Industry Interview ProVision 8 Messen 12 Satellite Fairs Irrflüge 26 Espionage Story Mascom 9 IFA 99 14 Exhibition Preview Satelliten und Medien 34 News Mediastar 11 Giuliano Berretta 18 Industry Interview Satelliten-Panorama 38 New Products HUMAX 13 SHARP 15 HC Electronica 17 Satellite Products Zinwell 21 www.TELE-satellite.com/TSI/9908/nokia.shtml Eurosat 23 NOKIA Mediamaster 9800S 54 Digi Receiver with Open TV and Common Interface HUTH 25 www.TELE-satellite.com/TSI/9908/asc.shtml ALPS 29 KLINSERER 31 ASC-TEC MS 98 NT 58 DiSEqC-Multiswitches MAX Communication 33 www.TELE-satellite.com/TSI/9908/lorenzen.shtml EuroCom Italy 35 LORENZEN SL No. 5 62 Analogue Receiver with Low Threshold Müller 37 www.TELE-satellite.com/TSI/9908/praxis.shtml STS 41 PRAXIS DigiMaster 9800CI 66 Digital Receiver with Common Interface PRAXIS 43 www.TELE-satellite.com/TSI/9908/amstrad.shtml Weiß, I.F.V. 45 AMSTRAD SAT 401, SDU 80 72 Complete Set for 2-satellite reception Doebis Meßtechnik 47 www.TELE-satellite.com/TSI/9908/kathrein.shtml Shinwon / Grundig 49 KATHREIN UFD 510 78 Digital Receiver with Open TV and CI Doebis 51 www.TELE-satellite.com/TSI/9908/hyundai.shtml M.T.I. / Hirschmann 53 HYUNDAI HSS-700A 82 Digital Receiver with LT and SCPC Hornscheidt / Promax 61 www.TELE-satellite.com/TSI/9908/mti.shtml Astro Strobel / TRIAX 65 MTI Blue Line 88 Universal, Twin, and Quatro LNB ITU 76-77 Ankaro / KWS / TRIAX 87 Antennes, Paris 95 Telecomp, Cairo 99 Robots in Space 90 Spacecom 103 Total Eclipse 92 www.satellite-shop.com SatExpo, Vicenza 113 Low Cost Reception 96 New Satellite Products at AEF Istanbul 104 ACT Conference, Arlington 115 Show Report: Kiev 100 New Satellite Products at Mediacast, Lond. -

![(12) United States Patent (10) Patent N0.: US 8,073,418 B2 Lin Et A]](https://docslib.b-cdn.net/cover/6267/12-united-states-patent-10-patent-n0-us-8-073-418-b2-lin-et-a-386267.webp)

(12) United States Patent (10) Patent N0.: US 8,073,418 B2 Lin Et A]

US008073418B2 (12) United States Patent (10) Patent N0.: US 8,073,418 B2 Lin et a]. (45) Date of Patent: Dec. 6, 2011 (54) RECEIVING SYSTEMS AND METHODS FOR (56) References Cited AUDIO PROCESSING U.S. PATENT DOCUMENTS (75) Inventors: Chien-Hung Lin, Kaohsiung (TW); 4 414 571 A * 11/1983 Kureha et al 348/554 Hsing-J“ Wei, Keelung (TW) 5,012,516 A * 4/1991 Walton et a1. .. 381/3 _ _ _ 5,418,815 A * 5/1995 Ishikawa et a1. 375/216 (73) Ass1gnee: Mediatek Inc., Sc1ence-Basedlndustr1al 6,714,259 B2 * 3/2004 Kim ................. .. 348/706 Park, Hsin-Chu (TW) 7,436,914 B2* 10/2008 Lin ....... .. 375/347 2009/0262246 A1* 10/2009 Tsaict a1. ................... .. 348/604 ( * ) Notice: Subject to any disclaimer, the term of this * Cited by examiner patent is extended or adjusted under 35 U'S'C' 154(1)) by 793 days' Primary Examiner * Sonny Trinh (21) App1_ NO; 12/185,778 (74) Attorney, Agent, or Firm * Winston Hsu; Scott Margo (22) Filed: Aug. 4, 2008 (57) ABSTRACT (65) Prior Publication Data A receiving system for audio processing includes a ?rst Us 2010/0029240 A1 Feb 4 2010 demodulation unit and a second demodulation unit. The ?rst ' ’ demodulation unit is utilized for receiving an audio signal and (51) Int_ CL generating a ?rst demodulated audio signal. The second H043 1/10 (200601) demodulation unit is utilized for selectively receiving the H043 5/455 (200601) audio signal or the ?rst demodulated audio signal according (52) us. Cl. ....................... .. 455/312- 455/337- 348/726 to a Setting Ofa television audio System Which the receiving (58) Field Of Classi?cation Search ............... -

A Look at SÉCAM III

Viewer License Agreement You Must Read This License Agreement Before Proceeding. This Scroll Wrap License is the Equivalent of a Shrink Wrap ⇒ Click License, A Non-Disclosure Agreement that Creates a “Cone of Silence”. By viewing this Document you Permanently Release All Rights that would allow you to restrict the Royalty Free Use by anyone implementing in Hardware, Software and/or other Methods in whole or in part what is Defined and Originates here in this Document. This Agreement particularly Enjoins the viewer from: Filing any Patents (À La Submarine?) on said Technology & Claims and/or the use of any Restrictive Instrument that prevents anyone from using said Technology & Claims Royalty Free and without any Restrictions. This also applies to registering any Trademarks including but not limited to those being marked with “™” that Originate within this Document. Trademarks and Intellectual Property that Originate here belong to the Author of this Document unless otherwise noted. Transferring said Technology and/or Claims defined here without this Agreement to another Entity for the purpose of but not limited to allowing that Entity to circumvent this Agreement is Forbidden and will NOT release the Entity or the Transfer-er from Liability. Failure to Comply with this Agreement is NOT an Option if access to this content is desired. This Document contains Technology & Claims that are a Trade Secret: Proprietary & Confidential and cannot be transferred to another Entity without that Entity agreeing to this “Non-Disclosure Cone of Silence” V.L.A. Wrapper. Combining Other Technology with said Technology and/or Claims by the Viewer is an acknowledgment that [s]he is automatically placing Other Technology under the Licenses listed below making this License Self-Enforcing under an agreement of Confidentiality protected by this Wrapper. -

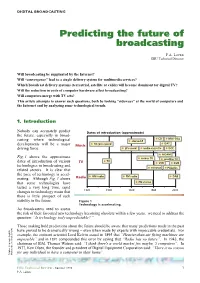

Predicting the Future of Broadcasting

DIGITAL BROADCASTING Predicting the future of broadcasting P.A. Laven EBU Technical Director Will broadcasting be supplanted by the Internet? Will “convergence” lead to a single delivery system for multimedia services? Which broadcast delivery systems (terrestrial, satellite or cable) will become dominant for digital TV? Will the reduction in costs of computer hardware affect broadcasting? Will computers merge with TV sets? This article attempts to answer such questions, both by looking “sideways” at the world of computers and the Internet and by analyzing some technological trends. 1. Introduction Nobody can accurately predict Dates of introduction (approximate) the future, especially in broad- CD Mini-Disc casting where technological stereo LP DAT developments will be a major Music 78 rpm record driving force. LP record audio cassette DCC NICAM Fig. 1 shows the approximate colour TV satellite TV TV dates of introduction of various TV VCR DVB technologies in broadcasting and teletext PALplus related sectors. It is clear that the pace of technology is accel- AM radio FM radio DAB erating. Although Fig. 1 shows Radio that some technologies have FM stereo lasted a very long time, rapid changes in technology mean that 1920 1940 1960 1980 2000 there is little prospect of such stability in the future. Figure 1 Technology is accelerating. As broadcasters need to assess the risk of their favoured new technology becoming obsolete within a few years, we need to address the question: “Is technology truly unpredictable? ” Those making bold predictions about the future should be aware that many predictions made in the past have proved to be dramatically wrong – even when made by experts with impeccable credentials. -

Brighteye 72 - Page 1 3G/HD/SD SDI to HDMI Converter User Guide Brighteyetm 72

3G/HD/SD SDI to HDMI Converter User Guide BrightEyeTM 72 BrightEyeTM 72 3G/HD/SD SDI to HDMI Converter User Guide Revision 1.1 SW v1.0.0 www.ensembledesigns.com BrightEye 72 - Page 1 3G/HD/SD SDI to HDMI Converter User Guide BrightEyeTM 72 Contents PRODUCT OVERVIEW AND FUNCTIONAL DESCRIPTION 4 Use with any HDMI Monitor 4 SDI Input 4 Test Signal Generator 4 Video Proc Amp and Color Corrector 4 Audio Metering Overlay and Disembedding 5 Graticule Overlay 5 Closed Caption Decoding 6 Timecode Reader 6 Horizontal and Vertical Shift 7 BLOCK DIAGRAM 8 APPLICATIONS 10 REAR CONNECTORS 11 Power Connection 11 USB Connector 11 HDMI Out 11 Audio Out 11 3G/HD/SD SDI In 12 3G/HD/SD SDI Out 12 MODULE CONFIGURATION AND CONTROL 13 Front Panel Controls and Indicators 13 Status Indicators 13 Adjusting Parameters from the Front Panel 14 Front Panel Control and On-Screen Display 14 Examples of Using the Front Panel in Conjunction with On-Screen Display 15 www.ensembledesigns.com BrightEye 72 - Page 2 3G/HD/SD SDI to HDMI Converter User Guide BrightEyeTM 72 USING THE BRIGHTEYE CONTROL APPLICATION 18 Software version requirement 18 Input Menu 19 Proc Menu 20 Color Correct Menu 21 Audio Menu 22 Config Menu 23 Captions Menu 24 Timecode Menu 25 SOFTWARE UPDATING 26 WARRANTY AND FACTORY SERVICE 26 Warranty 26 Factory Service 26 SPECIFICATIONS 27 GLOSSARY 29 www.ensembledesigns.com BrightEye 72 - Page 3 3G/HD/SD SDI to HDMI Converter User Guide BrightEyeTM 72 PRODUCT OVERVIEW AND FUNCTIONAL DESCRIPTION The BrightEye 72 turns any High Definition Multimedia Interface (HDMI) display into a monitoring solution with professional monitoring features. -

Types of BNC Connectors

Types of BNC Connectors The BNC (Bayonet Neill–Concelman) connector is a miniature quick connect / disconnect radio frequency connector used for coaxial cable. It features two bayonet lugs on the female connector; mating is fully achieved with a quarter turn of the coupling nut. BNC connectors are most commonly made in 50 ohm and 75 ohm versions, matched for use with cables of the same characteristic impedance. The 75 ohm connector is dimensionally slightly different from the 50 ohm variant, but the two nevertheless can be made to mate. The 75 ohm types can sometimes be recognized by the reduced or absent dielectric in the mating ends but this is by no means reliable. There was a proposal in the early 1970s for the dielectric material to be coloured red in 75 ohm connectors, and while this is occasionally implemented, it did not become standard. The 75 ohm connectors are typically specified for use at frequencies up to 2 GHz. 75 ohm BNC connectors are primarily used in Video (particularly HD video signals) and DS3 Telco central office applications. Many VHF receivers use 75 ohm antenna inputs, so they often used 75 ohm BNC connectors. The 50 ohm connectors are typically specified for use at frequencies up to 4 GHz. 50 ohm connectors are used for data and RF. A 95 ohm variant is used within the aerospace sector, but rarely elsewhere. It is used with the 95 ohm video connections for glass cockpit displays on some aircraft. Compatibility The different versions are designed to mate with each other, and a 75 ohm and a 50 ohm BNC connector which both comply with the 1978 standard, IEC 169-8, will mate non-destructively.