Letter and Report

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


FM ALISA MELEKHINA Is Currently Balancing Her Law and Chess Careers. Inside, She Interviews Three Other Lifelong Chess Players Wrestling with a Similar Dilemma

NAKAMURA WINS GIBRALTAR / SO FINISHES SECOND AT TATA STEEL. APRIL 2015. Career Crossroads: FM ALISA MELEKHINA is currently balancing her law and chess careers. Inside, she interviews three other lifelong chess players wrestling with a similar dilemma. World’s biggest open tournament! 43rd annual WORLD OPEN, Hyatt Regency Crystal City, near D.C. 9 rounds, June 30-July 5, July 1-5, 2-5 or 3-5. $210,000 Guaranteed Prizes! Master class prizes raised by $10,000. GM & IM norms possible, mixed doubles prizes, GM lectures & analysis! VISIT OUR NATION’S CAPITAL: The World Open completes a three year run in the Washington area before returning to Philadelphia in 2016. $99 rooms, valet parking $6 (if full, about $7-15 nearby), free airport shuttle. Free shuttle to DC Metro, minutes from Washington’s historic attractions! SPECIAL FEATURES! 1) Schedule options. 5-day is most popular, 4-day and 3-day save time & money. New, leisurely 6-day has three 1-round days. Open plays 5-day only. 2) GM & IM norms possible in Open. NOTE CHANGE: Masters can now play for both norms & large class prizes! 3) Prize limit $2000 if post-event ... 4) Provisional (under 26 games) prize limits in U2000 & below. 5) Unrated not allowed in U1200 through U1800; $1000 limit in U2000. 6) Mixed Doubles: $3000-1500-700-500-300 for male/female teams. 7) International 6/26-30: FIDE norms possible, warm up for main event. Also many side events. 8 sections: Open, U2200, U2000, -

United States Chess Federation How Many Variations?

UNITED STATES CHESS FEDERATION (USCF). America's Chess Periodical. Volume XVI, Number 9, SEPTEMBER, 1961. 40 Cents. HOW MANY VARIATIONS? 169,518,829,100,544,000,000,000,000,000 X 700 (See p. 253). REPORT FROM FIDE: At the recent FIDE Congress held at Leipzig, Grandmaster Milan Vidmar of Yugoslavia raised many questions as to the advisability of using seconds in major chess events, and the pro and con of premature draws by agreement. All of the member countries of FIDE were polled as to their preferences and ideas on these two questions and following is the summary: Summary of the results of the Inquiry on the questions raised by Grand-Master Vidmar. The questions raised by Grand-Master Vidmar have aroused a rather vivid interest and the number of answers pre- ... night, Mr. Hellimo has put forward the original proposition to adjourn the game for a pause of 2 hours already after a first period of only 2 hours, in view of the fact that in its initial phase a game offers much less chances for an effective analysis than later on. Among the federations and persons who have recommended an organization of play allowing a great number of games to be terminated in the same day as they have been begun, certain have pronounced themselves in favour of a lengthening of the first period of play, while others wish to keep the duration -

NEWSLETTER 158 (May 29, 2014)

NEWSLETTER 158 (May 29, 2014) ECU PRESIDENT SILVIO DANAILOV MEETS WITH THE PRESIDENT OF THE ITALIAN CHESS FEDERATION, MR. GIANPIETRO PAGNONCELLI. On 21st of May, 2014 in Milan, the ECU President Silvio Danailov met with the President of the Italian Chess Federation, Mr. Gianpietro Pagnoncelli. In a pleasant conversation at the office of the Italian Chess Federation they discussed the work done by Silvio Danailov and his team during the last 4 years. Silvio Danailov expressed his special thanks to Mr. Pagnoncelli for his great contribution to mobilizing the Italian members of the European Parliament during the campaign for adoption of the ECU program “Chess in School”. As we have already informed you, the Italian MEP, Mr. Mario Mauro, was among the most active deputies who supported the efforts of Mr. Danailov. Both Presidents also discussed the new joint project between ECU and UNESCO, “Chess as UNESCO intangible world heritage”. Silvio Danailov proposed that one member of the commission responsible for this topic be elected by the Italian Chess Federation. Mr. Pagnoncelli said that this will be decided at the next ICF Board meeting. The ECU President congratulated Mr. Gianpietro Pagnoncelli on his successful 10-year career as President of the Italian Chess Federation, at the beginning of which Italy had just 2 grandmasters. Now ICF can be proud of 11 GMs; one of them, Fabiano Caruana, is currently No 5 in the world. Silvio Danailov also congratulated Mr. Pagnoncelli on the acceptance of the ICF as a member of the Italian Olympic Committee. This achievement happened for the first time in the history of chess. -

1967 U.S. Women's Champion

1967 U.S. WOMEN'S CHAMPION. Edith Lucie Weart, left, presenting the cup which she donated in 1951. 1967 U.S. Women's Champion Mrs. Gisela Gresser accepts the cup immediately following the tournament. See p. 190. UNITED STATES. Volume XXII, Number 6, July, 1967. EDITOR: Burt Hochberg. CONTENTS: Sarajevo 1967, by Dimitrije Bjelica, 184; Two Games From Sarajevo, by Robert Byrne, 185; Dutch Treat, by Bernard Zuckerman, 188; Chess Life, Here and There, compiled by Wm. Goichberg, 189, 203, 204, 207, 215; Women's Chess, by Kathryn Slater, 190; The College Column, by Mark L. Schwarcz, 191; Observation Point, by Miro Radojcic, 193; U. S. Open, 197; Larry Evans on Chess ... PRESIDENT: Marshall Rohland. VICE-PRESIDENT: Isaac Kashdan. REGIONAL VICE-PRESIDENTS: NEW ENGLAND: James Bolton, Harold Dondis, Eli Bourdon. EASTERN: Robert LaBelle, Lewis E. Wood, Michael Raimo. MID-ATLANTIC: Earl Clary, Steve Carruthers, Robert Erkes. SOUTHERN: Philip Lamb, Peter Lahde, Carroll M. Crull. GREAT LAKES: Donald W. Hilding, Dr. Harvey McClellan, V. E. Vandenburg. -

Monarch Assurance International Open Chess

Isle of Man (IoM) Open. The event of 2016 definitely got the Isle of Man back on the international chess map! The Isle of Man (IoM) Open has been played under three different labels: Monarch Assurance International Open Chess Tournament at the Cherry Orchard Hotel (1st-10th), later Ocean Castle Hotel (11th-16th), always in Port Erin (1993 – 2007, in total 16 annual editions); PokerStars Isle of Man International (2014 & 15) in the Royal Hall at the Villa Marina in Douglas; Chess.com Isle of Man International (since 2016) in the Royal Hall at the Villa Marina in Douglas. The Isle of Man is a self-governing Crown dependency in the Irish Sea between England and Northern Ireland. The island has been inhabited since before 6500 BC. In the 9th century, Norsemen established the Kingdom of the Isles. Magnus III, King of Norway, was also known as King of Mann and the Isles between 1099 and 1103. In 1266, the island became part of Scotland and came under the feudal lordship of the English Crown in 1399. It never became part of the Kingdom of Great Britain or its successor the United Kingdom, retaining its status as an internally self-governing Crown dependency. http://iominternationalchess.com/ For a small country, sport in the Isle of Man plays an important part in making the island known to the wider world. The principal international sporting event held on the island is the annual Isle of Man TT motorcycling event: https://en.wikipedia.org/wiki/Sport_in_the_Isle_of_Man#Other_sports The Isle of Man also organized the 1st World Senior Team Chess Championship, in Port Erin, Isle of Man, 5-12 October 2004: http://www.saund.co.uk/britbase/worldseniorteam2004/ Korchnoi, who had to hurry to the forthcoming 2004 Chess Olympiad at Calvià, agreed to play the first four days for the team of Switzerland, which finally took the bronze medal, performing at 3.5/4, drawing vs. -

FIDE Trainers' Commission (TRG) 49 Meeting (05.09.2016) Minutes

FIDE Trainers’ Commission (TRG) 49th Meeting (05.09.2016) Minutes & Decisions. The Meeting was held during the 42nd Chess Olympiad, in Baku, Azerbaijan. The councillors U.Boensch, J.Petronic and A.Petrosian were informed and voted via Internet. Present: Chairman: A.Michalschishin (SLO), Secretary: E.Grivas (GRE). The Chairman and the Secretary informed the panel on the various projects and achievements of the Commission and answered the various questions and proposals. 1. Applications for Trainers: The Council received relevant applications (Annexes: TRG-01-Application) by the: 1.1. Cuban Chess Federation: FIDE Senior Trainer: Lazaro Antonio Bueno Perez. 1.2. Bulgarian Chess Federation: FIDE Senior Trainer: Kiril Georgiev (AP-FIDE ED). 1.3. All India Chess Federation: FIDE Senior Trainer: Ramachandran Ramesh (FT). 1.4. Azerbaijan Chess Federation: FIDE Senior Trainer: Emil Sutovsky (AP-FIDE ED). 1.5. United States of America Chess Federation: FIDE Senior Trainer: Maurice Ashley. 1.6. Russian Chess Federation: FIDE Senior Trainer: Evgeniy Najer. 1.7. Russian Chess Federation: FIDE Senior Trainer: Vladimir Potkin. 1.8. Azerbaijan Chess Federation: FIDE Senior Trainer: Ilgar Bajarani. Applications - FIDE Senior Trainer/Developmental Instructor (name, date of birth, country, FIDE ID): 1. Bueno Perez Lazaro Antonio, 17.12.1956, Cuba, 3500691; 2. Georgiev Kiril, 28.11.1965, Bulgaria, 2900017; 3. Ramesh Ramachandran, 20.04.1976, India, 5002109; 4. Sutovsky Emil, 19.09.1977, Israel, 2802007; 5. Ashley Maurice, 06.03.1966, US America, 2001012; 6. Najer Evgeniy, 22.06.1977, Russia, 4118987; 7. Potkin Vladimir, 28.06.1982, Russia, 4131061; 8. Bajarani Ilgar, 21.06.1965, Azerbaijan, 13400223. TRG voted according to the Regulations and: 1.1. -

Tradewise Gibraltar Chess Festival 2017

Tradewise Gibraltar Chess Festival 2017, Monday 23 January - Thursday 2 February 2017. Round 3 Report: Thursday 26 January 2017 - by John Saunders (@JohnChess). How to Draw with Your Dad. Nine players – Mickey Adams (England), Nikita Vitiugov, Vadim Zvjaginsev (both Russia), Sam Shankland (USA), Eduardo Iturrizaga (Venezuela), Emil Sutovsky (Israel), Maxime Lagarde (France), Kacper Piorun (Poland) and Ju Wenjun (China) – share the lead on 3/3 after another tough round of chess in the Tradewise Gibraltar Masters at the Caleta Hotel in Gibraltar. The elite 2700+ players are still finding it very hard to impose themselves on the group of players rated 200 or 300 points below. Veselin Topalov picked up this point in his master class on Wednesday, stating that these days there is little or no difference in the quality of the first 10 to 15 opening moves between 2300-rated players and the elite. So the big names have to work harder to get their points, perhaps adopting a Carlsen-style approach, avoiding long, forcing lines in the opening and outplaying their opponents in endgames. This has been exemplified in Gibraltar by the only two 2700+ rated players to figure in the group on 3/3, namely Adams and Vitiugov, who have been grinding out the wins in long games. (Photo caption: Mickey Adams ground out a long win against Bogdan-Daniel Deac (Romania).) Perhaps the most enjoyable game for the audience in round three was the battle between Peter Svidler and Nitzan Steinberg. The 18-year-old IM from Israel has made quite an impression in Gibraltar, drawing with the second seed Maxime Vachier-Lagrave and now the sixth seed. -

1951 Universities Chess Annual, 2nd Issue, November 1951

THE UNIVERSITIES CHESS ANNUAL. CONTENTS (with page): The Dutch Tour, by D. V. Mardle, 2; Swansea Successes, by L. W. Barden, 4; Universities Team Championship, by D. J. Youston, 6; The Oxford Congress, by D. J. Youston, 9; Annotated Games, 15; The University Chess Clubs, 17. SECOND ISSUE, NOVEMBER, 1951. PRICE 1/9. THE BRITISH UNIVERSITIES CHESS ASSOCIATION OFFICERS, 1951-52. President: B. H. WOOD, M.Sc. Vice-Presidents: Miss Elaine Saunders, Mr. C. H. O’D. Alexander, Alderman J. N. Derbyshire, Sir L. S. Dyer Bart., Professor L. S. Penrose, Sir R. Robinson, Dr. H. C. Schenk, Sir G. A. Thomas Bart., Mr. T. H. Tylor. Chairman: R. J. TAYLER. Hon. Secretary: D. V. MARDLE, Christ’s College, Cambridge. Hon. Treasurer: D. J. YOUSTON, Hertford College, Oxford. Match Captain: P. J. OAKLEY. Regional Representatives: North: R. L. Williamson; South: J. M. Hancock; Wales: S. Usher. THE CHAMPIONS: Universities Team Champions, 1951: OXFORD. Universities Individual Champion, 1951: D. V. MARDLE. Universities Correspondence Champion, 1951: P. J. OAKLEY. CHESS, SUTTON COLDFIELD: Each month, more copies of CHESS are bought than all other British chess periodicals, duplicated or printed, fortnightly, monthly or what you will, put together. Why not take advantage of our sample offer: 25 back numbers for 5s., postage 10d. Our postal chess organisation (“Postal chess from 5s. per year”) has more members than all similar organisations within 500 miles put together. For fifteen years we have answered an average of 150 letters a day. Whatever your needs, if they are connected with chess, your enquiry will have our careful attention. -

Chess Moves.Qxp

Chess Moves. ENGLISH CHESS FEDERATION | MEMBERS’ NEWSLETTER | July 2013 EDITION. In this issue: 100 NOT OUT! - the British Chess Championships. FEATURE ARTICLES: 4NCL - the final weekend; European Schools 2013 - pictures and results; Great British Champions - The ‘Absolute Champion’ - a look back at Jonathan Penrose’s championship career; The Bunratty Chess Classic - Nick Pert was there & annotates the best games!; Minded to Succeed - Bookshelf looks at the REAL secrets of success. Pictured - 2012 BCC Champions Jovanka Houska and Gawain Jones. Photographs courtesy of Brendan O’Gorman [https://picasaweb.google.com/105059642136123716017]. CONTENTS: News 2; 100 NOT OUT! 4; Obits 9; 4NCL 11; Great British Champions - Jonathan Penrose 13; Junior Chess 18; The Bunratty Classic - Nick Pert 22; Batsford Competition 29; Chess Moves Bookshelf 30; Book Reviews - Gary Lane 35; Grand Prix Leader Boards 36; Calendar 38. News: GM Norm for Yang-Fan Zhou. Congratulations to 18-year-old Yang-Fan Zhou (far left), who won the 3rd Big Slick International (22-30 June) GM norm section with a score of 7/9 and recorded his first grandmaster norm. He finished 1½ points ahead of GMs Keith Arkell, Danny Gormally and Bogdan Lalic, who tied for second. In the IM norm section, 16-year-old Alan Merry (left) achieved his first international master norm by winning the section with an impressive score of 7½ out of 9.
An Englishman Abroad It has been a successful couple of months for England’s (and the world’s) most travelled grandmaster, Nigel Short. In the Sigeman & Co. -

FIDE Trainers Committee V22.1.2005

FIDE Training! Don’t miss the action! by International Master Jovan Petronic, Chairman, FIDE Computer & Internet Chess Committee. In 1998 FIDE formed a powerful Committee comprising leading chess trainers from around the chess globe. Accordingly, it was named the FIDE Trainers Committee. Below, I will try to summarize for readers the Committee's most useful information, its current major chess training activities, its appeals, etc. Among its main tasks in the period 1998-2002 were FIDE licensing of chess trainers and the recognition of these by the International Olympic Committee, benefiting, in the long run, all chess federations, trainers and their students. The proven benefits of playing and studying chess have led to countries, such as Slovenia, introducing chess into their compulsory school curriculum! The ASEAN Chess Academy, headed by FIDE Vice President, FM, IA & IO Ignatius Leong, organized from November 7th to 14th 2003 a Training Course under the auspices of FIDE and the International Olympic Committee! IM Nikola Karaklajic from Serbia & Montenegro provided the training. The syllabus was targeted at middle and lower levels. At the time, the ASEAN Chess Academy had a syllabus for 200 lessons of 90 minutes each! I am personally proud of having completed such a Course in Singapore. You may view the official Certificate I received here. Another Trainers’ Course was conducted by FIDE and the ASEAN Chess Academy from 12th to 17th December 2004! Extensive testing was done; here is the list of the candidates who passed the high FIDE Trainer criteria! The main lecturer was FIDE Senior Trainer Israel Gelfer. -

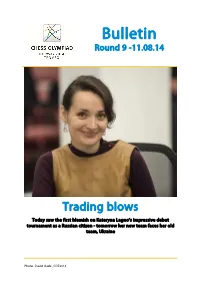

Bulletin Round 9 -11.08.14

Bulletin Round 9 - 11.08.14. Trading blows. Today saw the first blemish on Kateryna Lagno's impressive debut tournament as a Russian citizen - tomorrow her new team faces her old team, Ukraine. Photo: David Llada, COT2014. Chess Olympiad Tromsø 2014 – Bulletin Round 9 – 11.08.14. Round 9 interim report: Election focus. Today's play would have a hard time living up to the energy and excitement levels displayed in the FIDE presidential election. Even the players in action were fascinated by what they might be missing during the rounds, and one top GM wondered if it was possible to find out the latest voting news during the game. By GM Jonathan Tisdall. The story could be told in two tweets by Georgios Souleidis, the official Olympiad photographer present at the FIDE General Assembly. This was the scene as former World Champion Garry Kasparov made some final efforts to hold back the tide before the vote: And here he is after the count was over and incumbent Kirsan Ilyumzhinov had again won by a landslide: ... the items in the window were auctioned off, but the people in charge of the shop did not seem to understand that holding the event at 10 in the morning was not the way to capture the maximum chess audience. The chess: NRK TV had a board reshuffle request arranged, so that Norway 2 could also be on main cameras today, since they were facing number one seeded Russia. This means that there was a smorgasbord -

CHESS-Nov2011 Cbase.Pdf

Chess. Chess Magazine is published monthly. Founding Editor: B.H. Wood, OBE. M.Sc †. Editor: Jimmy Adams. Acting Editor: John Saunders. Executive Editor: Malcolm Pein. Subscription Rates: United Kingdom: 1 year (12 issues) £49.95, 2 year (24 issues) £89.95, 3 year (36 issues) £125.00. Europe: 1 year (12 issues) £60.00, 2 year (24 issues) £112.50, 3 year (36 issues) £165.00. USA & Canada: 1 year (12 issues) $90, 2 year (24 issues) $170, 3 year (36 issues) $250. Rest of World (Airmail): 1 year (12 issues) £72, 2 year (24 issues) £130, 3 year (36 issues) £180. Contents: Editorial: Malcolm Pein on the latest developments in chess, 4. Readers’ Letters: You have your say ... Basman on Bilbao, etc, 7. Sao Paulo / Bilbao Grand Slam: The intercontinental super-tournament saw Ivanchuk dominate in Brazil, but then Magnus Carlsen took over in Spain, 8. Cartoon Time: Oldies but goldies from the CHESS archive, 15. Sadler Wins in Oslo: Matthew Sadler is on a roll! First, Barcelona, and now Oslo. He annotates his best game while Yochanan Afek covers the action, 16. London Classic Preview: We ask the pundits what they expect to happen next month, 20. Interview: Nigel Short: Carl Portman caught up with the English super-GM in Shropshire, 22. Kasparov - Short Blitz Match: Garry and Nigel re-enacted their 1993 title match at a faster time control in Belgium.