Ivar Allan Rodriguez Hannikainen

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Gold Medal IPO 2012 Tadas Krisciunas, Lithuania

Tadas Krisciunas (Lithuania) - Gold medal at the IPO 2012 in Oslo. Topic No. 4: “And when we question whether the underlying object is such as it appears, we grant the fact that it appears, and our doubt does not concern the appearance itself but the account given of that appearance – and that is a different thing from questioning the appearance itself. For example, honey appears to us to be sweet (and this we grant, for we perceive sweetness through the senses), but whether it is also sweet in its essence is for us a matter of doubt, since this is not an appearance but a judgment about the appearance.” Sextus Empiricus, Outlines of Pyrrhonism I. 10 (2nd century AD). Among the schools of Hellenistic philosophy, one flourished that is of particular interest to anyone concerned with epistemology. The Skeptics, as they were called, combined the negative arguments of the rivaling schools of the Stoics and Epicureans and tried to disprove the possibility of knowledge. One of the key works in the tradition of Hellenistic skepticism is Sextus Empiricus’ “Outlines of Pyrrhonism.” As the title shows, in this work Sextus Empiricus tries to outline the skeptical tradition started by Pyrrho. In this essay, I am going to discuss a certain distinction made by Sextus Empiricus. The distinction is between what the philosopher calls appearances and underlying objects (D). I will try to comprehend the motivation for such a distinction and its logical consequences. However, I will also try to give some arguments against this distinction, showing how the problems the distinction addresses can be dealt with in other ways. -

The Divine Within: Selected Writings on Enlightenment Free Download

THE DIVINE WITHIN: SELECTED WRITINGS ON ENLIGHTENMENT FREE DOWNLOAD Aldous Huxley,Huston Smith | 305 pages | 02 Jul 2013 | HARPER PERENNIAL | 9780062236814 | English | New York, United States The Divine Within: Selected Writings on Enlightenment (Paperback) Born into a French noble family in southern France, Montesquieu practiced law in adulthood and witnessed great political upheaval across Britain and France. Aldous Huxley mural. Animal testing Archival research Behavior epigenetics Case The Divine Within: Selected Writings on Enlightenment Content analysis Experiments Human subject research Interviews Neuroimaging Observation Psychophysics Qualitative research Quantitative research Self-report inventory Statistical surveys. By the decree of the angels, and by the command of the holy men, we excommunicate, expel, curse and damn Baruch de Espinoza, with the consent of God, Blessed be He, and with the consent of all the Holy Congregation, in front of these holy Scrolls with the six-hundred-and-thirteen precepts which are written therein, with the excommunication with which Joshua banned The Divine Within: Selected Writings on Enlightenment[57] with the curse with which Elisha cursed the boys [58] and with all the curses which are written in the Book of the Law. Huxley consistently examined the spiritual basis of both the individual and human society, always seeking to reach an authentic and The Divine Within: Selected Writings on Enlightenment defined experience of the divine. Spinoza's Heresy: Immortality and the Jewish Mind. And when I read about how he decided to end his life while tripping on LSD I thought that was really heroic. Miguel was a successful merchant and became a warden of the synagogue and of the Amsterdam Jewish school. -

Constraints on an External Reality Under the Simulation Hypothesis

Constraints on an external reality under the simulation hypothesis Max Hodak (Originally: 9 May 2020, Last Revised: 14 November 2020) Abstract Whether or not we are living in a simulation has become the subject of much lighthearted conjecture. Despite it being often considered an unfalsifiable question, here I present an argument that places strong constraints on the nature of any universe that contains ours as a “simulation,” and I argue that these constraints are so strict that the most likely conclusion is that we are not living in a simulation. The crux of the idea is that almost any external world-simulation interface allows for Lorentz violations, and that these cannot be remedied in a simulation that implements quantum mechanics as we observe it. These arguments do not preclude all possibility that we are living in a simulation, but they impose significant constraints on what such a thing could mean or how the physics of the “external” universe must work. 1 Assumptions The analysis that follows requires making three key assumptions. Assumption C. The state of a valid universe must be internally consistent at all times. Consistency is an essential property of any mathematically-describable physics; when we discover contradiction implied by our mathematics, we know that something must be wrong. … all practical purposes.[1–3] Assumption A. A simulation should be somehow accessible to its creators. Similarly, this is difficult to formalize fully generally, but for a quantum mechanical universe like ours with Hermitian observables, we can say something like the space of positive operator-valued measures available to the creators of the simulation is not empty; that is, they have some way to measure -

A Critical Engagement of Bostrom's Computer Simulation Hypothesis

A Critical Engagement of Bostrom’s Computer Simulation Hypothesis Norman K. Swazo, Ph.D., M.H.S.A. Professor of Philosophy and Director of Undergraduate Studies North South University Plot 15, Block B, Bashundhara R/A Dhaka 1229, Bangladesh Email: [email protected]; [email protected] Abstract In 2003, philosopher Nick Bostrom presented the provocative idea that we are now living in a computer simulation. Although his argument is structured to include a “hypothesis,” it is unclear that his proposition can be accounted as a properly scientific hypothesis. Here Bostrom’s argument is engaged critically by accounting for philosophical and scientific positions that have implications for Bostrom’s principal thesis. These include discussions from Heidegger, Einstein, Heisenberg, Feynman, and Dreyfus that relate to modelling of structures of thinking and computation. In consequence of this accounting, given that there seems to be no reasonably admissible evidence to count for the task of falsification, one concludes that the computer simulation argument’s hypothesis is only speculative and not scientific. Keywords: Bostrom; simulation hypothesis; Heidegger; Dreyfus; Feynman; falsifiability 1. Introduction In a paper published in 2003, Nick Bostrom argued that at least one of several propositions is likely to be true: (1) the human species is very likely to go extinct before reaching a “posthuman” stage, i.e., (f_p ≈ 0); (2) any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof), i.e., (f_I ≈ 0); (3) we are almost certainly living in a computer simulation, i.e., (f_sim ≈ 1). (Bostrom 2003) Proposition (3) is characterized as the simulation hypothesis, thus only a part of Bostrom’s simulation argument. -

2020 WWE Finest

BASE BASE CARDS 1 Angel Garza Raw® 2 Akam Raw® 3 Aleister Black Raw® 4 Andrade Raw® 5 Angelo Dawkins Raw® 6 Asuka Raw® 7 Austin Theory Raw® 8 Becky Lynch Raw® 9 Bianca Belair Raw® 10 Bobby Lashley Raw® 11 Murphy Raw® 12 Charlotte Flair Raw® 13 Drew McIntyre Raw® 14 Edge Raw® 15 Erik Raw® 16 Humberto Carrillo Raw® 17 Ivar Raw® 18 Kairi Sane Raw® 19 Kevin Owens Raw® 20 Lana Raw® 21 Liv Morgan Raw® 22 Montez Ford Raw® 23 Nia Jax Raw® 24 R-Truth Raw® 25 Randy Orton Raw® 26 Rezar Raw® 27 Ricochet Raw® 28 Riddick Moss Raw® 29 Ruby Riott Raw® 30 Samoa Joe Raw® 31 Seth Rollins Raw® 32 Shayna Baszler Raw® 33 Zelina Vega Raw® 34 AJ Styles SmackDown® 35 Alexa Bliss SmackDown® 36 Bayley SmackDown® 37 Big E SmackDown® 38 Braun Strowman SmackDown® 39 "The Fiend" Bray Wyatt SmackDown® 40 Carmella SmackDown® 41 Cesaro SmackDown® 42 Daniel Bryan SmackDown® 43 Dolph Ziggler SmackDown® 44 Elias SmackDown® 45 Jeff Hardy SmackDown® 46 Jey Uso SmackDown® 47 Jimmy Uso SmackDown® 48 John Morrison SmackDown® 49 King Corbin SmackDown® 50 Kofi Kingston SmackDown® 51 Lacey Evans SmackDown® 52 Mandy Rose SmackDown® 53 Matt Riddle SmackDown® 54 Mojo Rawley SmackDown® 55 Mustafa Ali Raw® 56 Naomi SmackDown® 57 Nikki Cross SmackDown® 58 Otis SmackDown® 59 Robert Roode Raw® 60 Roman Reigns SmackDown® 61 Sami Zayn SmackDown® 62 Sasha Banks SmackDown® 63 Sheamus SmackDown® 64 Shinsuke Nakamura SmackDown® 65 Shorty G SmackDown® 66 Sonya Deville SmackDown® 67 Tamina SmackDown® 68 The Miz SmackDown® 69 Tucker SmackDown® 70 Xavier Woods SmackDown® 71 Adam Cole NXT® 72 Bobby -

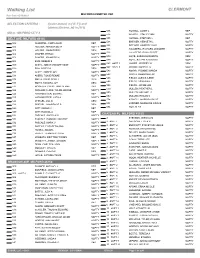

Walking List CLERMONT MILFORD EXEMPTED VSD Run Date:05/19/2021

Walking List CLERMONT MILFORD EXEMPTED VSD Run Date:05/19/2021 SELECTION CRITERIA : ({voter.status} in ['A','I']) and {district.District_id} in [112] 535 HOWELL, JANET L REP MD-A - MILFORD CITY A 535 HOWELL, STACY LYNN NOPTY BELT AVE MILFORD 45150 535 HOWELL, STEPHEN L REP 538 BREWER, KENNETH L NOPTY 502 KLOEPPEL, CODY ALAN REP 538 BREWER, KIMBERLY KAY NOPTY 505 HOLSER, AMANDA BETH NOPTY 538 HALLBERG, RICHARD LEANDER NOPTY 505 HOLSER, JOHN PERRY DEM 538 HALLBERG, RYAN SCOTT NOPTY 506 SHAFER, ETHAN NOPTY 539 HOYE, SARAH ELIZABETH DEM 506 SHAFER, JENNIFER M NOPTY 539 HOYE, STEPHEN MICHAEL NOPTY 508 BUIS, DEBBIE S NOPTY 542 #APT 1 LANIER, JEFFREY W DEM 509 WHITE, JONATHAN MATTHEW NOPTY 542 #APT 3 MASON, ROBERT G NOPTY 510 ROA, JOYCE A DEM 543 AMAYA, YVONNE WANDA NOPTY 513 WHITE, AMBER JOY NOPTY 543 NORTH, DEBORAH FAY NOPTY 514 AKERS, TONIE RENAE NOPTY 546 FIELDS, ALEXIS ILENE NOPTY 518 SMITH, DAVID SCOTT DEM 546 FIELDS, DEBORAH J NOPTY 518 SMITH, TAMARA JOY DEM 546 FIELDS, JACOB LEE NOPTY 521 MCBEATH, COURTTANY ALENE REP 550 MULLEN, HEATHER L NOPTY 522 DUNHAM CLARK, WILMA LOUISE NOPTY 550 MULLEN, MICHAEL F NOPTY 525 HACKMEISTER, EDWIN L REP 550 MULLEN, REGAN N NOPTY 525 HACKMEISTER, JUDY A NOPTY 554 KIDWELL, MARISSA PAIGE NOPTY 526 SPIEGEL, JILL D DEM 554 LINDNER, MADELINE GRACE NOPTY 526 SPIEGEL, LAWRENCE B DEM 554 ROA, ALEX NOPTY 529 WITT, AARON C REP 529 WITT, RACHEL A REP CHATEAU PL MILFORD 45150 532 PASCALE, ANGELA W NOPTY 532 PASCALE, DOMINIC VINCENT NOPTY 2 STEVENS, JESSICA M NOPTY 532 PASCALE, MARK V NOPTY 2 #APT 1 CHURCHILL, REX -

Schwitzgebel May 12, 2014 1% Skepticism, P. 1 1% Skepticism Eric

1% Skepticism Eric Schwitzgebel Department of Philosophy University of California at Riverside Riverside, CA 92521-0201 eschwitz at domain: ucr.edu May 12, 2014 Abstract: A 1% skeptic is someone who has about a 99% credence in non-skeptical realism and about a 1% credence in the disjunction of all radically skeptical scenarios combined. The first half of this essay defends the epistemic rationality of 1% skepticism, appealing to dream skepticism, simulation skepticism, cosmological skepticism, and wildcard skepticism. The second half of the essay explores the practical behavioral consequences of 1% skepticism, arguing that 1% skepticism need not be behaviorally inert. Certainly there is no practical problem regarding skepticism about the external world. For example, no one is paralyzed from action by reading about skeptical considerations or evaluating skeptical arguments. Even if one cannot figure out where a particular skeptical argument goes wrong, life goes on just the same. Similarly, there is no “existential” problem here. Reading skeptical arguments does not throw one into a state of existential dread. One is not typically disturbed or disconcerted for any length of time. One does not feel any less at home in the world, or go about worrying that one’s life might be no more than a dream (Greco 2008, p. 109). [W]hen they suspended judgement, tranquility followed as it were fortuitously, as a shadow follows a body…. [T]he aim of Sceptics is tranquility in matters of opinion and moderation of feeling in matters forced upon us (Sextus Empiricus, c. -

The Modal Future

The Modal Future: A theory of future-directed thought and talk. Cambridge University Press. Fabrizio Cariani. Contents: Preface; Conventions and abbreviations; Introduction. PART ONE: BACKGROUND. 1 The symmetric paradigm: 1.1 The symmetric paradigm; 1.2 Symmetric semantics; 1.3 The symmetric paradigm contextualized; 1.4 Temporal ontology and symmetric semantics; Appendix to chapter 1: the logic Kt. 2 Symmetric semantics in an asymmetric world: 2.1 Branching metaphysics; 2.2 Branching models; 2.3 Symmetric semantics on branching models; 2.4 Ways of being an Ockhamist; 2.5 Interpreting branching models. PART TWO: THE ROAD TO SELECTION SEMANTICS. 3 The modal challenge: 3.1 What is a modal?; 3.2 The argument from common morphology; 3.3 The argument from present-directed uses; 3.4 The argument from modal subordination; 3.5 The argument from acquaintance inferences; 3.6 Morals and distinctions. 4 Modality without quantification: 4.1 Quantificational theories; 4.2 Universal analyses and retrospective evaluations; 4.3 Prior’s bet objection; 4.4 The zero credence problem; 4.5 Scope with negation; 4.6 Homogeneity; 4.7 Neg-raising to the rescue? 5 Basic selection semantics: 5.1 Selection semantics: a first look; 5.2 Basic versions of selection semantics; 5.3 Notions of validity: a primer; 5.4 Logical features of selection semantics; 5.5 Solving the zero credence problem; 5.6 Modal subordination; 5.7 Present-directed uses of will; 5.8 Revisiting the acquaintance -

BASE BASE CARDS 1 AJ Styles Raw 2 Aleister Black Raw 3 Alexa Bliss Smackdown 4 Mustafa Ali Smackdown 5 Andrade Raw 6 Asuka Raw 7

BASE BASE CARDS 1 AJ Styles Raw 2 Aleister Black Raw 3 Alexa Bliss SmackDown 4 Mustafa Ali SmackDown 5 Andrade Raw 6 Asuka Raw 7 King Corbin SmackDown 8 Bayley SmackDown 9 Becky Lynch Raw 10 Big E SmackDown 11 Big Show WWE 12 Billie Kay Raw 13 Bobby Lashley Raw 14 Braun Strowman SmackDown 15 "The Fiend" Bray Wyatt SmackDown 16 Brock Lesnar Raw 17 Murphy Raw 18 Carmella SmackDown 19 Cesaro SmackDown 20 Charlotte Flair Raw 21 Daniel Bryan SmackDown 22 Drake Maverick SmackDown 23 Drew McIntyre Raw 24 Elias SmackDown 25 Erik 26 Ember Moon SmackDown 27 Finn Bálor NXT 28 Humberto Carrillo Raw 29 Ivar 30 Jeff Hardy WWE 31 Jey Uso SmackDown 32 Jimmy Uso SmackDown 33 John Cena WWE 34 Kairi Sane Raw 35 Kane WWE 36 Karl Anderson Raw 37 Kevin Owens Raw 38 Kofi Kingston SmackDown 39 Lana Raw 40 Lacey Evans SmackDown 41 Luke Gallows Raw 42 Mandy Rose SmackDown 43 Naomi SmackDown 44 Natalya Raw 45 Nia Jax Raw 46 Nikki Cross SmackDown 47 Peyton Royce Raw 48 Randy Orton Raw 49 Ricochet Raw 50 Roman Reigns SmackDown 51 Ronda Rousey WWE 52 R-Truth Raw 53 Ruby Riott Raw 54 Rusev Raw 55 Sami Zayn SmackDown 56 Samoa Joe Raw 57 Sasha Banks SmackDown 58 Seth Rollins Raw 59 Sheamus SmackDown 60 Shinsuke Nakamura SmackDown 61 Shorty G SmackDown 62 Sonya Deville SmackDown 63 The Miz SmackDown 64 The Rock WWE 65 Triple H WWE 66 Undertaker WWE 67 Xavier Woods SmackDown 68 Zelina Vega Raw 69 Adam Cole NXT 70 Angel Garza NXT 71 Angelo Dawkins Raw 72 Bianca Belair NXT 73 Boa NXT 74 Bobby Fish NXT 75 Bronson Reed NXT 76 Cameron Grimes NXT 77 Candice LeRae NXT 78 Damian -

March 2020 Achievers

U.S. and Affiliates, Bermuda and Bahamas District 1 A NOEL MASON BART SMITH District 1 BK PAUL BUTT BOB HENNESSY EVAN SHOCKLEY District 1 CN MITCH BUEHLER District 1 CS CHRIS CLARK MICHEL DAMMERMAN DARREL GRATHWOHL BILLY KELLEY BRENDA MC CLUSKIE GARY SWEITZER ANGANETTA TERRY LISA TOMAZZOLI ALAN WATT EILEEN WILKINS District 1 D VERNON DAY RONALD FLUEGEL RICHARD HOLMES MARY MENDOZA DARYL ROSENKRANS PAUL SHADDICK MONIQUE WEAVER District 1 F THOMAS FREMGEN ROBERT MC CARTY District 1 G HAROLD DEHART MARY TWADDLE District 1 H DARRELL PIERCE SARA ROBISON District 1 J SUSAN BRUNEAU MICHELE NEEDHAM DANIEL PURCELL District 1 M JOE ELKS JAMES FERRERO PAULA RIDER MIKE WALKER District 10 U.S. and Affiliates, Bermuda and Bahamas RONALD BEACOM MARK BOSHAW DAN CRANE WILLIAM NELSON District 11 A1 LINDA CLARK District 11 A2 SONNY EPLIN SANDRA ROACH District 11 B2 MARSHA BROWN DONALD BROWN PAUL SCHINCARIOL THOMAS SHOEMAKER District 11 C1 RONALD HANSEN JOAN HEINZ LARRY HOLSTROM JENNIFER STEWARD WILLIAM SUTTON JOHN SUTTON District 11 C2 CHRISTINA ANDRE YVONNE CHRISTOPHER WILLIAMS District 11 D1 ADAM ANDERSON GARY BURROWS JASON LUOKKA District 11 D2 BRUCE BRONSON KATHERINE KELLY LARRY LATOUF WILLIAM SAMPSON DEBRA STEEB DAVID THUEMMEL District 12 L DANNY TACKER District 12 N LAURA BAILEY WILLIAM MCDONALD DAVID WATSON District 12 O JUDY BRATCHER CARLDEAN CARMACK JANIE HUGHES PETER LEGRO District 12 S SEAN DRIVER TONY GRIFFITH KATIE GUTHRIE-SHEARIN JEFF HARDY U.S. and Affiliates, Bermuda and Bahamas JASPER SMITH ANDREA WILSON District 13 OH1 MICHEAL GIBBS REGINALD -

Knowing and Unknowing Reality – a Beginner's and Expert's Developmental Guide to Post-Metaphysical Thinking

Knowing and Unknowing Reality – A Beginner's and Expert's Developmental Guide to Post-Metaphysical Thinking. Tom Murray. Table of Contents: Preface; Introduction and Some Foundations; The Metaphysics to Come; A Developmental Perspective; The Meaning-making Drive and Unknowing; Magical, Mystical, and Metaphysical Thinking; Meaning-making in Magical, Mythical, and Rational Thinking; Mysticism and Logic; Metaphysical Thinking and Action Logics; Interlude: Three Historical Arcs; (1) The Rise and Decline of Rationality; (2) A Brief History of Belief Fallibility; (3) An Evolution in Understanding Ideas vs. the Real; A Philosopher's Knot – Knowing and Being Entangled; Is there a God? – Philosophers as Under-laborers; Two Truths: One Problem; Truth, Belief, Vulnerability, and Seriousness; Contours of the Real; What is Really Real?; Constructing the Real; Reification and Misplaced Concreteness; Embodied Cognition and Epistemic Drives; From 4th into 5th Person Perspective; Embodied Realism and Metaphorical Pluralism; Epistemic Drives; Phenomenology and Infinity; Conclusions and Summary; References; Appendix – Developmental Basics. Tom Murray is Chief Visionary and Instigator at Open Way Solutions LLC, which merges technology with integral developmental theory, and is also a Senior Research Fellow at the University of Massachusetts School of Computer Science. He is an Associate Editor at Integral Review, is on the editorial review board of the International Journal of Artificial Intelligence in Education, and has published articles on developmental theory and integral theory as they relate to wisdom skills, education, contemplative dialog, leadership, ethics, knowledge building communities, epistemology, and post-metaphysics. -
