Implementing Mobile Document Capture with IBM Datacap Software

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Ilmartin Seeks Student I Edmund J

youngstown state university youngstown, Ohio tuesday, October 14,1980 vol. 62 - no. 6 i£||||§ by Lynn Alexander until they have conclusive results. lllllll Hie Environmental Protection Phil Rogers, University Health illlil Agency (EPA) has determined and Safety Officer, is heading the that the trinitroflorinone (TNF) investigation at YSU. He says used in IBM photocopying report should be ready within two Hi machines, six of which are used weeks. on campus, may cause cancer, Although the EPA has.. not Hiilli according to an ' EPA report. in ruled whether TNF is hamful to mm Computerworld magazine humans, it is known that the (9/8/80). liguid and vapor form of the Dan Scudier, Cluef Steward of chemical cause gene mutations the Ohio Civil Service Employee and tumors in laboratory animals, Union (OCSEA) and member of Computerworld has followed the University Safety Committee the TNF controversy in a series contends, "TNF -exposure is po• of September articles: tentially dangerous to many mem• IBM officials have know for bers of the University community. ten years that TNF is a carcinogen OSCEA has urged discontinuing (cancer-causing substance), but use of the IBM copiers until a did not report its use in copier safe replacement for TNF can be models I and II, which were intro• found. duced in 1970, until this spring. "I have been told the Frank Cary, IBM chairman, University is investigating TNF in a September 9 letter to the Robert Burnside, freshman, F & PA, practices his marksmanship in the Beeghly rifle range. (Photo by but thus far I have received no editor of Computerworld, Karen McDowall) reports on what they have says IBM was under no legal uncovered." obligation to disclose the infor• Ilmartin seeks student i Edmund J. -

University Librarian President 1975

Annual Report, 1975/1976 Authors University of Arizona Library; Laird, W. David Publisher University of Arizona Library (Tucson, AZ) Download date 06/10/2021 10:30:06 Link to Item http://hdl.handle.net/10150/124702 JiNNUAL REPORT OF THE UNIVERSITY LIBRARIAN TO THE PRESIDENT FOR ThE 1975 - 1976 YEAR UNIVERSITY OF ARIZONA LIBRARY TUCSON, ARIZONA SUMMARY 1975/76 was the year of anticipation. The first major event of the year was the installation of computer terminals tying us to the 2 million plus bibliographic records at the Ohio College Library Center (OCLC) in Columbus. This hookup, as part of the ANIGOS Biblio- graphic Network based in Texas, was scheduled for September but training, programming and computer problems kept us in a state of suspense for more than four months, and by the time our first "up" day arrived in November we had anticipated ourselves into a processing backlog that will take many months from which to recover. In the long run the wait will be, and has been, worthwhile. By January we were using the computer to process as many books per month as we had been able to do with our former manual system. By April we processed double the number of books we could have produced with our manual system; and we expect that, in general, we can maintain this higher level of processing. But, as with any "assembly line type" of process, increased production in one area is not necessarily an unmixed blessing. Cataloging twice as many books means having twice as many cards to file into our catalogs; it means twice as many books to label, stamp, mark with call numbers, produce circulation cards for, send to the bindery, and on and on. -

HP Laserjet – the Early History Jim Hall

HP LaserJet – The Early History Jim Hall Foreword by John Minck If you're making a Top-10 list of inventions that changed history, you'd probably include the internal combustion engine, the transistor, the atom bomb(?), the generic cell phone, the telephone, the PC, among others. But you'd be hard pressed NOT to add the HP LaserJet printer. Its production numbers in 2006 surpassed 100 million. Arguably, the Father of the LaserJet would have to be Jim Hall, of HP's Boise Division. Jim joined the 1972 Microwave Division from GE, Lynchburg, where he worked on microwave communications gear. After several years at MWD, he transferred to the Computer Group Boise Division as it embarked on computer printing products, and began a remarkable leadership quest for the ultimate in personal printing products. Much has been written about the HP Laserjet success, but here is the story direct from the man and his team which made it happen. There were other contributors, including HP Labs, and of course, the Canon laser engine, but the Boise Division should get the kudos for persevering to an amazing and enduring product line. Invention, Indeed! Introduction for granted today with computers and printing was still the stuff of scientific fiction. Why write a LaserJet History? Over the past 15 years, various books and articles have given bits and pieces In the mid-70‟s mainframe computing was starting to of HP LaserJet history and dissected the reasons for fade and the minicomputer market (Digital its market and business success. Most1,2,3 have been Equipment, HP, Data General) was exploding. -

Administration De Maximo Asset Management

IBM Maximo Asset Management Version 7 Edition 6 Administration de Maximo Asset Management IBM Important Avant d'utiliser le présent document et le produit associé, prenez connaissance des informations générales figurant à la section «Remarques», à la page 523. La présente édition s'applique à la version 7.6.0.6 d'IBM Maximo Asset Management, ainsi qu'à toutes les versions et modifications ultérieures, sauf indication contraire dans les nouvelles éditions. LE PRESENT DOCUMENT EST LIVRE EN L'ETAT SANS AUCUNE GARANTIE EXPLICITE OU IMPLICITE. IBM DECLINE NOTAMMENT TOUTE RESPONSABILITE RELATIVE A CES INFORMATIONS EN CAS DE CONTREFACON AINSI QU'EN CAS DE DEFAUT D'APTITUDE A L'EXECUTION D'UN TRAVAIL DONNE. Ce document est mis à jour périodiquement. Chaque nouvelle édition inclut les mises à jour. Les informations qui y sont fournies sont susceptibles d'être modifiées avant que les produits décrits ne deviennent eux-mêmes disponibles. En outre, il peut contenir des informations ou des références concernant certains produits, logiciels ou services non annoncés dans ce pays. Cela ne signifie cependant pas qu'ils y seront annoncés. Pour plus de détails, pour toute demande d'ordre technique, ou pour obtenir des exemplaires de documents IBM, référez-vous aux documents d'annonce disponibles dans votre pays, ou adressez-vous à votre partenaire commercial. Vous pouvez également consulter les serveurs Internet suivants : v http://www.fr.ibm.com (serveur IBM en France) v http://www.ibm.com/ca/fr (serveur IBM au Canada) v http://www.ibm.com (serveur IBM aux Etats-Unis) Compagnie IBM France Direction Qualité 17, avenue de l'Europe 92275 Bois-Colombes Cedex © Copyright IBM France 2016. -

IBM Rational Rhapsody Case Studies

IBM Rational Rhapsody IBM Rational Rhapsody Case Studies © 2011 IBM Corporation Software and Systems Engineering | Rational Tap into Pools of Productivity To free up time for innovation with automation & integration IBM Collaborative Average Developer time lost to delays in designDesign completion Management Traditional approach 55.3 person-months World Model-driven 29.6 person-months Project savings $257,000 development (MDD) World MDD with 20.0 person-months Project savings $353,000 IBM Rational Source: 2011 EMF Study A move to architecture & design best practices and integrated solutions will significantly free up time for innovation © 2011 IBM Corporation Software and Systems Engineering | Rational Embedded Market Leadership VDC data shows IBM/Rational as the market leader of standard language-based, embedded software / system modeling tools for the 2011 calendar year*. IBM/Rational 74% Others 26% 0% 20% 40% 60% 80% percent of dollars 2011 •Source: 2012 Software & System Lifecycle Management Tools Market Intelligence Service, Volume 1: Software & System Modeling Tools – April 2012, VDC Research © 2011 IBM Corporation 3 4 Software and Systems Engineering | Rational The Quantified RoI (1 of 2) Business Challenge Rational Solution Business benefit Examples in italics below, Customer Examples (Product/Feature) (links to references) Improve productivity across product Automated generation of full production code for C, C++, Java, and - Speed and time to value Alps Electric Co., Ltd. development team Ada allows developers to focus on model level structural and behavioral design - Development cut by 50-60% - Faster delivery of software components that are in Seamless host/target development enabling development and test synch with the design before hardware available Valtech India Systems Pvt. -

Technology Standards and Products Guide Version 7.10 ● 9/13/2021

Technology Standards and Products Guide Version 7.10 ● 9/13/2021 FINAL Technology Standards and Products Guide Revision History Revision History VERSION DATE DESCRIPTION 1.0 November 2, 2001 • Provided general updates under TO 55. 2.0 January 25, 2002 • Reformatted document to align with the Department of Education Policy document. • Updated document to reflect new standards and products. 2.1 February 12, 2002 • Updated document with client feedback. • Renamed document title. 2.2 March 29, 2002 • Included updates from 1/15/2002 to 3/15/2002 in Federal Student Aid standards, products, and policies. • Added an Application Development section. 2.3 June 30, 2002 • Incorporated planned ITA upgrades • Added an Executive Summary. • Added Mobile Devices to Network Services section. • Added External Connections to External Environment section. • Updated several version numbers. 3.0 September 27, 2002 • Updated to include minor version number changes and other architectural changes. Reflects all updates through revision date. 3.1 April 1, 2003 • Updated to include minor version number changes and other architectural changes. Reflects all updates through revision date. 4.0 August 1, 2003 • Updated to include minor version number changes and other architectural changes. Reflects all updates through revision date. 4.1 August 2004 • Updated to include minor version number changes and other architectural changes. Reflects all updates through revision date. • Replaced Consistent Data with Data Strategy section. Reflects all updates through revision date. 4.2 May 2005 • Introduced the Metadata management category, added products to be provided with Advance and CSB contracts. Version: 7.10 2 9/13/2021 Technology Standards and Products Guide Revision History VERSION DATE DESCRIPTION 5.0 December 2005 • Populated the Metadata management category. -

Establishment of a Sectoral Fertilizer Documentation and Information Unit for the Arab Region at AFCFP in Kuwait DP/RAB/78/021/11—01/B

OCCASION This publication has been made available to the public on the occasion of the 50th anniversary of the United Nations Industrial Development Organisation. DISCLAIMER This document has been produced without formal United Nations editing. The designations employed and the presentation of the material in this document do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations Industrial Development Organization (UNIDO) concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries, or its economic system or degree of development. Designations such as “developed”, “industrialized” and “developing” are intended for statistical convenience and do not necessarily express a judgment about the stage reached by a particular country or area in the development process. Mention of firm names or commercial products does not constitute an endorsement by UNIDO. FAIR USE POLICY Any part of this publication may be quoted and referenced for educational and research purposes without additional permission from UNIDO. However, those who make use of quoting and referencing this publication are requested to follow the Fair Use Policy of giving due credit to UNIDO. CONTACT Please contact [email protected] for further information concerning UNIDO publications. For more information about UNIDO, please visit us at www.unido.org UNITED NATIONS INDUSTRIAL DEVELOPMENT ORGANIZATION Vienna International Centre, P.O. Box 300, 1400 Vienna, Austria Tel: (+43-1) 26026-0 · www.unido.org · [email protected] 8 I 2 5 ' 12.2 1.25 RESTRICTED \ o m 20 June 1981 English Establishment of a Sectoral Fertilizer Documentation and Information Unit for the Arab Region at AFCFP in Kuwait DP/RAB/78/021/11—01/B Technical report and final mission report Prepared for the Government of Arab States by the United Nations Industrial Development Organization, executing agency for the United Nations Development Programme Based on the work of Horst G. -

SEYBOLD SEMINARS '88 PS/2 Model 80

VVVVVV'STV>VVVV iy *)H8 CO cm =&: cr« L QJ X3 I/I X V h~ u r-J «r* L O a O • r"l o Hi c ui rH o ,— !L •M 01 3 Ll c z: 13 <r cr- m O C-4 □ C CO C CM Hi Ilj *3“ nr. CO {— X CO co r- CO nu 73 LO .5 rS o sO (N S O Os CO < 173 w *73 £ sT wH ja <N • 0 <N 13 Os SO If you’re serious about publishing on publishing Systems Report Volume 17, N**'9 ISSN: 0736-7160 January 31, 1988 The Fourth vyavcT Publishing Joins «nk to Scitex. dobes PostScript Way and tc The Mainstream Wm recorder. ^rSf,XvS»ot a version of -nieatespage ‘"O^SjSif’ USD°S . ve been performed «°r system. £.„„inns have / Son MicRosysT don m New York. yvision, S**'^att^c^Vin^SgrcssiVclyto^ . pecialists using equipmen ^ tions dedicated to wd Kevin software. This week. ,> at,Ve to Microsofrl o?!^00 the Unix 20 on and ’$££* °f «* is becoming duties with •rmen Scitex stock. / Our ^ on^ “ d"h°P 2» ssrffsfssa. booking at Unix? Be t h„ve left Xyvision and ^SssSSSsbinStiSisa and BiU Solimenos tnoug S'rT: Tbe A« Larry — commercial users. °* Composition » APPle^Ci»n.-e«^ 8 ment agree . computer | pn& / Imposition—the p Equipment and Appl d Jop. h»'“r^tieEm.oshes rmSy“"OT“ktdDEC » __>«-»,« apaMltfe do w j;*« ~ VAX computers. / c JO°* for anW ...l . _ "*>"*6 page 8. _ Hoolahan leaves -ili ,v* °cimnars. — 22 aT8cT tefbuy 10% of Sun *»* S SS KanildcPostScnpt 25 Ncw*owfotmag50S ferences & Exhibits -20 26 Xerox get ^ Crosfield number of '%bold ^minarsSeminar* for Rust, Torregianni join Hell “mducB. -

Spartan Daily

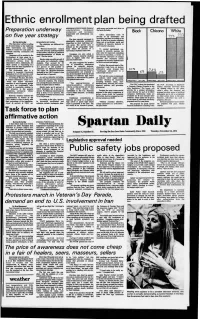

Ethnic enrollment plan being drafted admissions criteria, family financial with money put aside each term on considerations, inadequate the basis of grades. Preparation underway elementary and secondary Black Chicano White preparation and institutional con- CSUC universities train 70 straints. on five year strategy percent of all elementary and 7/7, secondary teachers according to the The plan requests campuses to plan. It calls for a greater emphasis statistically determine enrollment By Don McCarthy dergraduates are women. being put on particular needs and disparities in their individual ser- The chancellor's office is calling problems of minority students in The statistics are different for vice areas. The service area is on all universities in the California CSUC teacher education. minorities. defined as the geographic range State Universities and Colleges "Mexican-Americans remain from which 80 percent of a system to create five year plans To combat institutional con- to the most severely underrepresented university's students come from. increase minority enrollment and straints to minority success, the in the system, but there is continued plan calls for improved counseling retention. to underrepresentation of Blacks as Campuses are also called services to minorities, a review of Campuses will be assisted in the minority students well," the plan states. identify reasons the minority relevance of campus development of their plans by a rates than the leave at higher libraries and increased career recently released Plan for Student Blacks make up eight percent of general student body. Affirmative Action, from the 12th grade and community college programs for minority students such chancellor's office. -

Professional Cv

PROFESSIONAL CV OF MO M. JAMSHIDI, Ph.D., DEng. (h.c.) Fellow-Institute of Electrical and Electronic Engineers Fellow-American Society of Mechanical Engineers Fellow-American Association for the Advancement of Science Associate Fellow-American Institute for Aeronautics and Astronautics Fellow-New York Academy of Science Fellow-TWAS (The World Academy of Sciences) Foreign Member Hungarian Academy of Engineering Foreign Member Russian Academy of Nonlinear Sciences Work Phone: 1 210 458 7074 Work Fax: 1 210 458 5947 Emails: [email protected], [email protected] ACE Labs site: https://acelab.wixsite.com/acelab Revised in January 2019 PRESENT POSITIONS: Lutcher Brow Endowed Distinguished Chair, Department of Electrical and Computer Engineering, University of Texas, San Antonio, TX, USA (University of Texas System Position) 1 Member, Chancellor’s Council, University of Texas System, Austin, TX, 2012- Visiting Professor, Loughbrough University, Loughbrough, UK, 2014-2022. Honorary Professor, Deakin University, Australia, 2010-2018 Honorary Professor, University of Birmingham, UK, 2012-present Honorary Professor, Obuda University, Hungary, 2012- present Adjunct Professor, Loughbrough University, UK, 2014-present Founding Director, Center of Autonomous Control Engineering - ACE, 1995-present (UNM 1995-2006, ACE Laboratory UTSA 2006-present). Regents Professor Emeritus of Electrical and Computer Engineering, University of New Mexico, Albuquerque, NM USA, 2006- present. AT&T Professor Emeritus of Manufacturing Engineering, University of -

ED288536.Pdf

DOCUMENT RESUME IR 052 193 AUTHOR Hirshon, Arnold; And Others TITLE ULS Joint Committee to Study Library Periodical Serticas. Final Report. INSTITUTION Virginia Commonwealth Univ., Richmond. PUB DATE 30 Sep 86 NOTE 80p.; For a summary of decisions based on this report, see IR 052 194. PUB TYPE Viewpoints (120) -- Reports - Evaluative/Feasibility (142) -- Tests/Evaluation Instruments (160) £DRS PRICE MF01/PC04 Plus Postage. DESCRIPTORS *Academic Libraries; *Access to Information; Costs; Higher Education; *Library Circulation; Library Facilities; *Librsry Planning; Library Services; Library Surveys; *Periodicals; Questionnaires; *Reprography IDENTIFIERS *Virginia Commonwealth University ABSTRACT Developed in response to issues raised about the circulation of bound periodicals, the cost of photocopies, and the condition of the photocopiers, this report of the Joint Committee to Study Library Periodical Services at the Virginia Commonwealth University libraries recommends goals for periodicals services for both campuses of the university. Following a discussion of the background of the report and the goals for periodicals services, recommendations are presented for the following areas: (1) photocopiers, including photocopy services, Vendacard, cost per copy, and quality and number of photocopiers; (2) circulation of bound periodicals, including linking, loan policies, and tattle-tape; and (3) open access to current periodicals, including location, construction, security of materials, and circulation control. Sim additional general observations are also presented, and summaries of recommendations and proposed expenses are provided. Appendices include the charge to the committee and issues concerning access to periodicals; a 10-item bibliography; a periodicals survey of the academic and medical libraries of eight universities; cost comparisons of photocopier costs; and costs related to bound and unbound periodicals, including costs on linking, tattle-taping, etc., and renovation costs for open stacks. -

Asset Management Plan: 1998-2003. INSTITUTION Jefferson County School District R-1, Denver, CO

DOCUMENT RESUME ED 436 933 EF 005 525 TITLE Asset Management Plan: 1998-2003. INSTITUTION Jefferson County School District R-1, Denver, CO. PUB DATE 1998-03-00 NOTE 246p. AVAILABLE FROM Jefferson County Public Schools, 1829 Denver West Drive, Building 27, P.O. Box 4001, Golden, CO 80401-0001; Tel: 303-982-6500. PUB TYPE Numerical/Quantitative Data (110) Reports Descriptive (141) EDRS. PRICE MF01/PC10 Plus Postage. DESCRIPTORS *Budgets; *Capital Outlay (For Fixed Assets); Data Collection; *Educational Facilities Improvement; Elementary Secondary Education; *Financial Support; Public Schools; School District Spending; Tables (Data) IDENTIFIERS *Jefferson County School District CO ABSTRACT The Jefferson County School District (Denver, Colorado) presents its final scheduling and funding source information for the 1998-2003 capital improvement program and the status of the 1992-1997 completed capital improvement project costs compared to the budget. Funding data are detailed for each of the district's 182 public schools, special use facilities, support facilities; and community use facilities as well as any future capital improvement needs. The district's eight environmental regulatory programs are examined. Remaining sections provide assessment data and information on facility maintenance, pavement management, real property management, school and office equipment, sponsorship, technology, temporary buildings, and vehicle replacement. (GR) Reproductions supplied by EDRS are the best that can be made from the original document. Jefferson County