Towards More Scalability and Flexibility for Distributed Storage Systems

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Elastic Storage for Linux on System z, IBM Research & Development Germany

Peter Münch, T/L Test and Development – Elastic Storage for Linux on System z, IBM Research & Development Germany. Elastic Storage for Linux on IBM System z. © Copyright IBM Corporation 2014. Session objectives: This presentation introduces Elastic Storage, which is based on General Parallel File System technology and will be available for Linux on IBM System z. Understand the concepts of Elastic Storage and which functions will be available for Linux on System z. Learn how you can integrate and benefit from Elastic Storage in a Linux on System z environment. Finally, get a first impression in a live demo of Elastic Storage. Trademarks: The following are trademarks of the International Business Machines Corporation in the United States and/or other countries: AIX*, FlashSystem, Storwize*, Tivoli*, DB2*, IBM*, System p*, WebSphere*, DS8000*, IBM (logo)*, System x*, XIV*, ECKD, MQSeries*, System z*, z/VM* (* registered trademarks of IBM Corporation). The following are trademarks or registered trademarks of other companies. Adobe, the Adobe logo, PostScript, and the PostScript logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States, and/or other countries. Cell Broadband Engine is a trademark of Sony Computer Entertainment, Inc. in the United States, other countries, or both and is used under license therefrom. Intel, Intel logo, Intel Inside, Intel Inside logo, Intel Centrino, Intel Centrino logo, Celeron, Intel Xeon, Intel SpeedStep, Itanium, and Pentium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries.

Oracle® Linux Administrator's Solutions Guide for Release 6

Oracle® Linux Administrator's Solutions Guide for Release 6 E37355-64 August 2017 Oracle Legal Notices Copyright © 2012, 2017, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. No other rights are granted to the U.S.

BESIII Physical Analysis on Hadoop Platform

20th International Conference on Computing in High Energy and Nuclear Physics (CHEP2013), IOP Publishing, Journal of Physics: Conference Series 513 (2014) 032044, doi:10.1088/1742-6596/513/3/032044. BESIII Physical Analysis on Hadoop Platform. Jing HUO¹,², Dongsong ZANG¹,², Xiaofeng LEI¹,², Qiang LI¹,², Gongxing SUN¹. ¹Institute of High Energy Physics, Beijing, China; ²University of Chinese Academy of Sciences, Beijing, China. E-mail: [email protected] Abstract. In the past 20 years, computing clusters have been widely used for High Energy Physics data processing. Jobs running on a traditional cluster with a Data-to-Computing structure have to read large volumes of data via the network to the computing nodes for analysis, which makes I/O latency a bottleneck of the whole system. The new distributed computing technology based on the MapReduce programming model has many advantages, such as high concurrency, high scalability and high fault tolerance, and it can benefit us in dealing with Big Data. This paper brings the idea of using the MapReduce model to do BESIII physical analysis, and presents a new data analysis system structure based on the Hadoop platform, which not only greatly improves the efficiency of data analysis, but also reduces the cost of system building. Moreover, this paper establishes an event pre-selection system based on the event-level metadata (TAGs) database to optimize the data analysis procedure. 1. Introduction 1.1 The current BESIII computing architecture. High Energy Physics experiments are typical data-intensive applications. The BESIII computing system now consists of 3PB+ of data and 6500 CPU cores, and it is estimated that more than 10PB of data will be produced in the next 5 years.

Efficient Implementation of Data Objects in the OSD+-Based Fusion

Efficient Implementation of Data Objects in the OSD+-Based Fusion Parallel File System. Juan Piernas and Pilar González-Férez, Departamento de Ingeniería y Tecnología de Computadores, Universidad de Murcia, Murcia, Spain, {piernas,pilar}@ditec.um.es. Abstract. OSD+s are enhanced object-based storage devices (OSDs) able to deal with both data and metadata operations via data and directory objects, respectively. So far, we have focused on designing and implementing efficient directory objects in OSD+s. This paper, however, presents our work on also supporting data objects, and describes how the coexistence of both kinds of objects in OSD+s is exploited to efficiently implement data objects and to speed up some common file operations. We compare our OSD+-based Fusion Parallel File System (FPFS) with Lustre and OrangeFS. Results show that FPFS provides a performance up to 37× better than Lustre, and up to 95× better than OrangeFS, for metadata workloads. FPFS also provides 34% more bandwidth than OrangeFS for data workloads, and competes with Lustre for data writes. Results also show serious scalability problems in Lustre and OrangeFS. Keywords: FPFS · OSD+ · Data objects · Lustre · OrangeFS. 1 Introduction. File systems for HPC environments have traditionally used a cluster of data servers for achieving high rates in read and write operations, for providing fault tolerance and scalability, etc. However, due to a growing number of files, and an increasing use of huge directories with millions or billions of entries accessed by thousands of processes at the same time [3,8,12], some of these file systems also utilize a cluster of specialized metadata servers [6,10,11] and have recently added support for distributed directories [7,10].

Respecting the Block Interface – Computational Storage Using Virtual Objects

Respecting the block interface – computational storage using virtual objects. Ian F. Adams, John Keys, Michael P. Mesnier, Intel Labs. Abstract: Computational storage has remained an elusive goal. Though minimizing data movement by placing computation close to storage has quantifiable benefits, many of the previous attempts failed to take root in industry. They either require a departure from the widespread block protocol to one that is more computationally-friendly (e.g., file, object, or key-value), or they introduce significant complexity (state) on top of the block protocol. We participated in many of these attempts and have since concluded that neither a departure from nor a significant addition to the block protocol is needed. Here we introduce a block-compatible design based on virtual objects. Like a real object (e.g., a file), a virtual object contains the metadata that [...] immediately obvious, and trade-offs need to be made. Unfortunately, this often means that the parallel bandwidth of all drives within a storage server will not be available. The same problem exists within a single SSD, as the internal NAND flash bandwidth often exceeds that of the storage controller. Enter computational storage, an oft-attempted approach to address the data movement problem. By keeping computation physically close to its data, we can avoid costly I/O. Various designs have been pursued, but the benefits have never been enough to justify a new storage protocol. In looking back at the decades of computational storage research, there is a common requirement that the block protocol be replaced (or extended) with files, objects, or key-value pairs – all of which provide a convenient handle for performing computation.

GlobalFS: A Strongly Consistent Multi-Site File System

GlobalFS: A Strongly Consistent Multi-Site File System. Leandro Pacheco (University of Lugano), Raluca Halalai (University of Neuchâtel), Valerio Schiavoni (University of Neuchâtel), Fernando Pedone (University of Lugano), Etienne Rivière (University of Neuchâtel), Pascal Felber (University of Neuchâtel). Abstract: This paper introduces GlobalFS, a POSIX-compliant geographically distributed file system. GlobalFS builds on two fundamental building blocks, an atomic multicast group communication abstraction and multiple instances of a single-site data store. We define four execution modes and show how all file system operations can be implemented with these modes while ensuring strong consistency and tolerating failures. We describe the GlobalFS prototype in detail and report on an extensive performance assessment. We have deployed GlobalFS across all EC2 regions and show that the system scales geographically, providing performance comparable to other state-of-the-art distributed file systems for local commands and allowing for strongly consistent operations over the whole system. [...] consistency, availability, and tolerance to partitions. Our goal is to ensure strongly consistent file system operations despite node failures, at the price of possibly reduced availability in the event of a network partition. Weak consistency is suitable for domain-specific applications where programmers can anticipate and provide resolution methods for conflicts, or work with last-writer-wins resolution methods. Our rationale is that for general-purpose services such as a file system, strong consistency is more appropriate as it is both more intuitive for the users and does not require human intervention in case of conflicts. Strong consistency requires ordering commands across replicas, which needs coordination among nodes at geographically distributed sites (i.e., regions). Designing strongly consistent distributed systems that provide good

Big Data Storage Workload Characterization, Modeling and Synthetic Generation

BIG DATA STORAGE WORKLOAD CHARACTERIZATION, MODELING AND SYNTHETIC GENERATION, by Cristina Lucia Abad. Dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate College of the University of Illinois at Urbana-Champaign, 2014. Urbana, Illinois. Doctoral Committee: Professor Roy H. Campbell, Chair; Professor Klara Nahrstedt; Associate Professor Indranil Gupta; Assistant Professor Yi Lu; Dr. Ludmila Cherkasova, HP Labs. Abstract: A huge increase in data storage and processing requirements has led to Big Data, for which next generation storage systems are being designed and implemented. As Big Data stresses the storage layer in new ways, a better understanding of these workloads and the availability of flexible workload generators are increasingly important to facilitate the proper design and performance tuning of storage subsystems like data replication, metadata management, and caching. Our hypothesis is that the autonomic modeling of Big Data storage system workloads through a combination of measurement, and statistical and machine learning techniques is feasible, novel, and useful. We consider the case of one common type of Big Data storage cluster: A cluster dedicated to supporting a mix of MapReduce jobs. We analyze 6-month traces from two large clusters at Yahoo and identify interesting properties of the workloads. We present a novel model for capturing popularity and short-term temporal correlations in object request streams, and show how unsupervised statistical clustering can be used to enable autonomic type-aware workload generation that is suitable for emerging workloads. We extend this model to include other relevant properties of storage systems (file creation and deletion, pre-existing namespaces and hierarchical namespaces) and use the extended model to implement MimesisBench, a realistic namespace metadata benchmark for next-generation storage systems.

A Decentralized Cloud Storage Network Framework

Storj: A Decentralized Cloud Storage Network Framework. Storj Labs, Inc. October 30, 2018, v3.0. https://github.com/storj/whitepaper

Copyright © 2018 Storj Labs, Inc. and Subsidiaries. This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 license (CC BY-SA 3.0). All product names, logos, and brands used or cited in this document are property of their respective owners. All company, product, and service names used herein are for identification purposes only. Use of these names, logos, and brands does not imply endorsement.

Contents

0.1 Abstract; 0.2 Contributors

1 Introduction

2 Storj design constraints: 2.1 Security and privacy; 2.2 Decentralization; 2.3 Marketplace and economics; 2.4 Amazon S3 compatibility; 2.5 Durability, device failure, and churn; 2.6 Latency; 2.7 Bandwidth; 2.8 Object size; 2.9 Byzantine fault tolerance; 2.10 Coordination avoidance

3 Framework: 3.1 Framework overview; 3.2 Storage nodes; 3.3 Peer-to-peer communication and discovery; 3.4 Redundancy; 3.5 Metadata; 3.6 Encryption; 3.7 Audits and reputation; 3.8 Data repair; 3.9 Payments

4 Concrete implementation: 4.1 Definitions; 4.2 Peer classes; 4.3 Storage node; 4.4 Node identity; 4.5 Peer-to-peer communication; 4.6 Node discovery; 4.7 Redundancy; 4.8 Structured file storage; 4.9 Metadata; 4.10 Satellite; 4.11 Encryption; 4.12 Authorization; 4.13 Audits; 4.14 Data repair; 4.15 Storage node reputation; 4.16 Payments; 4.17 Bandwidth allocation; 4.18 Satellite reputation; 4.19 Garbage collection; 4.20 Uplink; 4.21 Quality control and branding

5 Walkthroughs

Storage Systems and Input/Output 2018 Pre-Workshop Document

Storage Systems and Input/Output 2018 Pre-Workshop Document. Gaithersburg, Maryland, September 19-20, 2018. Meeting Organizers: Robert Ross (ANL) (lead organizer), Glenn Lockwood (LBL), Lee Ward (SNL) (co-lead), Kathryn Mohror (LLNL), Gary Grider (LANL), Bradley Settlemyer (LANL), Scott Klasky (ORNL). Pre-Workshop Document Contributors: Philip Carns (ANL), Quincey Koziol (LBL), Matthew Wolf (ORNL).

1 Table of Contents

2 Executive Summary

3 Introduction

4 Mission Drivers: 4.1 Overview; 4.2 Workload Characteristics (4.2.1 Common observations; 4.2.2 An example: Adjoint-based sensitivity analysis); 4.3 Input/Output Characteristics; 4.4 Implications of In Situ Analysis on the SSIO Community; 4.5 Data Organization and Archiving; 4.6 Metadata and Provenance; 4.7 Summary

5 Computer Science Challenges

5.1 Hardware/Software Architectures: 5.1.1 Storage Media and Interfaces; 5.1.2 Networks; 5.1.3 Active Storage; 5.1.4 Resilience; 5.1.5 Understandability; 5.1.6 Autonomics; 5.1.7 Security; 5.1.8 New Paradigms

5.2 Metadata, Name Spaces, and Provenance: 5.2.1 Metadata; 5.2.2 Namespaces; 5.2.3 Provenance

5.3 Supporting Science Workflows - SAK: 5.3.1 DOE Extreme Scale Use cases - SAK; 5.3.2 Programming Model Integration - (Workflow Composition for on line workflows, and for offline workflows ) - MW; 5.3.3 Workflows (Engine) - Provision and Placement MW; 5.3.4 I/O Middleware and Libraries (Connectivity) - both on-and offline, (not or)

HFAA: A Generic Socket API for Hadoop File Systems

HFAA: A Generic Socket API for Hadoop File Systems. Adam Yee and Jeffrey Shafer, University of the Pacific, Stockton, CA. [email protected] jshafer@pacific.edu ABSTRACT: Hadoop is an open-source implementation of the MapReduce programming model for distributed computing. Hadoop natively integrates with the Hadoop Distributed File System (HDFS), a user-level file system. In this paper, we introduce the Hadoop Filesystem Agnostic API (HFAA) to allow Hadoop to integrate with any distributed file system over TCP sockets. With this API, HDFS can be replaced by distributed file systems such as PVFS, Ceph, Lustre, or others, thereby allowing direct comparisons in terms of performance and scalability. Unlike previous attempts at augmenting Hadoop with new file systems, the socket API presented here eliminates the need to customize Hadoop's Java implementation, and instead moves the implementation responsibilities to the file system itself. [...] vices: one central NameNode and many DataNodes. The NameNode is responsible for maintaining the HDFS directory tree. Clients contact the NameNode in order to perform common file system operations, such as open, close, rename, and delete. The NameNode does not store HDFS data itself, but rather maintains a mapping between HDFS file name, a list of blocks in the file, and the DataNode(s) on which those blocks are stored. Although HDFS stores file data in a distributed fashion, file metadata is stored in the centralized NameNode service. While sufficient for small-scale clusters, this design prevents Hadoop from scaling beyond the resources of a single NameNode. Prior analysis of CPU and memory requirements for

Oracle® Linux 7 Working with LXC

Oracle® Linux 7 Working With LXC F32445-02 October 2020 Oracle Legal Notices Copyright © 2020, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract.

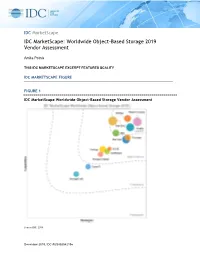

IDC MarketScape: Worldwide Object-Based Storage 2019 Vendor Assessment

IDC MarketScape: Worldwide Object-Based Storage 2019 Vendor Assessment. Amita Potnis. THIS IDC MARKETSCAPE EXCERPT FEATURES SCALITY. Figure 1: IDC MarketScape Worldwide Object-Based Storage Vendor Assessment (Source: IDC, 2019). December 2019, IDC #US45354219e. Please see the Appendix for detailed methodology, market definition, and scoring criteria. IN THIS EXCERPT: The content for this excerpt was taken directly from IDC MarketScape: Worldwide Object-Based Storage 2019 Vendor Assessment (Doc # US45354219). All or parts of the following sections are included in this excerpt: IDC Opinion, IDC MarketScape Vendor Inclusion Criteria, Essential Guidance, Vendor Summary Profile, Appendix and Learn More. Also included is Figure 1. IDC OPINION: The storage market has come a long way in terms of understanding object-based storage (OBS) technology and actively adopting it. It is a common practice for OBS to be adopted for secondary and cold storage needs at scale. Over recent years, OBS has proven its ability to scale to tens and hundreds of petabytes and is now maturing to support newer workloads such as unstructured data analytics, IoT, AI/ML/DL, and so forth. As the price of flash declines and the data sets continue to grow, the need for analyzing the data is on the rise. Moving data sets from an object store to a high-performance tier for analysis is a thing of the past. Many vendors are enhancing their object offerings to include a flash tier or are bringing all-flash array object storage offerings to the market today. In this IDC MarketScape, IDC assesses the present commercial OBS supplier (suppliers that deliver software-defined OBS solutions as software or appliances much like other storage platforms) landscape.