A System That Enables Amateur Singers to Practice Backing Vocals for Karaoke

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Theme Park As "De Sprookjessprokkelaar," the Gatherer and Teller of Stories

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2018 Exploring a Three-Dimensional Narrative Medium: The Theme Park as "De Sprookjessprokkelaar," The Gatherer and Teller of Stories Carissa Baker University of Central Florida, [email protected] Part of the Rhetoric Commons, and the Tourism and Travel Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Baker, Carissa, "Exploring a Three-Dimensional Narrative Medium: The Theme Park as "De Sprookjessprokkelaar," The Gatherer and Teller of Stories" (2018). Electronic Theses and Dissertations, 2004-2019. 5795. https://stars.library.ucf.edu/etd/5795 EXPLORING A THREE-DIMENSIONAL NARRATIVE MEDIUM: THE THEME PARK AS “DE SPROOKJESSPROKKELAAR,” THE GATHERER AND TELLER OF STORIES by CARISSA ANN BAKER B.A. Chapman University, 2006 M.A. University of Central Florida, 2008 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the College of Arts and Humanities at the University of Central Florida Orlando, FL Spring Term 2018 Major Professor: Rudy McDaniel © 2018 Carissa Ann Baker ii ABSTRACT This dissertation examines the pervasiveness of storytelling in theme parks and establishes the theme park as a distinct narrative medium. It traces the characteristics of theme park storytelling, how it has changed over time, and what makes the medium unique. -

Sean Mcculloch – Lead Vocals Daniel Gailey – Guitar, Backing Vocals Bryce Kelly – Bass, Backing Vocals Lee Humarian – Drums

Sean McCulloch – Lead Vocals Daniel Gailey – Guitar, Backing Vocals Bryce Kelly – Bass, Backing Vocals Lee Humarian – Drums Utter and complete reinvention isn’t the only way to destroy boundaries. Oftentimes, the most invigorating renewal in any particular community comes not from a generation’s desperate search for some sort of unrealized frontier, but from a reverence for the strength of its foundation. The steadfast metal fury of PHINEHAS is focused, deliberate, and unashamed. Across three albums and two EPs, the Southern California quartet has proven to be both herald of the genre’s future and keeper of its glorious past. The New Wave Of American Metal defined by the likes of As I Lay Dying, Shadows Fall, Unearth, All That Remains, Bleeding Through, and likeminded bands on the Ozzfest stage and on the covers of heavy metal publications has found a new heir in PHINEHAS. Even as the NWOAM owed a sizeable debt to Europe’s At The Gates and In Flames and North America’s Integrity and Coalesce, PHINEHAS grab the torch from the generation just before them. The four men of mayhem find themselves increasingly celebrated by fans, critics, and contemporaries, due to their pulse-pounding brutality. Make no mistake: PHINEHAS is not a simple throwback. PHINEHAS is a distillation of everything that has made the genre great since bands first discovered the brilliant results of combining heavy metal’s technical skill with hardcore punk’s impassioned fury. SEAN MCCULLOCH has poured his heart out through his throat with decisive power, honest reflections on faith and doubt, and down-to-earth charm since 2007. -

PROFILE INTERVIEW (Pdf)

Scandinavia's leading newspaper D2/Dagens Naeringsliv Translated from Norwegian. WITH A SPIRIT ALL OF HIS OWN Norwegian, Christian Schoyen drives a Bentley, has made a Hollywood feature film, and written self-help books. The self-made millionaire works as an expert in human behavior. TEXT BIRGER EMANUELSENPHOTOS ALYSON ALIANO Los Angeles, USA “Many people grow up with wrong values” CHRISTIAN SCHOYEN Christian Schoyen steps on the gas pedal with his leather BORN: 1966. shoe, and his Bentley Continental accelerates soundlessly LIVES: Los Angeles, along Rodeo Drive in Beverly Hills, California. He passes the California. luxury stores of Tiffany, Louis Vuitton, and Channel as he EDUCATION: BBA from talks about basic life values. God is inside all of us. We have an Pacific Lutheran obligation to give back. Love is the ultimate goal for all University, Tacoma, humans. Washington. Ethnic studies at California “Every person is a unique diamond. That is the message I want to share.” State University, Northridge, California. Pearl white smile. Blond hair lightened by the sun. Tanned. PROFESSION: Founded Symmetrical facial structure. Schoyen's smile is his Executive Search trademark. It radiates towards you from everywhere: from his Research in 1997. CEO book covers, on his website www.christianschoyen.com, and of the company in from the stage. U.S.A., London, and Oslo. BRAINWASHED. The 44-year-old started his headhunting BOOKS: Secrets of the firm, Executive Search Research, in Oslo in the late ‘90s, and Executive Search shortly after the company's inception also opened offices in Experts (1999), How to both Los Angeles and London. -

Hamokara: a System for Practice of Backing Vocals for Karaoke

HAMOKARA: A SYSTEM FOR PRACTICE OF BACKING VOCALS FOR KARAOKE Mina Shiraishi, Kozue Ogasawara, and Tetsuro Kitahara College of Humanities and Sciences, Nihon University shiraishi,ogasawara,kitahara @kthrlab.jp { } ABSTRACT the two singers do not have sufficient singing skill, the pitch of the backing vocals may be influenced by the lead Creating harmony in karaoke by a lead vocalist and a back- vocals. To avoid this, the backing vocalist needs to learn to ing vocalist is enjoyable, but backing vocals are not easy sing accurately in pitch by practicing the backing vocals in for non-advanced karaoke users. First, it is difficult to find advance. musically appropriate submelodies (melodies for backing In this paper, we propose a system called HamoKara, vocals). Second, the backing vocalist has to practice back- which enables a user to practice backing vocals. This ing vocals in advance in order to play backing vocals accu- system has two functions. The first function is automatic rately, because singing submelodies is often influenced by submelody generation. For popular music songs, the sys- the singing of the main melody. In this paper, we propose tem generates submelodies and indicates them with both a backing vocals practice system called HamoKara. This a piano-roll display and a guide tone. The second func- system automatically generates a submelody with a rule- tion is support of backing vocals practice. While the user based or HMM-based method, and provides users with is singing the indicated submelody, the system shows the an environment for practicing backing vocals. Users can pitch (fundamental frequency, F0) of the singing voice on check whether their pitch is correct through audio and vi- the piano-roll display. -

Year of Publication: 2006 Citation: Lawrence, T

University of East London Institutional Repository: http://roar.uel.ac.uk This paper is made available online in accordance with publisher policies. Please scroll down to view the document itself. Please refer to the repository record for this item and our policy information available from the repository home page for further information. To see the final version of this paper please visit the publisher’s website. Access to the published version may require a subscription. Author(s): Lawrence, Tim Article title: “I Want to See All My Friends At Once’’: Arthur Russell and the Queering of Gay Disco Year of publication: 2006 Citation: Lawrence, T. (2006) ‘“I Want to See All My Friends At Once’’: Arthur Russell and the Queering of Gay Disco’ Journal of Popular Music Studies, 18 (2) 144-166 Link to published version: http://dx.doi.org/10.1111/j.1533-1598.2006.00086.x DOI: 10.1111/j.1533-1598.2006.00086.x “I Want to See All My Friends At Once’’: Arthur Russell and the Queering of Gay Disco Tim Lawrence University of East London Disco, it is commonly understood, drummed its drums and twirled its twirls across an explicit gay-straight divide. In the beginning, the story goes, disco was gay: Gay dancers went to gay clubs, celebrated their newly liberated status by dancing with other men, and discovered a vicarious voice in the form of disco’s soul and gospel-oriented divas. Received wisdom has it that straights, having played no part in this embryonic moment, co-opted the culture after they cottoned onto its chic status and potential profitability. -

CGEN Program Guidelines

Program guidelines Be part of Queensland’s largest annual youth performing arts show at the Brisbane Convention and Exhibition Centre Show week: Tuesday 13 July to Saturday 17 July 2021 Principal partner Major partner Broadcast Event Program Program partner partner partner partner 200002 Contents Contact information............................................................................................. 1 What is Creative Generation – State Schools Onstage? .........................................2 Behaviour ...........................................................................................................2 COVID-19 information ..........................................................................................2 Nomination process ............................................................................................3 1. VOCAL ...........................................................................................................6 Featured and backing vocalist ........................................................................................7 Choir .............................................................................................................................8 2. DANCE and DRAMA .........................................................................................11 Featured and solo dance ............................................................................................... 11 Massed dance and regional massed dance ................................................................. -

'Titanium' | David Guetta (Feat. Sia)

‘Titanium’ David Guetta (feat. Sia) ‘Titanium’ | David Guetta (feat. Sia) ‘Titanium’ was a number one hit for French DJ and music producer David Guetta, featuring vocals from Australian singer Sia. Initially released digitally as one of four promotional singles for the album Nothing but the Beat in August 2011, the hit was o!cially released as the fourth single from the album in December of that same year. ‘Titanium’ was well received in many of the top music markets worldwide, achieving number 1s in four countries and top 10s in an incredible twenty "ve countries across the globe. #e single was met with positive reviews, with many claiming it to be the show stopper of the album thanks to Sia’s collaboration on lyrics and phenomenal vocal performance. Described as “epic and energising”, ‘Titanium’ was musically likened to the works of Coldplay and Sia’s vocal was compared to that of Fergie. Many reviewers raved about Sia’s delivery of the song’s hook, stating that ‘Titanium’ was by far the “most intriguing hook-up” of Guetta’s "$h studio album. #e single was certi"ed 3 x Platinum in the UK with sales just short of two million copies, and 2 x Platinum in the US with four million records sold. Guetta’s album Nothing But !e Beat topped the album charts in the DJ’s home nation of France, as well as Australia, Belgium, Germany, Spain and Switzerland. While some reviewers felt it was lacking somewhat in comparison to his earlier works, the album was well-received by many with tracks ‘Night of Your Life’ and ‘Titanium’ being highlighted as the strongest on the release. -

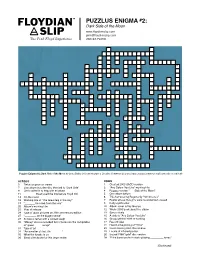

PUZZLUS ENIGMA #2: Dark Side of the Moon [email protected] the Pink Floyd Experience 260.67.FLOYD

PUZZLUS ENIGMA #2: Dark Side of the Moon www.floydianslip.com [email protected] The Pink Floyd Experience 260.67.FLOYD 1 2 3 4 5 6 7 8 9 10 11 12 1 3 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 Puzzlus Enigma #2: Dark Side of the Moon by Craig Bailey is licensed under a Creative Commons License (www.creativecommons.org/licenses/by-nc-nd/3.0/) ACROSS DOWN 3 Twice as good as stereo 1 Created 2003 SACD version 7 Live album includes disc devoted to "Dark Side" 2 "Any Colour You Like" working title 8 Chris called in to help with mixdown 4 Reggae version "___ Side of the Moon" 11 ________ Head used the title before Floyd did 5 One album before 14 On the cover 6 This hat-wearing Roger only "hit him once" 18 Working title of "The Great Gig in the Sky" 7 Beatle whose thoughts were recorded but unused 19 "_______ he cried, from the rear" 9 Early synthesizer 20 Album's working title 10 Album cover art by George 21 Year of release 12 Wrote 2005 book about the album 24 Type of glass pictured on 30th anniversary edition 13 Syncs nicely 27 "________ on the biggest wave" 15 A-side to "Any Colour You Like" 29 Actress's father with a wicked laugh 16 Group behind 2009 re-working 30 "Money" was re-recorded for inclusion on this compilation 17 Record label of "great _____ songs" 22 Heard at beginning of "Time" 31 Type of jet 23 Color missing from the rainbow 34 "As a matter of fact, it's ___ ____" 25 Locale of infrared poster 35 What the lunatic is on 26 Issued 1988 "gold" disc -

SESSION WORK – EXAMPLES: Izzy Chase London, NW7 Mbl: +44 7743 061429 Email: [email protected] Height: 5Ft 7” 1

1 | 3 Izzy Chase London, NW7 Mbl: +44 7743 061429 Email: [email protected] Height: 5ft 7” SESSION WORK – EXAMPLES: • Backing vocals for Ellie Goulding • Backing vocals for Lunchmoney Lewis on TOTP • Backing vocal TV for Rod Stewart • Backing vocals for PB Underground • Backing vocals for Kim Wilde • Backing vocalist for Mollie Marriott (Steve Marriott – Small Faces) • Regular lead vocals for London venues such as; Skygarden, Quaglinos and Primo bar • Backing vocals at Ronnie Scotts Jam nights • Backing vocals for Sarah Harding • Radio advert vocals for ‘Three Mobile’ • Backing Singer for Angel Haze • Backing Singer for Saint Saviour (Groove Armada) • Backing Singer for Kelli-Leigh (Adele/Leona Lewis) • Vocals for Jack Hues and Nick Feldman ‘Wang Chung’ • Backing vocals for Rosco Levee and The Southern Slide’s second album ‘Get It While You Can’ • Recorded vocals for many pop demos, including Blue Box studios • Performed with Mark Ronson at the Roundhouse Mainspace, Camden • Backing Vocals for Ben Mills ‘X Factor finalist’ • Supported artists such as; Ed Sheeran, Lucie Silvas, Foy Vance and Tony Moore (Cutting Crew) • Session backing vocals presented for both Universal and Geffen Records • Backing vocals with vocal coach Tee Green (Blue, Westlife, N’Sync, Kylie Minogue) and Vic Bynoe (The Drifters) • Performance live on BBC Radio 3 as part of mass choir • Session vocals both live and recorded with vocalist and producer Simon Foster (Flying Picketts, How Now Productions) • Featured Extra for 2010 late summer Malibu advert - aired on television • Performed with Boy George • Backing Choir for The Magnets • TV work - Surprise Surprise, Strictly Come Dancing, Top Of The Pops 2 | 3 Izzy Chase London, NW7 Mbl: +44 7743 061429 Email: [email protected] Height: 5ft 7” PERSONAL STATEMENT A versatile and reliable vocalist trusted to perform well under pressure and take direction easily with full understanding. -

THE BLUES DISCOGRAPHY by Phil Wight

THIRD EDITION OF THE BLUES DISCOGRAPHY By Phil Wight t’s fifty years since the publication of ‘Blues As one would expect, many entries have been extensively revised, IRecords 1943-1966’, by Mike Leadbitter and Neil these include B.B. King, Slim Harpo, Chuck Berry, the Sun and Cobra Slaven (published by Hanover Books in the U.K.). sessions. Also, the chronology of many New Orleans recordings has been revised. There are over thirty new artist entries, mostly R&B I bought a copy when it was published (I can’t associated, many of them can be classed as ‘obscure’. recall where from) and it’s still on my shelves Major reissue programmes like the U.K. Ace label’s after all these years, having survived house ‘Bayou’ series (currently standing at twenty volumes moves and living in my slightly damp with more to follow I hope) have of course thrown up a lot of previously unissued material. This garage for several years when lack of is reflected in several new entries from space became a problem. It has a lot (among others) the Baton Rouge Boys, of pencilled-in annotations but it’s Elizabeth (a really excellent vocalist who still in remarkably good nick. deserved a better shot, and maybe she got This new third edition of the ‘Blues Discography’ it under a different guise, I hope she did) continues the tradition of the two earlier editions, and the equally enigmatic Flo. The same filling in gaps and revising details. The artist roster comments regarding Ace issues apply to has been increased and album headings have been the B.B. -

Chicagoland Music Festival but from a Black Perspec- Omnipresent Backdrop

American Music Review The H. Wiley Hitchcock Institute for Studies in American Music Conservatory of Music, Brooklyn College of the City University of New York Volume XLIV, Number 2 Spring 2015 Black and White, then “Red” All Over: Chicago’s American Negro Music Festival Mark Burford, Reed College The functions of public musical spectacle in 1940s Chicago were bound up with a polyphony of stark and sometimes contradic- tory changes. Chicago’s predominantly African American South Side had become more settled as participants in the first waves of the Great Migration established firm roots, even as the city’s “Black Belt” was newly transformed by fresh arrivals that bal- looned Chicago’s black population by 77% between 1940 and 1950. Meanwhile, over the course of the decade, African Ameri- cans remained attentive to a dramatic narrowing of the political spectrum, from accommodation of a populist, patriotic progres- sivism to one dominated by virulent Cold War anticommunism. Sponsored by the Chicago Defender, arguably the country’s flagship black newspaper, and for a brief time the premiere black-organized event in the country, the American Negro Music Festival (ANMF) was through its ten years of existence respon- sive to many of the communal, civic, and national developments during this transitional decade. In seeking to showcase both racial achievement and interracial harmony, festival organizers registered ambivalently embraced shifts in black cultural identity W.C. Handy at the during and in the years following World War II, as well as the American Negro Music Festival Courtesy of St. Louis Post-Dispatch possibilities and limits of coalition politics. -

Simple Plan Is a Canadian Pop Punk Band, Which Was Created in 1999

Simple Plan is a Canadian pop punk band, which was created in 1999. The bend became famous in relative short time. The band has had no line up changes since its inception. The members of the band are Pierre, Chuck, Jeff, Charles, Sebastien and David. Pierre Charles Bouvier was born on 9th May in 1979. He is the lead singer. He also plays a guitar and writes lyrics. The song "Save You" on the album titled "Simple Plan" is about his brother that struggled with cancer. Jean-François Stinco was born on August 22nd in 1978. He is the lead guitarist. He received the nickname Jeff, because many of his non-French fluent friends couldn't pronounce 'Jean-François', so they began to call him 'JF', leading to Jeff. Charles-Andre "Chuck" Comeau born on September 17th 1979 is the drummer. He is sometimes known as the "writer" of the band as he does most of the lyrics besides Pierre, writes all the concepts, and writes the scripts to Simple Plan's music videos. David Philippe Desrosiers was born in August 29th in 1980. He is the bassist and backing vocalist of the band. Sebastien Lefebvre was born on June 5th in 1981 and is the rhythm guitarist and vocalist. Simple Plan began in 1995 with the formation of a band named Reset. The group members were 13 years old friends Pierre , Chuck, Philippe, and Adrian. The first album, No Worries, was released in 1998. After that album Chuck and Pierre left soon after to go to college. Two years later they met with high school friends Jeff and Sebastien who were in separate bands of their own, and they combined to create the band.