Appendix J Computer Arithmetic

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Fill Your Boots: Enhanced Embedded Bootloader Exploits Via Fault Injection and Binary Analysis

IACR Transactions on Cryptographic Hardware and Embedded Systems ISSN 2569-2925, Vol. 2021, No. 1, pp. 56–81. DOI:10.46586/tches.v2021.i1.56-81 Fill your Boots: Enhanced Embedded Bootloader Exploits via Fault Injection and Binary Analysis Jan Van den Herrewegen1, David Oswald1, Flavio D. Garcia1 and Qais Temeiza2 1 School of Computer Science, University of Birmingham, UK, {jxv572,d.f.oswald,f.garcia}@cs.bham.ac.uk 2 Independent Researcher, [email protected] Abstract. The bootloader of an embedded microcontroller is responsible for guarding the device’s internal (flash) memory, enforcing read/write protection mechanisms. Fault injection techniques such as voltage or clock glitching have been proven successful in bypassing such protection for specific microcontrollers, but this often requires expensive equipment and/or exhaustive search of the fault parameters. When multiple glitches are required (e.g., when countermeasures are in place) this search becomes of exponential complexity and thus infeasible. Another challenge which makes embedded bootloaders notoriously hard to analyse is their lack of debugging capabilities. This paper proposes a grey-box approach that leverages binary analysis and advanced software exploitation techniques combined with voltage glitching to develop a powerful attack methodology against embedded bootloaders. We showcase our techniques with three real-world microcontrollers as case studies: 1) we combine static and on-chip dynamic analysis to enable a Return-Oriented Programming exploit on the bootloader of the NXP LPC microcontrollers; 2) we leverage on-chip dynamic analysis on the bootloader of the popular STM8 microcontrollers to constrain the glitch parameter search, achieving the first fully-documented multi-glitch attack on a real-world target; 3) we apply symbolic execution to precisely aim voltage glitches at target instructions based on the execution path in the bootloader of the Renesas 78K0 automotive microcontroller. -

Arithmetic Algorithms for Extended Precision Using Floating-Point Expansions Mioara Joldes, Olivier Marty, Jean-Michel Muller, Valentina Popescu

Arithmetic algorithms for extended precision using floating-point expansions Mioara Joldes, Olivier Marty, Jean-Michel Muller, Valentina Popescu To cite this version: Mioara Joldes, Olivier Marty, Jean-Michel Muller, Valentina Popescu. Arithmetic algorithms for extended precision using floating-point expansions. IEEE Transactions on Computers, Institute of Electrical and Electronics Engineers, 2016, 65 (4), pp.1197 - 1210. 10.1109/TC.2015.2441714. hal- 01111551v2 HAL Id: hal-01111551 https://hal.archives-ouvertes.fr/hal-01111551v2 Submitted on 2 Jun 2015 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. IEEE TRANSACTIONS ON COMPUTERS, VOL. , 201X 1 Arithmetic algorithms for extended precision using floating-point expansions Mioara Joldes¸, Olivier Marty, Jean-Michel Muller and Valentina Popescu Abstract—Many numerical problems require a higher computing precision than the one offered by standard floating-point (FP) formats. One common way of extending the precision is to represent numbers in a multiple component format. By using the so- called floating-point expansions, real numbers are represented as the unevaluated sum of standard machine precision FP numbers. This representation offers the simplicity of using directly available, hardware implemented and highly optimized, FP operations. -

Floating Points

Jin-Soo Kim ([email protected]) Systems Software & Architecture Lab. Seoul National University Floating Points Fall 2018 ▪ How to represent fractional values with finite number of bits? • 0.1 • 0.612 • 3.14159265358979323846264338327950288... ▪ Wide ranges of numbers • 1 Light-Year = 9,460,730,472,580.8 km • The radius of a hydrogen atom: 0.000000000025 m 4190.308: Computer Architecture | Fall 2018 | Jin-Soo Kim ([email protected]) 2 ▪ Representation • Bits to right of “binary point” represent fractional powers of 2 • Represents rational number: 2i i i–1 k 2 bk 2 k=− j 4 • • • 2 1 bi bi–1 • • • b2 b1 b0 . b–1 b–2 b–3 • • • b–j 1/2 1/4 • • • 1/8 2–j 4190.308: Computer Architecture | Fall 2018 | Jin-Soo Kim ([email protected]) 3 ▪ Examples: Value Representation 5-3/4 101.112 2-7/8 10.1112 63/64 0.1111112 ▪ Observations • Divide by 2 by shifting right • Multiply by 2 by shifting left • Numbers of form 0.111111..2 just below 1.0 – 1/2 + 1/4 + 1/8 + … + 1/2i + … → 1.0 – Use notation 1.0 – 4190.308: Computer Architecture | Fall 2018 | Jin-Soo Kim ([email protected]) 4 ▪ Representable numbers • Can only exactly represent numbers of the form x / 2k • Other numbers have repeating bit representations Value Representation 1/3 0.0101010101[01]…2 1/5 0.001100110011[0011]…2 1/10 0.0001100110011[0011]…2 4190.308: Computer Architecture | Fall 2018 | Jin-Soo Kim ([email protected]) 5 Fixed Points ▪ p.q Fixed-point representation • Use the rightmost q bits of an integer as representing a fraction • Example: 17.14 fixed-point representation -

Hacking in C 2020 the C Programming Language Thom Wiggers

Hacking in C 2020 The C programming language Thom Wiggers 1 Table of Contents Introduction Undefined behaviour Abstracting away from bytes in memory Integer representations 2 Table of Contents Introduction Undefined behaviour Abstracting away from bytes in memory Integer representations 3 – Another predecessor is B. • Not one of the first programming languages: ALGOL for example is older. • Closely tied to the development of the Unix operating system • Unix and Linux are mostly written in C • Compilers are widely available for many, many, many platforms • Still in development: latest release of standard is C18. Popular versions are C99 and C11. • Many compilers implement extensions, leading to versions such as gnu18, gnu11. • Default version in GCC gnu11 The C programming language • Invented by Dennis Ritchie in 1972–1973 4 – Another predecessor is B. • Closely tied to the development of the Unix operating system • Unix and Linux are mostly written in C • Compilers are widely available for many, many, many platforms • Still in development: latest release of standard is C18. Popular versions are C99 and C11. • Many compilers implement extensions, leading to versions such as gnu18, gnu11. • Default version in GCC gnu11 The C programming language • Invented by Dennis Ritchie in 1972–1973 • Not one of the first programming languages: ALGOL for example is older. 4 • Closely tied to the development of the Unix operating system • Unix and Linux are mostly written in C • Compilers are widely available for many, many, many platforms • Still in development: latest release of standard is C18. Popular versions are C99 and C11. • Many compilers implement extensions, leading to versions such as gnu18, gnu11. -

Floating Point Formats

Telemetry Standard RCC Document 106-07, Appendix O, September 2007 APPENDIX O New FLOATING POINT FORMATS Paragraph Title Page 1.0 Introduction..................................................................................................... O-1 2.0 IEEE 32 Bit Single Precision Floating Point.................................................. O-1 3.0 IEEE 64 Bit Double Precision Floating Point ................................................ O-2 4.0 MIL STD 1750A 32 Bit Single Precision Floating Point............................... O-2 5.0 MIL STD 1750A 48 Bit Double Precision Floating Point ............................. O-2 6.0 DEC 32 Bit Single Precision Floating Point................................................... O-3 7.0 DEC 64 Bit Double Precision Floating Point ................................................. O-3 8.0 IBM 32 Bit Single Precision Floating Point ................................................... O-3 9.0 IBM 64 Bit Double Precision Floating Point.................................................. O-4 10.0 TI (Texas Instruments) 32 Bit Single Precision Floating Point...................... O-4 11.0 TI (Texas Instruments) 40 Bit Extended Precision Floating Point................. O-4 LIST OF TABLES Table O-1. Floating Point Formats.................................................................................... O-1 Telemetry Standard RCC Document 106-07, Appendix O, September 2007 This page intentionally left blank. ii Telemetry Standard RCC Document 106-07, Appendix O, September 2007 APPENDIX O FLOATING POINT -

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE DESIGNING FOR PERFORMANCE NINTH EDITION William Stallings Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo Editorial Director: Marcia Horton Designer: Bruce Kenselaar Executive Editor: Tracy Dunkelberger Manager, Visual Research: Karen Sanatar Associate Editor: Carole Snyder Manager, Rights and Permissions: Mike Joyce Director of Marketing: Patrice Jones Text Permission Coordinator: Jen Roach Marketing Manager: Yez Alayan Cover Art: Charles Bowman/Robert Harding Marketing Coordinator: Kathryn Ferranti Lead Media Project Manager: Daniel Sandin Marketing Assistant: Emma Snider Full-Service Project Management: Shiny Rajesh/ Director of Production: Vince O’Brien Integra Software Services Pvt. Ltd. Managing Editor: Jeff Holcomb Composition: Integra Software Services Pvt. Ltd. Production Project Manager: Kayla Smith-Tarbox Printer/Binder: Edward Brothers Production Editor: Pat Brown Cover Printer: Lehigh-Phoenix Color/Hagerstown Manufacturing Buyer: Pat Brown Text Font: Times Ten-Roman Creative Director: Jayne Conte Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and Electronically reproduced by permission of Pearson Education, Inc. Upper Saddle River, New Jersey, Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text. Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America. -

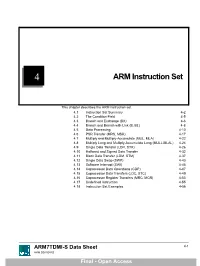

ARM Instruction Set

4 ARM Instruction Set This chapter describes the ARM instruction set. 4.1 Instruction Set Summary 4-2 4.2 The Condition Field 4-5 4.3 Branch and Exchange (BX) 4-6 4.4 Branch and Branch with Link (B, BL) 4-8 4.5 Data Processing 4-10 4.6 PSR Transfer (MRS, MSR) 4-17 4.7 Multiply and Multiply-Accumulate (MUL, MLA) 4-22 4.8 Multiply Long and Multiply-Accumulate Long (MULL,MLAL) 4-24 4.9 Single Data Transfer (LDR, STR) 4-26 4.10 Halfword and Signed Data Transfer 4-32 4.11 Block Data Transfer (LDM, STM) 4-37 4.12 Single Data Swap (SWP) 4-43 4.13 Software Interrupt (SWI) 4-45 4.14 Coprocessor Data Operations (CDP) 4-47 4.15 Coprocessor Data Transfers (LDC, STC) 4-49 4.16 Coprocessor Register Transfers (MRC, MCR) 4-53 4.17 Undefined Instruction 4-55 4.18 Instruction Set Examples 4-56 ARM7TDMI-S Data Sheet 4-1 ARM DDI 0084D Final - Open Access ARM Instruction Set 4.1 Instruction Set Summary 4.1.1 Format summary The ARM instruction set formats are shown below. 3 3 2 2 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1 1 1 1 9876543210 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 Cond 0 0 I Opcode S Rn Rd Operand 2 Data Processing / PSR Transfer Cond 0 0 0 0 0 0 A S Rd Rn Rs 1 0 0 1 Rm Multiply Cond 0 0 0 0 1 U A S RdHi RdLo Rn 1 0 0 1 Rm Multiply Long Cond 0 0 0 1 0 B 0 0 Rn Rd 0 0 0 0 1 0 0 1 Rm Single Data Swap Cond 0 0 0 1 0 0 1 0 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 1 Rn Branch and Exchange Cond 0 0 0 P U 0 W L Rn Rd 0 0 0 0 1 S H 1 Rm Halfword Data Transfer: register offset Cond 0 0 0 P U 1 W L Rn Rd Offset 1 S H 1 Offset Halfword Data Transfer: immediate offset Cond 0 -

Extended Precision Floating Point Arithmetic

Extended Precision Floating Point Numbers for Ill-Conditioned Problems Daniel Davis, Advisor: Dr. Scott Sarra Department of Mathematics, Marshall University Floating Point Number Systems Precision Extended Precision Patriot Missile Failure Floating point representation is based on scientific An increasing number of problems exist for which Patriot missile defense modules have been used by • The precision, p, of a floating point number notation, where a nonzero real decimal number, x, IEEE double is insufficient. These include modeling the U.S. Army since the mid-1960s. On February 21, system is the number of bits in the significand. is expressed as x = ±S × 10E, where 1 ≤ S < 10. of dynamical systems such as our solar system or the 1991, a Patriot protecting an Army barracks in Dha- This means that any normalized floating point The values of S and E are known as the significand • climate, and numerical cryptography. Several arbi- ran, Afghanistan failed to intercept a SCUD missile, number with precision p can be written as: and exponent, respectively. When discussing float- trary precision libraries have been developed, but leading to the death of 28 Americans. This failure E ing points, we are interested in the computer repre- x = ±(1.b1b2...bp−2bp−1)2 × 2 are too slow to be practical for many complex ap- was caused by floating point rounding error. The sentation of numbers, so we must consider base 2, or • The smallest x such that x > 1 is then: plications. David Bailey’s QD library may be used system measured time in tenths of seconds, using binary, rather than base 10. -

3.2 the CORDIC Algorithm

UC San Diego UC San Diego Electronic Theses and Dissertations Title Improved VLSI architecture for attitude determination computations Permalink https://escholarship.org/uc/item/5jf926fv Author Arrigo, Jeanette Fay Freauf Publication Date 2006 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California 1 UNIVERSITY OF CALIFORNIA, SAN DIEGO Improved VLSI Architecture for Attitude Determination Computations A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Electrical and Computer Engineering (Electronic Circuits and Systems) by Jeanette Fay Freauf Arrigo Committee in charge: Professor Paul M. Chau, Chair Professor C.K. Cheng Professor Sujit Dey Professor Lawrence Larson Professor Alan Schneider 2006 2 Copyright Jeanette Fay Freauf Arrigo, 2006 All rights reserved. iv DEDICATION This thesis is dedicated to my husband Dale Arrigo for his encouragement, support and model of perseverance, and to my father Eugene Freauf for his patience during my pursuit. In memory of my mother Fay Freauf and grandmother Fay Linton Thoreson, incredible mentors and great advocates of the quest for knowledge. iv v TABLE OF CONTENTS Signature Page...............................................................................................................iii Dedication … ................................................................................................................iv Table of Contents ...........................................................................................................v -

Consider an Instruction Cycle Consisting of Fetch, Operators Fetch (Immediate/Direct/Indirect), Execute and Interrupt Cycles

Module-2, Unit-3 Instruction Execution Question 1: Consider an instruction cycle consisting of fetch, operators fetch (immediate/direct/indirect), execute and interrupt cycles. Explain the purpose of these four cycles. Solution 1: The life of an instruction passes through four phases—(i) Fetch, (ii) Decode and operators fetch, (iii) execute and (iv) interrupt. The purposes of these phases are as follows 1. Fetch We know that in the stored program concept, all instructions are also present in the memory along with data. So the first phase is the “fetch”, which begins with retrieving the address stored in the Program Counter (PC). The address stored in the PC refers to the memory location holding the instruction to be executed next. Following that, the address present in the PC is given to the address bus and the memory is set to read mode. The contents of the corresponding memory location (i.e., the instruction) are transferred to a special register called the Instruction Register (IR) via the data bus. IR holds the instruction to be executed. The PC is incremented to point to the next address from which the next instruction is to be fetched So basically the fetch phase consists of four steps: a) MAR <= PC (Address of next instruction from Program counter is placed into the MAR) b) MBR<=(MEMORY) (the contents of Data bus is copied into the MBR) c) PC<=PC+1 (PC gets incremented by instruction length) d) IR<=MBR (Data i.e., instruction is transferred from MBR to IR and MBR then gets freed for future data fetches) 2. -

Akukwe Michael Lotachukwu 18/Sci01/014 Computer Science

AKUKWE MICHAEL LOTACHUKWU 18/SCI01/014 COMPUTER SCIENCE The purpose of every computer is some form of data processing. The CPU supports data processing by performing the functions of fetch, decode and execute on programmed instructions. Taken together, these functions are frequently referred to as the instruction cycle. In addition to the instruction cycle functions, the CPU performs fetch and writes functions on data. When a program runs on a computer, instructions are stored in computer memory until they're executed. The CPU uses a program counter to fetch the next instruction from memory, where it's stored in a format known as assembly code. The CPU decodes the instruction into binary code that can be executed. Once this is done, the CPU does what the instruction tells it to, performing an operation, fetching or storing data or adjusting the program counter to jump to a different instruction. The types of operations that typically can be performed by the CPU include simple math functions like addition, subtraction, multiplication and division. The CPU can also perform comparisons between data objects to determine if they're equal. All the amazing things that computers can do are performed with these and a few other basic operations. After an instruction is executed, the next instruction is fetched and the cycle continues. While performing the execute function of the instruction cycle, the CPU may be asked to execute an instruction that requires data. For example, executing an arithmetic function requires the numbers that will be used for the calculation. To deliver the necessary data, there are instructions to fetch data from memory and write data that has been processed back to memory. -

A Variable Precision Hardware Acceleration for Scientific Computing Andrea Bocco

A variable precision hardware acceleration for scientific computing Andrea Bocco To cite this version: Andrea Bocco. A variable precision hardware acceleration for scientific computing. Discrete Mathe- matics [cs.DM]. Université de Lyon, 2020. English. NNT : 2020LYSEI065. tel-03102749 HAL Id: tel-03102749 https://tel.archives-ouvertes.fr/tel-03102749 Submitted on 7 Jan 2021 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. N°d’ordre NNT : 2020LYSEI065 THÈSE de DOCTORAT DE L’UNIVERSITÉ DE LYON Opérée au sein de : CEA Grenoble Ecole Doctorale InfoMaths EDA N17° 512 (Informatique Mathématique) Spécialité de doctorat :Informatique Soutenue publiquement le 29/07/2020, par : Andrea Bocco A variable precision hardware acceleration for scientific computing Devant le jury composé de : Frédéric Pétrot Président et Rapporteur Professeur des Universités, TIMA, Grenoble, France Marc Dumas Rapporteur Professeur des Universités, École Normale Supérieure de Lyon, France Nathalie Revol Examinatrice Docteure, École Normale Supérieure de Lyon, France Fabrizio Ferrandi Examinateur Professeur associé, Politecnico di Milano, Italie Florent de Dinechin Directeur de thèse Professeur des Universités, INSA Lyon, France Yves Durand Co-directeur de thèse Docteur, CEA Grenoble, France Cette thèse est accessible à l'adresse : http://theses.insa-lyon.fr/publication/2020LYSEI065/these.pdf © [A.