Misinformation and Disinformation in Online Games

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games

Vanderbilt Journal of Entertainment & Technology Law Volume 18 Issue 3 Issue 3 - Spring 2016 Article 2 2015 Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games Julian Dibbell Follow this and additional works at: https://scholarship.law.vanderbilt.edu/jetlaw Part of the Internet Law Commons, and the Labor and Employment Law Commons Recommended Citation Julian Dibbell, Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games, 18 Vanderbilt Journal of Entertainment and Technology Law 419 (2021) Available at: https://scholarship.law.vanderbilt.edu/jetlaw/vol18/iss3/2 This Article is brought to you for free and open access by Scholarship@Vanderbilt Law. It has been accepted for inclusion in Vanderbilt Journal of Entertainment & Technology Law by an authorized editor of Scholarship@Vanderbilt Law. For more information, please contact [email protected]. VANDERBILT JOURNAL OF ENTERTAINMENT & TECHNOLOGY LAW VOLUME 18 SPRING 2016 NUMBER 3 Invisible Labor, Invisible Play: Online Gold Farming and the Boundary Between Jobs and Games Julian Dibbell ABSTRACT When does work become play and play become work? Courts have considered the question in a variety of economic contexts, from student athletes seeking recognition as employees to professional blackjack players seeking to be treated by casinos just like casual players. Here, this question is applied to a relatively novel context: that of online gold farming, a gray-market industry in which wage-earning workers, largely based in China, are paid to play fantasy massively multiplayer online games (MMOs) that reward them with virtual items that their employers sell for profit to the same games' casual players. -

Using the MMORPG 'Runescape' to Engage Korean

Using the MMORPG ‘RuneScape’ to Engage Korean EFL (English as a Foreign Language) Young Learners in Learning Vocabulary and Reading Skills Kwengnam Kim Submitted in accordance with the requirements for the degree of Doctor of Philosophy The University of Leeds School of Education October 2015 -I- INTELLECTUAL PROPERTY The candidate confirms that the work submitted is her own and that appropriate credit has been given where reference has been made to the work of others. This copy has been supplied on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. © 2015 The University of Leeds and Kwengnam Kim The right of Kwengnam Kim to be identified as Author of this work has been asserted by her in accordance with the Copyright, Designs and Patents Act 1988. -II- DECLARATION OF AUTHORSHIP The work conducted during the development of this PhD thesis has led to a number of presentations and a guest talk. Papers and extended abstracts from the presentations and a guest talk have been generated and a paper has been published in the BAAL conference' proceedings. A list of the papers arising from this study is presented below. Kim, K. (2012) ‘MMORPG RuneScape and Korean Children’s Vocabulary and Reading Skills’. Paper as Guest Talk is presented at CRELL Seminar in University of Roehampton, London, UK, 31st, October 2012. Kim, K. (2012) ‘Online role-playing game and Korean children’s English vocabulary and reading skills’. Paper is presented in AsiaCALL 2012 (11th International Conference of Computer Assisted Language Learning), in Ho Chi Minh City, Vietnam, 16th-18th, November 2012. -

COMPARATIVE VIDEOGAME CRITICISM by Trung Nguyen

COMPARATIVE VIDEOGAME CRITICISM by Trung Nguyen Citation Bogost, Ian. Unit Operations: An Approach to Videogame Criticism. Cambridge, MA: MIT, 2006. Keywords: Mythical and scientific modes of thought (bricoleur vs. engineer), bricolage, cyber texts, ergodic literature, Unit operations. Games: Zork I. Argument & Perspective Ian Bogost’s “unit operations” that he mentions in the title is a method of analyzing and explaining not only video games, but work of any medium where works should be seen “as a configurative system, an arrangement of discrete, interlocking units of expressive meaning.” (Bogost x) Similarly, in this chapter, he more specifically argues that as opposed to seeing video games as hard pieces of technology to be poked and prodded within criticism, they should be seen in a more abstract manner. He states that “instead of focusing on how games work, I suggest that we turn to what they do— how they inform, change, or otherwise participate in human activity…” (Bogost 53) This comparative video game criticism is not about invalidating more concrete observances of video games, such as how they work, but weaving them into a more intuitive discussion that explores the true nature of video games. II. Ideas Unit Operations: Like I mentioned in the first section, this is a different way of approaching mediums such as poetry, literature, or videogames where works are a system of many parts rather than an overarching, singular, structured piece. Engineer vs. Bricoleur metaphor: Bogost uses this metaphor to compare the fundamentalist view of video game critique to his proposed view, saying that the “bricoleur is a skillful handy-man, a jack-of-all-trades who uses convenient implements and ad hoc strategies to achieve his ends.” Whereas the engineer is a “scientific thinker who strives to construct holistic, totalizing systems from the top down…” (Bogost 49) One being more abstract and the other set and defined. -

Can Game Companies Help America's Children?

CAN GAME COMPANIES HELP AMERICA’S CHILDREN? The Case for Engagement & VirtuallyGood4Kids™ By Wendy Lazarus, Founder and Co-President, with Aarti Jayaraman. September 2012. About The Children’s Partnership: The Children's Partnership (TCP) is a national, nonprofit organization working to ensure that all children, especially those at risk of being left behind, have the resources and opportunities they need to grow up healthy and lead productive lives. Founded in 1993, The Children's Partnership focuses particular attention on the goals of securing health coverage for every child and on ensuring that the opportunities and benefits of digital technology reach all children. Consistent with that mission, we have educated the public and policymakers about how technology can measurably improve children's health, education, safety, and opportunities for success. We work at the state and national levels to provide research, build programs, and enact policies that extend opportunity to all children and their families. Santa Monica, CA Office: 1351 3rd St. Promenade, Suite 206, Santa Monica, CA 90401; t: 310.260.1220; f: 310.260.1921. Washington, DC Office: 2000 P Street, NW, Suite 330, Washington, DC 20036; t: 202.429.0033; f: 202.429.0974. E-Mail: [email protected] Web: www.childrenspartnership.org The Children’s Partnership is a project of Tides Center. ©2012, The Children's Partnership. Permission to copy, disseminate, or otherwise use this work is normally granted as long as ownership is properly attributed to The Children's Partnership. CAN GAME -

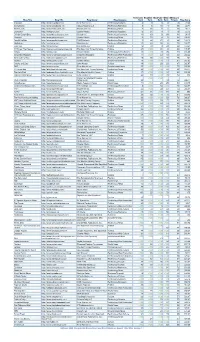

Blog Title Blog URL Blog Owner Blog Category Technorati Rank

| Blog Title | Blog URL | Blog Owner | Blog Category | Technorati Rank | Bloglines Rank | BlogPulse Rank | Wikio Rank | SEOmoz’s Trifecta | Blog Score |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Engadget | http://www.engadget.com | Time Warner Inc. | Technology/Gadgets | 4 | 3 | 6 | 2 | 78 | 19.23 |
| Boing Boing | http://www.boingboing.net | Happy Mutants LLC | Technology/Marketing | 5 | 6 | 15 | 4 | 89 | 33.71 |
| TechCrunch | http://www.techcrunch.com | TechCrunch Inc. | Technology/News | 2 | 27 | 2 | 1 | 76 | 42.11 |
| Lifehacker | http://lifehacker.com | Gawker Media | Technology/Gadgets | 6 | 21 | 9 | 7 | 78 | 55.13 |
| Official Google Blog | http://googleblog.blogspot.com | Google Inc. | Technology/Corporate | 14 | 10 | 3 | 38 | 94 | 69.15 |
| Gizmodo | http://www.gizmodo.com/ | Gawker Media | Technology/News | 3 | 79 | 4 | 3 | 65 | 136.92 |
| ReadWriteWeb | http://www.readwriteweb.com | RWW Network | Technology/Marketing | 9 | 56 | 21 | 5 | 64 | 142.19 |
| Mashable | http://mashable.com | Mashable Inc. | Technology/Marketing | 10 | 65 | 36 | 6 | 73 | 160.27 |
| Daily Kos | http://dailykos.com/ | Kos Media, LLC | Politics | 12 | 59 | 8 | 24 | 63 | 163.49 |
| NYTimes: The Caucus | http://thecaucus.blogs.nytimes.com | The New York Times Company | Politics | 27 | >100 | 31 | 8 | 93 | 179.57 |
| Kotaku | http://kotaku.com | Gawker Media | Technology/Video Games | 19 | >100 | 19 | 28 | 77 | 216.88 |
| Smashing Magazine | http://www.smashingmagazine.com | Smashing Magazine | Technology/Web Production | 11 | >100 | 40 | 18 | 60 | 283.33 |
| Seth Godin's Blog | http://sethgodin.typepad.com | Seth Godin | Technology/Marketing | 15 | 68 | >100 | 29 | 75 | 284 |
| Gawker | http://www.gawker.com/ | Gawker Media | Entertainment News | 16 | >100 | >100 | 15 | 81 | 287.65 |
| Crooks and Liars | http://www.crooksandliars.com | John Amato | Politics | 49 | >100 | 33 | 22 | 67 | 305.97 |

TMZ http://www.tmz.com Time Warner Inc. -

NJDEP-DEP Bulletin, 9/21/2005 Issue

TABLE OF CONTENTS September 21, 2005 Volume 29 Issue 18 Application Codes and Permit Descriptions Pg. 2 General Application Milestone Codes Specific Decision Application Codes Permit Descriptions General Information Pg. 3 DEP Public Notices and Hearings and Events of Interest (Water Quality Pg. 11-16) Pg. 4-16 Watershed Management Highlands Applicability and Water Quality Management Plan Consistency Determination: Administratively Complete Applications Received Pg. 17-18 Highlands Applicability and Water Quality Management Plan Consistency Determination Pg. 19 Environmental Impact Statement and Assessments (EIS and EA) Pg. 20-21 Permit Applications Filed or Acted Upon: Land Use Regulation Program CAFRA Permit Application Pg. 22-25 Coastal Wetlands Pg. 25-26 Consistency Determination Pg. 26 Flood Hazard Area Pg. 26-28 Freshwater Wetlands Pg. 28-45 Waterfront Development Pg. 45-49 Division of Water Quality Treatment Works Approval (TWA) Pg. 50-51 Solid and Hazardous Waste Recycling Centers (B) Pg. 52 Recycling Centers (C) Pg. 52 Hazardous Waste Facilities Pg. 52-53 Incinerators Pg. 53 Sanitary Landfills Pg. 53 Transfer Stations Pg. 54 DEP Permit Liaisons and Other Governmental Contacts Inside Back Cover Acting Governor Richard J. Codey New Jersey Department of Environmental Protection Bradley M. Campbell, Commissioner General Application Milestone Codes Application Approved F = Complete for Filing M = Permit Modification Application Denied H = Public Hearing Date P = Permit Decision Date Application Withdrawal I = Additional Information -

Cyber-Synchronicity: the Concurrence of the Virtual

Cyber-Synchronicity: The Concurrence of the Virtual and the Material via Text-Based Virtual Reality A dissertation presented to the faculty of the Scripps College of Communication of Ohio University In partial fulfillment of the requirements for the degree Doctor of Philosophy Jeffrey S. Smith March 2010 © 2010 Jeffrey S. Smith. All Rights Reserved. This dissertation titled Cyber-Synchronicity: The Concurrence of the Virtual and the Material Via Text-Based Virtual Reality by JEFFREY S. SMITH has been approved for the School of Media Arts and Studies and the Scripps College of Communication by Joseph W. Slade III Professor of Media Arts and Studies Gregory J. Shepherd Dean, Scripps College of Communication ii ABSTRACT SMITH, JEFFREY S., Ph.D., March 2010, Mass Communication Cyber-Synchronicity: The Concurrence of the Virtual and the Material Via Text-Based Virtual Reality (384 pp.) Director of Dissertation: Joseph W. Slade III This dissertation investigates the experiences of participants in a text-based virtual reality known as a Multi-User Domain, or MUD. Through in-depth electronic interviews, staff members and players of Aurealan Realms MUD were queried regarding the impact of their participation in the MUD on their perceived sense of self, community, and culture. Second, the interviews were subjected to a qualitative thematic analysis through which the nature of the participant’s phenomenological lived experience is explored with a specific eye toward any significant over or interconnection between each participant’s virtual and material experiences. An extended analysis of the experiences of respondents, combined with supporting material from other academic investigators, provides a map with which to chart the synchronous and synonymous relationship between a participant’s perceived sense of material identity, community, and culture, and her perceived sense of virtual identity, community, and culture. -

Video Games and the Mobilization of Anxiety and Desire

PLAYING THE CRISIS: VIDEO GAMES AND THE MOBILIZATION OF ANXIETY AND DESIRE BY ROBERT MEJIA DISSERTATION Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Communications in the Graduate College of the University of Illinois at Urbana-Champaign, 2012 Urbana, Illinois Doctoral Committee: Professor Kent A. Ono, Chair Professor John Nerone Professor Clifford Christians Professor Robert A. Brookey, Northern Illinois University ABSTRACT This is a critical cultural and political economic analysis of the video game as an engine of global anxiety and desire. Attempting to move beyond conventional studies of the video game as a thing-in-itself, relatively self-contained as a textual, ludic, or even technological (in the narrow sense of the word) phenomenon, I propose that gaming has come to operate as an epistemological imperative that extends beyond the site of gaming in itself. Play and pleasure have come to affect sites of culture and the structural formation of various populations beyond those conceived of as belonging to conventional gaming populations: the workplace, consumer experiences, education, warfare, and even the practice of politics itself, amongst other domains. Indeed, the central claim of this dissertation is that the video game operates with the same political and cultural gravity as that ascribed to the prison by Michel Foucault. That is, just as the prison operated as the discursive site wherein the disciplinary imaginary was honed, so too does digital play operate as that discursive site wherein the ludic imperative has emerged. To make this claim, I have had to move beyond the conventional theoretical frameworks utilized in the analysis of video games. -

Let's Play! Video Game Related User-Generated Content in the Digital Age -In Search of Balance Between Copyright and Its Exce

TALLINN UNIVERSITY OF TECHNOLOGY Faculty of Social Sciences Tallinn Law School Henna Salo Let’s Play! Video Game Related User-Generated Content in the Digital Age -in Search of Balance Between Copyright and its Exceptions Master Thesis Supervisor: Addi Rull, LL.M Tallinn 2017 I hereby declare that I am the sole author of this Master Thesis and it has not been presented to any other university of examination. Henna Salo “ ..... “ ............... 2017 The Master Thesis meets the established requirements. Supervisor Addi Rull “ …….“ .................... 2017 Accepted for examination “ ..... “ ...................... 2017 Board of Examiners of Law Masters’ Theses …………………………… CONTENTS TABLE OF ABBREVIATIONS (IN ALPHABETICAL ORDER) 2 INTRODUCTION 3 1. COPYRIGHT PROTECTION OF VIDEO GAMES 12 1.1. Development of Copyright Protection of Video Games in the EU and the US 12 1.2. Video Games as Joint Works and Their Copyrighted Elements 14 1.2.1 Copyrightable Subject Matter and Originality 16 1.2.2. The Idea-expression Dichotomy 19 2. LIMITATIONS AND EXCEPTIONS TO COPYRIGHT 23 2.1. European Union 23 2.1.1 An Exhaustive List of Exceptions and the Three-Step Test 23 2.1.2 Exceptions in Practice 27 2.2 United States 29 2.2.1 Digital Millenium Copyright Act and Fair Use 29 2.2.2 Fair Use Interpretation in Case Law 31 2.3. Video Game Related User-Generated Content as Derivative Works 34 3. COPYRIGHT ENFORCEMENT 37 3.1. Copyright Enforcement in United States 37 3.2. European Copyright Enforcement Mechanisms 40 3.3. Contractual Limitation of the Use of Copyrighted Materials 45 4. CONFLICTING INTERESTS AND FINDING THE BALANCE 48 4.1. -

8 Guilt in Dayz Marcus Carter and Fraser Allison Guilt in Dayz

8 Guilt in DayZ Marcus Carter and Fraser Allison © Massachusetts Institute of Technology All Rights Reserved I get a sick feeling in my stomach when I kill someone. - Player #1431’s response to the question “Do you ever feel bad killing another player in DayZ?” Death in most games is simply a metaphor for failure (Bartle 2010). Killing another player in a first-person shooter (FPS) game such as Call of Duty (Infinity Ward 2003) is generally considered to be as transgressive as taking an opponent’s pawn in chess. In an early exploratory study of players’ experiences and processing of violence in digital videogames, Christoph Klimmt and his colleagues concluded that “moral management does not apply to multiplayer combat games” (2006, 325). In other words, player killing is not a violation of moral codes or a source of moral concern for players. Subsequent studies of player experiences of guilt and moral concern in violent videogames (Hartmann, Toz, and Brandon 2010; Hartmann and Vorderer 2010; Gollwitzer and Melzer 2012) have consequently focused on the moral experiences associated with single-player games and the engagement with transgressive fictional, virtual narrative content. This is not the case, however, for DayZ (Bohemia Interactive 2017), a zombie-themed FPS survival game in which players experience levels of moral concern and anguish that might be considered extreme for a multiplayer digital game. -

Leadership Emerges Spontaneously During Games 29 April 2013

Leadership emerges spontaneously during games 29 April 2013 (Phys.org) - Video game and augmented-reality game players can spontaneously build virtual teams and leadership structures without special tools or guidance, according to researchers. Players in a game that mixed real and online worlds organized and operated in teams that resembled a military organization with only rudimentary online tools available and almost no military background, said Tamara Peyton, doctoral student in information sciences and technology, Penn State. "The fact that they formed teams and interacted as well as they did may mean that game designers should resist over-designing the leadership structures," said Peyton. "If you don't design the leadership structures well, you shouldn't design them at all and, instead, let the players figure it out." Peyton, who worked with Alyson Young, graduate [...] beekeeper's website that was supposedly hacked by aliens. The coded messages revealed geographic coordinates of real pay telephones situated throughout the United States. Players then waited at those payphones for calls that contained more clues. Because the game did not have a leadership infrastructure, players established their own websites and online forums on other websites to discuss structure, strategy and tactics. A group of gamers from Washington, D.C., one of the most successful groups in the game, established an organization with a general and groups of lieutenants and privates. The numbers of members in each rank were roughly proportional to the amount of soldiers who fill out ranks in the U.S. military, Peyton said. The players assigned their own ranks, rather than have ranks dictated to them. -

Massively Multiplayer Online Role Playing Games (Mmorpgs) in Malaysia

Massively Multiplayer Online Role Playing Games (MMORPGs) in Malaysia: The Global-Local Nexus A thesis presented to the faculty of the Center for International Studies of Ohio University In partial fulfillment of the requirements for the degree Master of Arts Benjamin Y. Loh August 2013 © 2013 Benjamin Y. Loh. All Rights Reserved. 2 This thesis titled Massively Multiplayer Online Role Playing Games (MMORPGs) in Malaysia: The Global-Local Nexus by BENJAMIN Y. LOH has been approved for the Center for International Studies by Yeong H. Kim Associate Professor of Geography Christine Su Director, Southeast Asian Studies Ming Li Interim Executive Director, Center for International Studies 3 ABSTRACT LOH, BENJAMIN Y., M.A., August 2013, Asian Studies Massively Multiplayer Online Role Playing Games (MMORPGs) in Malaysia: The Global-Local Nexus Director of Thesis: Yeong H. Kim Massively Multiplayer Online Role-Playing Games (MMORPGs) are online entities that are truly global and borderless by nature, but in smaller countries like Malaysia, they are licensed by global developers to local publishers to be localized for local players. From a globalization perspective this appears to be a one-way, top-down relationship from the global to the local. However, this is not the case as the relationship is interchangeable, known as the Global-Local Nexus, as neither of these forces has control over the other, but at the same time they have great influence over one another. This thesis examines the Global-Local Nexus in MMORPGs industry in Malaysia between global developers and local publishers and players. The research was conducted through a series of personal interviews with local publisher representatives and local players.