Applied Cryptography and Data Security

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chapter 3 – Block Ciphers and the Data Encryption Standard

Chapter 3 – Block Ciphers and the Data Encryption Standard. Cryptography and Network Security, Fifth Edition, by William Stallings. Lecture slides by Lawrie Brown. Chapter 3: "All the afternoon Mungo had been working on Stern's code, principally with the aid of the latest messages which he had copied down at the Nevin Square drop. Stern was very confident. He must be well aware London Central knew about that drop. It was obvious that they didn't care how often Mungo read their messages, so confident were they in the impenetrability of the code." (Talking to Strange Men, Ruth Rendell) Modern Block Ciphers • now look at modern block ciphers • one of the most widely used types of cryptographic algorithms • provide secrecy/authentication services • focus on DES (Data Encryption Standard) • to illustrate block cipher design principles Block vs Stream Ciphers • block ciphers process messages in blocks, each of which is then en/decrypted • like a substitution on very big characters – 64 bits or more • stream ciphers process messages a bit or byte at a time when en/decrypting • many current ciphers are block ciphers – better analysed – broader range of applications Block Cipher Principles • most symmetric block ciphers are based on a Feistel Cipher Structure • needed since must be able to decrypt ciphertext to recover messages efficiently • block ciphers look like an extremely large substitution • would need table of $2^{64}$ entries for a 64-bit block • instead create from smaller building blocks • using idea of a product cipher Claude -
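The slide extract above notes that most symmetric block ciphers use a Feistel structure because the ciphertext must be decryptable efficiently. The following is a minimal sketch of that idea, not DES itself: the round function (a truncated SHA-256), the 64-bit block split into two 32-bit halves, and the round-key values are all assumptions chosen for illustration. It shows that decryption is the same network run with the round keys in reverse order, even though the round function is not invertible.

```python
import hashlib

MASK32 = 0xFFFFFFFF

def round_function(half: int, round_key: int) -> int:
    """Hypothetical round function: any 32-bit -> 32-bit map works here."""
    data = half.to_bytes(4, "big") + round_key.to_bytes(4, "big")
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")

def feistel_encrypt(block: int, round_keys) -> int:
    """Run a Feistel network over a 64-bit block given as an integer."""
    left, right = (block >> 32) & MASK32, block & MASK32
    for key in round_keys:
        # Each round: new left is the old right, new right is
        # the old left XORed with F(old right, round key).
        left, right = right, left ^ round_function(right, key)
    # Undo the last swap so the same routine can decrypt.
    return (right << 32) | left

def feistel_decrypt(block: int, round_keys) -> int:
    """Decryption is encryption with the round keys reversed."""
    return feistel_encrypt(block, list(reversed(round_keys)))

# Illustrative 32-bit round keys (assumed values, not a real key schedule).
ROUND_KEYS = [0x0F1E2D3C, 0x4B5A6978, 0x8796A5B4, 0xC3D2E1F0]
plaintext = 0x0123456789ABCDEF
ciphertext = feistel_encrypt(plaintext, ROUND_KEYS)
recovered = feistel_decrypt(ciphertext, ROUND_KEYS)
```

Because each round only XORs one half with a function of the other, the structure avoids storing a substitution table with $2^{64}$ entries while remaining invertible.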

Feistel Like Construction of Involutory Binary Matrices with High Branch Number

Feistel Like Construction of Involutory Binary Matrices With High Branch Number Adnan Baysal1,2, Mustafa Çoban3, and Mehmet Özen3 1TÜBİTAK - BİLGEM, PK 74, 41470, Gebze, Kocaeli, Turkey, [email protected] 2Kocaeli University, Department of Computer Engineering, Faculty of Engineering, Institute of Science, 41380, Umuttepe, Kocaeli, Turkey 3Sakarya University, Faculty of Arts and Sciences, Department of Mathematics, Sakarya, Turkey, [email protected], [email protected] August 4, 2016 Abstract In this paper, we propose a generic method to construct involutory binary matrices from a three round Feistel scheme with a linear round function. We prove bounds on the maximum achievable branch number (BN) and the number of fixed points of our construction. We also define two families of efficiently implementable round functions to be used in our method. The usage of these families in the proposed method produces matrices achieving the proven bounds on branch numbers and fixed points. Moreover, we show that the BN of the transpose matrix is the same as that of the original matrix for the function families we defined. Some of the generated matrices are Maximum Distance Binary Linear (MDBL), i.e. matrices with the highest achievable BN. The number of fixed points of the generated matrices is close to the expected value for a random involution. Generated matrices are especially suitable for use in bitslice block ciphers and hash functions. They can be implemented efficiently on many platforms, from low cost CPUs to dedicated hardware. Keywords: Diffusion layer, bitslice cipher, hash function, involution, MDBL matrices, Fixed points. 1 Introduction Modern block ciphers and hash functions use two basic layers iteratively to provide security: confusion and diffusion. -

Block Ciphers

Block Ciphers Chester Rebeiro IIT Madras CR STINSON: chapter 3 Block Cipher [Figure: Alice encrypts the message “Attack at Dawn!!” with key KE (block E); the ciphertext #%AR3Xf34^$ crosses an untrusted communication link; Bob decrypts it with key KD (block D) and recovers “Attack at Dawn!!”] Encryption key is the same as the decryption key (KE = KD) Block Cipher: Encryption [Figure: Block Cipher (Encryption) maps Plaintext to Ciphertext under a Secret Key; labels: Key Length, Block Length] • A block cipher encryption algorithm encrypts n bits of plaintext at a time • May need to pad the plaintext if necessary • $y = e_k(x)$ Block Cipher: Decryption [Figure: Block Cipher (Decryption) maps Ciphertext to Plaintext under a Secret Key; labels: Key Length, Block Length] • A block cipher decryption algorithm recovers the plaintext from the ciphertext. • $x = d_k(y)$ Inside the Block Cipher (an iterative cipher) [Figure: Plaintext Block → Key Whitening → Round 1 (key1) → Round 2 (key2) → Round 3 (key3) → … → Round n (keyn) → Ciphertext Block] • Each round has the same endomorphic cryptosystem, which takes a key and produces an intermediate output • Size of the key is huge… much larger than the block size. Inside the Block Cipher (the key schedule) [Figure: as above, with a Key Expansion block deriving Round Key 1 … Round Key n from the Secret Key] • A single secret key of fixed size used to generate ‘round keys’ for each round Inside the Round Function [Figure: Round Input → Add Round Key → Confusion Layer → Diffusion Layer] • Add Round Key: mixing operation between the round input and the round key; typically an ex-or operation • Confusion layer: makes the relationship between round input and output complex. -

Report on the AES Candidates

Report on the AES Candidates Olivier Baudron1, Henri Gilbert2, Louis Granboulan1, Helena Handschuh3, Antoine Joux4, Phong Nguyen1, Fabrice Noilhan5, David Pointcheval1, Thomas Pornin1, Guillaume Poupard1, Jacques Stern1, and Serge Vaudenay1 1 Ecole Normale Supérieure – CNRS 2 France Telecom 3 Gemplus – ENST 4 SCSSI 5 Université d'Orsay – LRI Contact e-mail: [email protected] Abstract This document reports the activities of the AES working group organized at the Ecole Normale Supérieure. Several candidates are evaluated. In particular we outline some weaknesses in the designs of some candidates. We mainly discuss selection criteria between the candidates, and make case-by-case comments. We finally recommend the selection of Mars, RC6, Serpent, ... and DFC. As the report is being finalized, we also added some new preliminary cryptanalysis on RC6 and Crypton in the Appendix which are not considered in the main body of the report. Designing the encryption standard of the first twenty years of the twenty-first century is a challenging task: we need to predict possible future technologies, and we have to take unknown future attacks in account. Following the AES process initiated by NIST, we organized an open working group at the Ecole Normale Supérieure. This group met two hours a week to review the AES candidates. The present document reports its results. Another task of this group was to update the DFC candidate submitted by CNRS [16, 17] and to answer questions which had been omitted in previous reports on DFC. -

Lecture Four

Lecture Four Today’s Topics . Historic Symmetric ciphers . Modern symmetric ciphers . DES, AES . Asymmetric ciphers . RSA . Next class: Protocols Example Ciphers . Shift cipher: each plaintext character is replaced by a character k to the right. (When k=3, it’s a Caesar cipher). “Watch out for Brutus!” => “Jngpu bhg sbe Oehghf!” (this example uses k=13) . Only 25 choices! Not hard to break by brute force. Substitution Cipher: each character in plaintext is replaced by a corresponding character of ciphertext. E.g., cryptograms in newspapers. plaintext code: a b c d e f g h i j k l m n o p q r s t u v w x y z ciphertext code: m n b v c x z a s d f g h j k l p o i u y t r e w q . 26! possible pairings. Is it really that hard to break? Substitution ciphers . The Caesar cipher has a small key space, but doesn’t create a statistical independence between the plaintext and the ciphertext. The best ciphers allow no statistical attacks, thereby forcing a brute force, exhaustive search; all the security lies with the key space. As cryptographic algorithms matured, the statistical independence between the plaintext and ciphertext increased. Ciphers . The Caesar cipher, Hill cipher, and Playfair cipher all work with a single alphabet for doing substitutions . They are monoalphabetic substitutions. A more complex (and more robust) alternative is to use different substitution mappings on various portions of the plaintext. Polyalphabetic substitutions. More ciphers . Vigenère cipher: each character of plaintext is encrypted with a different cipher key. -
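The shift cipher and its brute-force weakness described in the lecture extract above can be sketched in a few lines. This is an illustrative sketch only: the function names `shift_cipher` and `brute_force` are hypothetical, and the code assumes that only ASCII letters are shifted while case, spaces and punctuation pass through unchanged.

```python
def shift_cipher(text: str, k: int) -> str:
    """Replace each ASCII letter by the letter k positions to its right."""
    out = []
    for ch in text:
        if ch.isascii() and ch.isalpha():
            base = ord("A") if ch.isupper() else ord("a")
            out.append(chr((ord(ch) - base + k) % 26 + base))
        else:
            out.append(ch)  # leave spaces and punctuation untouched
    return "".join(out)

def brute_force(ciphertext: str):
    """Try all 25 non-trivial shifts and return (k, candidate plaintext)."""
    return [(k, shift_cipher(ciphertext, -k)) for k in range(1, 26)]

ciphertext = shift_cipher("Watch out for Brutus!", 13)
candidates = brute_force(ciphertext)
```

With only 25 candidate keys, an attacker can simply read through `candidates` and pick the one that looks like language, which is why the extract calls the cipher easy to break by brute force.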

The Long Road to the Advanced Encryption Standard

The Long Road to the Advanced Encryption Standard Jean-Luc Cooke CertainKey Inc. [email protected], http://www.certainkey.com/˜jlcooke Abstract This paper will start with a brief background of the Advanced Encryption Standard (AES) process, lessons learned from the Data Encryption Standard (DES), other U.S. government cryptographic publications and the fifteen first round candidate algorithms. The focus of the presentation will lie in presenting the general design of the five final candidate algorithms, and the specifics of the AES and how it differs from the Rijndael design. A presentation on the AES modes of operation and Secure Hash Algorithm (SHA) family of algorithms will follow and will include discussion about how it is directly implicated by AES developments. Intended Audience This paper was written as a supplement to a presentation at the Ottawa International Linux Symposium. 1 Introduction Two decades ago the state-of-the-art in the private sector cryptography was, we know now, far behind the public sector. Don Coppersmith’s knowledge of the Data Encryption Standard’s (DES) resilience to the then unknown Differential Cryptanalysis (DC), the design principles used in the Secure Hash Algorithm (SHA) in Digital Signature Standard (DSS) being case and point [NISTDSS][NISTDES][DC][NISTSHA1]. The selection and design of the DES was shrouded in controversy and suspicion. This very controversy has led to a fantastic acceleration in private sector cryptographic advancement. So intrigued by the NSA’s modifications to the Lucifer algorithm, researchers (academic and industry alike) powerful tools in assessing block cipher strength were devel-

Confusion and Diffusion

Confusion and Diffusion Ref: William Stallings, Cryptography and Network Security, 3rd Edition, Prentice Hall, 2003 Statistics and Plaintext • Suppose the frequency distribution of plaintext in a human-readable message in some language is known. • Or suppose there are known words or phrases that are used in the plaintext message. • A cryptanalyst can use this information to break a cryptographic algorithm. Changing Statistics • Claude Shannon suggested that to complicate statistical attacks, the cryptographer could dissipate the statistical structure of the plaintext in the long range statistics of the ciphertext. • Shannon called this process diffusion. Changing Statistics (p.2) • Diffusion can be accomplished by having many plaintext characters affect each ciphertext character. • An example of diffusion is the encryption of a message $M = m_1, m_2, \ldots$ using an averaging: $y_n = \sum_{i=1}^{k} m_{n+i} \pmod{26}$. Changing Statistics (p.3) • In binary block ciphers, such as the Data Encryption Standard (DES), diffusion can be accomplished using permutations on data, and then applying a function to the permutation to produce ciphertext. Complex Use of a Key • Diffusion complicates the statistics of the ciphertext, and makes it difficult to discover the key of the encryption process. • The process of confusion makes the use of the key so complex that even when an attacker knows the statistics, it is still difficult to deduce the key. Complex Use of a Key (p.2) • Confusion can be accomplished by using a complex substitution algorithm. -
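The averaging example in the slides above, where each output letter is the sum of k plaintext letters modulo 26, can be tried directly. The sketch below makes two assumptions that the extract does not state: the message consists only of lowercase letters a to z (mapped to 0 to 25), and indices past the end of the message wrap around to the beginning. The function name `diffuse` is hypothetical.

```python
def diffuse(message: str, k: int) -> str:
    """Compute y_n = sum_{i=1..k} m_{n+i} mod 26 for a lowercase message.

    Assumption: indices beyond the end of the message wrap around.
    """
    m = [ord(ch) - ord("a") for ch in message]
    length = len(m)
    out = []
    for n in range(length):
        total = sum(m[(n + i) % length] for i in range(1, k + 1))
        out.append(chr(total % 26 + ord("a")))
    return "".join(out)

# Changing one plaintext letter changes k output letters.
baseline = diffuse("aaaaaa", 3)
one_change = diffuse("aaabaa", 3)
changed_positions = [i for i, (x, y) in enumerate(zip(baseline, one_change)) if x != y]
```

Here a single changed plaintext letter alters three output letters, which is the sense in which many plaintext characters affect each ciphertext character.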

Performance Evaluation of Newly Proposed Lightweight Cipher, BRIGHT

Received: January 22, 2019 Performance Evaluation of Newly Proposed Lightweight Cipher, BRIGHT Deepti Sehrawat1* Nasib Singh Gill1 1Department of Computer Science & Applications, Maharshi Dayanand University, Rohtak, Haryana, India * Corresponding author’s Email: [email protected] Abstract: Lightweight security algorithms are tailored for resource-constrained environments. To improve the efficiency of an algorithm, a tradeoff between memory requirements and speed is usually involved in lightweight cryptography. By adopting several performance enhancement techniques, a security framework for IoT enabled applications is presented in this paper. The proposed BRIGHT family of ciphers is comparably better than existing lightweight ciphers and supports a range of block and key sizes for constrained environments. It enables users to match their security needs with application requirements by supporting a range of cryptographic solutions. The BRIGHT family of ciphers is a software-oriented design. The performance of the BRIGHT family of lightweight ciphers is evaluated on different parameters. All versions of the BRIGHT family ciphers fulfill the Strict Avalanche Criteria, key sensitivity test, and randomness test. BRIGHT family ciphers show better performance in terms of memory requirements, cost and speed as compared to existing lightweight ciphers. Keywords: Performance evaluation, BRIGHT, Cryptographic solutions, Lightweight block cipher, ARX, GFN, Feistel block ciphers. 1. Introduction In the IoT field, various resource-constrained devices communicate in the network using RFID (Radio Frequency Identification Devices), which is a fast-growing technology that allows automated […] devices information security is evidently necessary [3]. To provide high security and privacy, cryptographic solutions must be used. However, due to very low available energy, the limited size of ROM and RAM consumption and high-security demand in a resource-constrained environment, lightweight cryptographic security solutions are required [4]. -

What You Should Know for the Final Exam

MATC16 Cryptography and Coding Theory Gábor Pete University of Toronto Scarborough What you should know for the final exam Principles and goals of cryptography: Kerckhoffs's principle. Shannon’s confusion and diffusion. Possible attack situations (ciphertext only, chosen plaintext, etc.). Possible goals of attacker, and the corresponding tasks of cryptography (confidentiality, data integrity, authentication, non-repudiation). [Chapter 1, plus page 38 and http://en.wikipedia.org/wiki/Confusion_and_diffusion for diffusion & confusion.] Classical cryptosystems: Number theory basics: infinitely many primes exist, basics of modular arithmetic, extended Euclidean algorithm, solving ax+by = d, inverting numbers and matrices (mod n). [Sections 3.1-3 and 3.8.] Shift and affine ciphers. Their ciphertext only and known plaintext attacks. Composition of two affine ciphers is again an affine cipher. [Sections 2.1-2.] Substitution ciphers in general. [Section 2.4] Vigenère cipher. Known plaintext attack. Ciphertext only: finding the key length, then frequency analysis. [Section 2.3.] Hill cipher. Known plaintext attack. [Section 2.7.] One-time pad. LFSR sequences. Known plaintext attack, finding the recursion. [Sections 2.9 and 11.] Basics of Enigma. [Section 2.12, up to middle of page 53.] The DES: Feistel systems, simplified and real DES (without the exact expansion functions and S-boxes and permutations, of course), how decryption works in these DES versions. How the extra parity check bits in the real DES key ensure error detection. How confusion and diffusion are fulfilled in DES. [Sections 4.1-2 and 4.4.] Double and Triple DES. Meet-in-the-middle attack. (I mentioned here that one can organize the two lists of length n and find a match between them in almost linear time ($n \log n$) instead of the naive approach that would give only $n^2$, and hence would ruin the attack completely. -
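The remark above about matching two lists of length n in roughly $n \log n$ time instead of $n^2$ can be illustrated with a sort-and-merge pass. This is a sketch under assumptions: each list holds hypothetical `(intermediate_value, key)` pairs, as a meet-in-the-middle attack on double encryption would produce by encrypting forward under every first key and decrypting backward under every second key, and the function name `find_matches` is made up for this example.

```python
def find_matches(forward, backward):
    """Return all (key1, key2) whose intermediate values agree.

    forward, backward: lists of (intermediate_value, key) pairs.
    Sorting costs O(n log n); the merge pass afterwards is linear,
    apart from the work of reporting the matches themselves.
    """
    a = sorted(forward)
    b = sorted(backward)
    i = j = 0
    matches = []
    while i < len(a) and j < len(b):
        if a[i][0] < b[j][0]:
            i += 1
        elif a[i][0] > b[j][0]:
            j += 1
        else:
            value = a[i][0]
            # Collect the runs of equal values on both sides.
            i_end = i
            while i_end < len(a) and a[i_end][0] == value:
                i_end += 1
            j_end = j
            while j_end < len(b) and b[j_end][0] == value:
                j_end += 1
            for _, key1 in a[i:i_end]:
                for _, key2 in b[j:j_end]:
                    matches.append((key1, key2))
            i, j = i_end, j_end
    return matches

# Toy data: only the intermediate value 9 appears in both lists.
forward = [(5, "a"), (9, "b"), (2, "c")]
backward = [(7, "x"), (9, "y"), (1, "z")]
candidates = find_matches(forward, backward)
```

A hash table keyed on the intermediate value would serve the same purpose; the sorted version is shown because it matches the $n \log n$ bound quoted in the notes.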

A Lightweight Encryption Algorithm for Secure Internet of Things

Pre-Print Version, Original article is available at (IJACSA) International Journal of Advanced Computer Science and Applications, Vol. 8, No. 1, 2017 SIT: A Lightweight Encryption Algorithm for Secure Internet of Things Muhammad Usman∗, Irfan Ahmed†, M. Imran Aslam†, Shujaat Khan∗ and Usman Ali Shah† ∗Faculty of Engineering Science and Technology (FEST), Iqra University, Defence View, Karachi-75500, Pakistan. Email: fmusman, [email protected] †Department of Electronic Engineering, NED University of Engineering and Technology, University Road, Karachi 75270, Pakistan. Email: firfans, [email protected], [email protected] Abstract—The Internet of Things (IoT) being a promising technology of the future is expected to connect billions of devices. The increased number of communication is expected to generate mountains of data and the security of data can be a threat. The devices in the architecture are essentially smaller in size and low powered. Conventional encryption algorithms are generally computationally expensive due to their complexity and requires many rounds to encrypt, essentially wasting the constrained energy of the gadgets. Less complex algorithm, however, may compromise the desired integrity. In this paper we propose a lightweight encryption algorithm named as Secure IoT (SIT). […] and apply analytics to share the most valuable data with the applications. The IoT is taking the conventional internet, sensor network and mobile network to another level as every thing will be connected to the internet. A matter of concern that must be kept under consideration is to ensure the issues related to confidentiality, data integrity and authenticity that will emerge on account of security and privacy [4]. A. Applications of IoT: -

Stream and Block Ciphers

Symmetric Cryptography Block Ciphers and Stream Ciphers Stream Ciphers [Figure: key K → Stream Cipher → key stream $\vec z$] Seeded by a key K, the stream cipher generates a random bit-stream z. A stream of plain-text bits p is XORed with the pseudo-random stream to obtain the cipher text stream c: $\vec c = \vec p \oplus \vec z$ and $\vec p = \vec c \oplus \vec z$, where $\vec c$ is the cipher text, $\vec p$ the plain text and $\vec z$ the key stream. The same stream generator (using the same seed) is used for both encryption and decryption. Stream Ciphers $K \to \bar z_k$, $\bar c = \bar p \oplus \bar z_k$; $\bar c_i = \bar p_i \oplus \bar z_k$, $\bar c_j = \bar p_j \oplus \bar z_k$. Attacker has access to $\bar c_i$ and $\bar c_j$: $\bar c_i \oplus \bar c_j = (\bar p_i \oplus \bar z_k) \oplus (\bar p_j \oplus \bar z_k) = \bar p_i \oplus \bar p_j$ ● XORing two cipher-texts encrypted using the same seed results in XOR of corresponding plain-texts ● Redundancy in plain-text structure can be easily used to determine both plain-texts ● And hence, the key stream ● Never reuse seed? ● Impractical ● Extend seed using an initial value (IV) which can be sent in the clear ● Never reuse IV Block Ciphers ● C=E(P,K) ● P=D(C,K) ● E() and D() are algorithms ● P is a block of “plain text” (m bits) ● C is the corresponding “cipher text” (also m bits) ● K is the secret key (k bits long) ● (k,m) block cipher – k-bit keysize, m-bit blocksize ● (m+k)-bit input, m-bit output Desired Properties ● The most efficient attack should be the brute-force attack (complexity depends only on key length) ● Knowledge of any number of plain-cipher text pairs, still does not reveal any information regarding any bit of the key. -
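The seed-reuse problem stated in the slides above is easy to reproduce. The sketch below is an illustration under assumptions: the keystream generator is Python's `random.Random`, used purely as a stand-in (it is not cryptographically secure), and the seed value, messages and function names are invented for the example.

```python
import random

def keystream(seed: int, length: int) -> bytes:
    """Stand-in keystream generator (NOT secure; for illustration only)."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(length))

def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings position by position."""
    return bytes(x ^ y for x, y in zip(a, b))

def stream_encrypt(seed: int, data: bytes) -> bytes:
    """c = p XOR z; applying it again with the same seed decrypts."""
    return xor_bytes(data, keystream(seed, len(data)))

seed = 2024                      # assumed shared secret seed
p_i = b"attack at dawn!!"
p_j = b"retreat at noon!"
c_i = stream_encrypt(seed, p_i)  # both messages reuse the same seed
c_j = stream_encrypt(seed, p_j)

# The key stream cancels: c_i XOR c_j equals p_i XOR p_j.
leak = xor_bytes(c_i, c_j)
```

An attacker who knows or guesses one plaintext recovers the other with `xor_bytes(leak, p_i)`, without ever learning the seed, which is why the slides insist on a fresh IV for every message.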

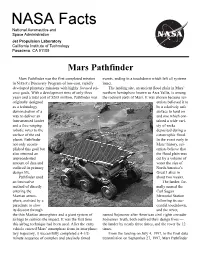

Mars Pathfinder

NASA Facts National Aeronautics and Space Administration Jet Propulsion Laboratory California Institute of Technology Pasadena, CA 91109 Mars Pathfinder Mars Pathfinder was the first completed mission in NASA's Discovery Program of low-cost, rapidly developed planetary missions with highly focused science goals. With a development time of only three years and a total cost of $265 million, Pathfinder was originally designed as a technology demonstration of a way to deliver an instrumented lander and a free-ranging robotic rover to the surface of the red planet. Pathfinder not only accomplished this goal but also returned an unprecedented amount of data and outlived its primary design life. Pathfinder used an innovative method of directly entering the Martian atmosphere, assisted by a parachute to slow its descent through the thin Martian atmosphere and a giant system of airbags to cushion the impact. […] events, ending in a touchdown which left all systems intact. The landing site, an ancient flood plain in Mars' northern hemisphere known as Ares Vallis, is among the rockiest parts of Mars. It was chosen because scientists believed it to be a relatively safe surface to land on and one which contained a wide variety of rocks deposited during a catastrophic flood. In the event early in Mars' history, scientists believe that the flood plain was cut by a volume of water the size of North America's Great Lakes in about two weeks. The lander, formally named the Carl Sagan Memorial Station following its successful touchdown, and the rover, named Sojourner after American civil rights crusader