Music Generation and Evaluation Using R by Andrea Lanz a THESIS

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

-

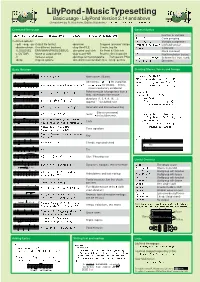

Lilypond Cheatsheet

LilyPond Music Typesetting: Basic usage, LilyPond version 2.14 and above. Cheatsheet by R. Kainhofer, Edition Kainhofer, http://www.edition-kainhofer.com/

Command-line usage: lilypond [-l LOGLEVEL] [-dSCMOPTIONS] [-o OUTPUT] [-V] FILE.ly [FILE2.ly]

Common options:
--pdf, --png, --ps: Output file format
-l LOGLEVEL: ERR/WARN/PROG/DEBUG
-o OUTDIR: Name of output dir/file
-V: Verbose output
-dhelp: Help on options
-dpreview: Cropped “preview” image
-dbackend=eps: Use different backend
-dlog-file=FILE: Create .log file
-dno-point-and-click: No Point & Click info
-djob-count=NR: Process files in parallel
-dpixmap-format=pngalpha: Transparent PNG
-dno-delete-intermediate-files: Keep .ps files

General Syntax:
\xxxx: function or variable
{ ... }: Code grouping
var = {...}: Variable assignment
\version "2.14.0": LilyPond version
% dots: Comment
%{ ... %}: Block comment
c\...: Postfix-notation (notes)
#'(..), ##t, #'sym: Scheme list, true, symb.
x-.., x^.., x_..: Directions

Basic Notation:
c d e f g a b: Note names (Dutch)
cis bes as cisis beses b b! b?: Alterations: -is/-es for sharp/flat, -isis/-eses for double, ! forces, ? shows cautionary accidental
\relative c' {c f d' c,}: Relative mode (change less than a fifth), raise/lower one octave
g1 g2 g4 g8 g16 g4. g4..: durations (1, 2, 4, 8, 16, ...); append “.” for dotted note

Creating Staves, Voices and Groups:
\version "2.15.0"
SMusic = \relative c'' { c1\p }
SLyrics = \lyricmode { Oh! }
AMusic = \relative c' { e1 }
\score {
\new ChoirStaff <<
\new Staff {
\new Voice = "Sop" { \dynamicUp \SMusic

Scummvm Documentation

ScummVM Documentation, CadiH, May 10, 2021

The basics
1 Understanding the interface
1.1 The Launcher
1.2 The Global Main Menu
2 Handling game files
2.1 Multi-disc games
2.2 CD audio
2.3 Macintosh games
3 Adding and playing a game
3.1 Where to get the games
3.2 Adding games to the Launcher
3.3 A note about copyright
4 Saving and loading a game
4.1 Saving a game
4.2 Location of saved game files
4.3 Loading a game
5 Keyboard shortcuts
6 Changing settings
6.1 From the Launcher
6.2 In the configuration file
7 Connecting a cloud service
8 Using the local web server
9 AmigaOS 4
9.1 What you’ll need
9.2 Installing ScummVM
9.3 Transferring game files
9.4 Controls
9.5 Paths
9.6 Settings
9.7 Known issues
10 Android
10.1 What you’ll need

Symantec Web Security Service Policy Guide

Web Security Service Policy Guide. Revision: NOV.07.2020

Copyrights

Broadcom, the pulse logo, Connecting everything, and Symantec are among the trademarks of Broadcom. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. Copyright © 2020 Broadcom. All Rights Reserved. For more information, please visit www.broadcom.com. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others.

Symantec WSS Policy Guide

The Symantec Web Security Service solutions provide real-time protection against web-borne threats. As a cloud-based product, the Web Security Service leverages Symantec's proven security technology, including the WebPulse™ cloud community. With extensive web application controls and detailed reporting features, IT administrators can use the Web Security Service to create and enforce granular policies that are applied to all covered users, including fixed locations and roaming users.

If the WSS is the body, then the policy engine is the brain. While the WSS by default provides malware protection (blocks four categories: Phishing, Proxy Avoidance, Spyware Effects/Privacy Concerns, and Spyware/Malware Sources), the additional policy rules and options you create dictate exactly what content your employees can and cannot access, from global allows/denials to individual users at specific times from specific locations.

How to Create Music with GNU/Linux

How to create music with GNU/Linux, by Emmanuel Saracco [email protected]

Copyright © 2005-2009 Emmanuel Saracco

Warning: WORK IN PROGRESS

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is available on the World Wide Web at http://www.gnu.org/licenses/fdl.html.

Revision History: Revision 0.0, 2009-01-30, revised by: es. Not yet versioned: it is still a work in progress.

Dedication: This howto is dedicated to all GNU/Linux users who refuse to use proprietary software to work with audio. Many thanks to all Free developers and Free composers who help us day by day to make this possible.

Table of Contents
Foreword
1. System settings and tuning
1.1. My Studio
1.2. File system
1.3. Linux Kernel

Midi Player Download Free Free Audio and Video Player Software – Media Player Lite

MediaPlayerLite is a free open source audio and video player on Windows. You can play DVD, AVI, mpeg, FLV, MP4, WMV, MOV, DivX, XviD & more! Play your video and audio now completely free!

Features – what can MediaPlayerLite do? Video, Image & Audio Player. MPEG-1, MPEG-2 and MPEG-4 playback. Free MIDI Player.

Clicking the download button begins installation of InstallIQ™, which manages your MediaPlayerLite installation. You may be offered the option to install the File Association Manager. You may be offered the option to install the Yahoo Toolbar.

MediaPlayerLite – Best Software to Open Audio, Music & Sound Files. MediaPlayerLite is an extremely light-weight media player for Windows. It looks just like Windows Media Player v6.4, but has additional features for playing your media. Fast and efficient file playback without any codecs. Advanced settings for bitrate and resolution. Batch conversion for many files needing to be converted.

MediaPlayerLite Features. MediaPlayerLite is based on MPC-HT and supports the following audio, video and image formats: WAV, WMA, MP3, OGG, SND, AU, AIF, AIFC, AIFF, MIDI, MPEG, MPG, MP2, VOB, AC3, DTS, ASX, M3U, PLS, WAX, ASF, WM, WMA, WMV, AVI, CDA, JPEG, JPG, GIF, PNG, BMP, D2V, MP4, SWF, MOV, QT, FLV.

Play VCD, SVCD and DVDs
Option to remove tearing
Support for EVR (Enhanced Video Renderer)
Subtitle support
Playback and recording of television if a supported TV tuner is installed
H.264 and VC-1 with DXVA support
DivX, Xvid, and Flash Video formats are available
MediaPlayerLite can also use the QuickTime and the RealPlayer architectures
Supports native playing of OGM and Matroska container formats
Use as an audio player.

Argomanuv23.Pdf (All Rights Reserved for All Countries) Audio Generator Modules

ARGO is made of more than 100 sound synthesis and processing "modules" that run in real time under Max/MSP. A module is a Max/MSP patch. ARGO is conceived for users who have never programmed with Max/MSP.

For Macintosh (OS9 & OSX) and PC (Windows XP). Freeware. http://perso.orange.fr/Paresys/ARGO/ Author: Gérard Parésys [email protected]

ARGOManuv23.pdf (All rights reserved for all countries)

Audio generator modules
Audio filter modules
Audio modulator modules
Audio transformer modules
Audio play/record modules
Audio link modules
Control modules
Visu modules
MIDI modules

ARGO modules are files ("patches") executed by the Max/MSP application. On Windows, the patches have the extension .pat or .mxf.

Quickly (if Max/MSP is installed):
1 Open one or several modules
2 Link the modules with: the 12 "ARGO Bus", the 16 "ARGO MIDI Bus" (the inputs are at the top,

Release 0.23~Git Max Kellermann

Music Player Daemon, Release 0.23~git, Max Kellermann, Sep 24, 2021

CONTENTS:
1 User’s Manual
1.1 Introduction
1.2 Installation
1.3 Configuration
1.4 Advanced configuration
1.5 Using MPD
1.6 Advanced usage
1.7 Client Hacks
1.8 Troubleshooting
2 Plugin reference
2.1 Database plugins
2.2 Storage plugins
2.3 Neighbor plugins
2.4 Input plugins
2.5 Decoder plugins
2.6 Encoder plugins
2.7 Resampler plugins
2.8 Output plugins
2.9 Filter plugins
2.10 Playlist plugins
2.11 Archive plugins
3 Developer’s Manual
3.1 Introduction
3.2 Code Style

Latexsample-Thesis

INTEGRAL ESTIMATION IN QUANTUM PHYSICS, by Jane Doe

A dissertation submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Department of Mathematics, The University of Utah, May 2016.

Copyright © Jane Doe 2016. All Rights Reserved.

The University of Utah Graduate School: STATEMENT OF DISSERTATION APPROVAL

The dissertation of Jane Doe has been approved by the following supervisory committee members:
Cornelius Lánczos, Chair(s), date approved 17 Feb 2016
Hans Bethe, Member, date approved 17 Feb 2016
Niels Bohr, Member, date approved 17 Feb 2016
Max Born, Member, date approved 17 Feb 2016
Paul A. M. Dirac, Member, date approved 17 Feb 2016
and by Petrus Marcus Aurelius Featherstone-Hough, Chair/Dean of the Department/College/School of Mathematics, and by Alice B. Toklas, Dean of The Graduate School.

ABSTRACT

Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah.

Archive and Compressed

Archive and compressed

Main article: List of archive formats

• .?Q? – files compressed by the SQ program
• 7z – 7-Zip compressed file
• AAC – Advanced Audio Coding
• ace – ACE compressed file
• ALZ – ALZip compressed file
• APK – Applications installable on Android
• AT3 – Sony's UMD Data compression
• .bke – BackupEarth.com Data compression
• ARC
• ARJ – ARJ compressed file
• BA – Scifer Archive (.ba), Scifer External Archive Type
• big – Special file compression format used by Electronic Arts for compressing the data for many of EA's games
• BIK (.bik) – Bink Video file. A video compression system developed by RAD Game Tools
• BKF (.bkf) – Microsoft backup created by NTBACKUP.EXE
• bzip2 – (.bz2)
• bld – Skyscraper Simulator Building
• c4 – JEDMICS image files, a DOD system
• cab – Microsoft Cabinet
• cals – JEDMICS image files, a DOD system
• cpt/sea – Compact Pro (Macintosh)
• DAA – Closed-format, Windows-only compressed disk image
• deb – Debian Linux install package
• DMG – an Apple compressed/encrypted format
• DDZ – a file which can only be used by the "daydreamer engine" created by "fever-dreamer", a program similar to RAGS, it's mainly used to make somewhat short games.
• DPE – Package of AVE documents made with Aquafadas digital publishing tools.
• EEA – An encrypted CAB, ostensibly for protecting email attachments
• .egg – Alzip Egg Edition compressed file
• EGT (.egt) – EGT Universal Document also used to create compressed cabinet files replaces .ecab
• ECAB (.ECAB, .ezip) – EGT Compressed Folder used in advanced systems to compress entire system folders, replaced by EGT Universal Document
• ESS (.ess) – EGT SmartSense File, detects files compressed using the EGT compression system.
• GHO (.gho, .ghs) – Norton Ghost
• gzip (.gz) – Compressed file
• IPG (.ipg) – Format in which Apple Inc.

Real-Time Generation of Music Notation Via Audience Interaction Using Python and GNU Lilypond Kevin C

Proceedings of the 2005 International Conference on New Interfaces for Musical Expression (NIME05), Vancouver, BC, Canada

Real-Time Generation of Music Notation via Audience Interaction Using Python and GNU Lilypond

Kevin C. Baird, University at Buffalo, 222 Baird Hall, Amherst, NY 14221, 01-716-564-1172, [email protected]

ABSTRACT

No Clergy is an interactive music performance/installation in which the audience is able to shape the ongoing music. In it, members of a small acoustic ensemble read music notation from computer screens. As each page refreshes, the notation is altered and shaped by both stochastic transformations of earlier music with the same performance and audience feedback, collected via standard CGI forms.

Keywords

notation, stochastic, interactive, audience, Python, Lilypond

1. INTRODUCTION

No Clergy currently runs on a Debian GNU/Linux system. The ideal performance setting has one person serving as the “conductor” logged in to a shell on the server.

2.2 Storage as MusicXML

In order to perform stochastic transformations on the notation, No Clergy needs some method of storing musically significant information about each page of notation. I chose Recordare's MusicXML, described by them as “a universal translator for common Western musical notation from the 17th century onwards. It is designed as an interchange format for notation, analysis, retrieval, and performance applications.”[10] Each most recent XML file is accessed during the generation of subsequent pages of notation, while older files are compressed and stored.

2.3 Rendering of Notation Markup

After the “conductor” has executed the bash wrapper script, it will then have created one GNU Lilypond markup file for each instrument. Lilypond is a Scheme-based music typesetting

LilyPond General Information

LilyPond: The music notation system. General Information. The LilyPond development team.

Copyright © 2009–2020 by the authors. This file documents the LilyPond website. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections. A copy of the license is included in the section entitled “GNU Free Documentation License”. For LilyPond version 2.21.82.

LilyPond ... music notation for everyone

LilyPond is a music engraving program, intended to produce scores of the highest possible quality. The project brings the typographic aesthetics of traditional engraving to computer-based music publishing. LilyPond is free software and part of the GNU Project (https://gnu.org). Read more about LilyPond in our [Introduction], page 3!

Beauty by example

LilyPond is a powerful and flexible tool that can engrave all kinds of scores: classical music (like this example by J.S. Bach), complex notation, early music, modern music, tablature, vocal music, lead sheets, educational applications, large projects, customized output, and even Schenker diagrams. Come and find inspiration in our gallery of [Examples], page 6.

News

[News], page 103

Table of contents

LilyPond General Information

LilyPond: The music engraving system. General Information. The LilyPond development team.

Copyright © 2009–2020 by the authors. This file documents the LilyPond website. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with no Invariant Sections. A copy of the license is included in the section entitled “GNU Free Documentation License”. For LilyPond version 2.22.1.

LilyPond ... music engraving for everyone

LilyPond is a music engraving system. Its stated goal is to produce sheet music of the best possible quality. The program makes it possible to achieve the aesthetics of traditional hand-engraved music with computer-set notation. LilyPond is free software and part of the GNU Project (https://gnu.org). Read more in the [Introduction], page 3!

Beautiful music engraving

LilyPond is a very powerful and flexible tool that can handle music engraving of many different kinds: for example classical music (as in this example by J. S. Bach), complex notation, early music, modern music, tablature, vocal music, pop music, teaching materials, large orchestral scores, custom solutions, and even Schenker graphs. Take a look at our [Examples], page 6, and be inspired!

News

Table of contents

Introduction