Towards Structured Analysis of Broadcast Badminton Videos

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


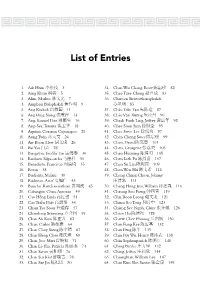

List of Entries

1. Aik Htun
2. Aing Khun
3. Alim, Markus
4. Amphon Bulaphakdi
5. Ang Kiukok
6. Ang Peng Siong
7. Ang, Samuel Dee
8. Ang-See, Teresita
9. Aquino, Corazon Cojuangco
10. Aung Twin
11. Aw Boon Haw
12. Bai Yao
13. Bangayan, Teofilo Tan
14. Banharn Silpa-archa
15. Benedicto, Francisco
16. Botan
17. Budianta, Melani
18. Budiman, Arief
19. Bunchu Rotchanasathian
20. Cabangon Chua, Antonio
21. Cao Hoàng Lãnh
22. Cao Triều Phát
23. Cham Tao Soon
24. Chamlong Srimuang
25. Chan Ah Kow
26. Chan, Carlos
27. Chan Choy Siong
28. Chan Heng Chee
29. Chan, Jose Mari

[...]

34. Chan Wai Chang, Rose
35. Chao Tzee Cheng
36. Charoen Siriwatthanaphakdi
37. Châu Trần Tạo
38. Châu Văn Xương
39. Cheah Fook Ling, Jeffrey
40. Chee Soon Juan
41. Chee Swee Lee
42. Chen Chong Swee
43. Chen, David
44. Chen, Georgette
45. Chen Huiming
46. Chen Lieh Fu
47. Chen Su Lan
48. Chen Wen Hsi
49. Cheng Ching Chuan, Johnny
50. Cheng Heng Jem, William
51. Cheong Soo Pieng
52. Chia Boon Leong
53. Chiam See Tong
54. Chiang See Ngoh, Claire
55. Chien Ho
56. Chiew Chee Phoong
57. Chin Fung Kee
58. Chin Peng
59. Chin Poy Wu, Henry
60. -

PDF, the Physics of Badminton

PAPER • OPEN ACCESS

The physics of badminton

Caroline Cohen1, Baptiste Darbois Texier1, David Quéré2 and Christophe Clanet1

1 LadHyX, UMR 7646 du CNRS, Ecole Polytechnique, 91128 Palaiseau Cedex, France
2 PMMH, UMR 7636 du CNRS, ESPCI, 75005 Paris, France

To cite this article: Caroline Cohen et al 2015 New J. Phys. 17 063001. doi:10.1088/1367-2630/17/6/063001

Received 15 December 2014; revised 20 March 2015; accepted for publication 31 March 2015; published 1 June 2015.

Keywords: physics of badminton, shuttlecock flight, shuttlecock flip, badminton trajectory

Abstract: The conical shape of a shuttlecock allows it to flip on impact. As a light and extended particle, it flies with a pure drag trajectory. -

Interview with Lee Chong

INTERVIEW WITH LEE CHONG WEI. Translation of the interview published at http://www.badminton-information.com/lee-chong-wei.html Lee Chong Wei is a professional badminton player, born in Malaysia. He won the silver medal at the 2008 Beijing Olympics, becoming the first Malaysian to reach an Olympic final in men's singles and ending Malaysia's medal drought dating back to the 1996 Olympics. As a singles player, he first reached number 1 in the world ranking on 21 August 2008, a position he still holds today. He is thus the third Malaysian men's player to reach this position (Rashid Sidek and Roslin Hasim did so before him) and the only one from his country to have held this top spot for more than two weeks. Lee is also the current All England champion. Despite having won various titles at major international competitions, including several Super Series events, and despite his status among the world elite, he has only won a bronze medal (in 2005) at the World Championships. 1. At what age did you start playing? At 11. 2. Did you always intend to become a professional player? No, not at all. I started playing quite naturally, since my father trained and encouraged me to do so too. 3. When did you realise you were good enough to "do battle" at world level? I think I was 17. -

History of Badminton

Facts and Records: History of Badminton. In 1873, the Duke of Beaufort held a lawn party at his country house in the village of Badminton, Gloucestershire. A game of Poona was played on that day and became popular among British society’s elite. The new party sport became known as “the Badminton game”. In 1877, the Bath Badminton Club was formed and developed the first official set of rules. The Badminton Association was formed at a meeting in Southsea on 13th September 1893. It was the first National Association in the world and framed the rules for the Association and for the game. The popularity of the sport increased rapidly, with 300 clubs established by the 1920s, rising to 9,000 shortly after World War II. The International Badminton Federation (IBF) was formed in 1934 with nine founding members: England, Ireland, Scotland, Wales, Denmark, Holland, Canada, New Zealand and France. As a consequence, the Badminton Association became the Badminton Association of England. From nine founding members, the IBF, now called the Badminton World Federation (BWF), has grown to over 160 member countries. The future of Badminton looks bright. Badminton was officially granted Olympic status at the 1992 Barcelona Games. Indonesia was the dominant force in that first Olympic tournament, winning two golds, a silver and a bronze, the first Olympic medals in the country’s history. More than 1.1 billion people watched the 1992 Olympic Badminton competition on television. Eight years later, and more than a century after introducing Badminton to the world, Britain claimed its first Olympic medal when Simon Archer and Jo Goode won Mixed Doubles Bronze in Sydney. -

Lin Dan Vs Lee Chong Wei: Lin 22 Wins with the Longest Winning Streak Four Times in a Row

Lin Dan vs Lee Chong Wei: Lin 22 wins with the longest winning streak four times in a row. In the early morning of March 12, Beijing time, the men's singles final of the 2012 All England Open Badminton Championships got under way, and once again it was a showdown between Lin and Lee. Lin won the opening game by a close score of 21-19. In the second game, with Lin leading 6-2, Lee Chong Wei had to retire, so Lin claimed this year's championship without further resistance. This is the fifth All England men's singles title won by Lin Dan. He has also made history by winning the most All England men's singles titles of any player in the last 36 years. Lin and Lee are undoubtedly the most successful and spectacular players in today's men's singles badminton. Their rivalry is known as the "Lin-Lee Wars", a name that reflects not only the intensity of their matches but also the smell of gunpowder that hangs over their countless encounters. So far they have met a total of 31 times; Lin Dan has a record of 22 wins and 9 losses against Lee Chong Wei, a clear advantage in the head-to-head. Their first meeting was at the Thomas Cup in 2004, when Lin Dan won 2-1. They last played each other at the 2012 South Korea Super Series, where Lin Dan lost 1-2. -

The Effect of Celebrity Endorsement on Brand Attitude and Purchase Intention

Journal of Global Business and Social Entrepreneurship (GBSE), Vol. 1, no. 4 (2017), pages 141–150 | gbse.com.my | eISSN 24621714

THE EFFECT OF CELEBRITY ENDORSEMENT ON BRAND ATTITUDE AND PURCHASE INTENTION

Chin Vi Vien [email protected], Choy Tuck Yun [email protected], Pang Looi Fai [email protected]. Sunway University Business School, Sunway University, 47500 Selangor Darul Ehsan, Malaysia

Abstract: This research examines how Celebrity Endorsement affects Brand Attitude and Purchase Intention. In this study, the effect of Endorser Credibility, Endorser Likeability, Brand Image and Brand Credibility on Brand Attitude and Purchase Intention was examined. The respondents were 96 university students in Malaysia. Correlation analyses showed that all four independent variables positively and significantly affected Brand Attitude and Purchase Intention. Further multiple linear regression analyses showed that Brand Credibility was more important than Endorser Credibility in positively affecting Brand Attitude. In addition, both Endorser Credibility and Endorser Likeability were more important than Brand Credibility in positively affecting Purchase Intention. The findings add to previous research on the importance of Celebrity Endorsement and provide practical guidance in marketing. In particular, the significance of Celebrity Endorsement over Brand Credibility in Purchase Intention suggests further research. The findings of this research are limited to the purchase behaviour of urban young adults in Malaysia.

Keywords: Celebrity Endorsement, Brand Attitude, Purchase Intention. 2017 GBSE Journal

1.0 Introduction. In recent years, Malaysian organisations have increased their budget on promotional activities and have invested millions in celebrity advertisement (Freeman & Chen, 2015). Favourite Malaysian celebrities usually endorse more than one brand at any one time. -

Top 50 Badminton Prize Winners

Top 50 Badminton Prize Winners - 2017

| Rank | Player | National Association | Total Prize Money (USD) |
|---|---|---|---|
| 1 | Akane Yamaguchi | JPN | 261,363 |
| 2 | Chen Qingchen | CHN | 256,551 |
| 3 | Tai Tzu Ying | TPE | 240,050 |
| 4 | Srikanth Kidambi | IND | 236,423 |
| 5 | Zheng Siwei | CHN | 207,581 |
| 6 | Huang Yaqiong | CHN | 195,907 |
| 7 | Kevin Sanjaya Sukamuljo | INA | 186,625 |
| 7 | Marcus Fernaldi Gideon | INA | 186,625 |
| 9 | Viktor Axelsen | DEN | 165,550 |
| 10 | Lee Chong Wei | MAS | 165,188 |
| 11 | Pusarla Venkata Sindhu | IND | 159,275 |
| 12 | Ratchanok Intanon | THA | 149,813 |
| 13 | Chen Long | CHN | 135,153 |
| 14 | Lee So Hee | KOR | 131,799 |
| 15 | Jia Yifan | CHN | 112,483 |
| 16 | Zhang Nan | CHN | 106,138 |
| 17 | Carsten Mogensen | DEN | 99,624 |
| 17 | Mathias Boe | DEN | 99,624 |
| 19 | Sayaka Sato | JPN | 99,095 |
| 20 | Nozomi Okuhara | JPN | 97,913 |
| 21 | Liu Yuchen | CHN | 97,388 |
| 22 | Christinna Pedersen | DEN | 97,318 |
| 23 | Koharu Yonemoto | JPN | 96,828 |
| 24 | Shiho Tanaka | JPN | 94,043 |
| 25 | Carolina Marin | ESP | 93,673 |
| 26 | Sung Ji Hyun | KOR | 91,345 |
| 27 | Li Junhui | CHN | 90,388 |
| 28 | Lin Dan | CHN | 86,495 |
| 29 | Lu Kai | CHN | 86,440 |
| 30 | Son Wan Ho | KOR | 86,258 |
| 31 | Shi Yuqi | CHN | 85,210 |
| 32 | Sayaka Hirota | JPN | 85,037 |
| 33 | Misaki Matsutomo | JPN | 84,525 |
| 34 | Chang Ye Na | KOR | 80,138 |
| 35 | Liu Cheng | CHN | 78,806 |
| 36 | Wang Yilyu | CHN | 78,775 |
| 37 | Tontowi Ahmad | INA | 77,565 |
| 38 | Shin Seung Chan | KOR | 77,555 |
| 39 | Chou Tien Chen | TPE | 76,750 |
| 40 | Ayaka Takahashi | JPN | 76,597 |
| 41 | Liliyana Natsir | INA | 76,500 |
| 42 | Huang Dongping | CHN | 73,754 |
| 43 | Yuki Fukushima | JPN | 71,168 |
| 44 | Tse Ying Suet | HKG | 71,125 |
| 45 | Tang Chun Man | HKG | 68,241 |
| 46 | Kamilla Rytter Juhl | DEN | 67,684 |
| 47 | Keigo Sonoda | JPN | 62,484 |
| 47 | Takeshi Kamura | JPN | 62,484 |
| 49 | Yu Xiaohan | CHN | 62,268 |
| 50 | Chen Yufei | CHN | 60,513 |

Copyright: Badzine.net. This list can be used for editorial purposes free of rights. -

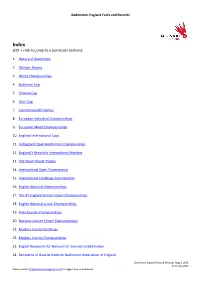

Facts and Records

Badminton England Facts and Records

Index (Ctrl + click to jump to a particular section):

1. History of Badminton
2. Olympic Games
3. World Championships
4. Sudirman Cup
5. Thomas Cup
6. Uber Cup
7. Commonwealth Games
8. European Individual Championships
9. European Mixed Championships
10. England International Caps
11. All England Open Badminton Championships
12. England’s Record in International Matches
13. The Stuart Wyatt Trophy
14. International Open Tournaments
15. International Challenge Tournaments
16. English National Championships
17. The All England Seniors’ Open Championships
18. English National Junior Championships
19. Inter-County Championships
20. National Leisure Centre Championships
21. Masters County Challenge
22. Masters County Championships
23. English Recipients for Honours for Services to Badminton
24. Recipients of Awards made by Badminton Association of England
25. English recipients of Awards made by the Badminton World Federation

Badminton England Facts & Records: Page 1 of 86. As at May 2021. Please contact [email protected] to suggest any amendments.

1. The History of Badminton: Badminton House and Estate lies in the heart of the Gloucestershire countryside and is the private home of the 12th Duke and Duchess of Beaufort and the Somerset family. The House, which is not normally open to the general public, dates from the 17th century and is set in a beautiful deer park which hosts the world-famous Badminton Horse Trials. The Great Hall at Badminton House is famous for an incident on a rainy day in 1863 when the game of badminton was said to have been invented by friends of the 8th Duke of Beaufort. -

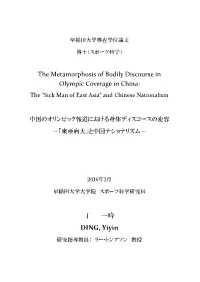

The Metamorphosis of Bodily Discourse in Olympic Coverage in China: the “Sick Man of East Asia” and Chinese Nationalism

Waseda University Doctoral Dissertation, Doctor of Philosophy (Sport Sciences)

The Metamorphosis of Bodily Discourse in Olympic Coverage in China: The “Sick Man of East Asia” and Chinese Nationalism

January 2016. Graduate School of Sport Sciences, Waseda University. DING, Yiyin (丁 一吟). Research advisor: Professor Lee Thompson

Table of Contents

- Table of Contents
- List of Figures
- List of Tables
- Acknowledgements
- Notes on Translation and Use of Terms
- Chapter 1. Introduction
  - 1.1 Prelude: “Sick Man of East Asia” Phenomenon in Contemporary Chinese Popular Culture
  - 1.2 Olympics in China: History and external politics
  - 1.3 Sporting Stereotype and National Identity
  - 1.4 Sport and the Body in China
  - 1.5 Chapter Overview -

Cellebration E-Newsletter (RGB) Revfa

Cellebration: celebrating life together. THE OFFICIAL E-NEWSLETTER OF CRYOCORD, JANUARY 2017

Birthday celebration for Dato’ Lee Chong Wei at Perfect Pregnancy 3.0. CryoCord successfully organized an antenatal class, Perfect Pregnancy 3.0, at The Gardens Hotel, Mid Valley, Kuala Lumpur. More than 200 parents-to-be participated in the event. Perfect Pregnancy 3.0 is designed to help soon-to-be parents prepare for labour, birth and early parenthood. The event was graced by the presence of Dato’ Lee Chong Wei and Datin Wong Mew Choo. The duo played several badminton matches with some of the lucky participants. CryoCord’s selected fans from Hong Kong also had an opportunity to share the court with the badminton ace. Towards the end of the event, a surprise birthday celebration was held for Dato’ Lee.

First UC-MSC study on unrelated recipients approved by Ministry of Health. Cytopeutics is now licensed to carry out stem cell research on healthy participants. The study, done by Cytopeutics in collaboration with NSCMH Medical Centre, uses umbilical-cord mesenchymal stem cells on unrelated recipients and is the first of its kind approved by the Ministry of Health. This research will be led by Dr. Chin Sze Piaw, Clinical and Research Advisor of Cytopeutics. Cytopeutics, a subsidiary of the CryoCord group, is one of the leading stem cell research companies in Malaysia.

Best Stem Cell Bank for five consecutive years. CryoCord has been voted the Best Stem Cell Bank for five consecutive years, since 2012. The voting was done by the public in an independent survey run by the BabyTalk Magazines across the nation. -

Bounedjah Brace Caps 5-Star Al Sadd's Rout of Al

SUNDAY, SEPTEMBER 23, 2018

Liverpool’s perfect start continues with 3-0 win over S’thampton (PAGE 18). ‘I did not like my team’: Mourinho fumes at Manchester United’s draw (PAGE 18). ASIA CUP: INDIA VS PAKISTAN (2.30 PM)

Bounedjah brace caps 5-star Al Sadd’s rout of Al Rayyan

IKOLI VICTOR, DOHA

AL SADD produced an attacking masterclass as they doused any concerns of an Asian Champions League hangover to blow away Al Rayyan in convincing style with five magnificent goals in the Qatar Clasico as coach Jesualdo Ferreira strolled to a massive victory in front of 5,346 fans at Jassim Bin Hamad Stadium on Saturday. Baghdad Bounedjah opened the floodgate of goals on his 42nd QNB Stars League appearance for Al Sadd and added the fourth, before goals from Abdelkarim Hassan, Akram Afif and Jung Wooyoung. This was Al Sadd’s fourth win in five league matches, and for Al Rayyan it was a harrowing experience. It was their biggest defeat in recent memory and first defeat of the season as they dropped to sixth in the league table. Al Sadd, meanwhile, have 13 points with a whopping 26 goals scored in five games and a game still to play.

Photo caption: Al Sadd supporters at the Jassim Bin Hamad Stadium on Saturday.

QNB Stars League: Al Sadd 5 (Bounedjah 11’, 70’, A Hasan 31’, Akram 59’, W. Jung 79’) bt Al Rayyan 0; Al Duhail 4 (El Arabi [...]

Al Duhail upstage Al Gharafa 4-2

TRIBUNE NEWS NETWORK, DOHA

REIGNING QNB Stars League champions Al Du- [...] ond time and found the net. Al Duhail goalkeeper Amin Lecomte brought down Taremi and the referee promptly pointed to the penalty spot. -

The Burning Question - Can Malaysia End Their Elusive Gold Medal Chase?

The Star | Section: Sport | 17-Jul-2021 | Size: 374cm2 | Ad Value: RM 18,821 | PR Value: RM 56,465

The burning question - can Malaysia end their elusive gold medal chase?

RAJES PAUL

IN less than a week, all eyes will be on Japan, a country that promises the best Olympics action despite the Covid-19 challenges. In Malaysia, there is one burning question - can we finally nail our first gold medal? Or maybe even two? The wait has been long. Too long. We have been sending a team to the [...]

[...] three editions in Beijing (2008), London (2012) and Rio de Janeiro (2016). Rio was a Games to remember when we nicked four silvers (three badminton, one diving) and one bronze (cycling). There will be 30 Malaysian athletes in Tokyo, two fewer than in the Rio team but the smaller number does not in any way mean poorer quality.

[...] And based on the draw, this could be his potential pathway to the final - defending champion Chen Long of China in the last 16, second seed Chou Tien-chen of Taiwan in the quarter, Anthony Ginting of Indonesia or Anders Antonsen of Denmark in the semis, and either Kento Momota of Japan or Viktor Axelsen [...]

[...] just has to believe in himself. For the first time, there are more Malaysian women athletes in the Olympics, it does not matter that some of them are there as wildcards. Their presence in the Olympics itself is an indication of an achievement that many of us can only dream about.