Sentiment Classification of News Article

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Miss Venezuela

Universidad Católica Andrés Bello Facultad de Humanidades y Educación Escuela de Comunicación Social Comunicaciones Integradas al Mercadeo Trabajo Final de Concentración Concursos de belleza como herramienta promocional de la moda. Caso de estudio: Miss Venezuela Autoras: María Valentina Castro Lindainés Romero Tutor: Pedro José Navarro Caracas, julio 2017 INDICE GENERAL Introducción…………………………………………………………………….................3 Capítulo I. Marco Teórico……………………………….………………………………5 1. Marco Conceptual………………………………………………………….….5 1.1 Imagen……………………………………………………………………...5 1.1.1 Definición…………………………………………………………...5 1.1.2 Tipos de Imagen…………………………………………………...5 1.2 Posicionamiento…………………………………………………………...6 1.2.1 Definición…………………………………………………………...6 1.2.2 Estrategias de posicionamiento………………………………….7 1.3 Promoción………………………………………………………………….8 1.3.1 Definición…………………………………………………………...8 1.3.2 Herramientas de la mezcla de promoción………………………9 1.3.3 Medios Convencionales…………………………………………10 1.3.4 Medios no Convencionales……………………………………..11 1.3.5 Origen y Evolución…………………………………………...….12 1.4 Segmentación……………………………..……………………………..12 1.4.1 Definición…………………………….……………………………12 2. Marco Referencial……………………………………………………………14 2.1 Origen de los concursos de belleza……………………………………14 2.2 El primer concurso de belleza………………………………………….14 2.3 Miss Venezuela…………………………………………………………..16 2.3.1 Situación político, social y económica del Miss Venezuela…22 Capítulo 2. Marco Metodológico……………………………………………………..26 1. Modalidad…………………………………………………………………26 1 2. Objetivo……………………………………………………………………26 -

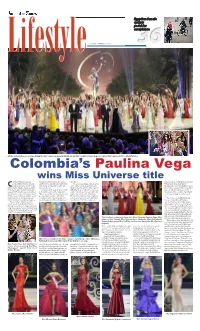

P40 Layout 1

Egyptian female cyclists pedal for acceptance TUESDAY, JANUARY 27, 2015 36 All the contestants pose on stage during the Miss Universe pageant in Miami. (Inset) Miss Colombia Paulina Vega is crowned Miss Universe 2014.— AP/AFP photos Colombia’s Paulina Vega wins Miss Universe title olombia’s Paulina Vega was Venezuelan Gabriela Isler. She edged the map. Cuban soap opera star William Levy and crowned Miss Universe Sunday, out first runner-up, Nia Sanchez from the “We are persevering people, despite Philippine boxing great Manny Cbeating out contenders from the United States, hugging her as the win all the obstacles, we keep fighting for Pacquiao. The event is actually the 2014 United States, Ukraine, Jamaica and The was announced. what we want to achieve. After years of Miss Universe pageant. The competition Netherlands at the world’s top beauty London-born Vega dedicated her title difficulty, we are leading in several areas was scheduled to take place between pageant in Florida. The 22-year-old mod- to Colombia and to all her supporters. on the world stage,” she said earlier dur- the Golden Globes and the Super Bowl el and business student triumphed over “We are proud, this is a triumph, not only ing the question round. Colombian to try to get a bigger television audi- 87 other women from around the world, personal, but for all those 47 million President Juan Manuel Santos applaud- ence. and is only the second beauty queen Colombians who were dreaming with ed her, praising the brown-haired beau- The contest, owned by billionaire from Colombia to take home the prize. -

November 2013 Current Affairs Study Material

Shared on QualifyGate.com . www.QualifyGate.com November 2013 Current Affairs Study Material INTERNATIONAL Taiwan and Singapore Signed Free Trade Agreement Taiwan signed a free trade agreement with Singapore on 7 November in Taipei, the capital of Taiwan.It is first trade agreement Taiwan has signed with a member of the Association of Southeast Nations.Singapore is Taiwan’s fifth-largest trade partner and fourth-largest export market, with bilateral trade totalling US$28.2 billion in 2012. U.S. named Nigeria’s Boko Haram ‘Terrorist’ Group The US State Department has on 13 November officially designated Boko Haram, the Nigerian Islamist group responsible for the 2011 bombing of the UN headquarters in Abuja, as a “foreign terrorist organisation”. The designation is significant because it directs US law enforcement and regulatory agencies to block business and financial transactions with Boko Haram, which is fighting to impose Islamic law in northern Nigeria and has ties to al Qaeda. Boko Haram means “Western education is sacrilege”. Abdullah Yameen Elected as President of Maldives Abdulla Yameen, the candidate of Progressive Party , has been elected as president of the Maldives on 16 November .In the presidential election, Yameen defeated Mohamed Nasheed of Maldivian Democratic Party with a margin of six thousand votes.Yameen secured 51.39 percent (1 lakh 11 thousand) of votes, whereas Mohamed Nasheed received 48.61 (1 lakh 5 thousand votes).Yameen is an economist and the half-brother of Maumoon Abdul Gayoom, the former autocratic ruler, who ruled Maldives for 30 years. Iran and P5+1 Group of Nations Signed The Historic Nuclear Deal Iran and P5+1 group of Nations reached a breakthrough deal on 24 November 2013 to curb Iran’s nuclear programme in exchange for limited sanctions relief.An agreement to this effect was signed at UN Headquarters in Geneva on 24 November 2013 between the Chief negotiator for the six nations, Catherine Marie Ashton and Iranian Foreign Minister Mohammad Jawad Zarief. -

Rihanna Se Une a Mccartney

EXCELSIOR LUNES 26 DE ENERO DE 2015 [email protected] @Funcion_Exc Foto: AP BIRDMAN SUMA TRIUNFOS Durante la entrega de los premios del Sindicato de Actores de Hollywood, el filme Birdman o La inesperada virtud de la igno- rancia, dirigido por el mexicano Alejandro G. Iñárritu, se llevó la presea por Mejor Elenco de Película, rubro en el que competía con cintas como Boyhood: Momentos de una vida, del texano LA MÁS BELLA DEL MUNDO Richard Linklater, El gran hotel Budapest, del también texano Wes Anderson, El código enigma, del noruego Morten Tyldum, y La teoría del todo, del realizador británico James Marsh. “Estoy orgulloso de haber sido parte de este gran equipo. La actuación es como el máximo trabajo en equipo. Este premio es para Alejandro (G. Iñárritu) y para los productores del filme”, dijo el actor Michael Keaton, protagonista de Birdman >10 y 11 Tras superar las pruebas de traje de baño y de noche, Paulina Vega Dieppa, originaria de Barranquilla, fue nombrada Miss Universo, imponiéndose a Estados Unidos, Holanda, Ucrania y Jamaica, las otras finalistas >8 y 9 Foto: Reuters RIHANNA SE UNE A MCCARTNEY Foto: Archivo La cantante Rihanna lanzó ayer, por POR SIEMPRE NEWMAN sorpresa, Four Five Seconds, el primer sencillo de su nuevo álbum, en el que la Un día como hoy, pero de hace 90 años, nació el actor Paul Newman, una de las más grandes estrellas del séptimo arte. acompaña el exbeatle Paul McCartney Su leyenda en la actuación se comenzó a escribir en 1954, y el rapero estadunidense Kanye West. -

Capitulo I Definición

CAPITULO I DEFINICIÓN CAPITULO I DEFINICION 1. DESCRIPCIÓN DE LA SITUACIÓN. OBJETO DE ESTUDIO. Entre las actividades más transcendentales practicadas por las mujeres en los últimos tiempos de este siglo, radica en verse preciosas físicamente, olvidándose muchas veces que, la belleza se puede descubrir también en el interior de sí mismas, por eso se hace necesario promover la inclusión y aceptación de la preciosidad femenina desde otras figuras, es decir, aceptar que se pueden ver encantadoras sin importar cuál sea la talla corporal que se luzca. Todo esto se ve reforzado por unos poderosos medios de comunicación que presentan un cierto ideal físico de mujer, necesario para triunfar en la vida, según los cánones de belleza del momento. Según Morrison (2004), a través de la historia, el ideal de belleza se ha fortalecido dentro del contexto social y cada vez ha sido más difícil de alcanzar. El fuerte impacto que ejercen los medios masivos de comunicación; sobre la promoción de este ideal de lindeza y de éxito, así como, el equivalente a estar delgado, apoya la cultura del adelgazamiento. Ahora bien, la campaña "Venezuela tiene curvas" surge del esfuerzo de diferentes jóvenes venezolanas con el propósito de fomentar un sistema 5 6 de valores, considerado perdido en un país, donde “ser bella” es poseer unos atributos de belleza con patrones o valores corporales cercanos a los 90-60-90. Con ésta iniciativa buscan romper los estereotipos e impulsar el amor propio, la sana aceptación, autoestima y desarrollo de personalidad en las chicas que por no poseer estas medidas se sienten excluidas. -

Miss Universe Venezuela, Gabriela Isler Crowned Miss Universe 2013 at Crocus City Hall in Moscow, Russia

MISS UNIVERSE VENEZUELA, GABRIELA ISLER CROWNED MISS UNIVERSE 2013 AT CROCUS CITY HALL IN MOSCOW, RUSSIA Moscow, Russia – November 9, 2013 – In front of a worldwide audience of approximately 1 billion viewers, Miss Universe Venezuela, Gabriela Isler was crowned Miss Universe 2013 from Crocus City Hall in Moscow, Russia. The 62nd Annual MISS UNIVERSE® Competition was broadcast on NBC with a Spanish simulcast on Telemundo. Miss Universe Venezuela, Gabriela Isler is the 7th young woman to take home the Miss Universe crown from Venezuela. The 25 year-old college graduate with a marketing degree enjoys flamenco dancing and baking when she is not modeling. She is from the town of Maracay, Venezuela, right outside of Caracas, the country’s capital. Thomas Roberts, anchor of (“MSNBC Live”) and Melanie Brown, best known to audiences as Mel B (“America’s Got Talent”), co-hosted the pageant. Jeannie Mai, recognized for her fashion segments on NBC’s “Today,” served as commentator. The judges who sealed the fate of this year’s winner included: Steven Tyler, Aerosmith’s front man and lead singer; Chef Nobu, acclaimed chef proprietor of Nobu and Matsuhisa restaurants; Tara Lipinski, Gold medal figure skater and 2014 Winter Olympics figure skating analyst for NBC Olympics’ multi-platform coverage; Carol Alt, TV personality, star of Fox News Channel's 'A HEALTHY YOU AND CAROL ALT’, international supermodel, actress, author, entrepreneur and raw-food enthusiast; Anne V, supermodel, actress, philanthropist and mentor on Oxygen's upcoming season of “The Face” with nine consecutive appearances in Sports Illustrated Swimsuit issue; Farouk Shami, founder of Farouk Systems, Inc. -

Gabriela Isler Miss Universe 2013

GABRIELA ISLER MISS UNIVERSE 2013 On November 9, 2013, Gabriela Isler made history when she was named Miss Universe, becoming the seventh woman from Venezuela to bring home the crown. Representing a country with such an impressive Miss Universe track record can be a source of added pressure for Venezuelan beauties competing for the ultimate title. But for 26-year-old Gabriela wearing the Venezuela sash was a great honor allowing her to compete with confidence and pride onstage. Venezuela is known for intensive training programs for its beauty queens. Gabriela credits her age and life experiences for giving her the ability to make her own decisions on how she wanted to present herself at the pageant, even at times when she was given instructions from her Venezuelan director. One request of pageant coaches to which she did acquiesce, was to wear only high heels during her three weeks on location in Russia. Surprisingly, prior to winning the Miss Venezuela title, Gabriela – a stunning beauty at nearly six feet tall – never saw herself having a career in front of the camera. Rather, she was focused on her studies and graduated from the private institution, UNITEC with a degree in marketing and business administration and eventually plans to open her own business after getting her MBA. While going to school, Gabriela was introduced to the modeling world unexpectedly when a scout spotted her unique features which she attributes to her father who is Swiss and German. Modeling led to Miss Universe Venezuela “and the rest,” as they say, “is history.” Gabriela has used her title to bring attention to important issues around the world, especially those that involve women and children. -

A Cure by 2020?

SUMMER 2014 INNThe Newsletter of amfAR,OV The FoundationAT for AIDS ResearchIONS Countdown to a Cure for AIDS: A Cure by 2020? In February 2014, amfAR announced it the scientic community a new will ratchet up the intensity of its research understanding of the challenges that efforts with the launch of a bold new must be overcome to get to a cure. And initiative, Countdown to a Cure for AIDS. there is growing condence that, with the The goal: to develop the scientic basis right investments, these challenges can for a broadly applicable cure by 2020. be overcome. “With an improving economy, To reach the ambitious goal of a recent technological advances and cure by 2020, amfAR plans to raise and momentum in the research community, dedicate up to $100 million to the effort. now is the time to commit ourselves to It is also moving to a more directed nding an accessible cure and nally research investment strategy focused on bring the global HIV/AIDS epidemic collaborative approaches to addressing to an end,” said amfAR Chairman what amfAR has identied as the four amfAR Trustee Harry Belafonte delivered a powerful call to action Kenneth Cole. key challenges that stand in the way of at the amfAR New York Gala, February 5, to launch amfAR’s Research breakthroughs over a cure: Countdown to a Cure. the last several years have brought CONTINUED ON PAGE 6 amfAR Ramps Up Investments in Cure Research THREE ROUNDS OF GRANTS IN FIRST HALF OF 2014 TOTAL ALMOST $4 MILLION In the rst six months of 2014, amfAR research that will accelerate our progress announced three new rounds of cure- toward a cure.” focused research grants to 21 teams of Representing the largest sum scientists totaling close to $4 million. -

Celsa Landaeta Y Lilibeth Morillo No

Celsa landaeta y lilibeth morillo no Continue ❗ OF EXTENSIONS AND PASSPORTS RECEIVED IN FEBRUARY, JANUARY 2020 AND DECEMBER, AUGUST 2019❗ The Consular Section of the Bolivarian Republic of Venezuela in Peru repeats the lists of passports and extensions published above (February, January 2020 and December and August 2019) and makes available to our conferences that have advanced in Peru, following telephone numbers to agree the revocation of their documents as planned. Call hours From Monday to Saturday from 9:00 to 18:00. 980 622 779 999 645 327 912 669 626 923 185 964 924 12 5 733 922 297 825 To reconcile your appointment they should appear in published lists and find the list number and order, in which they appear (Example: in case of extension, B1, No. 54; in case of obtaining a passport No. 178). The call will explain the requirements to be made. List of extensions received in February 2020: List of passports that arrived in January 2020: The list of extensions received in December 2019: The list of extensions that arrived in August 2019: Reportedly, the withdrawal of the document is strictly personal. For children and adolescents, retirement can be made by a parent. It is emphasized that no managers or processing is allowed. confirmed that in order to protect healthcare and ensure risky situations against COVID-19, the withdrawal of the document is due to the planned appointment in coordination with our consular officials. In this sense, the use of a mask is mandatory for the implementation of security measures, the arrival is strictly punctual at the time of your appointment and the visit of only the user who withdraws the document. -

Sponsorship Package

— Our Health, Our Future — CIELO GALA CIPRIANI WALL STREET, NYC | FRIDAY, OCTOBER 15, 2021 www.cielolatino.org SPONSORSHIP PACKAGE Cielo is a fundraising event of the Latino Commission on AIDS (501(c)3) 24 W 25th Street, 9th Floor, New York, NY 10010 • (212) 675-3288 www.cielolatino.org DESIGNING A WORLD WITHOUT AIDS CIELO’S IMPACT Last year we kicked-off our 30th anniversary celebration at our first ever Virtual Cielo Gala. We are excited to continue our 30-year celebration with a look toward the future and our goals for the Latino Commission on AIDS. We invite you to join us as a sponsor for our live in-person 2021 Cielo gala on October 15, 2021, at Cipriani Wall Street in NYC, when we will also simultaneously celebrate our 19th annual National Latino AIDS Awareness Day (NLAAD). As the largest annual fundraiser in response to the impact of the HIV/AIDS epidemic in the Hispanic/ Latinx community, Cielo supports the Latino Commission on AIDS’ mission to spearhead health advocacy, promote HIV education, develop model prevention programs for high-risk communities, and build capacity in our community organizations and clients. Our tireless work to serve the Latinx community did not stop in 2020; it only grew as we leveraged our expertise in the battle against HIV and AIDS, to combat the novel Coronavirus (COVID-19). We need your support to reach this goal! We could not have achieved our past successes without our partners like you. As we continue to celebrate three decades of dedication, leadership and impact, and continue our mission, we humbly ask that you consider a sponsorship that will help the Latino Commission on AIDS to continue its work protecting the health of those most vulnerable in our communities. -

“Soy Sólo Una Servidora Del Señor”

SU TIENDA HISPANAÊUÊEnvíos de dinero /i°ÊÓnÎÈÓäÓnÓÊUÊ289-362-0369 2-278 Bunting Rd., St. Catharines ON L2M 7M2 EL PRIMER PERIÓDICO HISPANO DE LAS REGIONES DE HAMILTON, HALTON, PEEL Y NIÁGARA GRATIS Vol 10 - No. 5 Febrero 2015 “Soy sólo una servidora del Señor” Un ejemplo de esfuerzo, supera- ción y amor incondicional al prójimo es Maritza Véliz. Una generosa gua- temalteca que abrazada a la palabra de Dios, tras 24 años viviendo en Ca- nadá, no ha cesado de dar lo mejor de sí para brindar apoyo a quienes llegan en busca de un mejor futuro a este país llenos de dudas, miedos y nece- sidades. Además es una diligente promo- tora del desarrollo de la comunidad a través de valores humanos y cristia- nos, valores que no sólo inculca, sino que pone en práctica cada día de su vida. ¡Tuvo un Accidente de Carro! ABOGADO Y NOTARIO PÚBLICO Federico Pérez Hernández BARRISTER & SOLICITOR Member of the Law Society of Upper Canada ¿Tiene un famliar detenido? ¿Se está separando o divorciando? LEY CRIMINAL - LEY DE FAMILIA LEY DE INMIGRACIÓN TRADUCCIONES LEGALES CERTIFICADAS 8 Main Street East, Suite 400 Hamilton, Ontario L8N 1E8 Por estas calles Reportaje especial Farándula Niágara 905-869-2468 Jóvenes hispanos donantes Un amor Paulina Vega Adriana Clifford preside 416-458-8701 de células madres 7 sin querer queriendo 9 coronada Miss Universo 13 Multicultural Center 17 [email protected] LLÁMANOS AL 905-575-2101 SOLICITANDO TU CANCIÓN FAVORITA O COMPARTIENDO INFORMACIÓN DE INTERÉS COMUNITARIO... s0RESENCIA,ATINA - Febrero 2015 Un plan a la medida comienza con una asesoría que se ajusta perfectamente a sus objetivos. -

Venezuelancrowned Miss Universein Moscow

Clooney, Cumberbatch honored at BAFTA Britannia awards MONDAY, NOVEMBER 11, 2013 39 Participants take part in the 2013 Miss Universe competition in Moscow on November 9, 2013.—AFP photos crowned Miss Universe in Moscow 25-year-oldVenezuelan Venezuelan television presenter, Gabriela Isler, on Satur- swimsuit that the organizers said was worth $1 million. the audience. The contest, whose slogan is “confidently beautiful,” was first day was crowned Miss Universe in Moscow in a glittering ceremony. The previous Miss Universe, Culpo, earlier appeared on stage wearing held in 1952 in Palm Beach in the United States. It still requires the women A Judges, including rock star Steven Tyler from Aerosmith, picked the the white cutaway swimsuit studded with diamonds, rubies and emeralds. not to be married or pregnant. Complete with a swimsuit round, the con- winner from a total of 86 contestants at the show, watched by millions of “That swimsuit travels with an armed security guard 24/7,” commented test remains hugely popular, particularly in Central and South America. viewers around the world. Last year’s winner Olivia Culpo of the United co-host Thomas Roberts, an American television presenter. Roberts, who States placed a diamond crown on the head of Isler, who wore a silver co-hosted the final with ex-Spice Girl Melanie Brown, dedicated the show to ‘I empathize with the LGBT people here’ rhine-stone studded evening dress. Isler’s triumph was the seventh Miss the people suffering from the typhoon in the Philippines.“We send our very The decision to stage the show in Moscow has sparked debate over Rus- Universe win for Venezuela, where beauty pageants are a major source of best, our thoughts.