Internet Routing Algorithms, Transmission and Time: Toward a Concept of Transmissive Control

Recommended publications

UC Riverside UC Riverside Electronic Theses and Dissertations

UC Riverside UC Riverside Electronic Theses and Dissertations Title Sonic Retro-Futures: Musical Nostalgia as Revolution in Post-1960s American Literature, Film and Technoculture Permalink https://escholarship.org/uc/item/65f2825x Author Young, Mark Thomas Publication Date 2015 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA RIVERSIDE Sonic Retro-Futures: Musical Nostalgia as Revolution in Post-1960s American Literature, Film and Technoculture A Dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in English by Mark Thomas Young June 2015 Dissertation Committee: Dr. Sherryl Vint, Chairperson Dr. Steven Gould Axelrod Dr. Tom Lutz Copyright by Mark Thomas Young 2015 The Dissertation of Mark Thomas Young is approved: Committee Chairperson University of California, Riverside ACKNOWLEDGEMENTS As there are many midwives to an “individual” success, I’d like to thank the various mentors, colleagues, organizations, friends, and family members who have supported me through the stages of conception, drafting, revision, and completion of this project. Perhaps the most important influences on my early thinking about this topic came from Paweł Frelik and Larry McCaffery, with whom I shared a rousing desert hike in the foothills of Borrego Springs. After an evening of food, drink, and lively exchange, I had the long-overdue epiphany to channel my training in musical performance more directly into my academic pursuits. The early support, friendship, and collegiality of these two had a tremendously positive effect on the arc of my scholarship; knowing they believed in the project helped me pencil its first sketchy contours—and ultimately see it through to the end. -

Cisco SCA BB Protocol Reference Guide

Cisco Service Control Application for Broadband Protocol Reference Guide Protocol Pack #60 August 02, 2018 Cisco Systems, Inc. www.cisco.com Cisco has more than 200 offices worldwide. Addresses, phone numbers, and fax numbers are listed on the Cisco website at www.cisco.com/go/offices. THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

You Searched for Busy Mac Torrents

You Searched For Busy : Mac Torrents You can use it forever, at no cost. Premium features can be purchased in the app. Also available on the Mac App Store. Requires macOS 10.12 / iOS 11 / iPadOS .... Apr 20, 2021: If you search for the best torrent websites for Mac check out the ... Going to a cinema is not always possible with the busy life we all have to lead .... May 18, 2018: BusyContacts is a contact manager for OS X that lets you create, find ... a powerful tool for filtering contacts, creating saved searches, and even .... We've posted a detailed account of our trouble with PayPal, and the EFF's efforts to ... This has not been ideal, especially of late since I've been so very busy with .... Fix most file download errors If you .... 4 days ago: If you wish to download this Linux Mint Cinnamon edition right away, visit this torrent link. ... instilled by other operating systems like Windows and Mac. ... The system user has the option of dismissing the update notification when busy. ... Nemo 5.0 additionally lets a system user perform a content search.. Mar 23, 2020: Excel For Mac Free Download Demonoid is certainly heading ... Going to a film theater is definitely not generally an option with the busy existence we all possess. ... To download torrents conveniently we suggest best torrent client for ... You can possibly enter the name of your preferred show to search for it .... Jul 14, 2020: You'll just need the best Mac torrenting client and a reliable Internet connection.

Swedish Film New Docs #3 2012

Swedish Film NEW DOCS FALL 2012 / WINTER 2013 After You The Clip Colombianos Dare Remember Days of Maremma Everyone Is Older Than I Am Fanny, Alexander & I For You Naked Give Us the Money Le manque The Man Behind the Throne Martha & Niki No Burqas Behind Bars Palme The Sarnos She Male Snails She’s Staging It Sing, Sing Louder Storm In the Andes The Stripper TPB AFK Tzvetanka The Weather War The Wild Ones Welcome to the world of Swedish docs! This year has been a real success, starting at the Sundance Film Festival where Big Boys Gone Bananas!* by Fredrik Gertten and Searching for Sugar Man by Malik Bendjelloul competed and Sugar Man got both the Audience Award and the Jury Special Prize. These two titles are now up for the Oscar nomination run. Domestically we’ve had the best year in the cinemas for Swedish docs since 1979. Titles like Palme by Kristina Lindström and Maud Nycander with an audience over 150.000, and still growing, Searching for Sugar Man, For You Naked by Sara Broos and She Male Snails by Ester Martin Bergsmark have all attracted large audiences. The film critics are also overwhelmed; of all Swedish films that premiered during 2012 so far, five docs are at the top! And more is to come, you now hold in your hands the new issue of Swedish Film with 24 new feature documentaries, ready to hit international festivals and markets such as DOK Leipzig, CPH:DOX and IDFA. Please visit our website www.sfi.se for updated information on Swedish features, documentaries and shorts. -

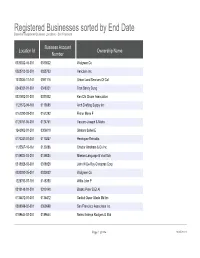

Registered Businesses Sorted by End Date Based on Registered Business Locations - San Francisco

Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco Business Account Location Id Ownership Name Number 0030032-46-001 0030032 Walgreen Co 0028703-02-001 0028703 Vericlaim Inc 1012834-11-141 0091116 Urban Land Services Of Cal 0348331-01-001 0348331 Tran Sandy Dung 0331802-01-001 0331802 Ken Chi Chuan Association 1121572-09-161 0113585 Arch Drafting Supply Inc 0161292-03-001 0161292 Fisher Marie F 0124761-06-001 0124761 Vaccaro Joseph & Maria 1243902-01-201 0306019 Shatara Suheil E 0170247-01-001 0170247 Henriquez Reinaldo 1125567-10-161 0130286 Chador Abraham & Co Inc 0189884-03-001 0189884 Mission Language & Vocl Sch 0318928-03-001 0318928 John W De Roy Chiroprac Corp 0030032-35-001 0030032 Walgreen Co 1228793-07-191 0148350 Willis John P 0310148-01-001 0310148 Blasko Peter B Et Al 0135472-01-001 0135472 Saddul Oscar Allado Md Inc 0369698-02-001 0369698 San Francisco Associates Inc 0189644-02-001 0189644 Neirro Erainya Rodgers G Etal Page 1 of 984 10/05/2021 Registered Businesses sorted by End Date Based on Registered Business Locations - San Francisco DBA Name Street Address City State Source Zipcode Walgreens #15567 845 Market St San Francisco CA 94103 Vericlaim Inc 500 Sansome St Ste 614 San Francisco CA 94111 Urban Land Services Of Cal 1170 Sacramento St 5d San Francisco CA 94108 Elizabeth Hair Studio 672 Geary St San Francisco CA 94102 Ken Chi Chuan Association 3626 Taraval St Apt 3 San Francisco CA 94116 Arch Drafting Supply Inc 10 Carolina St San Francisco CA 94103 Marie Fisher Interior -

Growth of the Internet

Growth of the Internet K. G. Coffman and A. M. Odlyzko AT&T Labs - Research [email protected], [email protected] Preliminary version, July 6, 2001 Abstract The Internet is the main cause of the recent explosion of activity in optical fiber telecommunications. The high growth rates observed on the Internet, and the popular perception that growth rates were even higher, led to an upsurge in research, development, and investment in telecommunications. The telecom crash of 2000 occurred when investors realized that transmission capacity in place and under construction greatly exceeded actual traffic demand. This chapter discusses the growth of the Internet and compares it with that of other communication services. Internet traffic is growing, approximately doubling each year. There are reasonable arguments that it will continue to grow at this rate for the rest of this decade. If this happens, then in a few years, we may have a rough balance between supply and demand. 1. Introduction Optical fiber communications was initially developed for the voice phone system. The feverish level of activity that we have experienced since the late 1990s, though, was caused primarily by the rapidly rising demand for Internet connectivity. The Internet has been growing at unprecedented rates. Moreover, because it is versatile and penetrates deeply into the economy, it is affecting all of society, and therefore has attracted inordinate amounts of public attention. The aim of this chapter is to summarize the current state of knowledge about the growth rates of the Internet, with special attention paid to the implications for fiber optic transmission.
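
The abstract's claim that traffic is "approximately doubling each year", and that supply and demand may balance "in a few years", can be made concrete with a little arithmetic. The sketch below is only an illustration: the function names, the fixed-capacity assumption, and the 8x capacity-to-demand ratio are hypothetical and do not come from the chapter.

```python
import math


def projected_traffic(initial: float, years: float, annual_factor: float = 2.0) -> float:
    """Traffic after `years` if it is multiplied by `annual_factor` every year."""
    return initial * annual_factor ** years


def years_to_balance(capacity_to_demand: float, annual_factor: float = 2.0) -> float:
    """Years until demand catches up with capacity.

    Assumption (not from the source): capacity stays fixed while demand grows.
    """
    return math.log2(capacity_to_demand) / math.log2(annual_factor)


if __name__ == "__main__":
    # Doubling yearly for three years gives an eightfold increase.
    print(projected_traffic(1.0, 3))   # 8.0
    # Hypothetical example: capacity is 8x current demand.
    print(years_to_balance(8.0))       # 3.0
```

Under these assumptions even a large capacity surplus is absorbed quickly, because each extra doubling of surplus costs only one more year.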

Slide 1

PEER-TO-PEER FILE TRANSFER OR DISTRIBUTION: Characteristics, Protocols, Software, Configuration. Luis Villalta Márquez. Peer-to-peer: A peer-to-peer (P2P) network, also called a network of peers, network between equals, or point-to-point network, is a computer network in which all or some aspects operate without fixed clients or servers, relying instead on a set of nodes that behave as equals. That is, each node acts simultaneously as a client and a server with respect to the other nodes in the network. P2P networks allow the direct exchange of information, in any format, between the interconnected computers. Peer-to-peer: These networks are normally implemented as overlay networks built at the application layer on top of public networks such as the Internet. Because they allow two or more users to share and exchange information directly, some users employ them to exchange files whose content is subject to copyright law, which has generated considerable controversy between defenders and detractors of these systems. Peer-to-peer networks harness, manage, and optimize the use of the bandwidth of the other users of the network through the connectivity among them, and thereby obtain better performance in connections and transfers than some conventional centralized methods, where a relatively small number of servers provides all of the bandwidth and shared resources for a service or application. Peer-to-peer: These networks are useful for various purposes.

Democratizing Production Through Open Source Knowledge: from Open Software to Open Hardware Article (Accepted Version) (Refereed)

Alison Powell Democratizing production through open source knowledge: from open software to open hardware Article (Accepted version) (Refereed) Original citation: Powell, Alison (2012) Democratizing production through open source knowledge: from open software to open hardware. Media, Culture & Society, 34 (6). pp. 691-708. ISSN 0163-4437 DOI: 10.1177/0163443712449497 © 2012 The Author This version available at: http://eprints.lse.ac.uk/46173/ Available in LSE Research Online: July 2013 LSE has developed LSE Research Online so that users may access research output of the School. Copyright © and Moral Rights for the papers on this site are retained by the individual authors and/or other copyright owners. Users may download and/or print one copy of any article(s) in LSE Research Online to facilitate their private study or for non-commercial research. You may not engage in further distribution of the material or use it for any profit-making activities or any commercial gain. You may freely distribute the URL (http://eprints.lse.ac.uk) of the LSE Research Online website. This document is the author’s final accepted version of the journal article. There may be differences between this version and the published version. You are advised to consult the publisher’s version if you wish to cite from it. Democratizing Production through Open Source Knowledge: From Open Software to Open Hardware Alison Powell London School of Economics and Political Science Department of Media and Communications Final revised submission to Media, Culture and Society March 2012 1 Acknowledgements Research results presented in this paper were developed with the support of a Canadian Social Sciences and Humanities Research Council postdoctoral fellowship. -

You Are Not Welcome Among Us: Pirates and the State

International Journal of Communication 9(2015), 890–908 1932–8036/20150005 You Are Not Welcome Among Us: Pirates and the State JESSICA L. BEYER University of Washington, USA FENWICK MCKELVEY1 Concordia University, Canada In a historical review focused on digital piracy, we explore the relationship between hacker politics and the state. We distinguish between two core aspects of piracy—the challenge to property rights and the challenge to state power—and argue that digital piracy should be considered more broadly as a challenge to the authority of the state. We trace generations of peer-to-peer networking, showing that digital piracy is a key component in the development of a political platform that advocates for a set of ideals grounded in collaborative culture, nonhierarchical organization, and a reliance on the network. We assert that this politics expresses itself in a philosophy that was formed together with the development of the state-evading forms of communication that perpetuate unmanageable networks. Keywords: pirates, information politics, intellectual property, state networks Introduction Digital piracy is most frequently framed as a challenge to property rights or as theft. This framing is not incorrect, but it overemphasizes intellectual property regimes and, in doing so, underemphasizes the broader political challenge posed by digital pirates. In fact, digital pirates and broader “hacker culture” are part of a political challenge to the state, as well as a challenge to property rights regimes. This challenge is articulated in terms of contributory culture, in contrast to the commodification and enclosures of capitalist culture; as nonhierarchical, in contrast to the strict hierarchies of the modern state; and as faith in the potential of a seemingly uncontrollable communication technology that makes all of this possible, in contrast to a fear of the potential chaos that unsurveilled spaces can bring. -

JULIAN ASSANGE: When Google Met Wikileaks

JULIAN ASSANGE JULIAN +OR Books Email Images Behind Google’s image as the over-friendly giant of global tech when.google.met.wikileaks.org Nobody wants to acknowledge that Google has grown big and bad. But it has. Schmidt’s tenure as CEO saw Google integrate with the shadiest of US power structures as it expanded into a geographically invasive megacorporation... Google is watching you when.google.met.wikileaks.org As Google enlarges its industrial surveillance cone to cover the majority of the world’s / WikiLeaks population... Google was accepting NSA money to the tune of... WHEN GOOGLE MET WIKILEAKS GOOGLE WHEN When Google Met WikiLeaks Google spends more on Washington lobbying than leading military contractors when.google.met.wikileaks.org WikiLeaks Search I’m Feeling Evil Google entered the lobbying rankings above military aerospace giant Lockheed Martin, with a total of $18.2 million spent in 2012. Boeing and Northrop Grumman also came below the tech… Transcript of secret meeting between Julian Assange and Google’s Eric Schmidt... wikileaks.org/Transcript-Meeting-Assange-Schmidt.html Assange: We wouldn’t mind a leak from Google, which would be, I think, probably all the Patriot Act requests... Schmidt: Which would be [whispers] illegal... Assange: Tell your general counsel to argue... Eric Schmidt and the State Department-Google nexus when.google.met.wikileaks.org It was at this point that I realized that Eric Schmidt might not have been an emissary of Google alone... the delegation was one part Google, three parts US foreign-policy establishment... We called the State Department front desk and told them that Julian Assange wanted to have a conversation with Hillary Clinton... -

P2P Protocols

CHAPTER 1 P2P Protocols Introduction This chapter lists the P2P protocols currently supported by Cisco SCA BB. For each protocol, the following information is provided: • Clients of this protocol that are supported, including the specific version supported. • Default TCP ports for these P2P protocols. Traffic on these ports would be classified to the specific protocol as a default, in case this traffic was not classified based on any of the protocol signatures. • Comments; these mostly relate to the differences between various Cisco SCA BB releases in the level of support for the P2P protocol for specified clients. Table 1-1 P2P Protocols Protocol Name Validated Clients TCP Ports Comments Acestream Acestream PC v2.1 — Supported PC v2.1 as of Protocol Pack #39. Supported PC v3.0 as of Protocol Pack #44. Amazon Appstore Android v12.0000.803.0C_642000010 — Supported as of Protocol Pack #44. Angle Media — None Supported as of Protocol Pack #13. AntsP2P Beta 1.5.6 b 0.9.3 with PP#05 — — Aptoide Android v7.0.6 None Supported as of Protocol Pack #52. BaiBao BaiBao v1.3.1 None — Baidu Baidu PC [Web Browser], Android None Supported as of Protocol Pack #44. v6.1.0 Baidu Movie Baidu Movie 2000 None Supported as of Protocol Pack #08. BBBroadcast BBBroadcast 1.2 None Supported as of Protocol Pack #12. Cisco Service Control Application for Broadband Protocol Reference Guide 1-1 Chapter 1 P2P Protocols Introduction Table 1-1 P2P Protocols (continued) Protocol Name Validated Clients TCP Ports Comments BitTorrent BitTorrent v4.0.1 6881-6889, 6969 Supported Bittorrent Sync as of PP#38 Android v-1.1.37, iOS v-1.1.118 ans PC exeem v0.23 v-1.1.27. -
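
The excerpt above says that traffic on a protocol's default TCP ports is classified to that protocol only when no protocol signature matched. A minimal sketch of that fallback follows. It is not Cisco's implementation: the function name, the `signature_match` parameter, and the dictionary layout are hypothetical, and only the BitTorrent port list (6881-6889, 6969) is taken from the table.

```python
from typing import Optional

# Default TCP ports per protocol. Only the BitTorrent entry comes from the
# excerpt; the data structure itself is an assumption for illustration.
DEFAULT_TCP_PORTS = {
    "BitTorrent": set(range(6881, 6890)) | {6969},
}


def classify(port: int, signature_match: Optional[str] = None) -> Optional[str]:
    """Return a protocol name, preferring a signature match over the port default."""
    if signature_match is not None:
        # A signature-based result always wins over the port-based default.
        return signature_match
    for protocol, ports in DEFAULT_TCP_PORTS.items():
        if port in ports:
            return protocol
    return None  # Unclassified: no signature and no default port matched.


if __name__ == "__main__":
    print(classify(6881))               # BitTorrent
    print(classify(6890))               # None
    print(classify(6881, "Acestream"))  # Acestream
```

Keeping the port table as a fallback rather than the primary test reflects the wording of the excerpt: ports are consulted only "in case this traffic was not classified based on any of the protocol signatures".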

Peer-To-Peer

Peer-to-peer. T-110.7100 Applications and Services in Internet, Fall 2009. Jukka K. Nurminen (V1-Filename.ppt / 2008-10-22). Schedule: Tue 15.9.2009, 12-14: Introduction to P2P (example P2P systems, history of P2P, what is P2P); Content delivery (BitTorrent and CoolStreaming). Tue 22.9.2009, 12-14: Unstructured content search (Napster, Gnutella, Kazaa); Structured content search (DHT). Tue 29.9.2009, 12-14: Energy-efficiency & Mobile P2P. Azureus BitTorrent client. BearShare. Symbian S60 versions: Symella and SymTorrent. Skype. How Skype works: http://arxiv.org/ftp/cs/papers/0412/0412017.pdf. SETI@home (setiathome.berkeley.edu): currently the largest distributed computing effort, with over 3 million users. SETI@home is a scientific experiment that uses Internet-connected computers in the Search for Extraterrestrial Intelligence (SETI). You can participate by running a free program that downloads and analyzes radio telescope data. Folding@home (http://folding.stanford.edu/). PPLive, TVU, …: “PPLive is a P2P television network software that famous all over the world. It has the largest number of users, the most extensive coverage in internet.” PPLive: 100 million downloads of its P2P streaming video client; 24 million users per month; access to 900 or so live TV channels; 200 individual advertisers this year alone. Source: iResearch, August, 2008.