Absolutely No Free Lunches!

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Version of 21.8.15 Chapter 43 Topologies and Measures II The

Version of 21.8.15 Chapter 43 Topologies and measures II The first chapter of this volume was ‘general’ theory of topological measure spaces; I attempted to distinguish the most important properties a topological measure can have – inner regularity, τ-additivity – and describe their interactions at an abstract level. I now turn to rather more specialized investigations, looking for features which offer explanations of the behaviour of the most important spaces, radiating outwards from Lebesgue measure. In effect, this chapter consists of three distinguishable parts and two appendices. The first three sections are based on ideas from descriptive set theory, in particular Souslin’s operation (§431); the properties of this operation are the foundation for the theory of two classes of topological space of particular importance in measure theory, the K-analytic spaces (§432) and the analytic spaces (§433). The second part of the chapter, §§434-435, collects miscellaneous results on Borel and Baire measures, looking at the ways in which topological properties of a space determine properties of the measures it carries. In §436 I present the most important theorems on the representation of linear functionals by integrals; if you like, this is the inverse operation to the construction of integrals from measures in §122. The ideas continue into §437, where I discuss spaces of signed measures representing the duals of spaces of continuous functions, and topologies on spaces of measures. The first appendix, §438, looks at a special topic: the way in which the patterns in §§434-435 are affected if we assume that our spaces are not unreasonably complex in a rather special sense defined in terms of measures on discrete spaces.

Interim Report IR-07-027 Adaptive Dynamics for Physiologically

International Institute for Applied Systems Analysis, Schlossplatz 1, A-2361 Laxenburg, Austria. Tel: +43 2236 807 342. Fax: +43 2236 71313. E-mail: [email protected] Web: www.iiasa.ac.at. Interim Report IR-07-027. Adaptive dynamics for physiologically structured population models. Michel Durinx ([email protected]), Johan A.J. Metz ([email protected]), Géza Meszéna ([email protected]). Approved by Ulf Dieckmann, Leader, Evolution and Ecology Program, October 2007. Interim Reports on work of the International Institute for Applied Systems Analysis receive only limited review. Views or opinions expressed herein do not necessarily represent those of the Institute, its National Member Organizations, or other organizations supporting the work. IIASA STUDIES IN ADAPTIVE DYNAMICS NO. 133. The Evolution and Ecology Program at IIASA fosters the development of new mathematical and conceptual techniques for understanding the evolution of complex adaptive systems. Focusing on these long-term implications of adaptive processes in systems of limited growth, the Evolution and Ecology Program brings together scientists and institutions from around the world with IIASA acting as the central node. Scientific progress within the network is collected in the IIASA Studies in Adaptive Dynamics series. No. 1 Metz JAJ, Geritz SAH, Meszéna G, Jacobs FJA, van Heerwaarden JS: Adaptive Dynamics: A Geometrical Study of the Consequences of Nearly Faithful Reproduction. IIASA Working Paper WP-95-099 (1995). No. 11 Geritz SAH, Metz JAJ, Kisdi É, Meszéna G: The Dynamics of Adaptation and Evolutionary Branching. IIASA Working Paper WP-96-077 (1996). Physical Review Letters

Beyond Lebesgue and Baire IV: Density Topologies and a Converse

Beyond Lebesgue and Baire IV: Density topologies and a converse Steinhaus-Weil Theorem, by N. H. Bingham and A. J. Ostaszewski. arXiv:1607.00031v2 [math.GN], 8 Nov 2017. On the centenary of Hausdorff’s Mengenlehre (1914) and Denjoy’s Approximate continuity (1915). Abstract. The theme here is category-measure duality, in the context of a topological group. One can often handle the (Baire) category case and the (Lebesgue, or Haar) measure cases together, by working bi-topologically: switching between the original topology and a suitable refinement (a density topology). This prompts a systematic study of such density topologies, and the corresponding σ-ideals of negligibles. Such ideas go back to Weil’s classic book, and to Hashimoto’s ideal topologies. We make use of group norms, which cast light on the interplay between the group and measure structures. The Steinhaus-Weil interior-points theorem (‘on AA^{-1}’) plays a crucial role here; so too does its converse, the Simmons-Mospan theorem. Key words. Steinhaus-Weil property, Weil topology, shift-compact, density topology, Hashimoto ideal topology, group norm. Mathematics Subject Classification (2000): Primary 26A03; 39B62. 1 Introduction. This paper originates from several sources. The first three are our earlier studies ‘Beyond Lebesgue and Baire I-III’ ([BinO1,2], [Ost3]), the general theme of which is the similarity (indeed, duality) between measure and category, and the primacy of category/topology over measure in many areas. The second three are recent studies by the second author on the Effros Open Mapping Principle ([Ost4,5,6]; §6.4 below). The Steinhaus-Weil property (critical for regular variation: see [BinGT, Th.

NEGLIGIBLE SETS for REAL CONNECTIVITY FUNCTIONS Jack B

NEGLIGIBLE SETS FOR REAL CONNECTIVITY FUNCTIONS. Jack B. Brown. Introduction. Only functions from the interval I = [0, 1] into I will be considered in this paper, and no distinction will be made between a function and its graph. For real functions f from I into I, the property of connectivity is intermediate to that of continuity and the Darboux property, and is equivalent to the property of f being a connected set in the plane. For some connectivity functions g from I into I, it can be observed that there is a subset M of I such that every function from I into I which agrees with g on I − M is a connectivity function (in this case, M will be said to be g-negligible). For example, if M is a Cantor subset of I and g is a function from I into I such that if x belongs to some component (a, b) of I − M, g(x) = |sin{cot[π(x − a)/(b − a)]}|, then M is g-negligible. The following theorems will be proved: Theorem 1. If M is a subset of I, then the following statements are equivalent: (i) there is a connectivity function g from I into I such that M is g-negligible, and (ii) every subinterval of I contains c-many points of I − M (c denotes the cardinality of the continuum). Theorem 2. If g is a connectivity function from I into I, then the following statements are equivalent: (i) g is dense in I^2, (ii) every nowhere dense subset of I is g-negligible, and (iii) there exists a dense subset of I which is g-negligible.

One Concrete Application of Bernstein Sets in Measure Theory

One Concrete Application of Bernstein Sets in Measure Theory. Mariam Beriashvili, I. Vekua Institute of Applied Mathematics, Tbilisi State University. mariam [email protected] February 4, 2016. Paradoxical Subsets of R: Vitali Set, Hamel Bases, Bernstein Set, Luzin Set, Sierpiński Set. Bernstein Sets. Definition. Let X be a subset of the real line R. We say that X is a Bernstein set in R if, for every non-empty perfect set P ⊂ R, both intersections P ∩ X and P ∩ (R \ X) are nonempty. Relationships between some Paradoxical Sets. Theorem. There exists a subset X of R such that X is simultaneously a Vitali set and a Bernstein set. Theorem. There exists a Hamel basis of R which is simultaneously a Bernstein set. General Measure Extension Problem. The problem of measure extension has three aspects: pure set-theoretical, algebraic, and topological. The Role of Bernstein Sets. In particular, we envisage Bernstein subsets of the real line R from the point of view of their measurability with respect to certain classes of measures on R. The importance of Bernstein sets in various questions of general topology, measure theory and the theory of Boolean algebras is well known. We shall say that a set Y ⊂ E is relatively measurable with respect to the class M if there exists at least one measure µ ∈ M such that Y is measurable with respect to µ.

Pdf/Math/ 0307008V4.Pdf

Version of 17.11.04 Chapter 23 The Radon-Nikodým Theorem In Chapter 22, I discussed the indefinite integrals of integrable functions on R, and gave what I hope you feel are satisfying descriptions both of the functions which are indefinite integrals (the absolutely continuous functions) and of how to find which functions they are indefinite integrals of (you differentiate them). For general measure spaces, we have no structure present which can give such simple formulations; but nevertheless the same questions can be asked and, up to a point, answered. The first section of this chapter introduces the basic machinery needed, the concept of ‘countably additive’ functional and its decomposition into positive and negative parts. The main theorem takes up the second section: indefinite integrals are the ‘truly continuous’ additive functionals; on σ-finite spaces, these are the ‘absolutely continuous’ countably additive functionals. In §233 I discuss the most important single application of the theorem, its use in providing a concept of ‘conditional expectation’. This is one of the central concepts of probability theory – as you very likely know; but the form here is a dramatic generalization of the elementary concept of the conditional probability of one event given another, and needs the whole strength of the general theory of measure and integration as developed in Volume 1 and this chapter. I include some notes on convex functions, up to and including versions of Jensen’s inequality (233I-233J). While we are in the area of ‘pure’ measure theory, I take the opportunity to discuss some further topics. I begin with some essentially elementary constructions, image measures, sums of measures and indefinite-integral measures; I think the details need a little attention, and I work through them in §234.

UCLA Previously Published Works

UCLA Previously Published Works. Title: On Total Variation Minimization and Surface Evolution Using Parametric Maximum Flows. Permalink: https://escholarship.org/uc/item/5sd211v1. Journal: International Journal of Computer Vision, 84(3). ISSN: 1573-1405. Authors: Chambolle, Antonin; Darbon, Jérôme. Publication Date: 2009-09-01. DOI: 10.1007/s11263-009-0238-9. Peer reviewed. Int J Comput Vis (2009) 84: 288–307. Received: 23 June 2005 / Accepted: 8 April 2009 / Published online: 23 April 2009. © The Author(s) 2009. This article is published with open access at Springerlink.com. Abstract. In a recent paper Boykov et al. (LNCS, Vol. 3953, pp. 409–422, 2006) propose an approach for computing curve and surface evolution using a variational approach and the geo-cuts method of Boykov and Kolmogorov (International conference on computer vision, pp. 26–33, 2003). We recall in this paper how this is related to well-known approaches for mean curvature motion, introduced by Almgren et al. (SIAM Journal on Control and Optimization ... a discrete minimal surface problem, using a maximum flow/graph-cut algorithm (Ahuja et al. 1993). This kind of technique has become very popular in the past year in image processing, for segmentation problems but also stereo correspondence, etc., in particular since the apparition of quite efficient algorithms (Boykov and Kolmogorov 2004) for graphs with low-connectivity, typically in use in this kind of applications.

Effros, Baire, Steinhaus and Non- Separability

Adam Ostaszewski. Effros, Baire, Steinhaus and non-separability. Article (Accepted version) (Refereed). Original citation: Ostaszewski, Adam (2015) Effros, Baire, Steinhaus and non-separability. Topology and its Applications, 195. pp. 265-274. ISSN 0166-8641. DOI: 10.1016/j.topol.2015.09.033. Reuse of this item is permitted through licensing under the Creative Commons: © 2015 Elsevier B.V. CC-BY-NC-ND. This version available at: http://eprints.lse.ac.uk/64401/ Available in LSE Research Online: November 2015. LSE has developed LSE Research Online so that users may access research output of the School. Copyright © and Moral Rights for the papers on this site are retained by the individual authors and/or other copyright owners. You may freely distribute the URL (http://eprints.lse.ac.uk) of the LSE Research Online website. Effros, Baire, Steinhaus and Non-Separability. By A. J. Ostaszewski. Abstract. We give a short proof of an improved version of the Effros Open Mapping Principle via a shift-compactness theorem (also with a short proof), involving ‘sequential analysis’ rather than separability, deducing it from the Baire property in a general Baire-space setting (rather than under topological completeness). It is applicable to absolutely-analytic normed groups (which include complete metrizable topological groups), and via a Steinhaus-type Sum-set Theorem (also a consequence of the shift-compactness theorem) includes the classical Open Mapping Theorem (separable or otherwise). Keywords: Open Mapping Theorem, absolutely analytic sets, base-σ-discrete maps, demi-open maps, Baire spaces, Baire property, group-action shift-compactness.

Problem Set 2 Autumn 2017

D-MATH, Functional Analysis I, ETH Zürich, Prof. A. Carlotto. Problem Set 2, Autumn 2017. 2.1. A metric on sequences. Let $S := \{(s_n)_{n\in\mathbb{N}} \mid \forall n \in \mathbb{N} : s_n \in \mathbb{R}\}$ be the set of all real-valued sequences. (a) Prove that $d\bigl((x_n)_{n\in\mathbb{N}}, (y_n)_{n\in\mathbb{N}}\bigr) := \sum_{n\in\mathbb{N}} 2^{-n} \frac{|x_n - y_n|}{1 + |x_n - y_n|}$ defines a metric $d$ on $S$. (b) Show that $(S, d)$ is complete. (c) Show that $S_c := \{(s_n)_{n\in\mathbb{N}} \in S \mid \exists N \in \mathbb{N}\ \forall n \ge N : s_n = 0\}$, the space of sequences with compact support, is dense in $(S, d)$. 2.2. A metric on $C^0(\mathbb{R}^m)$. Let $K_1 \subset K_2 \subset \ldots \subset \mathbb{R}^m$ be a family of compact subsets such that $K_n \subset K_{n+1}^{\circ}$ for every $n \in \mathbb{N}$ and $\bigcup_{n\in\mathbb{N}} K_n = \mathbb{R}^m$. (a) Prove that $d(f, g) := \sum_{n\in\mathbb{N}} 2^{-n} \frac{\|f - g\|_{C^0(K_n)}}{1 + \|f - g\|_{C^0(K_n)}}$ defines a metric $d$ on $C^0(\mathbb{R}^m)$. (b) Show that $(C^0(\mathbb{R}^m), d)$ is complete. (c) Show that $C^0_c(\mathbb{R}^m)$, the space of continuous functions with compact support, is dense in $(C^0(\mathbb{R}^m), d)$. Remark. The same conclusions with the same proofs also hold for any open set $\Omega \subseteq \mathbb{R}^m$ in place of $\mathbb{R}^m$. The metric $d$ deals with the fact that $C^0(\mathbb{R}^m)$ contains unbounded functions like $f(x) = |x|^2$ for which $\sup_{x\in\mathbb{R}^m} |f(x)| = \infty$. 2.3. Statements of Baire. Definition. Let $(M, d)$ be a metric space and $A \subset M$ a subset.

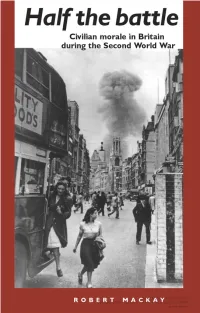

Half the Battle: Civilian Morale in Britain during the Second World War

Robert Mackay - 9781526137425. Downloaded from manchesterhive.com at 09/24/2021 07:30:30PM via free access. HALF THE BATTLE. Civilian morale in Britain during the Second World War. ROBERT MACKAY. Manchester University Press, Manchester and New York, distributed exclusively in the USA by Palgrave. Copyright © Robert Mackay 2002. The right of Robert Mackay to be identified as the author of this work has been asserted by him in accordance with the Copyright, Designs and Patents Act 1988. Published by Manchester University Press, Oxford Road, Manchester M13 9NR, UK and Room 400, 175 Fifth Avenue, New York, NY 10010, USA. www.manchesteruniversitypress.co.uk Distributed exclusively in the USA by Palgrave, 175 Fifth Avenue, New York, NY 10010, USA. Distributed exclusively in Canada by UBC Press, University of British Columbia, 2029 West Mall, Vancouver, BC, Canada V6T 1Z2. British Library Cataloguing-in-Publication Data: A catalogue record for this book is available from the British Library. Library of Congress Cataloging-in-Publication Data applied for. ISBN 0 7190 5893 7 hardback, 0 7190 5894 5 paperback. First published 2002. 10 09 08 07 06 05 04 03 02 10 9 8 7 6 5 4 3 2 1. Typeset by Freelance Publishing Services, Brinscall, Lancs.

2 Negligible Sets 15 2.1 Porosity

Universidad de Sevilla, Facultad de Matemáticas, Departamento de Análisis Matemático. GENERICITY OF THE FIXED POINT PROPERTY UNDER RENORMING. Ph. D. Thesis presented by Supaluk Phothi. Dissertation presented by Supaluk Phothi for the degree of Doctor in Mathematics at the Universidad de Sevilla. Signed: Supaluk Phothi. Approved by the thesis supervisor. Signed: Tomás Domínguez Benavides, Professor in the Departamento de Análisis Matemático, Universidad de Sevilla. Seville, July 2010. ABSTRACT. A mapping T from a metric space M into M is said to be nonexpansive if d(Tx, Ty) ≤ d(x, y) for all x, y ∈ M. We say that a Banach space X has the Weak Fixed Point Property (w-FPP) if every nonexpansive mapping T from a weakly compact convex subset C of X into C has a fixed point. In this dissertation, we mainly study the w-FPP as a generic property in the set of all equivalent norms of a given reflexive Banach space. A property P is said to be generic in a set A if all elements of A satisfy P except those belonging to a small set. In order to establish the results of this work, we consider several notions of small sets, such as Baire first-category sets, porous sets, Gaussian null sets and directionally porous sets.

Sets on Which Measurable Functions Are Determined by Their Range

Sets on which measurable functions are determined by their range. Maxim R. Burke, Department of Mathematics and Computer Science, University of Prince Edward Island, Charlottetown, P.E.I., Canada C1A4P3, [email protected] Krzysztof Ciesielski, Department of Mathematics, West Virginia University, Morgantown, WV 26506-6310, USA, [email protected] Version of 96/01/14. Abstract. We study sets on which measurable real-valued functions on a measurable space with negligibles are determined by their range. 1 Introduction. In [BD, theorem 8.5], it is shown that, under the Continuum Hypothesis (CH), in any separable Baire topological space X there is a set M such that for any two continuous real-valued functions f and g on X, if f and g are not constant on any nonvoid open set then f[M] ⊆ g[M] implies f = g. In particular, if f[M] = g[M] then f = g. Sets having this last property with respect to entire (analytic) functions in the complex plane were studied in [DPR] where they were called sets of range uniqueness (SRU’s). We study the properties of such sets in measurable spaces with negligibles. (See below for the definition.) We prove a generalization of the aforementioned result [BD, theorem 8.5] to such spaces (Theorem 4.3) and answer Question 1 from [BD] by showing that CH cannot be omitted from the hypothesis of their theorem (Example 5.17). We also study the descriptive nature of SRU’s for the nowhere constant continuous functions on Baire Tychonoff topological spaces. When X = R, the result of [BD, theorem 8.5] states that, under CH, there is a set M ⊆ R such that for any two nowhere constant continuous functions f, g: R → R, if f[M] ⊆ g[M] then f = g. It is shown in [BD, theorem 8.1] that there is (in ZFC) a set M ⊆ R such that for any continuous functions f, g: R → R, if f has countable level sets and g[M] ⊆ f[M] then g is constant on the connected components of {x ∈ R: f(x) = g(x)}.