Music and Operations Research - the Perfect Match?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Detecting Harmonic Change in Musical Audio

Detecting Harmonic Change In Musical Audio Christopher Harte and Mark Sandler Martin Gasser Centre for Digital Music Austrian Research Institute for Artificial Queen Mary, University of London Intelligence(OF¨ AI) London, UK Freyung 6/6, 1010 Vienna, Austria [email protected] [email protected] ABSTRACT We propose a novel method for detecting changes in the harmonic content of musical audio signals. Our method uses a new model for Equal Tempered Pitch Class Space. This model maps 12-bin chroma vectors to the interior space of a 6-D polytope; pitch classes are mapped onto the vertices of this polytope. Close harmonic relations such as fifths and thirds appear as small Euclidian distances. We calculate the Euclidian distance between analysis frames n + 1 and n − 1 to develop a harmonic change measure for frame n. A peak in the detection function denotes a transi- tion from one harmonically stable region to another. Initial experiments show that the algorithm can successfully detect harmonic changes such as chord boundaries in polyphonic audio recordings. Categories and Subject Descriptors J.0 [Computer Applications]: General General Terms Figure 1: Flow Diagram of HCDF System. Algorithms, Theory has many potential applications in the segmentation of au- dio signals, particularly as a preprocessing stage for further Keywords harmonic recognition and classification algorithms. The pri- Pitch Space, Harmonic, Segmentation, Music, Audio mary motivation for this work is for use in chord recogni- tion from audio. Used as a segmentation algorithm, this approach provides a good foundation for solving the general 1. INTRODUCTION chord recognition problem of needing to know the positions In this paper we introduce a novel process for detect- of chord boundaries in the audio data before being able to ing changes in the harmonic content of audio signals. -

Melodies in Space: Neural Processing of Musical Features A

Melodies in space: Neural processing of musical features A DISSERTATION SUBMITTED TO THE FACULTY OF THE GRADUATE SCHOOL OF THE UNIVERSITY OF MINNESOTA BY Roger Edward Dumas IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY Dr. Apostolos P. Georgopoulos, Adviser Dr. Scott D. Lipscomb, Co-adviser March 2013 © Roger Edward Dumas 2013 Table of Contents Table of Contents .......................................................................................................... i Abbreviations ........................................................................................................... xiv List of Tables ................................................................................................................. v List of Figures ............................................................................................................. vi List of Equations ...................................................................................................... xiii Chapter 1. Introduction ............................................................................................ 1 Melody & neuro-imaging .................................................................................................. 1 The MEG signal ................................................................................................................................. 3 Background ........................................................................................................................... 6 Melodic pitch -

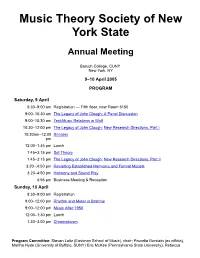

Program and Abstracts for 2005 Meeting

Music Theory Society of New York State Annual Meeting Baruch College, CUNY New York, NY 9–10 April 2005 PROGRAM Saturday, 9 April 8:30–9:00 am Registration — Fifth floor, near Room 5150 9:00–10:30 am The Legacy of John Clough: A Panel Discussion 9:00–10:30 am TextMusic Relations in Wolf 10:30–12:00 pm The Legacy of John Clough: New Research Directions, Part I 10:30am–12:00 Scriabin pm 12:00–1:45 pm Lunch 1:45–3:15 pm Set Theory 1:45–3:15 pm The Legacy of John Clough: New Research Directions, Part II 3:20–;4:50 pm Revisiting Established Harmonic and Formal Models 3:20–4:50 pm Harmony and Sound Play 4:55 pm Business Meeting & Reception Sunday, 10 April 8:30–9:00 am Registration 9:00–12:00 pm Rhythm and Meter in Brahms 9:00–12:00 pm Music After 1950 12:00–1:30 pm Lunch 1:30–3:00 pm Chromaticism Program Committee: Steven Laitz (Eastman School of Music), chair; Poundie Burstein (ex officio), Martha Hyde (University of Buffalo, SUNY) Eric McKee (Pennsylvania State University); Rebecca Jemian (Ithaca College), and Alexandra Vojcic (Juilliard). MTSNYS Home Page | Conference Information Saturday, 9:00–10:30 am The Legacy of John Clough: A Panel Discussion Chair: Norman Carey (Eastman School of Music) Jack Douthett (University at Buffalo, SUNY) Nora Engebretsen (Bowling Green State University) Jonathan Kochavi (Swarthmore, PA) Norman Carey (Eastman School of Music) John Clough was a pioneer in the field of scale theory. -

Messiaen's Regard IV: Automatic Segmentation Using the Spiral Array

REGARDS ON TWO REGARDS BY MESSIAEN: AUTOMATIC SEGMENTATION USING THE SPIRAL ARRAY Elaine Chew University of Southern California Viterbi School of Engineering Epstein Department of Industrial and Systems Engineering Integrated Media Systems Center [email protected] ABSTRACT the same space and uses spatial points in the model’s interior to summarize and represent segments of music. Segmentation by pitch context is a fundamental process The array of pitch representations in the Spiral Array is in music cognition, and applies to both tonal and atonal akin to Longuet-Higgins’ Harmonic Network [7] and the music. This paper introduces a real-time, O(n), tonnetz of neo-Riemannian music theory [4]. The key algorithm for segmenting music automatically by pitch spirals, generated by mathematical aggregation, collection using the Spiral Array model. The correspond in structure to Krumhansl’s network of key segmentation algorithm is applied to Olivier Messiaen’s relations [5] and key representations in Lerdahl’s tonal Regard de la Vierge (Regard IV) and Regard des pitch space [6], each modeled using entirely different prophètes, des bergers et des Mages (Regard XVI) from approaches. his Vingt Regards sur l’Enfant Jésus. The algorithm The present segmentation algorithm, named Argus, is uses backward and forward windows at each point in the latest in a series of computational analysis techniques time to capture local pitch context in the recent past and utilizing the Spiral Array. The Spiral Array model has not-too-distant future. The content of each window is been used in the design of algorithms for key-finding [2] mapped to a spatial point, called the center of effect and off-line determining of key boundaries [3], among (c.e.), in the interior of the Spiral Array. -

A Multi-Level Tonal Interval Space for Modelling Pitch Relatedness and Musical Consonance

Journal of New Music Research, 2016 Vol. 45, No. 4, 281–294, http://dx.doi.org/10.1080/09298215.2016.1182192 A multi-level tonal interval space for modelling pitch relatedness and musical consonance Gilberto Bernardes1 ,DiogoCocharro1,MarceloCaetano1 ,CarlosGuedes1,2 and Matthew E.P. Davies1 1INESC TEC, Portugal; 2New York University Abu Dhabi, United Arab Emirates (Received 12 March 2015; accepted 18 April 2016) Abstract The intelligibility and high explanatory power of tonal pitch spaces usually hide complex theories, which need to account In this paper we present a 12-dimensional tonal space in the for a variety of subjective and contextual factors. To a certain context of the ,Chew’sSpiralArray,andHarte’s Tonnetz extent, the large number of different, and sometimes con- 6-dimensional Tonal Centroid Space. The proposed Tonal tradictory, tonal pitch spaces presented in the literature help Interval Space is calculated as the weighted Discrete Fourier us understand the complexity of such representations. Exist- Transform of normalized 12-element chroma vectors, which ing tonal spaces can be roughly divided into two categories, we represent as six circles covering the set of all possible pitch each anchored to a specific discipline and applied methods. intervals in the chroma space. By weighting the contribution On the one hand we have models grounded in music theory of each circle (and hence pitch interval) independently, we (Cohn, 1997, 1998;Lewin,1987;Tymoczko,2011; Weber, can create a space in which angular and Euclidean distances 1817–1821), and on the other hand, models based on cogni- among pitches, chords, and regions concur with music theory tive psychology (Krumhansl, 1990;Longuet-Higgins,1962; principles. -

Visualization of Low Dimensional Structure in Tonal Pitch Space

VISUALIZATION OF LOW DIMENSIONAL STRUCTURE IN TONAL PITCH SPACE J. Ashley Burgoyne and Lawrence K. Saul Department of Computer and Information Science University of Pennsylvania {burgoyne,lsaul}@cis.upenn.edu ABSTRACT d] F] f] A a C c g] B b D d F f In his 2001 monograph Tonal Pitch Space, Fred Ler- dahl defined an distance function over tonal and post-tonal c] E e G g B[ b[ harmonies distilled from years of research on music cog- nition. Although this work references the toroidal struc- f] A a C c E[ e[ ture commonly associated with harmonic space, it stops b D d F f A[ a[ short of presenting an explicit embedding of this torus. It is possible to use statistical techniques to recreate such an e G g B[ b[ D[ d[ embedding from the distance function, yielding a more a C c E[ e[ G[ g[ complex structure than the standard toroidal model has heretofore assumed. Nonlinear techniques can reduce the Figure 1. Weber’s diagram of tonal space dimensionality of this structure and be tuned to emphasize global or local structure. The resulting manifolds high- light the relationships inherent in the tonal system and of- over harmonies. No other research to date has attempted fer a basis for future work in machine-assisted analysis to embed it explicitly. David Temperley used a MIDI- and music theory. based approach to implement many components of the theory [9] but has not yet treated the topology of the space. The Mathematical Music Theory Group at the Techni- 1. -

Towards Automatic Extraction of Harmony Information from Music Signals

Towards Automatic Extraction of Harmony Information from Music Signals Thesis submitted in partial fulfilment of the requirements of the University of London for the Degree of Doctor of Philosophy Christopher Harte August 2010 Department of Electronic Engineering, Queen Mary, University of London I certify that this thesis, and the research to which it refers, are the product of my own work, and that any ideas or quotations from the work of other people, published or otherwise, are fully acknowledged in accordance with the standard referencing practices of the discipline. I acknowledge the helpful guidance and support of my supervisor, Professor Mark Sandler. 2 Abstract In this thesis we address the subject of automatic extraction of harmony information from audio recordings. We focus on chord symbol recognition and methods for evaluating algorithms designed to perform that task. We present a novel six-dimensional model for equal tempered pitch space based on concepts from neo-Riemannian music theory. This model is employed as the basis of a harmonic change detection function which we use to improve the performance of a chord recognition algorithm. We develop a machine readable text syntax for chord symbols and present a hand labelled chord transcription collection of 180 Beatles songs annotated using this syntax. This collection has been made publicly avail- able and is already widely used for evaluation purposes in the research community. We also introduce methods for comparing chord symbols which we subsequently use for analysing the statistics of the transcription collection. To ensure that researchers are able to use our transcriptions with confidence, we demonstrate a novel alignment algorithm based on simple audio fingerprints that allows local copies of the Beatles audio files to be accurately aligned to our transcriptions automatically. -

Musical Tension Curves and Its Applications

Musical Tension Curves and its Applications Min-Joon Yoo and In-Kwon Lee Department of Computer Science, Yonsei University [email protected], [email protected] Abstract Madson and Fredrickson 1993; Krumhansl 1996). In these attempts, the tension at each part of music has been mea- We suggest a graphical representation of the musical tension sured by manual inputs of the participants in the experiments. flow in tonal music using a piecewise parametric curve, which In our work, we developed several methods to automatically is a function of time illustrating the changing degree of ten- compute the tension of a chord by combining known experi- sion in a corresponding chord progression. The tension curve mental results. Then the series of tension values correspond- can be edited by using conventional curve editing techniques ing to the given chord progression in the input music are in- to reharmonize the original music with reflecting the user’s terpolated to construct a smooth piecewise parametric curve. demand to control the tension of music. We introduce three We exploit the B-spline curve representation that is one of the different methods to measure the tension of a chord in terms most suitable method to model a complex shape such as ten- of a specific key, which can be used to represent the tension sion flow. Once the tension curve is constructed, we can edit of the chord numerically. Then, by interpolating the series of the tension flow of the original music by geometrically edit- numerical tension values, a tension curve is constructed. In ing the tension curve, where the tension curve editing also this paper, we show the tension curve editing method can be generates the new reharmonization of the original chord pro- effectively used in several interesting applications: enhanc- gression. -

A Unified Helical Structure in 3D Pitch Space for Mapping Flat Musical Isomorphism

HEXAGONAL CYLINDRICAL LATTICES: A UNIFIED HELICAL STRUCTURE IN 3D PITCH SPACE FOR MAPPING FLAT MUSICAL ISOMORPHISM A Thesis Submitted to the Faculty of Graduate Studies and Research In Partial Fulfillment of the Requirements for the Degree of Master of Science in Computer Science University of Regina By Hanlin Hu Regina, Saskatchewan December 2015 Copytright c 2016: Hanlin Hu UNIVERSITY OF REGINA FACULTY OF GRADUATE STUDIES AND RESEARCH SUPERVISORY AND EXAMINING COMMITTEE Hanlin Hu, candidate for the degree of Master of Science in Computer Science, has presented a thesis titled, Hexagonal Cylindrical Lattices: A Unified Helical Structure in 3D Pitch Space for Mapping Flat Musical Isomorphism, in an oral examination held on December 8, 2015. The following committee members have found the thesis acceptable in form and content, and that the candidate demonstrated satisfactory knowledge of the subject material. External Examiner: Dr. Dominic Gregorio, Department of Music Supervisor: Dr. David Gerhard, Department of Computer Science Committee Member: Dr. Yiyu Yao, Department of Computer Science Committee Member: Dr. Xue-Dong Yang, Department of Computer Science Chair of Defense: Dr. Allen Herman, Department of Mathematics & Statistics Abstract An isomorphic keyboard layout is an arrangement of notes of a scale such that any musical construct has the same shape regardless of the root note. The mathematics of some specific isomorphisms have been explored since the 1700s, however, only recently has a general theory of isomorphisms been developed such that any set of musical intervals can be used to generate a valid layout. These layouts have been implemented in the design of electronic musical instruments and software applications. -

A Report on Musical Structure Visualization

A Report on Musical Structure Visualization Winnie Wing-Yi Chan Supervised by Professor Huamin Qu Department of Computer Science and Engineering Hong Kong University of Science and Technology Clear Water Bay, Kowloon, Hong Kong August 2007 i Abstract In this report we present the related work of musical structure visualization accompanied with brief discussions on its background and importance. In section 1, the background, motivations and challenges are first introduced. Some definitions and terminology are then presented in section 2 for clarification. In the core part, section 3, a variety of existing techniques from visualization, human-computer interaction and computer music research will be described and evaluated. Lastly, observations and lessons learned from these visual tools are discussed in section 4 and the report is concluded in section 5. ii Contents 1 Introduction 1 1.1 Background ........................................... 1 1.2 Motivations ........................................... 1 1.3Challenges............................................ 2 2 Definitions and Terminology 2 2.1 Sound and Music ........................................ 2 2.2 Music Visualization ....................................... 2 3 Visualization Techniques 3 3.1ArcDiagrams.......................................... 3 3.2Isochords............................................ 3 3.3ImproViz............................................ 4 3.4 Graphic Scores ......................................... 6 3.5 Simplified Scores ....................................... -

Harmonic Change Detection from Musical Audio

FACULDADE DE ENGENHARIA DA UNIVERSIDADE DO PORTO Harmonic Change Detection from Musical Audio Pedro Ramoneda Franco Mestrado Integrado em Engenharia Informática e Computação Supervisor: Gilberto Bernardes July 28, 2020 Harmonic Change Detection from Musical Audio Pedro Ramoneda Franco Mestrado Integrado em Engenharia Informática e Computação July 28, 2020 Abstract In this dissertation, we advance an enhanced method for computing Harte et al.’s [31] Harmonic Change Detection Function (HCDF). HCDF aims to detect harmonic transitions in musical audio signals. HCDF is crucial both for the chord recognition in Music Information Retrieval (MIR) and a wide range of creative applications. In light of recent advances in harmonic description and transformation, we depart from the original architecture of Harte et al.’s HCDF, to revisit each one of its component blocks, which are evaluated using an exhaustive grid search aimed to identify optimal parameters across four large style-specific musical datasets. Our results show that the newly proposed methods and parameter optimization improve the detection of harmonic changes, by 5:57% (f-score) with respect to previous methods. Furthermore, while guaranteeing recall values at > 99%, our method improves precision by 6:28%. Aiming to leverage novel strategies for real-time harmonic-content audio processing, the optimized HCDF is made available for Javascript and the MAX and Pure Data multimedia programming environments. Moreover, all the data as well as the Python code used to generate them, are made available. Keywords: Harmonic changes, Musical audio segmentation, Harmonic-content description, Mu- sic information retrieval i ii Resumo Nesta dissertação, avançamos um método melhorado para computar Harte et al.’s [31] Harmonic Change Detection Function (HCDF). -

Proceedings of Cogsci 2001

Modeling Tonality: Applications to Music Cognition Elaine Chew ([email protected]) University of Southern California Integrated Media Systems Center, and Department of Industrial and Systems Engineering Los Angeles, CA 90089-1450 USA Abstract this piece?” He responded with a reasonable question: “What do you mean by key?” I began singing the piece Processing musical information is a task many of us per- and stopped mid-stream. I then asked the student if he form effortlessly, and often, unconsciously. In order to could sing me the note on which the piece should end. gain a better understanding of this basic human cognitive 4 ability, we propose a mathematical model for tonality, the Without hesitation, he sang the correct pitch , thereby underlying principles for tonal music. The model simul- successfully picking out the first degree, and most sta- taneously incorporates pitch, interval, chord and key rela- ble pitch, in the key. The success of this method raised tions. It generates spatial counterparts for these musical more questions than it answered. What is it we know that entities by aggregating musical information. The model causes us to hear one pitch as being more stable than oth- also serves as a framework on which to design algorithms that can mimic the human ability to organize musical in- ers? How does the mind assess the function of this stable put. One such skill is the ability to determine the key of a pitch over time as the music evolves? musical passage. This is equivalent to being able to pick Before we can study music cognition, we first need a out the most stable pitch in the passage, also known as representation for musical structure.