Russian Social Media Influence: Understanding

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

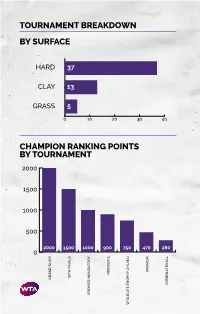

Tournament Breakdown by Surface Champion Ranking Points By

[Figure: tournament breakdown by surface (hard 37, clay 13, grass 5); champion ranking points by tournament category; 55 WTA tournaments by region and by country.]

International Tennis Federation

As the world governing body of tennis, the International Tennis Federation (ITF) is responsible for every level of the sport including the regulation of rules and the future development of the game. Based in London, the ITF currently has 210 member nations and six regional associations, which administer the game in their respective areas, in close consultation with the ITF. The ITF is committed to promoting tennis around the world and encouraging as many people as possible to [...]

Davis Cup by BNP Paribas and women’s Fed Cup by BNP Paribas are the largest annual international team competitions in sport and most prized in the ITF’s event portfolio. Both have a rich history and have consistently attracted the best players from each passing generation. Further information is available at www.daviscup.com and www.fedcup.com. The Olympic and Paralympic Tennis Events are also an important part of the ITF’s responsibilities, with the 2020 events being held in Tokyo. -

Onomastics – Using Naming Patterns in Genealogy

Onomastics – Using Naming Patterns in Genealogy 1. Where did your family live before coming to America? 2. When did your family come to America? 3. Where did your family first settle in America? Background Onomastics or onomatology is the study of proper names of all kinds and the origins of names. Toponymy, or toponomastics, is the study of place names. Anthroponomastics is the study of personal names. Names were originally used to identify characteristics of a person. Over time, some of those original meanings have been lost. Additionally, patterns developed in many cultures to further identify a person and to carry on names within families. In genealogy, knowing the naming patterns of a cultural or regional group can lead to breaking down brick walls. Your great-great-grandfather’s middle name might be his mother’s maiden name – a possible clue – depending on the naming patterns used in that time and place. Of course, as with any clues, you need to find documents to back up your guesses, but the clues may lead you in the right direction. Patronymics Surnames based on the name of the father, such as Johnson and Nielson, are called patronyms. Historically people only had one name, but as the population of an area grew, more was needed. Many cultures used patronyms, with every generation having a different “last name.” John, the son of William, would be John Williamson. His son, Peter, would be Peter Johnson; Peter’s son William would be William Peterson. Patronyms were found in places such as Scandinavia (sen/dottir), Scotland (Mac/Nic), Ireland (O’/Mac/ni), Wales (ap/verch), England (Fitz), Spain (ez), Portugal (es), Italy (di/d’/de), France (de/des/du/le/à/fitz), Germany (sen/sohn/witz), the Netherlands (se/sen/zoon/dochter), Russia (vich/ovna/yevna/ochna), Poland (wicz/wski/wsky/icki/-a), and in cultures using the Hebrew (ben/bat) and Arabic (ibn/bint) languages. -

Boxing's Biggest Stars Collide on the Big Screen in "THE ONE: Floyd ‘Money' Mayweather Vs

July 30, 2013 Boxing's Biggest Stars Collide On The Big Screen In "THE ONE: Floyd ‘Money' Mayweather vs. Canelo Alvarez" Exciting Co-Main Event Features Danny Garcia and Lucas Matthysse NCM Fathom Events, Mayweather Promotions, Golden Boy Promotions, Canelo Promotions, SHOWTIME PPV® and O'Reilly Auto Parts Bring Long-Awaited Fights to Movie Theaters Nationwide Live from Las Vegas on Saturday, Sept. 14 CENTENNIAL, Colo.--(BUSINESS WIRE)-- Fans across the country can watch the much-anticipated match-up between the reigning boxing kings of the United States and Mexico when NCM Fathom Events, Mayweather Promotions, Golden Boy Promotions, Canelo Promotions, SHOWTIME PPV® and O'Reilly Auto Parts bring boxing's biggest star Floyd "Money" Mayweather and Super Welterweight World Champion Canelo Alvarez to the big screen. In what is anticipated to be one of the biggest fights in boxing history, both Mayweather and Canelo will defend their undefeated records in an action-packed, live broadcast from the MGM Grand Garden Arena in Las Vegas, Nev. on Mexican Independence Day weekend. "The One: Mayweather vs. Canelo" will be broadcast to select theaters nationwide on Saturday, Sept. 14 at 9:00 p.m. ET / 8:00 p.m. CT / 7:00 p.m. MT / 6:00 p.m. PT / 5:00 p.m. AK / 3:00 p.m. HI. In addition to the explosive main event, Unified Super Lightweight World Champion Danny "Swift" Garcia and WBC Interim Super Lightweight World Champion Lucas "The Machine" Matthysse will meet in a bout fans have been clamoring to see. Garcia vs. Matthysse will anchor the fight's undercard with additional bouts to be announced shortly. -

IMES Alumni Newsletter No.8

IMES ALUMNI NEWSLETTER, Issue 8, Winter 2016. Souk at Fez, Morocco © Andrew Meehan. From the Head of IMES, Dr Andrew Marsham: Welcome to the Winter 2016 IMES Alumni Newsletter, in which we congratulate the postgraduate Masters and PhD graduates who qualified this year. There is more from graduation day on pages 3-5. We wish all our graduates the very best for the future. We bid farewell to Dr Richard Todd, who has taught at IMES since 2006. Richard was a key colleague in the MA Arabic degree, and has contributed to countless other aspects of IMES life. We wish him the very best for his new post at the University of Birmingham. Memories of Richard at IMES can be found on page 17. Elsewhere, there are the regular features about IMES events, as well as articles on NGO work in Beirut, on the SkatePal charity, poems to Syria, on recent workshops on masculinities and on Arab Jews, and memories of Arabic at Edinburgh in the late 1960s and early 1970s from Professor Miriam Cooke (MA Arabic 1971). Very many thanks to Katy Gregory, Assistant Editor, and thanks to all our contributors. As ever, we all look forward to hearing news from former students and colleagues; please do get in touch at [email protected] CONTENTS (Atlas Mountains near Marrakesh © Andrew Meehan). Snapshots: 3 IMES Graduates November 2016; 6 Staff News; 7 Obituary: Abdallah Salih Al-‘Uthaymin. Features: 8 Student Experience: NGO Work in Beirut; 9 Memories of Arabic at Edinburgh; 10 Poems to Syria. Seminars, Conferences and Events: 11 IMES Autumn Seminar Review 2016; 12 IMES Spring Seminar Series 2017; 13 Constructing Masculinities in the Middle East Symposium 2016; 14 Arab Jews: Definitions, Histories, Concepts. Issue no. 8. Editor: Dr Andrew Marsham. Assistant Editor and Designer: Katy Gregory. With thanks to all our contributors. The IMES Alumni Newsletter welcomes submissions, including news, comments, updates and articles. -

The Lost Generation in American Foreign Policy How American Influence Has Declined, and What Can Be Done About It

September 2020 Perspective EXPERT INSIGHTS ON A TIMELY POLICY ISSUE JAMES DOBBINS, GABRIELLE TARINI, ALI WYNE The Lost Generation in American Foreign Policy How American Influence Has Declined, and What Can Be Done About It In the aftermath of World War II, the United States accepted the mantle of global leadership and worked to build a new global order based on the principles of nonaggression and open, nondiscriminatory trade. An early pillar of this new order was the Marshall Plan for European reconstruction, which British historian Norman Davies has called “an act of the most enlightened self-interest in history.”1 America’s leaders didn’t regard this as charity. They recognized that a more peaceful and more prosperous world would be in America’s self-interest. American willingness to shoulder the burdens of world leadership survived a costly stalemate in the Korean War and a still more costly defeat in Vietnam. It even survived the end of the Cold War, the original impetus for America’s global activism. But as a new century progressed, this support weakened, America’s influence slowly diminished, and eventually even the desire to exert global leadership waned. Over the past two decades, the United States experienced a dramatic drop-off in international achievement. A generation of Americans have come of age in an era in which foreign policy setbacks have been more frequent than advances. Awareness of America’s declining influence became commonplace among observers during the Barack Obama [...] immunodeficiency virus (HIV) epidemic and by Obama with Ebola, has also been widely noted. -

About U.S. Figure Skating Figure Skating by the Numbers

ABOUT U.S. FIGURE SKATING

U.S. Figure Skating is the national governing body for the sport of figure skating in the United States. U.S. Figure Skating is a member of the International Skating Union (ISU), the international federation for figure skating, and the U.S. Olympic Committee (USOC). U.S. Figure Skating is composed of member clubs, collegiate clubs, school-affiliated clubs, individual members, Friends of U.S. Figure Skating and Basic Skills programs. The charter member clubs numbered seven in 1921 when the association was formed and first became a member of the ISU. To date, U.S. Figure Skating has more than 680 member clubs. U.S. Figure Skating is one of the strongest and largest governing bodies within the winter Olympic movement with more than 180,000 [...]

FIGURE SKATING BY THE NUMBERS

5: The ranking of figure skating in terms of the size of its fan base. Figure skating’s No. 5 ranking is behind only college sports, NFL, MLB and NBA in 2009. (Source: US Census and ESPN Sports Poll)
12: Age of the youngest athlete on the 2011–12 U.S. Team, men’s skater Nathan Chen (born May 5, 1999)
17: Consecutive Olympic Winter Games at which at least one U.S. figure skater has won a medal, dating back to 1948, when Dick Button won his first Olympic gold
18: International gold medals won by the United States during the 2010–11 season
44: U.S. qualifying and international competitions available on a subscription basis on icenetwork.com
52: World titles won by U.S. skaters all-time
58: International medals won by U.S. -

Computer Gaming World Issue

Vol. 3 No. 4, Jul.-Aug. 1983. FEATURES: SUSPENDED: The Cryogenic Nightmare (David P. Stone); M.U.L.E.: One of Electronic Arts' New Releases (Edward Curtis); BATTLE FOR NORMANDY: Strategy and Tactics (Jay Selover); SCORPION'S TALE: Adventure Game Hints and Tips (Scorpia); COSMIC BALANCE CONTEST WINNER: Results of the Ship Design Contest; KNIGHTS OF THE DESERT: Review (Gleason & Curtis); GALACTIC ADVENTURES: Review & Hints (David Long); COMPUTER GOLF!: Four Games Reviewed (Stanley Greenlaw); BOMB ALLEY: Review (Richard Charles Karr); THE COMMODORE KEY: A New Column (Wilson & Curtis). DEPARTMENTS: Inside the Industry; Hobby and Industry News; Taking a Peek; Tele-Gaming; Real World Gaming; Atari Arena; Name of the Game; Silicon Cerebrum; The Learning Game; Micro-Reviews; Reader Input Device; Game Ratings. Game Playing Aids from Computer Gaming World COSMIC BALANCE SHIPYARD DISK Contains over 20 ships that competed in the CGW COSMIC BALANCE SHIP DESIGN CONTEST. Included are Avenger, the tournament winner; Blaze, Mongoose, and MKVP6, the judge's ships. These ships are ideal for the gamer who cannot find enough competition or wants to study the ship designs of other gamers around the country. SSI's The Cosmic Balance is required to use the shipyard disk. PLEASE SPECIFY APPLE OR ATARI VERSION WHEN ORDERING. $15.00 ROBOTWAR TOURNAMENT DISK CGW's Robotwar Diskette contains the source code for the entrants to the Second Annual CGW Robotwar Tournament (with the exception of NordenB) including the winner, DRAGON. -

GRAND PRIX 2017 22nd DERIUGINA CUP 17.03.2017 - GRAND PRIX Seniors Hoop Final (2001 and Older)

GRAND PRIX 2017 22nd DERIUGINA CUP 17.03.2017 - GRAND PRIX Seniors Hoop Final (2001 and older)

| Rank | Name | Country | Year | D | E | Ded. | Score |
|---|---|---|---|---|---|---|---|
| 1 | Filanovsky Victoria | Israel (ISR) | 1995 | 9.000 | 8.800 | - | 17.800 |
| 2 | Bravikova Iuliia | Russia (RUS) | 1999 | 8.700 | 8.150 | - | 16.850 |
| 3 | Mazur Viktoriia | Ukraine (UKR) | 1994 | 8.000 | 8.650 | - | 16.650 |
| 4 | Khonina Polina | Russia (RUS) | 1998 | 7.900 | 8.500 | - | 16.400 |
| 5 | Generalova Nastasya | United States (USA) | 2000 | 8.600 | 7.600 | - | 16.200 |
| 6 | Meleshchuk Yeva | Ukraine (UKR) | 2001 | 7.200 | 7.300 | - | 14.500 |
| 7 | Kis Alexandra | Hungary (HUN) | 2000 | 6.500 | 7.950 | - | 14.450 |
| 8 | Filiorianu Ana Luiza | Romania (ROU) | 1999 | 6.700 | 7.450 | - | 14.150 |

©2017 http://rgform.eu Printed 19.03.2017 11:40:25

GRAND PRIX 2017 22nd DERIUGINA CUP 17.03.2017 - GRAND PRIX Seniors Ball Final (2001 and older)

| Rank | Name | Country | Year | D | E | Ded. | Score |
|---|---|---|---|---|---|---|---|
| 1 | Taseva Katrin | Bulgaria (BUL) | 1997 | 9.000 | 8.500 | - | 17.500 |
| 2 | Khonina Polina | Russia (RUS) | 1998 | 9.200 | 8.150 | - | 17.350 |
| 3 | Filanovsky Victoria | Israel (ISR) | 1995 | 8.600 | 8.600 | - | 17.200 |
| 4 | Harnasko Alina | Belarus (BLR) | 2001 | 8.500 | 8.450 | - | 16.950 |
| 5 | Generalova Nastasya | United States (USA) | 2000 | 8.400 | 7.900 | - | 16.300 |
| 6 | Filiorianu Ana Luiza | | | | | | 15.800 |

Researching Soviet/Russian Intelligence in America: Bibliography (Last Updated: October 2018)

Know Your FSB From Your KGB: Researching Soviet/Russian Intelligence in America: Bibliography (Last updated: October 2018) 1. Federal Government Sources A. The 2016 US Presidential Election Assessing Russian Activities and Intentions in Recent US Elections. Office of the Director of National Intelligence, January 6, 2017. Committee Findings on the 2017 Intelligence Community Assessment. Senate Select Committee on Intelligence, July 3, 2018. Disinformation: Panel I, Panel II. A Primer in Russian Active Measures and Influence Campaigns: Hearing Before the Select Committee on Intelligence of the United States Senate, One Hundred Fifteenth Congress, First Session, Thursday, March 30, 2017. (Y 4.IN 8/19: S.HRG.115-40/) Link: http://purl.fdlp.gov/GPO/gpo86393 FACT SHEET: Actions in Response to Russian Malicious Cyber Activity and Harassment. White House Office of the Press Secretary, December 29, 2016. Grand Jury Indicts 12 Russian Intelligence Officers for Hacking Offenses Related to the 2016 Election. Department of Justice Office of Public Affairs, July 13, 2018. Grizzly Steppe: Russian Malicious Cyber Activity. U.S. Department of Homeland Security, and Federal Bureau of Investigation, December 29, 2016. Information Warfare: Issues for Congress. Congressional Research Service, March 5, 2018. Minority Views: The Minority Members of the House Permanent Select Committee on Intelligence on March 26, 2018, Submit the Following Minority Views to the Majority-Produced "Report on Russian Active Measures, March 22, 2018." House Permanent Select Committee on Intelligence, March 26, 2018. Open Hearing: Social Media Influence in the 2016 U.S. Election: Hearing Before the Select Committee on Intelligence of the United States Senate, One Hundred Fifteenth Congress, First Session, Wednesday, November 1, 2017. -

Disinformation, 'Fake News' and Influence Campaigns on Twitter

Disinformation, ‘Fake News’ and Influence Campaigns on Twitter. OCTOBER 2018. Matthew Hindman (George Washington University), Vlad Barash (Graphika). Contents: Executive Summary; Introduction; A Problem Both Old and New; Defining Fake News Outlets; Bots, Trolls and ‘Cyborgs’ on Twitter; Map Methodology; Election Data and Maps (Election Core Map, Election Periphery Map, Postelection Map); Fake Accounts From Russia’s Most Prominent Troll Farm; Disinformation Campaigns on Twitter: Chronotopes (#NoDAPL, #WikiLeaks, #SpiritCooking, #SyriaHoax, #SethRich); Conclusion; Bibliography; Notes. EXECUTIVE SUMMARY This study is one of the largest analyses to date on how fake news spread on Twitter both during and after the 2016 election campaign. Using tools and mapping methods from Graphika, a social media intelligence firm, we study more than 10 million tweets from 700,000 Twitter accounts that linked to more than 600 fake and conspiracy news outlets. Crucially, we study fake and conspiracy news both before and after the election, allowing us to measure how the fake news ecosystem has evolved since November 2016. Much fake news and disinformation is still being spread on Twitter. Consistent with other research, we find more than 6.6 million tweets linking to fake and conspiracy news publishers in the month before the 2016 election. Yet disinformation continues to be a substantial problem postelection, with 4.0 million tweets linking to fake and conspiracy news publishers found in a 30-day period from mid-March to mid-April 2017. Contrary to claims that fake news is a game of “whack-a-mole,” more than 80 percent of the disinformation accounts in our election maps are still active as this report goes to press. -

Debtbook Diplomacy China’s Strategic Leveraging of Its Newfound Economic Influence and the Consequences for U.S

POLICY ANALYSIS EXERCISE Debtbook Diplomacy China’s Strategic Leveraging of its Newfound Economic Influence and the Consequences for U.S. Foreign Policy Sam Parker Master in Public Policy Candidate, Harvard Kennedy School Gabrielle Chefitz Master in Public Policy Candidate, Harvard Kennedy School PAPER MAY 2018 Belfer Center for Science and International Affairs Harvard Kennedy School 79 JFK Street Cambridge, MA 02138 www.belfercenter.org Statements and views expressed in this report are solely those of the authors and do not imply endorsement by Harvard University, the Harvard Kennedy School, or the Belfer Center for Science and International Affairs. This paper was completed as a Harvard Kennedy School Policy Analysis Exercise, a yearlong project for second-year Master in Public Policy candidates to work with real-world clients in crafting and presenting timely policy recommendations. Design & layout by Andrew Facini Cover photo: Container ships at Yangshan port, Shanghai, March 29, 2018. (AP) Copyright 2018, President and Fellows of Harvard College Printed in the United States of America POLICY ANALYSIS EXERCISE Debtbook Diplomacy China’s Strategic Leveraging of its Newfound Economic Influence and the Consequences for U.S. Foreign Policy Sam Parker Master in Public Policy Candidate, Harvard Kennedy School Gabrielle Chefitz Master in Public Policy Candidate, Harvard Kennedy School PAPER MARCH 2018 About the Authors Sam Parker is a Master in Public Policy candidate at Harvard Kennedy School. Sam previously served as the Special Assistant to the Assistant Secretary for Public Affairs at the Department of Homeland Security. As an academic fellow at U.S. Pacific Command, he wrote a report on anticipating and countering Chinese efforts to displace U.S. -

Hacks, Leaks and Disruptions | Russian Cyber Strategies

CHAILLOT PAPER Nº 148, October 2018. Hacks, leaks and disruptions: Russian cyber strategies. Edited by Nicu Popescu and Stanislav Secrieru, with contributions from Siim Alatalu, Irina Borogan, Elena Chernenko, Sven Herpig, Oscar Jonsson, Xymena Kurowska, Jarno Limnell, Patryk Pawlak, Piret Pernik, Thomas Reinhold, Anatoly Reshetnikov, Andrei Soldatov and Jean-Baptiste Jeangène Vilmer. Disclaimer: The views expressed in this Chaillot Paper are solely those of the authors and do not necessarily reflect the views of the Institute or of the European Union. European Union Institute for Security Studies, Paris. Director: Gustav Lindstrom. © EU Institute for Security Studies, 2018. Reproduction is authorised, provided prior permission is sought from the Institute and the source is acknowledged, save where otherwise stated. Contents: Executive summary; Introduction: Russia’s cyber prowess – where, how and what for? (Nicu Popescu and Stanislav Secrieru). Russia’s cyber posture: 1. Russia’s approach to cyber: the best defence is a good offence (Andrei Soldatov and Irina Borogan); 2. Russia’s trolling complex at home and abroad (Xymena Kurowska and Anatoly Reshetnikov); 3. Spotting the bear: credible attribution and Russian operations in cyberspace (Sven Herpig and Thomas Reinhold); 4. Russia’s cyber diplomacy (Elena Chernenko). Case studies of Russian cyberattacks: 5. The early days of cyberattacks: the cases of Estonia,