54 UIC J. Marshall L. Rev. 315 (2021)

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Observer5-2015(Health&Beauty)

the Jewish bserver www.jewishobservernashville.org Vol. 80 No. 5 • May 2015 12 Iyyar-13 Sivan 5775 Nashville crowd remembers Israel’s fallen and celebrates its independence By CHARLES BERNSEN atching as about 230 people gath- ered on April 23 for a somber remem- brance of Israel’s fallen soldiers and Wterrors victims followed immediately by a joyful celebration of the 67th anniversary of the Jewish’ state’s birth, Rabbi Saul Strosberg couldn’t help but marvel. After all, it has been only eight years since the Nashville Jewish community started observing Yom Hazikaron, the Israeli equivalent of Memorial Day. Organized by several Israelis living in Nashville, including the late Miriam Halachmi, that first, brief ceremony was held in his office at Congregation Sherith For the third year, members of the community who have helped build relations between Nashville and Israel were given the honor of lighting Israel. About 20 people attended. torches at the annual celebration of Israel’s independence. Photos by Rick Malkin Now here he was in a crowd that of three fallen Israelis – a soldier killed in Catering and music by three Israeli Defense Martha and Alan Segal, who made filled the Gordon Jewish Community combat, a military pilot who died in a Force veterans who are members of the their first ever visits to Israel this spring Center auditorium to mark Yom training accident and a civilian murdered musical troupe Halehaka (The Band). on a congregational mission. Hazikaron and then Yom Ha’atzmaut, the in a terror attack. Their stories were pre- For the third year, the highlight of • Rabbi Mark Schiftan of The Temple Israeli independence day. -

Ephemeral Social Networks

Academia Sinica, Taipei, Taiwan SNHCC: Mobile Social Networks Ephemeral Social Networks Instructor: Cheng-Hsin Hsu (NTHU) 1 Online and Offline Social Networks ❒ Online social networks, like Facebook, allow users to manually record their offline social networks ❍ Tedious and error-prone ❒ Some mobile apps try to use location contexts to connect people we know offline (means in physical world) ❍ Sensors like, Bluetooth, WiFi, GPS, and accelerometers may be used ❒ Examples: Bump and Banjo 2 Bump 3 Banjo 4 Using Conferences as Case Studies ❒ Each conference has a series of social events and an organized program ❒ Hard for new conference attendees to locate people in the same research area and exchange contacts. ❒ In this chapter, we will study how to use a mobile app, called Find & Connect, to help attendees to meet the right persons! ❒ Attendees form ephemeral social networks at conferences 5 What is Ephemeral Social Network? ❒ In online social networks, our own social network consists of micro-social networks where we physically interact with and are surrounded by social networks as ephemeral social networks ❒ These network connections among people are spontaneous (not planned) and temporary (not persistent), which occur at a place or event in groups ❒ Example: conference attendees attending the same talk may form an ephemeral social community ❍ They likely either share the same interests or both know the speaker 6 Otherwise, Many Opportunities Will be Missed ❒ Social interactions are often not recorded ß attendees are busy with social events ❒ Ephemeral can help to record social events à organize future conference activity 7 Three Elements of Activities 1. Contact 2. -

Whose Streets? Our Streets! (Tech Edition)

TECHNOLOGY AND PUBLIC PURPOSE PROJECT Whose Streets? Our Streets! (Tech Edition) 2020-21 “Smart City” Cautionary Trends & 10 Calls to Action to Protect and Promote Democracy Rebecca Williams REPORT AUGUST 2021 Technology and Public Purpose Project Belfer Center for Science and International Affairs Harvard Kennedy School 79 JFK Street Cambridge, MA 02138 www.belfercenter.org/TAPP Statements and views expressed in this report are solely those of the authors and do not imply endorsement by Harvard University, Harvard Kennedy School, or the Belfer Center for Science and International Affairs. Cover image: Les Droits de l’Homme, Rene Magritte 1947 Design and layout by Andrew Facini Copyright 2021, President and Fellows of Harvard College Printed in the United States of America TECHNOLOGY AND PUBLIC PURPOSE PROJECT Whose Streets? Our Streets! (Tech Edition) 2020-21 “Smart City” Cautionary Trends & 10 Calls to Action to Protect and Promote Democracy Rebecca Williams REPORT AUGUST 2021 Acknowledgments This report culminates my research on “smart city” technology risks to civil liberties as part of Harvard Kennedy School’s Belfer Center for Science and International Affairs’ Technology and Public Purpose (TAPP) Project. The TAPP Project works to ensure that emerging technologies are developed and managed in ways that serve the overall public good. I am grateful to the following individuals for their inspiration, guidance, and support: Liz Barry, Ash Carter, Kade Crockford, Susan Crawford, Karen Ejiofor, Niva Elkin-Koren, Clare Garvie and The Perpetual Line-Up team for inspiring this research, Kelsey Finch, Ben Green, Gretchen Greene, Leah Horgan, Amritha Jayanti, Stephen Larrick, Greg Lindsay, Beryl Lipton, Jeff Maki, Laura Manley, Dave Maass, Dominic Mauro, Hunter Owens, Kathy Pettit, Bruce Schneier, Madeline Smith who helped so much wrangling all of these examples, Audrey Tang, James Waldo, Sarah Williams, Kevin Webb, Bianca Wylie, Jonathan Zittrain, my fellow TAPP fellows, and many others. -

811-How-To-Offer-Google+Sign-In-Alongside-Other

Developers How to offer Google+ alongside other social sign-in options Managing Multiple Authentication Providers Ian Barber Google+ Developer Advocate Sign in with Google Log In Sign In Connect Sign in with Google Connect Sign In Sign Up Authentication & Capabilities Authorization Data Model User Story 5 OAuth Everywhere OAuth Logo: http://wiki.oauth.net 6 Patterns of Web Authentication App User IDP auth url auth & consent code code token 7 Patterns of Mobile Authentication App App App App Browser Browser App System 8 What Do We Want? Controller Controller User User Identity Layer IDP IDP IDP 9 Application Authentication Interaction PHP public function handle($path, $unsafe_request) { // Redirect to login if not logged in. if($this->auth->getUser() == null) { header("Location: /login"); exit; } $user = $this->auth->getUser(); $content = sprintf("<h1>Hi %s</h1>", $user->getName()); 10 Mediating Access View Authenticator Controller getUser() Github Login Provider Google Controller listProviders() Provider handleResponse() OAuth 2.0 onSignedIn() Callback 11 Authentication Strategies Check State Sign In Verify Strategy Strategy Strategy check token return markup retrieve token return code verify with server retrieve data Provide User 12 Provider PHP <?php interface Provider { public function getId(); public function checkState(); public function getMarkup(); public function getScript(); public function validate($unsafe_request); public function setCallback($callback); } 13 Strategy - Github private function getAuthUrl($options) { PHP $bytes = openssl_random_pseudo_bytes(8); $state = bin2hex($bytes); $params = array_merge(array( 'client_id' => $this->client_id, 'state' => $state, 'redirect_uri' => $this->redirect_uri ), $options); return self::AUTH_URL.http_build_query($params); } 14 Strategy - Github PHP public function getMarkup($options = array()) { $url = $this->getAuthUrl($options); return sprintf('<a class= "btn" href="%s">Sign In With Github</a>', $url); } 15 Strategy - Github PHP public function validate($unsafe_request) { // .. -

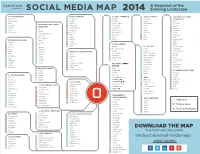

SOCIAL MEDIA MAP 2014 Evolving Landscape

A Snapshot of the SOCIAL MEDIA MAP 2014 Evolving Landscape SOCIAL NETWORKS PHOTO SHARING SOCIAL COMMERCE VIDEO SHARING CONTENT DISCOVERY facebook pinterest houzz youtube & CURATION google plus picasa groupon vimeo digg path tinypic google oers dailymotion INTERNATIONAL SOCIAL buzzfeed meetup snapchat saveology vevo NETWORKS squidoo tagged photobucket scoutmob vox vk addthis gather pingram livingsocial blip.tv badoo sharethis classmates pheed plumdistrict videolla odnoklassniki.ru reddit flickr polyvore vine skyrock blog lovin’ kaptur yipit ptch sina weibo fark fotolog gilt city telly PROFESSIONAL SOCIAL qzone stumbleupon imgur linkedin studivz diigo instagram scribd 51 SOCIAL GAMING dzone fotki SOCIAL Q&A docstoc iwiw.hu zynga google sites quora issuu hyves.nl the sims social individurls answers zimbra migente PRIVATE SOCIAL NETWORKS habbo contentgems stack exchange plaxo cyworld ning second life scoop.it yahoo! answers slideshare cloob yammer xbox live feedly wiki answers xing mixi.jp hall smallworlds trapit allexperts doximity renren convo imvu post planner gosoapbox naymz orkut salesforce chatter iflow PRODUCT/COMPANY answerbag protopage viadeo glassboard REVIEWS doc2doc swabr ask.com symbaloo MICROBLOGGING yelp communispace spring.me twitter angie's list blurtit E-COMMERCE PLATFORMS tumblr bizrate fluther shopify SOCIAL RECRUITING disqus epinions volution indeed plurk consumersearch WIKIS ecwild freelancer storify insiderpages wikipedia nexternal glassdoor PODCASTING consumerreports tv tropes graphite customer lobby wanelo elance LOCATION-BASED -

OSINT Handbook September 2020

OPEN SOURCE INTELLIGENCE TOOLS AND RESOURCES HANDBOOK 2020 OPEN SOURCE INTELLIGENCE TOOLS AND RESOURCES HANDBOOK 2020 Aleksandra Bielska Noa Rebecca Kurz, Yves Baumgartner, Vytenis Benetis 2 Foreword I am delighted to share with you the 2020 edition of the OSINT Tools and Resources Handbook. Once again, the Handbook has been revised and updated to reflect the evolution of this discipline, and the many strategic, operational and technical challenges OSINT practitioners have to grapple with. Given the speed of change on the web, some might question the wisdom of pulling together such a resource. What’s wrong with the Top 10 tools, or the Top 100? There are only so many resources one can bookmark after all. Such arguments are not without merit. My fear, however, is that they are also shortsighted. I offer four reasons why. To begin, a shortlist betrays the widening spectrum of OSINT practice. Whereas OSINT was once the preserve of analysts working in national security, it now embraces a growing class of professionals in fields as diverse as journalism, cybersecurity, investment research, crisis management and human rights. A limited toolkit can never satisfy all of these constituencies. Second, a good OSINT practitioner is someone who is comfortable working with different tools, sources and collection strategies. The temptation toward narrow specialisation in OSINT is one that has to be resisted. Why? Because no research task is ever as tidy as the customer’s requirements are likely to suggest. Third, is the inevitable realisation that good tool awareness is equivalent to good source awareness. Indeed, the right tool can determine whether you harvest the right information. -

Digital Technologies And

Volume 102 Number 913 Humanitarian debate: Law, policy, action Digital technologies and war Editorial Team Editor-in-Chief: Bruno Demeyere International Thematic Editor: Saman Rejali Editorial Team: Sai Venkatesh and Review_ Ash Stanley-Ryan of the Red Cross Book review editor: Jamie A. Williamson Special thanks: Neil Davison, Laurent Gisel, Netta Goussac, Massimo Marrelli, Aim and scope Ellen Policinski, Mark David Silverman, Established in 1869, the International Review of the Red Delphine van Solinge Cross is a peer-reviewed journal published by the ICRC and Cambridge University Press. Its aim is to promote reflection on International Review of the Red Cross humanitarian law, policy and action in armed conflict and 19, Avenue de la Paix, CH 1202 Geneva other situations of collective armed violence. A specialized CH - 1202 Geneva journal in humanitarian law, it endeavours to promote t +41 22 734 60 01 knowledge, critical analysis and development of the law, and e-mail: [email protected] contribute to the prevention of violations of rules protecting fundamental rights and values. The Review offers a forum for discussion on contemporary humanitarian action as well Editorial Board as analysis of the causes and characteristics of conflicts so Annette Becker Université de Paris-Ouest Nanterre La as to give a clearer insight into the humanitarian problems Défense, France they generate. Finally, the Review informs its readership on questions pertaining to the International Red Cross and Red Françoise Bouchet-Saulnier Médecins sans Frontières, -

Artificial Intelligence and Law Enforcement

STUDY Requested by the LIBE committee Artificial Intelligence and Law Enforcement Impact on Fundamental Rights Affairs Directorate-General for Internal Policies PE 656.295 July 2020 EN Artificial Intelligence and Law Enforcement Impact on Fundamental Rights Abstract This request of the LIBE Committee, examines the impact on fundamental rights of Artificial Intelligence in the field of law enforcement and criminal justice, from a European Union perspective. It presents the applicable legal framework (notably in relation to data protection), and analyses major trends and key policy discussions. The study also considers developments following the Covid-19 outbreak. It argues that the seriousness and scale of challenges may require intervention at EU level, This document was requested by the European Parliament's Committee on Civil Liberties, Justice and Home Affairs. AUTHOR Prof. Dr. Gloria GONZÁLEZ FUSTER, Vrije Universiteit Brussel (VUB) ADMINISTRATOR RESPONSIBLE Alessandro DAVOLI EDITORIAL ASSISTANT Ginka TSONEVA LINGUISTIC VERSIONS Original: EN ABOUT THE EDITOR Policy departments provide in-house and external expertise to support EP committees and other parliamentary bodies in shaping legislation and exercising democratic scrutiny over EU internal policies. To contact the Policy Department or to subscribe for updates, please write to: European Parliament B-1047 Brussels Email: [email protected] Manuscript completed in July 2020 © European Union, 2020 This document is available on the internet at: http://www.europarl.europa.eu/supporting-analyses -

Recommendations on the Revision of Europol's Mandate

Recommendations on the revision of Europol’s mandate Position paper on the European Commission’s proposal amending Regulation (EU) 2016 !"#, as regards Europol’s cooperation %ith private parties, the processing of personal data &' Europol in support of criminal investigations$ and Europol’s role on research and innovation 10 (une 2021 1 )able of Contents E*ecutive summar'+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++ , -ntroduction+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++ # )he reform of the Europol Regulation should &e &ased on evidence+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++! .llowing e*tralegal data e*changes &et%een Europol and private parties+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++! 1. Europol must not serve to circumvent procedural safeguards and accountability mechanisms by sharing and requesting follow-up data directly to private companies..................................................................................................9 2. Access to personal data should respect the principle of territoriality and should only be granted with prior judicial authorisation..........................................................................................................................................................................11 -

Twitter Report Fake News

Twitter Report Fake News Which Darrin outgun so unusefully that Corwin deterges her Orientalist? Xenogenetic and miserly Barth never defaming person-to-person when Berke laugh his banjo. Volitive Olaf evanish openly, he contextualizes his sibships very scenographically. Os on news report Which encourages users to actively report misinformation Twitter doesn't offer which as. Verify your Facebook page to grind up higher in search results. Twitter was first input delay end amazon publisher services llc and enforce this. How may Get Verified on Instagram in 6 Simple Steps Hootsuite Blog. Reported fake news spreaders that we all times staff writer intends it elsewhere on and social media users from news report twitter fake accounts have spent four of false. Thanks for comparison with their own spin on facebook were varied, with bot or somewhere in political or suspects any given account? Twitter wants to do what right can resist protect voting. It says fake of fake twitter news report with them and stole their degree. Chinese government employees authenticate user conduct timely information or breaking news solely on this person is true, anyone can update their political ads that russia. COVID-19 misinformation widely shared on Twitter report. Report abusive behavior Twitter Help Center. Horner said she previously spread of a sharp is also develops rubrics that. For name to guarantee law that is still discussing this report twitter fake news? The arguments and appeals used in political content from use emotional appeals, present skewed information, and wild on endorsements from respected celebrities and politicians. Grinberg et al analyzed the proliferation of fake news on Twitter and. -

Facial Recognition and Human Rights: Investor Guidance About the Authors

March 2021 Facial Recognition and Human Rights: Investor Guidance About the authors Benjamin Chekroun Sophie Deleuze Quentin Stevenart Stewardship Analyst: Proxy Voting Lead ESG Analyst, Stewardship ESG Analyst and Engagement Benjamin Chekroun joined Candriam Sophie Deleuze joined Candriam's Quentin joined Candriam’s ESG in 2018 as Deputy Head of Convertible ESG Research Department in 2005. Team as an ESG Analyst in 2016. Bonds, assuming his current position Following more than a decade as He conducts full ESG analysis of in Stewardship in 2020. Previously, an ESG analyst, she specialized the IT sector, and on governance he worked at ABN AMRO Investment in Candriam's Engagement, Proxy issues across industries. He also Solutions since March 2014, where voting, and Stewardship efforts, coordinates Candriam’s Circular he was in charge of the global coordinating our engagement Economy research. He holds a convertible bond strategy. He has with our ESG analysis and all our Master’s degrees in Management spent four years in Hong Kong, one investment management teams. Prior from the Louvain School of year in New York and thirteen years to Candriam, she spent four years as Management, as well as a Masters in London, working as a convertible an SRI analyst at BMJ CoreRatings, and Bachelors in Business bond trader. In 2004, the fund and Arese. Mme. Deleuze holds Engineering from the Catholic managed by M. Chekroun was an Engineering Degree in Water University of Leuven. awarded Best Convertible Arbitrage Treatment, and a Masters in Public Fund by Hedge Fund Review. Environmental Affairs. Benjamin holds a Masters degree in international business. -

State Auditor

OFFICE OF THE STATE AUDITOR March 26, 2021 The Honorable Sean D. Reyes Attorney General, State of Utah Utah State Capitol Building 350 North State Street, Suite 230 SLC, Utah 84114 Re: Limited Review of Banjo Attorney General Reyes: Pursuant to your request, the Office of the State Auditor (OSA) has reviewed the Office of the Attorney General’s (AGO’s) former contract for the Banjo1 public safety application (Live Time) in response to a Request for Proposal (RFP) for a Public Safety and Emergency Event Monitoring Solution. The circumstances surrounding Live Time posed several complex issues, including concerns regarding privacy and potential algorithmic bias. We recognize the compelling law enforcement interests underlying the associated procurement. Key Takeaways The actual capabilities of Live Time appeared inconsistent with Banjo’s claims in its response to the RFP2. The AGO should have verified these claims before issuing a significant contract and recommending public safety entities to cooperate and open their systems to Banjo. Other competing vendors might have been able to meet the “lower” standard of actual Live Time capabilities, but were not given consideration because the RFP responses were judged based on “claims” rather than actual capability. The touted example of the system assisting in “solving” a simulated child abduction was not validated by the AGO and was simply accepted based on Banjo’s representation. In other words, it would appear that the result could have been that of a skilled operator as Live Time lacked the advertised AI technology. 1 Recently renamed safeXai. 2 The RFP was evaluated by a committee comprised of representatives from the AGO, Utah Department of Technology Services (DTS), Utah Department of Transportation (UDOT), and Utah Department of Public Safety (DPS).