State Auditor

Recommended publications

Department of State Treasurer Raleigh, North Carolina Statewide Financial

STATE OF NORTH CAROLINA DEPARTMENT OF STATE TREASURER RALEIGH, NORTH CAROLINA STATEWIDE FINANCIAL STATEMENT AUDIT PROCEDURES FOR THE YEAR ENDED JUNE 30, 2014 OFFICE OF THE STATE AUDITOR BETH A. WOOD, CPA STATE AUDITOR STATE OF NORTH CAROLINA Office of the State Auditor 2 S. Salisbury Street 20601 Mail Service Center Raleigh, NC 27699-0601 Telephone: (919) 807-7500 Fax: (919) 807-7647 Internet: http://www.ncauditor.net Beth A. Wood, CPA, State Auditor AUDITOR’S TRANSMITTAL The Honorable Pat McCrory, Governor Members of the North Carolina General Assembly The Honorable Janet Cowell, State Treasurer Department of State Treasurer As part of our audit of the State of North Carolina’s financial statements, we have audited, in accordance with the auditing standards generally accepted in the United States of America and the standards applicable to financial audits contained in Governmental Auditing Standards, issued by the Comptroller General of the United States, selected elements of the Department of State Treasurer’s financial statements, as of and for the year ended June 30, 2014. For State Health Plan cash basis expenditures, we made reference to the reports of other auditors as a basis, in part, for our opinion on the State’s financial statements. Our audit was performed by authority of Article 5A of Chapter 147 of the North Carolina General Statutes. Our audit objective was to render an opinion on the State of North Carolina’s financial statements and not the Department’s financial statements. However, the report included herein is in relation to our audit scope at the Department and not to the State of North Carolina as a whole.

Wyoming Legislative Service Office

WYOMING LEGISLATIVE SERVICE OFFICE ISSUE BRIEF OTHER STATES’ LOCAL GOVERNMENT FISCAL PROCEDURES TRAINING August 2020 by Karla Smith, Senior Program Evaluator BACKGROUND Legislative Service Office staff presented an issue brief summarizing fiscal procedures training for Wyoming local government and political subdivision fiscal officers to the Management Audit Committee during its January 2020 meeting (Wyoming LSO Issue Brief: Fiscal Procedures Training, January 2020). The Committee requested further information regarding other states’ statutory requirements for fiscal procedures training for local government and special district clerks and treasurers. This issue brief examines the statutory requirements of Wyoming and nine comparator states regarding local government training in fiscal procedures. WYOMING Wyoming statutes do not require local government fiscal officers to complete state fiscal procedures training, but do require the State Auditor and the State Treasurer to provide voluntary education programs: To enhance the background and working knowledge of political subdivision treasurers in governmental accounting, portfolio reporting and compliance, and investments and cash management, the state auditor and the state treasurer shall conduct voluntary education programs for persons elected or appointed for the first time to any office or as an employee of any political subdivision where the duties of that office or positions of employment include taking actions related to investment of public funds and shall also hold annual voluntary continuing education programs for persons continuing to hold those offices and positions of employment. 20IB004 • RESEARCH AND EVALUATION DIVISION • 200 W. 24th Street • Cheyenne, Wyoming 82002 TELEPHONE 307-777-7881 • EMAIL [email protected] • WEBSITE www.wyoleg.gov

Observer5-2015(Health&Beauty)

The Jewish Observer www.jewishobservernashville.org Vol. 80 No. 5 • May 2015 12 Iyyar-13 Sivan 5775 Nashville crowd remembers Israel’s fallen and celebrates its independence By CHARLES BERNSEN Watching as about 230 people gathered on April 23 for a somber remembrance of Israel’s fallen soldiers and terror victims followed immediately by a joyful celebration of the 67th anniversary of the Jewish state’s birth, Rabbi Saul Strosberg couldn’t help but marvel. After all, it has been only eight years since the Nashville Jewish community started observing Yom Hazikaron, the Israeli equivalent of Memorial Day. Organized by several Israelis living in Nashville, including the late Miriam Halachmi, that first, brief ceremony was held in his office at Congregation Sherith Israel. About 20 people attended. Now here he was in a crowd that filled the Gordon Jewish Community Center auditorium to mark Yom Hazikaron and then Yom Ha’atzmaut, the Israeli independence day. [Photo caption: For the third year, members of the community who have helped build relations between Nashville and Israel were given the honor of lighting torches at the annual celebration of Israel’s independence. Photos by Rick Malkin] [...] of three fallen Israelis – a soldier killed in combat, a military pilot who died in a training accident and a civilian murdered in a terror attack. Their stories were pre- [...] Catering and music by three Israeli Defense Force veterans who are members of the musical troupe Halehaka (The Band). For the third year, the highlight of [...] Martha and Alan Segal, who made their first ever visits to Israel this spring on a congregational mission. • Rabbi Mark Schiftan of The Temple

State Auditor

Vote Nov. 4th STATE AUDITOR Paid for by Friends of Tom Wagner. Friends of Tom Wagner, P.O. Box 791, Dover, DE 19903. Presorted First-Class Mail, US Postage PAID, Wilmington, DE, Permit No. 1858. Keep State Auditor R. Thomas Wagner, CFE, CGFM, CICA. Demonstrated Independence: Tom Wagner is the last line of defense against one-party government. Since July 2013 Tom Wagner identified: • $1.9 million in fraud, waste, and abuse • $2 million in unclaimed federal reimbursements • More than $18 million in audit findings and cost savings. "As State Auditor, I'll fight to ensure our state government and public schools spend your tax dollars effectively. You work hard for your money and you deserve an Auditor who will work just as hard to protect it." - BRENDA MAYRACK. The no-nonsense auditor we need to protect our tax dollars. BRENDA HAS THE RIGHT EXPERIENCE ... Wilmington attorney with a solo law practice focused on auditing and audit defense. Tech entrepreneur. University of Delaware honors graduate with a law degree and masters in government management from the University of Wisconsin. ... TO FIGHT FOR DELAWARE'S TAXPAYERS. Push agencies to improve performance and get better results for you and your family. Leave politics at the door and focus on what's not working in Dover with a fair audit plan. Modernize the Auditor's office and ensure it has [...] "Brenda will reform the Auditor's office and eliminate wasteful practices from state government. Delaware families need a tough but fair ally like Brenda fighting to protect taxpayer dollars." - CONGRESSMAN JOHN CARNEY (FORMER LT.

EXECUTIVE DEPARTMENT SECTION VI-1 Executive Officers Enumerated - Offices and Records - Duties

OKLAHOMA CONSTITUTION ARTICLE VI - EXECUTIVE DEPARTMENT SECTION VI-1 Executive officers enumerated - Offices and records - Duties. A. The Executive authority of the state shall be vested in a Governor, Lieutenant Governor, Secretary of State, State Auditor and Inspector, Attorney General, State Treasurer, Superintendent of Public Instruction, Commissioner of Labor, Commissioner of Insurance and other officers provided by law and this Constitution, each of whom shall keep his office and public records, books and papers at the seat of government, and shall perform such duties as may be designated in this Constitution or prescribed by law. B. The Secretary of State shall be appointed by the Governor by and with the consent of the Senate for a term of four (4) years to run concurrently with the term of the Governor. Amended by State Question Nos. 509 to 513, Legislative Referendum Nos. 209 to 213, adopted at election held on July 22, 1975, eff. Jan. 8, 1979; State Question No. 594, Legislative Referendum No. 258, adopted at election held on Aug. 26, 1986; State Question No. 613, Legislative Referendum No. 270, adopted at election held on Nov. 8, 1988. SECTION VI-2 Supreme power vested in Governor. The Supreme Executive power shall be vested in a Chief Magistrate, who shall be styled "The Governor of the State of Oklahoma." SECTION VI-3 Eligibility to certain state offices. No person shall be eligible to the office of Governor, Lieutenant Governor, Secretary of State, State Auditor and Inspector, Attorney General, State Treasurer or Superintendent of Public Instruction except a citizen of the United States of the age of not less than thirty-one (31) years and who shall have been ten (10) years next preceding his or her election, or appointment, a qualified elector of this state. -

Report from the State Auditor's Office

OFFICE OF THE STATE AUDITOR Making Government Work Better. Presentation to the Massachusetts Collectors and Treasurers Association, June 14, 2011. Gerald A. McDonough, Deputy Auditor for Legal and Policy, Office of the State Auditor Suzanne M. Bump. Auditor Bump’s Priorities: Retooling the Office of the State Auditor for the 21st century: New employees with appropriate credentials. A diverse workforce that reflects the Commonwealth’s residents. A diversity of experiences, to meet Auditor Bump’s goals for auditing. A focus on different kinds of audits: Performance audits. Tax expenditure audit. Audits that help make government work better: For example, the recent audit of district courts and the new probation fees. State Audits: OSA audits state agencies, including: institutions of higher education; health and human service agencies; constitutional offices; and other state government executive offices, functions, and programs. Partners with a major accounting firm in performing the Single Audit of the Commonwealth, a comprehensive annual financial and compliance audit of the Commonwealth as a whole that encompasses the accounts and activities of all state agencies. Oversees state agencies’ responsibilities under the Commonwealth’s Internal Control Statute, including investigating referrals of variances, thefts, etc. Educates, guides, and assists state agencies on the importance of internal controls in order to mitigate risk. Authority and Vendor Audits: OSA audits quasi-public authorities such as Massport, MassDOT, and local housing authorities. Auditor Bump is enhancing the office’s work in auditing the Commonwealth’s vendors: Her goal is to identify funds that are misappropriated by vendors through waste, fraud, and abuse.

Ephemeral Social Networks

Academia Sinica, Taipei, Taiwan SNHCC: Mobile Social Networks. Ephemeral Social Networks. Instructor: Cheng-Hsin Hsu (NTHU). Online and Offline Social Networks ❒ Online social networks, like Facebook, allow users to manually record their offline social networks ❍ Tedious and error-prone ❒ Some mobile apps try to use location contexts to connect people we know offline (meaning in the physical world) ❍ Sensors like Bluetooth, WiFi, GPS, and accelerometers may be used ❒ Examples: Bump and Banjo. Bump. Banjo. Using Conferences as Case Studies ❒ Each conference has a series of social events and an organized program ❒ Hard for new conference attendees to locate people in the same research area and exchange contacts. ❒ In this chapter, we will study how to use a mobile app, called Find & Connect, to help attendees to meet the right persons! ❒ Attendees form ephemeral social networks at conferences. What is Ephemeral Social Network? ❒ In online social networks, our own social network consists of micro-social networks where we physically interact with and are surrounded by social networks as ephemeral social networks ❒ These network connections among people are spontaneous (not planned) and temporary (not persistent), which occur at a place or event in groups ❒ Example: conference attendees attending the same talk may form an ephemeral social community ❍ They likely either share the same interests or both know the speaker. Otherwise, Many Opportunities Will be Missed ❒ Social interactions are often not recorded ← attendees are busy with social events ❒ Ephemeral can help to record social events → organize future conference activity. Three Elements of Activities 1. Contact 2.

Legislative Oversight in Delaware

Legislative Oversight in Delaware Capacity and Usage Assessment Oversight through Analytic Bureaucracies: Minimal Oversight through the Appropriations Process: Moderate Oversight through Committees: Limited Oversight through Administrative Rule Review: Minimal Oversight through Advice and Consent: Minimal Oversight through Monitoring Contracts: Limited Judgment of Overall Institutional Capacity for Oversight: Moderate Judgment of Overall Use of Institutional Capacity for Oversight: Moderate Summary Assessment Delaware appears to emphasize resolving agency performance problems rather than punishing or eliminating poorly performing programs. One element that may explain this solution-driven approach is the nature of Delaware’s general assembly itself--a small legislature with no term limits. This allows legislators to serve for long periods of time and to become better acquainted with their counterparts across the aisle, producing a more collegial environment where partisan confrontation on every issue is not always desirable. We were told that there are “a lot of relationships in a small legislature, so it is better to pick your battles than to oppose every measure.” (interview notes, 7/25/18). Major Strengths Delaware’s legislature possesses several tools to conduct oversight, foremost among them the Joint Legislative Oversight and Sunset Committee (JLOSC). This committee works with staff to conduct a very thorough review of a small number of government entities. The committee rarely eliminates these entities, although it has the power to do so. Rather it tries to determine how to improve the entities’ performance. Based on its recommendations for improvement, the committee staff and the entity help draft legislation to resolve performance problems. The emphasis the committee places on enacting legislation to resolve performance problems that it identifies ensures that oversight hearings are more than merely public scolding. -

Audit of the Agribusiness Development Corporation

Audit of the Agribusiness Development Corporation A Report to the Legislature of the State of Hawai‘i Report No. 21-01 January 2021 OFFICE OF THE AUDITOR STATE OF HAWAI‘I OFFICE OF THE AUDITOR STATE OF HAWAI‘I Constitutional Mandate Pursuant to Article VII, Section 10 of the Hawai‘i State Constitution, the Office of the Auditor shall conduct post-audits of the transactions, accounts, programs and performance of all departments, offices and agencies of the State and its political subdivisions. The Auditor’s position was established to help eliminate waste and inefficiency in government, provide the Legislature with a check against the powers of the executive branch, and ensure that public funds are expended according to legislative intent. Hawai‘i Revised Statutes, Chapter 23, gives the Auditor broad powers to examine all books, records, files, papers and documents, and financial affairs of every agency. The Auditor also has the authority to summon people to produce records and answer questions under oath. Our Mission To improve government through independent and objective analyses. We provide independent, objective, and meaningful answers to questions about government performance. Our aim is to hold agencies accountable for their policy implementation, program management, and expenditure of public funds. Our Work We conduct performance audits (also called management or operations audits), which examine the efficiency and effectiveness of government programs or agencies, as well as financial audits, which attest to the fairness of financial statements of the State and its agencies. Additionally, we perform procurement audits, sunrise analyses and sunset evaluations of proposed regulatory programs, analyses of proposals to mandate health insurance benefits, analyses of proposed special and revolving funds, analyses of existing special, revolving and trust funds, and special studies requested by the Legislature. -

Chapter 3 Auditor

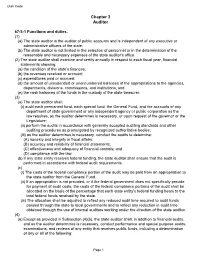

Utah Code Chapter 3 Auditor 67-3-1 Functions and duties. (1) (a) The state auditor is the auditor of public accounts and is independent of any executive or administrative officers of the state. (b) The state auditor is not limited in the selection of personnel or in the determination of the reasonable and necessary expenses of the state auditor's office. (2) The state auditor shall examine and certify annually in respect to each fiscal year, financial statements showing: (a) the condition of the state's finances; (b) the revenues received or accrued; (c) expenditures paid or accrued; (d) the amount of unexpended or unencumbered balances of the appropriations to the agencies, departments, divisions, commissions, and institutions; and (e) the cash balances of the funds in the custody of the state treasurer. (3) (a) The state auditor shall: (i) audit each permanent fund, each special fund, the General Fund, and the accounts of any department of state government or any independent agency or public corporation as the law requires, as the auditor determines is necessary, or upon request of the governor or the Legislature; (ii) perform the audits in accordance with generally accepted auditing standards and other auditing procedures as promulgated by recognized authoritative bodies; (iii) as the auditor determines is necessary, conduct the audits to determine: (A) honesty and integrity in fiscal affairs; (B) accuracy and reliability of financial statements; (C) effectiveness and adequacy of financial controls; and (D) compliance with the law. (b) If any state entity receives federal funding, the state auditor shall ensure that the audit is performed in accordance with federal audit requirements. -

Senior Auditor Evaluator I, Branch 3

CALIFORNIA STATE AUDITOR | Senior Auditor Evaluator I, Branch 3 CALIFORNIA STATE AUDITOR CAREER OPPORTUNITY SENIOR AUDITOR EVALUATOR I Branch 3 Job Control 96354 OUR MISSION The California State Auditor promotes the efficient and effective management of public funds and programs by providing to citizens and the State independent, objective, accurate, and timely evaluations of state and local governments' activities. auditor.ca.gov WHO WE ARE The California State Auditor is the State’s independent and nonpartisan auditing and investigative arm, serving the California State Legislature and the public. For nearly 60 years, the office has served California by auditing and reviewing state, local, or publicly created agency performance and operations; identifying wrongdoing or mismanagement; and providing insight on issues. Our audits result in truthful, balanced, and unbiased information that clarifies issues and brings more accountability to government programs. We pride ourselves on proposing innovative solutions to problems identified by our audits so that state and other public agencies can better serve Californians. Each year our recommendations result in meaningful change to government, saving taxpayers millions of dollars. The California State Auditor is now accepting applications for up to two Senior Auditor Evaluator I level positions. Individuals in this position serve important roles in improving California government by assuring the performance, accountability, and transparency that its citizens deserve. Senior Auditor Evaluator I's in Branch 3 typically oversee performance audits. If you are ready to take your audit career to the next level, read the details in this bulletin and submit an application. COMPENSATION/BENEFITS Classification: Senior Auditor Evaluator I. Annual salary range: $68,268–89,736. Features of the comprehensive benefit package include: • Retirement (defined benefit plan), as well as 401(k)

Legislative Oversight in Hawaii

Legislative Oversight in Hawaii Capacity and Usage Assessment Oversight through Analytic Bureaucracies: Moderate Oversight through the Appropriations Process: Moderate Oversight through Committees: High Oversight through Administrative Rule Review: Minimal Oversight through Advice and Consent: Limited Oversight through Monitoring Contracts: Moderate Judgment of Overall Institutional Capacity for Oversight: High Judgment of Overall Use of Institutional Capacity for Oversight: High Summary Assessment Hawaii has multiple analytic support agencies that aid the legislature with its oversight responsibilities. The system stresses transparency and public accessibility of audit reports. Audit reports are used regularly in committee hearings. Sunrise and sunset reviews provide a mechanism for legislators to influence state agencies. Analytic bureaucracies aid in these reviews. Audits also provide a mechanism to oversee contracts despite the fact that the executive branch is primarily empowered to review and monitor contracts (http://spo.hawaii.gov/procurement-wizard/manual/contract-management/, accessed 9/5/18). Major Strengths Audit reports are well publicized and summaries are prepared with the intent to promote public understanding and accessibility to Hawaii’s citizens. The auditor’s office provides one-page follow-up reports that describe actions taken in response to its reports. Moreover, the audit reports themselves address whether the legislature and agencies acted on audit recommendations. Audits provide a mechanism that the legislature uses to oversee contracts even though the executive branch is solely empowered to monitor contracts. Audits are used in making budget decisions and standing committees attempt to pass legislation based on audit findings. Challenges The governor has strong appointment powers and can thus exert a lot of control over state government.