Site Audit Sample

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


The Yes Catalogue

THE YES CATALOGUE
1. Marquee Club Programme FLYER UK M P LTD. AUG 1968
2. MAGPIE TV UK ITV 31 DEC 1968
   ???? (Rec. 31 Dec 1968)
3. Marquee Club Programme FLYER UK M P LTD. JAN 1969
   Yes! 56w
4. TOP GEAR RADIO UK BBC 12 JAN 1969
   Dear Father (Rec. 7 Jan 1969) Anderson/Squire
   Everydays (Rec. 7 Jan 1969) Stills
   Sweetness (Rec. 7 Jan 1969) Anderson/Squire/Bailey
   Something's Coming (Rec. 7 Jan 1969) Sondheim/Bernstein
5. TOP GEAR RADIO UK BBC 23 FEB 1969
   Something's Coming (Rec. ????) Sondheim/Bernstein
   (Peter Banks has this show listed in his notebook.)
6. Marquee Club Programme FLYER UK M P LTD. MAR 1969
   (Yes was featured in this edition.)
7. GOLDEN ROSE TV FESTIVAL TV SWITZ MONTREUX 24 APR 1969 - 25 APR 1969
8. RADIO ONE CLUB RADIO UK BBC 22 MAY 1969
9. THE JOHNNIE WALKER SHOW RADIO UK BBC 14 JUN 1969
   Looking Around (Rec. 4 Jun 1969) Anderson/Squire
   Sweetness (Rec. 4 Jun 1969) Anderson/Squire/Bailey
   Every Little Thing (Rec. 4 Jun 1969) Lennon/McCartney
10. JAM TV HOLL 27 JUN 1969
11. SWEETNESS 7 PS/M/BL & YEL FRAN ATLANTIC 650 171 27 JUN 1969
   F1 Sweetness (Edit) 3:43 J. Anderson/C. Squire (Bailey not listed)
   F2 Something's Coming (From "West Side Story") 7:07 Sondheim/Bernstein
12. SWEETNESS 7 M/RED UK ATL/POLYDOR 584280 04 JUL 1969
   A Sweetness (Edit) 3:43 Anderson/Squire (Bailey not listed)
   B Something's Coming (From "West Side Story") 7:07 Sondheim/Bernstein
13.

Unlocking Trapped Value in Data | Accenture

Unlocking trapped value in data – accelerating business outcomes with artificial intelligence
Actions to bridge the data and AI value gap
Enterprises need a holistic data strategy to harness the massive power of artificial intelligence (AI) in the cloud.
By 2022, 90% of corporate strategies will explicitly cite data as a critical enterprise asset, according to Gartner [1]. The reason why? Fast-growing pressure on enterprises to be more responsive, customer obsessed and market relevant. These capabilities demand real-time decision-making, augmented with AI/machine learning (ML)-based insights. And that requires end-to-end data visibility, free from complexities in the underlying infrastructure. For enterprises still reliant on legacy systems, this can be particularly challenging. Irrespective of challenges, however, the direction is clear. According to IDC estimates, spend on AI platforms and applications by enterprises in Asia Pacific (excluding Japan and China) is set to increase by a five-year CAGR of 30%, rising from US$753.6 million in 2020 to US$2.2 billion in 2024 [2]. The need to invest strategically in AI/ML is higher than ever as CXOs move away from an experimental mindset and focus on breaking down data silos to extract full value from their data assets. These technologies are likely to have a significant impact on enterprises’ data strategies, making them increasingly integrated into CXOs’ growth objectives for the business. This is supported by research for Accenture’s Technology Vision 2021, which shows that the top three technology areas where enterprises are prioritizing investment over the next year are cloud (45%), AI (39%) and IoT (35%) [3].
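The growth figures quoted in this excerpt can be sanity-checked with the standard compound annual growth rate formula. The sketch below is only an illustration: the function name `cagr` is hypothetical, and the excerpt does not say how IDC counts periods, so both a four-interval reading (2020 to 2024) and a five-interval reading are computed as assumptions.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` compounding intervals."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Figures quoted in the excerpt, in US$ millions.
spend_2020 = 753.6
spend_2024 = 2200.0

# Assumption: 2020 -> 2024 spans four compounding intervals.
rate_four_periods = cagr(spend_2020, spend_2024, 4)
# Alternative assumption: five compounding intervals.
rate_five_periods = cagr(spend_2020, spend_2024, 5)

print(f"4 intervals: {rate_four_periods:.1%}")
print(f"5 intervals: {rate_five_periods:.1%}")
```

Under the four-interval reading the result lands close to the quoted 30%; the five-interval reading gives a noticeably lower rate.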

Vermont Wood Works Council

REPORT FOR APR 1, 2021 - APR 30, 2021 (GENERATED 4/30/2021)
VERMONT WOOD - SEO & DIGITAL MARKETING REPORT

Search Engine Visibility & Competitors
[Chart: Number of organic keywords in top 10 by domain, May to Apr. Domains: vermontwood.com, vermontfurnituremakers.com, madeinvermontmarketplace.c…, vermontwoodworkingschool.…]

Organic Search Engine Visibility
[Chart: Organic visibility incl. competitors, May to Apr]

Domain                          Organic visibility   Previous period
madeinvermontmarketplace.com    6.91                 +0.47
vermontwood.com                 6.43                 -11.60
vermontfurnituremakers.com      2.50                 -10.60
vermontwoodworkingschool.com    1.56                 =
vtfpa.org                       0.13                 -12.91
vermontwoodlands.org            0.09                 +89.73

Google Keyword Ranking Distribution
# of keywords tracked: 29
# of keywords in top 3: 0 (previous period 0%, previous year -100%)
# of keywords in top 10: 8 (previous period 0%, previous year 14%)
# of keywords in top 20: 10 (previous period 0%, previous year 0%)

Google Keyword Rankings
Keyword                 Organic position   Position change
vermont wooden toys     4                  =
woodworkers vermont     4                  =

Organic position notes: The "Organic Position" means the item ranking on the Google search result page.

Courseleaf's Users' Guide

Table of Contents
Home
  CourseLeaf
    Browsers
    Web Site Accessibility
    Showing Differences (Red/Green Markup)
  CourseLeaf Catalog (CAT)
    Borrowed Content

The War and Fashion

Fashion, Society, and the First World War
International Perspectives
Edited by Maude Bass-Krueger, Hayley Edwards-Dujardin, and Sophie Kurkdjian
BLOOMSBURY VISUAL ARTS
Bloomsbury Publishing Plc
50 Bedford Square, London, WC1B 3DP, UK
1385 Broadway, New York, NY 10018, USA
29 Earlsfort Terrace, Dublin 2, Ireland
BLOOMSBURY, BLOOMSBURY VISUAL ARTS and the Diana logo are trademarks of Bloomsbury Publishing Plc
First published in Great Britain 2021
Selection, editorial matter, Introduction © Maude Bass-Krueger, Hayley Edwards-Dujardin, and Sophie Kurkdjian, 2021
Individual chapters © their Authors, 2021
Maude Bass-Krueger, Hayley Edwards-Dujardin, and Sophie Kurkdjian have asserted their right under the Copyright, Designs and Patents Act, 1988, to be identified as Editors of this work. For legal purposes the Acknowledgments on p. xiii constitute an extension of this copyright page.
Cover design by Adriana Brioso
Cover image: Two women wearing a Poiret military coat, c.1915. Postcard from authors’ personal collection.
This work is published subject to a Creative Commons Attribution Non-commercial No Derivatives Licence. You may share this work for non-commercial purposes only, provided you give attribution to the copyright holder and the publisher. Bloomsbury Publishing Plc does not have any control over, or responsibility for, any third-party websites referred to or in this book.

The Differentiated Classroom (Tomlinson)

Education $21.95
The Differentiated Classroom: Responding to the Needs of All Learners
It’s an age-old challenge: How can teachers divide their time, resources, and efforts to effectively instruct so many students of diverse backgrounds, readiness and skill levels, and interests? The Differentiated Classroom: Responding to the Needs of All Learners offers a powerful, practical solution. Drawing on nearly three decades of experience, author Carol Ann Tomlinson describes a way of thinking about teaching and learning that will change all aspects of how you approach students and your classroom. She looks to the latest research on learning, education, and change for the theoretical basis of differentiated instruction and why it’s so important to today’s children. Yet she offers much more than theory, filling the pages with real-life examples of teachers and students using, and benefiting from, differentiated instruction. At the core of the book, three chapters describe actual lessons, units, and classrooms with differentiated instruction in action. Tomlinson looks at elementary and secondary classrooms in nearly all subject areas to show how real teachers turn the challenge of differentiation into a reality. Her insightful analysis of how, what, and why teachers differentiate lays the groundwork for you to bring differentiation to your own classroom. Tomlinson’s commonsense, classroom-tested advice speaks to experienced and novice teachers as well as educational leaders who want to foster differentiation in their schools.

Cisco Webex Training Center User Guide

Cisco WebEx Training Center User Guide WBS29
Copyright © 1997-2013 Cisco and/or its affiliates. All rights reserved. WEBEX, CISCO, Cisco WebEx, the CISCO logo, and the Cisco WebEx logo are trademarks or registered trademarks of Cisco and/or its affiliated entities in the United States and other countries. Third-party trademarks are the property of their respective owners.
U.S. Government End User Purchasers. The Documentation and related Services qualify as "commercial items," as that term is defined at Federal Acquisition Regulation ("FAR") (48 C.F.R.) 2.101. Consistent with FAR 12.212 and DoD FAR Supp. 227.7202-1 through 227.7202-4, and notwithstanding any other FAR or other contractual clause to the contrary in any agreement into which the Agreement may be incorporated, Customer may provide to Government end user or, if the Agreement is direct, Government end user will acquire, the Services and Documentation with only those rights set forth in the Agreement. Use of either the Services or Documentation or both constitutes agreement by the Government that the Services and Documentation are commercial items and constitutes acceptance of the rights and restrictions herein.
Last updated: 10232013
www.webex.com
Table of Contents
Setting up and Preparing for a Training Session
  Setting up Training Center
    System requirements for Training Center for Windows

Md Dinesh Nair

Poetry Series
M.D DINESH NAIR - poems -
Publication Date: 2012
Publisher: Poemhunter.com - The World's Poetry Archive

M.D DINESH NAIR(9 -21)
My poems trespass the boundaries of caste, creed, nationality and lines flip not, the lands wither not and thoughts never retreat. My negation of the concept of God is highly motivated by my own convictions, and transparency of thoughts validated by common reading of the most illustrative science-based articles has revealed to me the non-existence of the supernatural of any kind. Stephen Hawking, the scientist who explained the mystery of time, the big bang theory and the various aspects of rational thinking, is a great hero to me. If you think that what you think of God and religion otherwise is correct, I just leave you there, and in this regard I humbly reject all your demands on reconsidering my conviction.
M.D Dinesh Nair, Lecturer in English, Sri Chaitanya Group of Colleges, Vijayawada, INDIA. e-mail: mddnair@

A Breath I Cherish
I cherish your breath a lot.
As your breath is a sweet sob
That chimes out tales for a reverie.
Perhaps you breathe for none but me.
At times I miss your breath
As I flee to a world of solitude.
But then is heard your breath winding in
To reach the peaks of my utopia.
Your breath gets canonised
And my entity rebounds unto you again.
A love is born and blossomed
As I search for you in the dark.
I cherish your breath a lot.

App Search Optimisation Plan and Implementation. Case: Primesmith Oy (Jevelo)

App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo)
Thuy Nguyen
Bachelor’s Thesis, Degree Programme in International Business, 2016

Abstract
19 August 2016
Author: Thuy Nguyen
Degree programme: International Business
Thesis title: App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo).
Number of pages and appendix pages: 49 + 7

This is a project-based thesis discussing the fundamentals of app search optimisation in modern business. The project plan and implementation were carried out for Jevelo, a B2C handmade jewellery company, within a three-month period, and recommendations were then given for future development. This thesis aims to approach new marketing techniques that play important roles in online marketing tactics. The main goal of this study is to accumulate best practices of app search optimisation and develop logical thinking when performing project elements and analysing results.
The theoretical framework introduces an overview of facts and the innovative updates in the internet environment. They include factors of online marketing, mobile marketing and app search optimisation, with the merger of search engine optimisation (SEO) and app store optimisation (ASO) being considered as an immediate action for digital marketers nowadays. The project of app search optimisation in chapter 4 reflects the accomplished activities of the SEO implementation from scratch, together with an ASO analysis and suggestions for Jevelo. Other marketing methods like search engine marketing, affiliate marketing and content marketing, together with the customer analysis, are briefly described to support the theoretical aspects. The discussion section visualises the entire thesis process by summing up theoretical knowledge, providing project evaluation as well as a reflection of self-learning.

Seo-101-Guide-V7.Pdf

Copyright 2017 Search Engine Journal. Published by Alpha Brand Media. All Rights Reserved.

MAKING BUSINESSES VISIBLE

Consumer tracking information
Combine data from your web analytics. Adding web analytics data to a crawl will provide you with a detailed gap analysis and enable you to find URLs which have generated traffic but aren’t linked to, also known as orphans.

External link metrics
Uploading backlink data to a crawl will also identify non-indexable, redirecting, disallowed & broken pages being linked to. Do this by uploading backlinks from popular backlink checker tools to track performance of the most linked-to content on your site.

Search Analytics
DeepCrawl’s Advanced Google Search Console Integration allows you to connect technical site performance insights with organic search information from Google Search Console’s Search Analytics report.

Crawler requests
Integrate summary data from any log file analyser tool into your crawl. Integrating log file data enables you to discover the pages on your site that are receiving attention from search engine bots as well as the frequency of these requests.

Monitor site health
Improve your UX
Migrate your site
Support mobile first
Unravel your site architecture
Store historic data
Internationalization
Complete competition Analysis

[email protected] +44 (0) 207 947 9617 +1 929 294 9420 @deepcrawl
Free trial at: https://www.deepcrawl.com/free-trial

Table of Contents
Chapter 1:

Google Search Console

Google Search Console
Google Search Console is a powerful free tool to see how your website performs in Google search results and to help monitor the health of your website.

How does your site perform in Google Search results?
Search Analytics is the main tool in Search Console to see how your site performs in Google search results. Using the various filters on Search Analytics you can see:
- The top search queries people used to find your website
- Which countries your web traffic is coming from
- What devices visitors are using (desktop, mobile, or tablet)
- How many people saw your site in a search results list (measured as impressions)
- How many people clicked on your site from their search results
- Your website’s ranking in a search results list for a given term
For example, the search query used most often to find the SCLS website is ‘South Central Library System.’ When people search ‘South Central Library System’, www.scls.info is the first result in the list. As shown below, this July, 745 people used this search query and 544 people clicked on the search result to bring them to www.scls.info.

Monitoring your website health
Google Search Console also has tools to monitor your website’s health. The Security Issues tab lets you know if there are any signs that your site has been hacked and has information on how to recover. The Crawl Error tool lets you know if Google was unable to navigate parts of your website in order to index it for searching. If Google can’t crawl your website, it often means visitors can’t see it either, so it is a good idea to address any errors you find here.
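The two numbers in the SCLS example can be combined into a click-through rate (clicks divided by impressions). The sketch below is a minimal illustration: the function name `click_through_rate` is hypothetical, and treating the 745 searches as the impression count is an assumption, since the excerpt does not label them that way.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Share of impressions that resulted in a click."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


# Figures from the excerpt's July example for 'South Central Library System'.
# Assumption: each of the 745 searches counts as one impression.
impressions = 745
clicks = 544

ctr = click_through_rate(clicks, impressions)
print(f"CTR: {ctr:.1%}")
```

On this reading, roughly three out of four searches for the query led to a visit.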

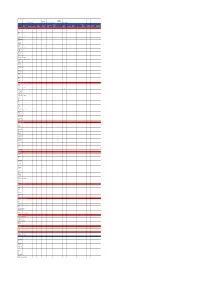

SNSW for Business Assisted Channels & Middle Office App Dev

Assisted SNSW for Channels & Digital Channels Business Personal Transactions Middle Office MyService NSW a/c Transactions Digital App Dev Digital SNSW for My Community & Service Proof Of Auth & Licence Knowledge & IT Service Tech Design & Portal Mobile Apps Website Licence Business Project (Forms) Unify Identity Tell Gov Once Linking API Platform Test Team Delivery Development

Tech Stacks/Language/Tools/Frameworks
Node/5 x; XML x; React x x x x x x; NodeJS x x x; Mongo DB - At x; AngularJS x x; Drupal 7/8 x; Secure Logic PKI x; HTML5 x; Post CSS x; Bootstrap x; Springboot x x x; Kotlin x x x; Style components (react) x; Postgres RDS x; Apigee x; Next.JS (react) x; Graph QL x; Express x x; Form IO x; Mongo DB/ Mongo Atlas x; Mongo DB (form IO) hosted on atlas x; Java x x; Angular 6 x; Angular 5 x; SQL server x; Typescript x x x; Mysql Aurora DB x; Vue JS x x; Bulma CSS x; Javascript ES6 x x x; Note JS x; Oauth x; Objective C x; Swift x; Redux x; ASP.Net x; Docker

Cloud & Infrastructure
Apigee x x x x; Firebase x; PCF (hosting demo App) x; PCF x x x x x x; Google Play Store x x; Apple App Store x; Google SafetyNet x; Google Cloud Console x; Firebase Cloud Messaging x; Lambda x x x; Cognito x; ECS x x; ECR x; ALB x; S3 x x; Jfrog x; Route 53 x; Form IO x; Google cloud (vendor hosted) x; Cloudfront x; Docker x x; Docker/ Kubernetes x; Postgres (RDS) x x; Rabbit MQ (PCF) x; Secure Logic PKI x; AWS Cloud x; Dockerhub (DB snapshots) x

Development Tools
Atom x; Eclipse x x; Postman x x x x x x x x x; VS Code x x x x; Rider (Jetbrain) x; Cloud 9 x; PHP Storm x; Sublime x; Sourcetree x x x; Webstorm x x x; Intelij x