A Platform for Monitoring Search Performance

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Unlocking Trapped Value in Data | Accenture

Unlocking trapped value in data: accelerating business outcomes with artificial intelligence. Actions to bridge the data and AI value gap. Enterprises need a holistic data strategy to harness the massive power of artificial intelligence (AI) in the cloud. "By 2022, 90% of corporate strategies will explicitly cite data as a critical enterprise asset." (Gartner) The reason? Fast-growing pressure on enterprises to be more responsive, customer obsessed and market relevant. These capabilities demand real-time decision-making, augmented with AI/machine learning (ML)-based insights. And that requires end-to-end data visibility, free from complexities in the underlying infrastructure. For enterprises still reliant on legacy systems, this can be particularly challenging. Irrespective of challenges, however, the direction is clear. According to IDC estimates, spend on AI platforms and applications by enterprises in Asia Pacific (excluding Japan and China) is set to increase by a five-year CAGR of 30%, rising from US$753.6 million in 2020 to US$2.2 billion in 2024. The need to invest strategically in AI/ML is higher than ever as CXOs move away from an experimental mindset and focus on breaking down data silos to extract full value from their data assets. These technologies are likely to have a significant impact on enterprises' data strategies, making them increasingly integrated into CXOs' growth objectives for the business. This is supported by research for Accenture's Technology Vision 2021, which shows that the top three technology areas where enterprises are prioritizing investment over the next year are cloud (45%), AI (39%) and IoT (35%).

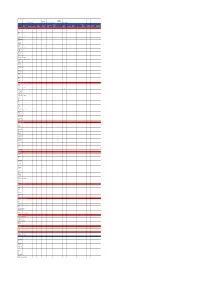

Vermont Wood Works Council

Report for Apr 1, 2021 - Apr 30, 2021 (generated 4/30/2021). Vermont Wood - SEO & Digital Marketing Report. Search Engine Visibility & Competitors. [Chart: number of organic keywords in top 10 by domain (vermontwood.com, vermontfurnituremakers.com, madeinvermontmarketplace.c…, vermontwoodworkingschool.…), May to Apr.] [Chart: organic search engine visibility incl. competitors, May to Apr.] Organic visibility incl. competitors (domain: organic visibility, previous pe…): madeinvermontmarketplace.com: 6.91, +0.47; vermontwood.com: 6.43, -11.60; vermontfurnituremakers.com: 2.50, -10.60; vermontwoodworkingschool.com: 1.56, =; vtfpa.org: 0.13, -12.91; vermontwoodlands.org: 0.09, +89.73. Google Keyword Ranking Distribution: # of keywords tracked: 29; # of keywords in top 3: 0; # of keywords in top 10: 8; # of keywords in top 20: 10. Previous period / previous year (as listed): 0%, -100%, 0%, 14%, 0%, 0%. Google Keyword Rankings (keyword: organic position, position change): vermont wooden toys: 4, =; woodworkers vermont: 4, =. Organic position notes: The "Organic Position" means the item ranking on the Google search result page.

App Search Optimisation Plan and Implementation. Case: Primesmith Oy (Jevelo)

App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo). Thuy Nguyen. Bachelor's Thesis, Degree Programme in International Business, 2016. Abstract, 19 August 2016. Author: Thuy Nguyen. Degree programme: International Business. Thesis title: App search optimisation plan and implementation. Case: Primesmith Oy (Jevelo). Number of pages and appendix pages: 49 + 7. This is a project-based thesis discussing the fundamentals of app search optimisation in modern business. The project plan and implementation were carried out for Jevelo, a B2C handmade jewellery company, within a three-month period, and recommendations were then given for future development. This thesis aims to approach new marketing techniques that play important roles in online marketing tactics. The main goal of this study is to accumulate best practices of app search optimisation and develop logical thinking when performing project elements and analysing results. The theoretical framework introduces an overview of facts and the innovative updates in the internet environment. They include factors of online marketing, mobile marketing and app search optimisation, with the merger of search engine optimisation (SEO) and app store optimisation (ASO) being considered an immediate action for digital marketers nowadays. The project of app search optimisation in chapter 4 reflects the accomplished activities of the SEO implementation from scratch, together with an ASO analysis and suggestions for Jevelo. Other marketing methods like search engine marketing, affiliate marketing and content marketing, together with the customer analysis, are briefly described to support the theoretical aspects. The discussion section visualises the entire thesis process by summing up theoretical knowledge and providing project evaluation as well as a reflection on self-learning.

Seo-101-Guide-V7.Pdf

Copyright 2017 Search Engine Journal. Published by Alpha Brand Media. All Rights Reserved. MAKING BUSINESSES VISIBLE. Consumer tracking information: Combine data from your web analytics. Adding web analytics data to a crawl will provide you with a detailed gap analysis and enable you to find URLs which have generated traffic but aren't linked to, also known as orphans. External link metrics: Uploading backlink data to a crawl will also identify non-indexable, redirecting, disallowed & broken pages being linked to. Do this by uploading backlinks from popular backlink checker tools to track performance of the most linked-to content on your site. Search Analytics: DeepCrawl's Advanced Google Search Console Integration allows you to connect technical site performance insights with organic search information from Google Search Console's Search Analytics report. Crawler requests: Integrate summary data from any log file analyser tool into your crawl. Integrating log file data enables you to discover the pages on your site that are receiving attention from search engine bots as well as the frequency of these requests. [Diagram labels: analytics data, backlink data, web crawl data, organic search data, log file data.] Monitor site health. Improve your UX. Migrate your site. Support mobile first. Unravel your site architecture. Store historic data. Internationalization. Complete competition analysis. [email protected] +44 (0) 207 947 9617 +1 929 294 9420 @deepcrawl Free trial at: https://www.deepcrawl.com/free-trial Table of Contents 9 Chapter 1: 20

Google Search Console

Google Search Console. Google Search Console is a powerful free tool to see how your website performs in Google search results and to help monitor the health of your website. How does your site perform in Google Search results? Search Analytics is the main tool in Search Console to see how your site performs in Google search results. Using the various filters on Search Analytics you can see: the top search queries people used to find your website; which countries your web traffic is coming from; what devices visitors are using (desktop, mobile, or tablet); how many people saw your site in a search results list (measured as impressions); how many people clicked on your site from their search results; and your website's ranking in a search results list for a given term. For example, the search query used most often to find the SCLS website is 'South Central Library System.' When people search 'South Central Library System', www.scls.info is the first result in the list. As shown below, this July, 745 people used this search query and 544 people clicked on the search result to bring them to www.scls.info. Monitoring your website health: Google Search Console also has tools to monitor your website's health. The Security Issues tab lets you know if there are any signs that your site has been hacked and has information on how to recover. The Crawl Error tool lets you know if Google was unable to navigate parts of your website in order to index it for searching. If Google can't crawl your website, it often means visitors can't see it either, so it is a good idea to address any errors you find here.
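The July figures quoted above can be turned into a click-through rate (CTR), the share of searches that led to a click. The sketch below is only an illustration: it assumes the 745 searches can be treated as impressions, and the function name `click_through_rate` is made up for this example rather than taken from any Google tool.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return clicks divided by impressions as a fraction between 0 and 1."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


# Figures from the SCLS example: 745 searches (assumed to be impressions), 544 clicks.
ctr = click_through_rate(clicks=544, impressions=745)
print(f"CTR: {ctr:.1%}")  # prints "CTR: 73.0%"
```

A CTR this high is typical of a branded query, where the searcher is already looking for the site by name.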

SNSW for Business Assisted Channels & Middle Office App Dev

Assisted SNSW for Channels & Digital Channels Business Personal Transactions Middle Office MyService NSW a/c Transactions Digital App Dev Digital SNSW for My Community & Service Proof Of Auth & Licence Knowledge & IT Service Tech Design & Portal Mobile Apps Website Licence Business Project (Forms) Unify Identity Tell Gov Once Linking API Platform Test Team Delivery Development Tech Stacks/Language/Tools/Frameworks Node/5 x XML x React x x x x x x NodeJS x x x Mongo DB - At x AngularJS x x Drupal 7/8 x Secure Logic PKI x HTML5 x Post CSS x Bootstrap x Springboot x x x Kotlin x x x Style components (react) x Postgres RDS x Apigee x Next.JS (react) x Graph QL x Express x x Form IO x Mongo DB/ Mongo Atlas x Mongo DB (form IO) hosted on atlas x Java x x Angular 6 x Angular 5 x SQL server x Typescript x x x Mysql Aurora DB x Vue JS x x Bulma CSS x Javascript ES6 x x x Note JS x Oauth x Objective C x Swift x Redux x ASP.Net x Docker Cloud & Infrastructure Apigee x x x x Firebase x PCF (hosting demo App) x PCF x x x x x x Google Play Store x x Apple App Store x Google SafetyNet x Google Cloud Console x Firebase Cloud Messaging x Lambda x x x Cognito x ECS x x ECR x ALB x S3 x x Jfrog x Route 53 x Form IO x Google cloud (vendor hosted) x Cloudfront x Docker x x Docker/ Kubernetes x Postgres (RDS) x x Rabbit MQ (PCF) x Secure Logic PKI x AWS Cloud x Dockerhub (DB snapshots) x Development Tools Atom x Eclipse x x Postman x x x x x x x x x VS Code x x x x Rider (Jetbrain) x Cloud 9 x PHP Storm x Sublime x Sourcetree x x x Webstorm x x x Intelij x -

How Can I Get Deep App Screens Indexed for Google Search?

HOW CAN I GET DEEP APP SCREENS INDEXED FOR GOOGLE SEARCH? Setting up app indexing for Android and iOS apps is pretty straightforward and well documented by Google. Conceptually, it is a three-part process: 1. Enable your app to handle deep links. 2. Add code to your corresponding Web pages that references deep links. 3. Optimize for private indexing. These steps can be taken out of order if the app is still in development, but the second step is crucial. Without it, your app will be set up with deep links but not set up for Google indexing, so the deep links will not show up in Google Search. NOTE: iOS app indexing is still in limited release with Google, so there is a special form submission and approval process even after you have added all the technical elements to your iOS app. That being said, the technical implementations take some time; by the time your company has finished, Google may have opened up indexing to all iOS apps, and this cumbersome approval process may be a thing of the past. Steps For Google Deep Link Indexing Step 1: Add Code To Your App That Establishes The Deep Links A. Pick A URL Scheme To Use When Referencing Deep Screens In Your App App URL schemes are simply a systematic way to reference the deep linked screens within an app, much like a Web URL references a specific page on a website. In iOS, developers are currently limited to using Custom URL Schemes, which are formatted in a way that is more natural for app design but different from Web. -
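To make the idea of an app URL scheme concrete, here is a small sketch that splits a custom-scheme deep link into its parts using Python's standard `urllib.parse`. The scheme `exampleapp://`, the screen path and the `parse_deep_link` helper are all hypothetical; a real app defines its own scheme and routing.

```python
from urllib.parse import parse_qs, urlsplit


def parse_deep_link(url: str) -> dict:
    """Split a custom-scheme deep link into scheme, screen path and parameters."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        # For "scheme://a/b", urlsplit puts "a" in netloc and "/b" in path.
        "screen": (parts.netloc + parts.path).strip("/"),
        "params": parse_qs(parts.query),
    }


# Hypothetical deep link to a "rings" screen inside a jewellery app.
link = parse_deep_link("exampleapp://products/rings?ref=search")
print(link["scheme"], link["screen"], link["params"])
```

The structure mirrors a Web URL, which is why a deep link can be paired with the corresponding Web page in step 2.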

Dataset Search: a Lightweight, Community-Built Tool to Support Research Data Discovery

Journal of eScience Librarianship, Volume 10, Issue 1, Special Issue: Research Data and Preservation (RDAP) Summit 2020, Article 3. 2021-01-19. Dataset Search: A lightweight, community-built tool to support research data discovery. Sara Mannheimer, Montana State University, et al. Let us know how access to this document benefits you. Follow this and additional works at: https://escholarship.umassmed.edu/jeslib Part of the Scholarly Communication Commons. Repository Citation: Mannheimer S, Clark JA, Hagerman K, Schultz J, Espeland J. Dataset Search: A lightweight, community-built tool to support research data discovery. Journal of eScience Librarianship 2021;10(1): e1189. https://doi.org/10.7191/jeslib.2021.1189. Retrieved from https://escholarship.umassmed.edu/jeslib/vol10/iss1/3 Creative Commons License: This work is licensed under a Creative Commons Attribution 4.0 License. This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in Journal of eScience Librarianship by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected]. ISSN 2161-3974. JeSLIB 2021; 10(1): e1189. Full-Length Paper. Sara Mannheimer, Jason A. Clark, Kyle Hagerman, Jakob Schultz, and James Espeland, Montana State University, Bozeman, MT, USA. Abstract. Objective: Promoting discovery of research data helps archived data realize its potential to advance knowledge. Montana

Jsitemap Professional Functionalities

JSitemap Professional, version 4.8: Functionalities. JSitemap Pro is a powerful component to manage sitemap and RSS contents. It embeds a system to dynamically add whatever type of content you need to your sitemap and XML feeds without using plugins. Once the component is installed, you will be able to manage virtually any content you want to add that is held in the Joomla! database and is related to a particular installed extension. This is made possible through 'Data Sources', which represent the sources of data that will be displayed in the sitemap tree. Data sources rely on the SQL Query Compiler, which lets you extract every kind of data from the Joomla! database through a user-friendly interface and format it accordingly to generate sitemap links. JSitemap Pro main functionalities include: • Automated wizard to create data sources with one click for common extensions • SEO stats, able to calculate Google and Alexa ranks, number of indexed pages, etc. • Dynamic sitemap data sources without the need for plugins • Google Indexing Tester, which allows you to monitor how your site is being indexed in Google SERP • Search Engines Metainfo Dashboard to instruct search engines and social networks about the title, description and image to show • SEO Dashboard to manage common tasks easily • Automatic ping of search engines to force crawling and increase visits • Automatic management of robots.txt to add sitemap entries • Integrated and assisted editor for robots.txt • Integrated and assisted editor

Make Your Website Work for You

HOW WILL YOU MEASURE SUCCESS? Think about your existing or future website. What do you want this site to accomplish? Be specific. Be realistic. How can you track and measure success? ● Track online sales ● Collect form submissions ● Monitor phone calls ● Measure engagement. GOOGLE ANALYTICS: Use Google Analytics to learn how people engage with your website. Learn which search terms and sources drive traffic. See demographic information for your profitable customers. Understand what content your audience is interested in. Evaluate which channels drive the most conversions. Quick Tip: Set up Google Analytics at g.co/analytics. SET UP GOOGLE ANALYTICS: ● Go to g.co/analytics ● Click Start for free ● Set up a website property ● Follow instructions, add tracking code to your site. GOOGLE ANALYTICS: Who is your target audience? Demographic reports. Quick Tip: A new version of Google Analytics launched on 10/14/2020. This screenshot features an older report. A Great Website Is Organized. START WITH A PLAN: What is "Information Architecture," or IA? It's a way to organize information on your site to achieve business goals. Example, a jewelry store: Homepage; About, Products, Contact; under Products: Rings, Watches, Necklaces. TIPS FOR ORGANIZING YOUR WEBSITE: ● Use short, descriptive names and labels. ● Keep the most important info in main navigation. ● Keep site visitors in mind. Where and how would they look for info? A Great Website Is Useful. 46% of people say they would not purchase from a brand again if they had an interruptive mobile experience. Source: Google/Purchased, U.S., "How Brand Experiences Inspire Consumer Action," n=2,010 U.S.

What Is Search Engine Optimization (SEO)?

claninmarketing.com/workshops. Services: Public Relations; Social Media Development + Management; Search Engine Optimization; Graphic Design; Logo + Branding Design; Website Design + Management; Strategic Planning; Marketing Audits; Consulting. Scott Clanin, Owner & President. Agenda: What is SEO and why is it important? How Google ranks content. How search algorithms work. Ways to improve SEO. Simple checklist. My site isn't showing up. Why? • The site isn't well connected from other sites on the web • You've just launched a new site and Google hasn't had time to crawl it yet • The design of the site makes it difficult for Google to crawl its content effectively • Google received an error when trying to crawl your site. What is search engine optimization (SEO)? SEO is the practice of increasing the quantity and quality of traffic to your website through organic search engine results. Why is SEO important? Users are searching for what you have to offer. High SEO rankings will increase traffic to your website! Source: Google. Googlebot: Search bot software that Google sends out to collect information about documents on the web to add to Google's searchable index. Crawling: The process where the Googlebot goes from website to website, finding new and updated information to report back to Google. The Googlebot finds what to crawl using links. Indexing: The processing of the information gathered by the Googlebot from its crawling activities. Once documents are processed, they are added to Google's searchable index if they are determined to be quality content. Serving (and ranking): When a searcher types a query, Google tries to find the most relevant answer from its index based on many factors.

Advanced SEO Audit: Strategies and Methods for Optimizing Websites for Search Engines (SEO zaawansowany audyt) / Marco Maltraversi, Davide Prevosto, Giovanni Sacheli

Advanced SEO audit: strategies and methods for optimizing websites for search engines / Marco Maltraversi, Davide Prevosto, Giovanni Sacheli. 1st edition. Warsaw, 2021. Table of contents. Foreword (Craig Campbell): What an SEO audit means to me; Which tools I use; Where I start the analysis; Foundations; Internal links; Site speed; Additional checks; Keyword analysis; Competitor analysis; Backlink analysis; An audit is a continuous process. Introduction: What you will not find in this book; What you will find in this book; Authors; Acknowledgements. Chapter 1 - Optimizations that make a difference: SEO audit: what is it about?; When should an SEO audit be carried out?; What results should we expect?; The evolution of SEO; What is SEO?; How is SEO done?; Which off-page operation is most effective at improving a site's ranking?; The nonsense people do and do not talk about SEO; How does a search engine work? How does Google work?; Spam, algorithms and filters: the search engine is no joking matter; The human side of Google; Expertise, credibility and trust; Your money, your life. Chapter 2 - Technical SEO: preliminary operations: How to create an SEO audit (Gaetano Romero); Introduction; Tools to use; Identifying opportunities for internal and external link building; Internal link building; Identifying possible improvements to the information structure; Identifying "thin content"; Making sure meta tags are optimized; Looking for errors on the site; From HTTP to HTTPS - enable