“Computer Programming IV” as Capstone Design and Laboratory Attachment
Shoichi Yokoyama, Yamagata University, Yonezawa, Japan

Recommended publications

MySQL NDB Cluster 7.5.16 (and Later)

Licensing Information User Manual: MySQL NDB Cluster 7.5.16 (and later)

Table of Contents
Licensing Information
Licenses for Third-Party Components
  ANTLR 3
  argparse
  AWS SDK for C++
  Boost Library
  Corosync
  Cyrus SASL
  dtoa.c
  Editline Library (libedit)
  Facebook Fast Checksum Patch
  ...

KoNLPy Documentation, Release 0.4.1

KoNLPy Documentation, Release 0.4.1
Lucy Park
February 25, 2015

Contents
1 Standing on the shoulders of giants
2 License
3 Contribute
4 Getting started
  4.1 What is NLP?
  4.2 What do I need to get started?
5 User guide
  5.1 Installation
  5.2 Morphological analysis and POS tagging
  5.3 Data
  5.4 Examples
  5.5 Running tests
  5.6 References
6 API
  6.1 konlpy Package
7 Indices and tables
Python Module Index

KoNLPy (pronounced “ko en el PIE”) is a Python package for natural language processing (NLP) of the Korean language. For installation directions, see Installation (section 5.1). For users new to NLP, go to Getting started. For step-by-step instructions, follow the User guide. For specific descriptions of each module, see the API documents.

>>> from konlpy.tag import Kkma
>>> from konlpy.utils import pprint
>>> kkma = Kkma()
>>> pprint(kkma.sentences(u'네, 안녕하세요. 반갑습니다.'))
[네, 안녕하세요.., 반갑습니다.]
>>> pprint(kkma.nouns(u'질문이나 건의사항은 깃헙 이슈 트래커에 남겨주세요.'))
[질문, 건의, 건의사항, 사항, 깃헙, 이슈, 트래커]
>>> pprint(kkma.pos(u'오류보고는 실행환경, 에러메세지와함께 설명을 최대한상세히!^^'))
[(오류, NNG), (보고, NNG), (는, JX), (실행, NNG), (환경, NNG), (,, SP), (에러, NNG), (메세지, NNG), (와, JKM), (함께, MAG), (설명, NNG), (을, JKO), (최대한, NNG), (상세히, MAG), (!, SF), (^^, EMO)]

CHAPTER 1
Standing on the shoulders of giants

Korean, the 13th most widely spoken language in the world (http://www.koreatimes.co.kr/www/news/nation/2014/05/116_157214.html), is a beautiful, yet complex language.

Learning to Generate Pseudo-Code from Source Code Using Statistical Machine Translation

Learning to Generate Pseudo-code from Source Code using Statistical Machine Translation

Yusuke Oda, Hiroyuki Fudaba, Graham Neubig, Hideaki Hata, Sakriani Sakti, Tomoki Toda, and Satoshi Nakamura
Graduate School of Information Science, Nara Institute of Science and Technology, 8916-5 Takayama, Ikoma, Nara 630-0192, Japan

Abstract: Pseudo-code written in natural language can aid the comprehension of source code in unfamiliar programming languages. However, the great majority of source code has no corresponding pseudo-code, because pseudo-code is redundant and laborious to create. If pseudo-code could be generated automatically and instantly from given source code, we could allow for on-demand production of pseudo-code without human effort. In this paper, we propose a method to automatically generate pseudo-code from source code, specifically adopting the statistical machine translation (SMT) framework. SMT, which was originally designed to translate between two natural languages, allows us to automatically learn the relationship between source code/pseudo-code pairs, making it possible to create a ...

... comprehension of beginners because it explicitly describes what the program is doing, but is more readable than an unfamiliar programming language.

Fig. 1 shows an example of Python source code, and English pseudo-code that describes each corresponding statement in the source code. If the reader is a beginner at Python (or a beginner at programming itself), the left side of Fig. 1 may be difficult to understand. On the other hand, the right side of the figure can be easily understood by most English speakers, and we can also learn how to write specific operations in Python (e.g. ...

MySQL Installation Guide

MySQL Installation Guide

Abstract
This is the MySQL Installation Guide from the MySQL 5.7 Reference Manual. For legal information, see the Legal Notices. For help with using MySQL, please visit the MySQL Forums, where you can discuss your issues with other MySQL users.

Document generated on: 2021-10-06 (revision: 70984)

Table of Contents
Preface and Legal Notices
1 Installing and Upgrading MySQL
2 General Installation Guidance
  2.1 Supported Platforms
  2.2 Which MySQL Version and Distribution to Install
  2.3 How to Get MySQL
  2.4 Verifying Package Integrity Using MD5 Checksums or GnuPG
    2.4.1 Verifying the MD5 Checksum
    2.4.2 Signature Checking Using GnuPG
    2.4.3 Signature Checking Using Gpg4win for Windows
    2.4.4 ...

Object-Oriented Virtual Sample Library: a Container of Multi-Dimensional Data for Acquisition, Visualization and Sharing

Object-oriented virtual sample library: a container of multi-dimensional data for acquisition, visualization and sharing

Shin-ichi Todoroki
Advanced Materials Laboratory, National Institute for Materials Science, Namiki 1-1, Tsukuba, Ibaraki 305-0044, JAPAN

Abstract. Combinatorial methods bring about enormous data not only in size but also in dimension. To handle multi-dimensional data easily, a concept of virtual container for combinatorially acquired data is demonstrated which is called “virtual sample library” (VSL). VSL stores the data hierarchically in the order of (1) coordinates in the sample library, (2) names of the measurements performed, and (3) data obtained from each measurement. Thus, the stored data are accessed intuitively just by tracing this tree-like structure and are provided for visualization and sharing with others. This framework is constructed by the aid of an object-oriented scripting language which is good at abstracting complicated data structure. In this paper, after summarizing the problems of handling data acquired from combinatorially integrated samples and availabilities of software tools to solve them, the concept of VSL is proposed and its structure and functions are demonstrated on the basis of one specific experimental data. Its extensibility as a platform for numerical simulation is also discussed.

PACS numbers: 07.05.Kf, 07.05.Rm

Received 12 April 2004, in final form 26 July 2004. Published 16 December 2004.
Meas. Sci. Technol., 16, 1, pp. 285-291 (2005). [© 2005 IOP Publishing Ltd]
http://dx.doi.org/10.1088/0957-0233/16/1/037
http://www.geocities.com/Tokyo/1406/node2.html#Todoroki05MST285

1. Introduction
We can enjoy the benefit of combinatorial technology only after we can afford to analyze the large amount of data obtained.
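The three-level hierarchy described in this abstract (coordinates, then measurement names, then data) can be pictured as nested mappings. The following is only a minimal sketch in Python: the abstract does not name the scripting language used, and the class name `VirtualSampleLibrary`, its methods, and the sample values are all hypothetical and are not taken from the paper.

```python
from collections import defaultdict


class VirtualSampleLibrary:
    """Hypothetical container: coordinates -> measurement name -> data."""

    def __init__(self):
        # Level 1 keys are coordinates; level 2 keys are measurement names.
        self._tree = defaultdict(dict)

    def store(self, coords, measurement, data):
        """Attach the data of one measurement to one position in the library."""
        self._tree[tuple(coords)][measurement] = data

    def fetch(self, coords, measurement):
        """Trace the tree: coordinates first, then measurement name."""
        return self._tree[tuple(coords)][measurement]

    def measurements_at(self, coords):
        """List the measurement names recorded at one position."""
        return sorted(self._tree[tuple(coords)])


vsl = VirtualSampleLibrary()
# Made-up values, purely for illustration.
vsl.store((0, 1), "absorption", [0.12, 0.15, 0.11])
vsl.store((0, 1), "thickness_um", 2.5)
print(vsl.measurements_at((0, 1)))
print(vsl.fetch((0, 1), "absorption"))
```

Keying the outer level by position keeps every measurement for one sample together, which is the access pattern the abstract describes as "tracing this tree-like structure".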

ML-Ask: Open Source Affect Analysis Software for Textual Input in Japanese

Ptaszynski, M et al 2017 ML-Ask: Open Source Affect Analysis Software for Textual Input in Japanese. Journal of Open Research Software, 5: 16, DOI: https://doi.org/10.5334/jors.149

SOFTWARE METAPAPER

ML-Ask: Open Source Affect Analysis Software for Textual Input in Japanese

Michal Ptaszynski (1,2), Pawel Dybala (3,4), Rafal Rzepka (5,6), Kenji Araki (5,6) and Fumito Masui (2,7)

1 Software Development, Open Source Version Development, JP
2 Department of Computer Science, Kitami Institute of Technology, Kitami, JP
3 Software Development, PL
4 Institute of Middle and Far Eastern Studies, Faculty of International and Political Studies, Jagiellonian University, Kraków, PL
5 Software Development Supervision, JP
6 Language Media Laboratory, Graduate School of Information Science and Technology, Hokkaido University, Sapporo, JP
7 Open Source Version Development Supervision, JP

Corresponding author: Michal Ptaszynski

We present ML-Ask, the first Open Source Affect Analysis system for textual input in Japanese. ML-Ask analyses the contents of an input (e.g., a sentence) and annotates it with information regarding the contained general emotive expressions, specific emotional words, valence-activation dimensions of overall expressed affect, and particular emotion types expressed with their respective expressions. ML-Ask also incorporates the Contextual Valence Shifters model for handling negation in sentences to deal with grammatically expressible shifts in the conveyed valence. The system, designed to work mainly under Linux and MacOS, can be used for research on, or applying the techniques of, Affect Analysis within the framework of the Japanese language. It can also be used as an experimental baseline for specific research in Affect Analysis, and as a practical tool for written contents annotation.

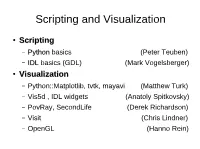

Scripting and Visualization

Scripting and Visualization
● Scripting
  – Python basics (Peter Teuben)
  – IDL basics (GDL) (Mark Vogelsberger)
● Visualization
  – Python::Matplotlib, tvtk, mayavi (Matthew Turk)
  – Vis5d, IDL widgets (Anatoly Spitkovsky)
  – PovRay, SecondLife (Derek Richardson)
  – Visit (Chris Lindner)
  – OpenGL (Hanno Rein)

S&V questions
● S: Is speed important?
● V: Specific for one type of data (point vs. grid, 1-2-3-dim?)
● V: Can it be driven by external programs (ds9, paraview)
● V: Can it be scripted? (partiview)
● V: Can it make animations? (glnemo2)
● V: Installation dependencies? Hard to install?

Scripting (leaving out bash/tcsh)
● Scheme ((((((= a 1))))))
● Perl $^_a!
● Tcl/Tk [set x [foo [bar]]]
● Python a.trim().split(':')[5]
● Ruby @a = (1,2,3)
● Cint struct B { float x[3], v[3];}
● IDL wh = where(r(delm) EQ 0, ct)
● Matlab t=r(:,1)+r(:,2)/60+r(:,3)/3600;

PYTHON http://www.python.org
What's all the hype about?
● Created around 1990 at CWI, Amsterdam, by Guido van Rossum
● Open Source, in C, portable Linux/Mac/Win/...
● Interpreted and dynamic scripting language
● Extensible and Object Oriented
  – Modules in python itself
  – Modules to any language (C, Fortran, ...)
● Many libraries have interfaces: gsl, fitsio, hdf5, pgplot, ...
● SciPy environment (numerical, plotting)

The Language http://docs.python.org/tutorial
● Types:
  – Scalars: X=1 X="1 2 3"
  – Lists: X=[1,2,3] X=range(1,4)
  – Tuples: X=(1,2,3,'-1','-2','-3') vx=float(X[3])
  – Dictionary: X={"nbody":10, "mode":"euler", "eps":0.05} X["eps"]
● Lots of builtin functions (and modules)
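The type list at the end of these slides can be tried directly in an interpreter. The sketch below restates it in Python 3, where `range(1, 4)` has to be wrapped in `list()` to get a list (the slides appear to use the Python 2 form); the variable names are chosen here for readability and are not from the slides.

```python
# Scalars: a number and a string
count = 1
text = "1 2 3"

# Lists are mutable sequences; range(1, 4) yields 1, 2, 3
values = list(range(1, 4))

# Tuples are immutable and may mix types
record = (1, 2, 3, '-1', '-2', '-3')
vx = float(record[3])  # converts the string '-1' to a float

# Dictionaries map keys to values
params = {"nbody": 10, "mode": "euler", "eps": 0.05}

print(values, vx, params["eps"])
```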

The Graphics Cookbook SC/15.2

SC/15.2
Starlink Project
STARLINK Cookbook 15.2
A. Allan, D. Terrett
22nd August 2000

The Graphics Cookbook

Abstract
This cookbook is a collection of introductory material covering a wide range of topics dealing with data display, format conversion and presentation. Along with this material are pointers to more advanced documents dealing with the various packages, and hints and tips about how to deal with commonly occurring graphics problems.

Contents
1 Introduction
2 Call for contributions
3 Subroutine Libraries
  3.1 The PGPLOT library
    3.1.1 Encapsulated Postscript and PGPLOT
    3.1.2 PGPLOT Environment Variables
    3.1.3 PGPLOT Postscript Environment Variables
    3.1.4 Special characters inside PGPLOT text strings
  3.2 The BUTTON library
  3.3 The pgperl package
    3.3.1 Argument mapping – simple numbers and arrays
    3.3.2 Argument mapping – images and 2D arrays
    3.3.3 Argument mapping – function names
    3.3.4 Argument mapping – general handling of binary data
  3.4 Python PGPLOT
  3.5 GLISH PGPLOT
  3.6 ptcl Tk/Tcl and PGPLOT
  3.7 Starlink/Native PGPLOT
  3.8 Graphical Kernel System (GKS)
    3.8.1 Enquiring about the display
    3.8.2 Compiling and Linking GKS programs
  3.9 Simple Graphics System (SGS)
  3.10 PLplot Library
    3.10.1 PLplot and 3D Surface Plots
  3.11 The libjpeg Library
  3.12 The giflib Library
  ...

KoNLPy Documentation, Release 0.5.1

KoNLPy Documentation, Release 0.5.1
Lucy Park
Aug 03, 2018

Contents
1 Standing on the shoulders of giants
2 License
3 Contribute
4 Getting started
  4.1 What is NLP?
  4.2 What do I need to get started?
5 User guide
  5.1 Installation
  5.2 Morphological analysis and POS tagging
  5.3 Data
  5.4 Examples
  5.5 Running tests
  5.6 References
6 API
  6.1 konlpy Package
7 Indices and tables
Python Module Index

KoNLPy (pronounced “ko en el PIE”) is a Python package for natural language processing (NLP) of the Korean language. For installation directions, see Installation (section 5.1). For users new to NLP, go to Getting started. For step-by-step instructions, follow the User guide. For specific descriptions of each module, see the API documents.

>>> from konlpy.tag import Kkma
>>> from konlpy.utils import pprint
>>> kkma = Kkma()
>>> pprint(kkma.sentences(u'네, 안녕하세요. 반갑습니다.'))
[네, 안녕하세요.., 반갑습니다.]
>>> pprint(kkma.nouns(u'질문이나 건의사항은 깃헙 이슈 트래커에 남겨주세요.'))
[질문, 건의, 건의사항, 사항, 깃헙, 이슈, 트래커]
>>> pprint(kkma.pos(u'오류보고는 실행환경, 에러메세지와함께 설명을 최대한상세히!^^'))
[(오류, NNG), (보고, NNG), (는, JX), (실행, NNG), (환경, NNG), (,, SP), (에러, NNG), (메세지, NNG), (와, JKM), (함께, MAG), (설명, NNG), (을, JKO), (최대한, NNG), (상세히, MAG), (!, SF), (^^, EMO)]

CHAPTER 1
Standing on the shoulders of giants

Korean, the 13th most widely spoken language in the world (http://www.koreatimes.co.kr/www/news/nation/2014/05/116_157214.html), is a beautiful, yet complex language.

KoNLPy (Pronounced “Ko En El PIE”) Is a Python Package for Natural Language Processing (NLP) of the Korean Language

KoNLPy Documentation, Release 0.5.2
Lucy Park
Dec 03, 2019

Contents
1 Standing on the shoulders of giants
2 License
3 Contribute
4 Getting started
  4.1 What is NLP?
  4.2 What do I need to get started?
5 User guide
  5.1 Installation
  5.2 Morphological analysis and POS tagging
  5.3 Data
  5.4 Examples
  5.5 Running tests
  5.6 References
6 API
  6.1 konlpy Package
7 Indices and tables
Python Module Index
Index

KoNLPy (pronounced “ko en el PIE”) is a Python package for natural language processing (NLP) of the Korean language. For installation directions, see Installation (section 5.1). For users new to NLP, go to Getting started. For step-by-step instructions, follow the User guide. For specific descriptions of each module, see the API documents.

>>> from konlpy.tag import Kkma
>>> from konlpy.utils import pprint
>>> kkma = Kkma()
>>> pprint(kkma.sentences(u'네, 안녕하세요. 반갑습니다.'))
[네, 안녕하세요.., 반갑습니다.]
>>> pprint(kkma.nouns(u'질문이나 건의사항은 깃헙 이슈 트래커에 남겨주세요.'))
[질문, 건의, 건의사항, 사항, 깃헙, 이슈, 트래커]
>>> pprint(kkma.pos(u'오류보고는 실행환경, 에러메세지와함께 설명을 최대한상세히!^^'))
[(오류, NNG), (보고, NNG), (는, JX), (실행, NNG), (환경, NNG), (,, SP), (에러, NNG), (메세지, NNG), (와, JKM), (함께, MAG), (설명, NNG), (을, JKO), (최대한, NNG), (상세히, MAG), (!, SF), (^^, EMO)]

CHAPTER 1
Standing on the shoulders of giants

Korean, the 13th most widely spoken language in the world (http://www.koreatimes.co.kr/www/news/nation/2014/05/116_157214.html), is a beautiful, yet complex language.

Comparison of Korean Preprocessing Performance According to Tokenizer in NMT Transformer Model

Journal of Advances in Information Technology Vol. 11, No. 4, November 2020

Comparison of Korean Preprocessing Performance according to Tokenizer in NMT Transformer Model

Geumcheol Kim and Sang-Hong Lee
Department of Computer Science & Engineering, Anyang University, Anyang-si, Republic of Korea

Abstract: Mechanical translation using neural networks in natural language processing is making rapid progress. With the development of natural language processing models and tokenizers, accurate translation is becoming possible. In this paper, we create a transformer model, which has recently shown high performance, and compare English-Korean translation performance according to the tokenizer. We made a traditional neural network-based Neural Machine Translation (NMT) model using a transformer and compared the Korean translation results according to the tokenizer. The Byte Pair Encoding (BPE)-based tokenizer showed a small vocabulary size and a fast learning speed, but due to the nature of Korean, the translation result was not good. The morphological analysis-based tokenizer showed that the parallel corpus data is large and the ...

... greatly depending on the characteristics of each language. Recently, two kinds of tokenizer have been in use: tokenizers based on morphological analysis, which identify grammatical structures such as root, prefix, and verb, and tokenizers based on Byte Pair Encoding (BPE), a data compression technique that can reduce the Out of Vocabulary (OOV) problem of words that did not appear during learning.

Because the performance of these tokenizers varies from language to language, it seems necessary to find a tokenizer that fits the Korean language. This paper aims to introduce the Transformer model and create an English-Korean NMT model to enhance the performance of Korean translation by comparing the performance according to tokenizer.
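To make the BPE idea mentioned in this excerpt concrete, the sketch below follows the commonly described procedure for learning subword merges: repeatedly count adjacent symbol pairs and merge the most frequent one. It is an illustration only and is not the paper's implementation; the function names and the toy English word counts are assumptions made up for this example.

```python
from collections import Counter


def pair_counts(words):
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            counts[(left, right)] += freq
    return counts


def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a word-frequency dict."""
    # Start from single characters as the initial symbols.
    words = {tuple(word): freq for word, freq in corpus.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)
        words = merge_pair(words, best)
        merges.append(best)
    return merges, words


# Hypothetical toy corpus: word -> frequency.
toy_corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, segmented = learn_bpe(toy_corpus, num_merges=3)
print(merges)
print(segmented)
```

Because rare or unseen words can still be split into learned subword units, the vocabulary stays small, which is the property the excerpt credits with reducing out-of-vocabulary problems.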

Documentation of the PLplot Plotting Software

Documentation of the PLplot plotting software

Copyright © 1994 Maurice J. LeBrun, Geoffrey Furnish
Copyright © 2000-2005 Rafael Laboissière
Copyright © 2000-2016 Alan W. Irwin
Copyright © 2001-2003 Joao Cardoso
Copyright © 2004 Andrew Roach
Copyright © 2004-2013 Andrew Ross
Copyright © 2004-2016 Arjen Markus
Copyright © 2005 Thomas J. Duck
Copyright © 2005-2010 Hazen Babcock
Copyright © 2008 Werner Smekal
Copyright © 2008-2016 Jerry Bauck
Copyright © 2009-2014 Hezekiah M. Carty
Copyright © 2014-2015 Phil Rosenberg
Copyright © 2015 Jim Dishaw

Redistribution and use in source (XML DocBook) and “compiled” forms (HTML, PDF, PostScript, DVI, TeXinfo and so forth) with or without modification, are permitted provided that the following conditions are met:

1. Redistributions of source code (XML DocBook) must retain the above copyright notice, this list of conditions and the following disclaimer as the first lines of this file unmodified.
2. Redistributions in compiled form (transformed to other DTDs, converted to HTML, PDF, PostScript, and other formats) must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

Important: THIS DOCUMENTATION IS PROVIDED BY THE PLPLOT PROJECT “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE PLPLOT PROJECT BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.